There is a stark, often painful contrast between the development environment and the harsh reality of production. In a local setup, you have the luxury of breakpoints, hot-reloading, and complete control over the state. However, when code hits production, the rules change entirely. “It works on my machine” becomes a liability rather than a defense. Production debugging is the high-stakes discipline of identifying, diagnosing, and resolving issues in a live environment without disrupting the user experience or compromising data integrity.

As modern architectures shift toward microservices, serverless functions, and AI-driven agents, software debugging has become considerably harder. We are no longer just looking for syntax errors; we are hunting down race conditions in Node.js services, memory leaks in Python processes, and hallucinated responses in generative AI integrations. Treating debugging as “prayer” is not enough. Engineering teams need a rigorous testing layer and a culture of observability to survive.

This guide explores the methodologies, tools, and best practices required for effective production debugging. We will move beyond basic console logs and dive into structured logging, distributed tracing, remote profiling, and automated testing strategies that act as a safety net for complex applications.

Section 1: The Foundation of Observability

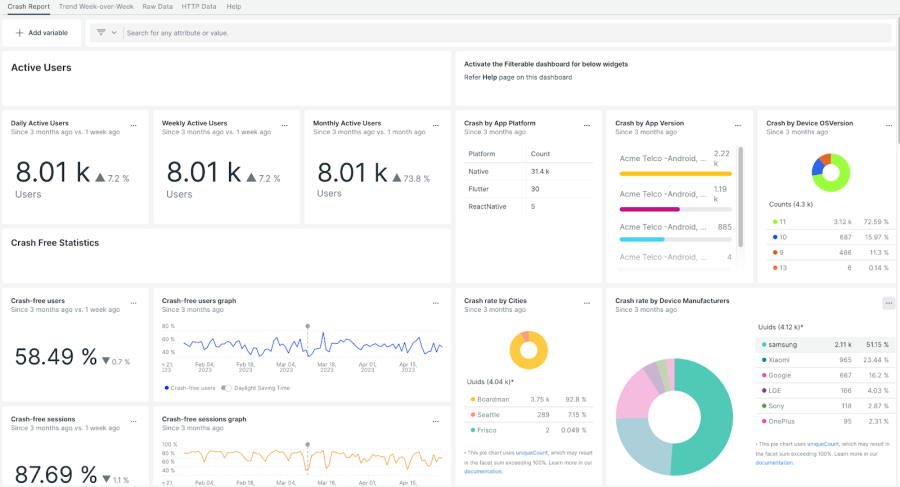

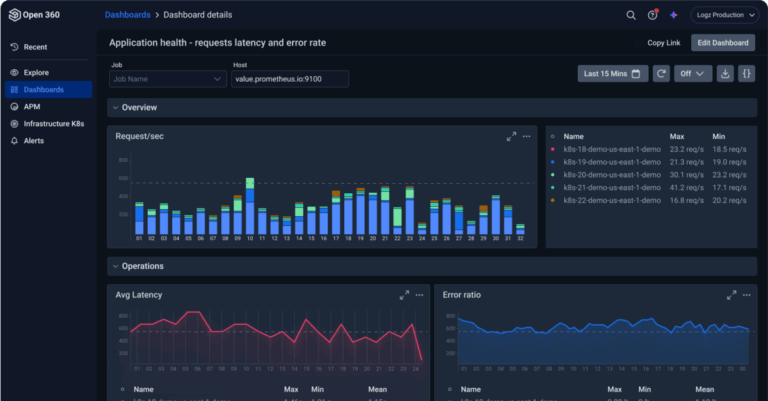

The first rule of production debugging is that you cannot fix what you cannot see. In a production environment, you cannot simply attach a debugger to a process handling thousands of requests. Instead, you rely on telemetry data. Observability rests on three pillars: logs, metrics, and traces.

Structured Logging Over Text

One of the most common pitfalls in backend debugging is relying on unstructured text logs. A log message like “Error in user processing” is useless when you are searching millions of lines. To enable effective log analysis, implement structured logging (usually in JSON format). This allows log aggregation tools (like the ELK Stack, Datadog, or Splunk) to index fields, making it possible to filter by user ID, error code, or latency.

Below is an example of structured logging in a Python application using the structlog library. Context is bound to the logger once and then attached to every subsequent log line, which is vital when debugging Python services.

    import logging
    import uuid

    import structlog

    # Route structlog output through the standard library at INFO level
    logging.basicConfig(format="%(message)s", level=logging.INFO)

    # Configure structured logging
    structlog.configure(
        processors=[
            structlog.processors.add_log_level,
            structlog.processors.TimeStamper(fmt="iso"),
            # Turn exc_info=True into an "exception" field holding the traceback
            structlog.processors.format_exc_info,
            structlog.processors.JSONRenderer(),
        ],
        context_class=dict,
        logger_factory=structlog.stdlib.LoggerFactory(),
    )

    logger = structlog.get_logger()

    def process_payment(user_id, amount, currency):
        # Generate a request ID for tracing
        request_id = str(uuid.uuid4())

        # Bind context to the logger for this scope
        log = logger.bind(request_id=request_id, user_id=user_id)
        log.info("payment_processing_started", amount=amount, currency=currency)

        try:
            if amount < 0:
                raise ValueError("Negative amount not allowed")

            # Simulate processing logic
            new_balance = 100 - amount
            log.info("payment_success", new_balance=new_balance)
            return new_balance
        except Exception as e:
            # Log the exception with full stack trace info
            log.error("payment_failed", error=str(e), exc_info=True)
            # Bare raise preserves the original traceback
            raise

    # Example usage
    try:
        process_payment("user_123", -50, "USD")
    except ValueError:
        # Already logged above; swallowed here only to keep the demo running
        pass

Distributed Tracing and Correlation IDs

In a microservices architecture, a single user action might trigger calls to five different services. If an error occurs in the “Inventory Service,” but the logs show an error in the “Checkout Service,” you are chasing ghosts. This is where correlation IDs (or trace IDs) become critical. By generating a unique ID at the ingress point (e.g., Nginx or an API gateway) and passing it through HTTP headers to every downstream service, you can stitch together the entire lifecycle of a request.

When debugging APIs, make sure your monitoring stack can visualize these traces. Tools like Jaeger or Zipkin are standard for this, letting you see latency bottlenecks and identify exactly which microservice failed.
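The correlation-ID flow described above can be sketched with nothing but the Python standard library. This is a minimal illustration rather than a reference implementation: the header name `X-Correlation-ID`, the `accept_request` and `outbound_headers` helpers, and the `checkout` logger name are all assumptions made for the example, and headers are modeled as a plain case-sensitive dict.

```python
import contextvars
import logging
import uuid

# Assumed header name; teams also use X-Request-ID or the W3C traceparent header.
CORRELATION_HEADER = "X-Correlation-ID"

# Holds the ID for the request currently being handled (safe across threads and asyncio tasks).
_correlation_id = contextvars.ContextVar("correlation_id", default="-")


class CorrelationIdFilter(logging.Filter):
    """Copy the current correlation ID onto every log record."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.correlation_id = _correlation_id.get()
        return True


def accept_request(headers: dict) -> str:
    """Reuse the caller's ID if present, otherwise mint one at the ingress point."""
    cid = headers.get(CORRELATION_HEADER) or str(uuid.uuid4())
    _correlation_id.set(cid)
    return cid


def outbound_headers() -> dict:
    """Headers to attach to every downstream HTTP call."""
    return {CORRELATION_HEADER: _correlation_id.get()}


handler = logging.StreamHandler()
handler.addFilter(CorrelationIdFilter())
handler.setFormatter(
    logging.Formatter("%(levelname)s cid=%(correlation_id)s %(message)s")
)
log = logging.getLogger("checkout")
log.addHandler(handler)
log.setLevel(logging.INFO)

# Simulated request arriving with an ID that was set upstream.
cid = accept_request({CORRELATION_HEADER: "abc-123"})
log.info("reserving inventory")
downstream = outbound_headers()
```

Every log line emitted while handling the request now carries the same `cid`, and the same value travels to the next service, so searching the log aggregator for one ID returns the whole request path.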

Section 2: Error Tracking and Frontend Forensics

While backend logs are essential, frontend debugging presents a unique set of challenges. You have no control over the client’s device, browser version, or network conditions. When a JavaScript error occurs in a React or Vue application, it often manifests as a white screen or a silent failure.

Source Maps and Stack Traces

Production JavaScript is almost always minified and bundled to optimize performance. A stack trace pointing to bundle.js:1:45032 provides zero insight. To solve this, use source maps, which map the minified code back to your original source. When integrating error tracking tools like Sentry or Bugsnag, uploading source maps from your CI/CD pipeline allows the tool to un-minify the stack trace, showing you the exact line of code in your original TypeScript or JavaScript file.

Here is an example of a global error handler in a Node.js (Express) environment that standardizes error responses, which helps with both Node.js debugging and API consistency.

    const express = require('express');

    const app = express();

    // Custom Error Class for operational errors
    class AppError extends Error {
      constructor(message, statusCode) {
        super(message);
        this.statusCode = statusCode;
        this.status = `${statusCode}`.startsWith('4') ? 'fail' : 'error';
        this.isOperational = true; // Marks error as trusted/known
        Error.captureStackTrace(this, this.constructor);
      }
    }

    // Global Error Handling Middleware
    const globalErrorHandler = (err, req, res, next) => {
      err.statusCode = err.statusCode || 500;
      err.status = err.status || 'error';

      if (process.env.NODE_ENV === 'production') {
        // Production: Don't leak stack traces to client
        if (err.isOperational) {
          // Trusted error: send message to client
          res.status(err.statusCode).json({
            status: err.status,
            message: err.message,
          });
        } else {
          // Programming or other unknown error: don't leak details
          console.error('ERROR 💥', err); // Log to server/monitoring tool
          res.status(500).json({
            status: 'error',
            message: 'Something went very wrong!',
          });
        }
      } else {
        // Development: Send full stack trace
        res.status(err.statusCode).json({
          status: err.status,
          error: err,
          message: err.message,
          stack: err.stack,
        });
      }
    };

    // Example route triggering an error
    app.get('/api/users/:id', (req, res, next) => {
      const user = null; // Simulate database miss
      if (!user) {
        return next(new AppError('No user found with that ID', 404));
      }
      res.status(200).json({ status: 'success', data: { user } });
    });

    // Register the error handler after all routes
    app.use(globalErrorHandler);

    app.listen(3000, () => {
      console.log('App running on port 3000...');
    });

Browser Debugging and Network Analysis

For web debugging, Chrome DevTools is the gold standard. However, debugging production issues often requires more than just the Console. Use the Network tab to inspect API payloads and headers. If you are debugging React or Vue applications, install the respective framework developer tools. These let you inspect component state and props in real time.

A critical tool for mobile debugging, or for investigating specific user sessions, is session replay. Tools like LogRocket or FullStory record DOM mutations, allowing developers to replay the user’s interaction that led to the bug. This eliminates the “steps to reproduce” guessing game.

Section 3: Advanced Techniques: Profiling and Memory

Some production bugs are not crashes; they are slow deaths. Memory leaks and CPU spikes are notoriously difficult to diagnose because they often only appear under load. Performance monitoring and profiling are the tools of choice here.
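For CPU-bound slowdowns, a deterministic profiler can show where time is going. The sketch below uses the standard library's `cProfile` and `pstats`; `slow_sum` and `profile_call` are hypothetical names invented for the illustration. Because `cProfile` adds noticeable overhead, it is usually better suited to a canary instance or a reproduced workload than to every production request.

```python
import cProfile
import io
import pstats


def slow_sum(n: int) -> int:
    """Stand-in for a hot code path."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def profile_call(func, *args, top: int = 5) -> str:
    """Run func under cProfile and return the top entries by cumulative time."""
    profiler = cProfile.Profile()
    profiler.enable()
    try:
        func(*args)
    finally:
        profiler.disable()

    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    stats.sort_stats("cumulative").print_stats(top)
    return buffer.getvalue()


report = profile_call(slow_sum, 200_000)
print(report)
```

The report lists call counts and cumulative time per function, which is often enough to tell a genuinely expensive function apart from one that is merely called too often.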

Memory Debugging and Leak Detection

In runtimes like Node.js or Python, objects that are still referenced but no longer needed can gradually consume all available RAM until the application crashes with an out-of-memory (OOM) error. Memory debugging involves taking heap snapshots and seeing what keeps growing. In a containerized environment (such as Docker or Kubernetes), a leak often shows up as containers or pods that are killed and restarted periodically.

Below is a Python example using tracemalloc to identify the lines of code responsible for the most memory allocation. This is a powerful technique for system-level debugging.

    import linecache
    import os
    import tracemalloc

    def display_top(snapshot, key_type='lineno', limit=3):
        # Ignore allocations made by the import machinery itself
        snapshot = snapshot.filter_traces((
            tracemalloc.Filter(False, "<frozen importlib._bootstrap>"),
            tracemalloc.Filter(False, "<unknown>"),
        ))
        top_stats = snapshot.statistics(key_type)

        print("Top %s lines" % limit)
        for index, stat in enumerate(top_stats[:limit], 1):
            frame = stat.traceback[0]
            # replace "/path/to/module/file.py" with "module/file.py"
            filename = os.sep.join(frame.filename.split(os.sep)[-2:])
            print("#%s: %s:%s: %.1f KiB"
                  % (index, filename, frame.lineno, stat.size / 1024))
            line = linecache.getline(frame.filename, frame.lineno).strip()
            if line:
                print('    %s' % line)

        other = top_stats[limit:]
        if other:
            size = sum(stat.size for stat in other)
            print("%s other: %.1f KiB" % (len(other), size / 1024))
        total = sum(stat.size for stat in top_stats)
        print("Total allocated size: %.1f KiB" % (total / 1024))

    # Start tracing
    tracemalloc.start()

    # --- Code causing memory pressure ---
    class HeavyObject:
        def __init__(self):
            self.data = [x for x in range(100000)]

    leaky_list = []

    def create_leak():
        for _ in range(50):
            leaky_list.append(HeavyObject())

    create_leak()
    # ------------------------------------

    # Take snapshot and display analysis
    snapshot = tracemalloc.take_snapshot()
    display_top(snapshot)

Dynamic Analysis and Logpoints

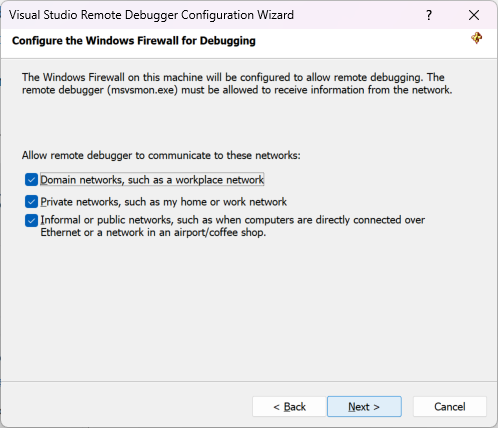

Sometimes you need to debug a live service without stopping it. Traditional breakpoints pause execution, which causes timeouts in production. Modern developer tools and cloud debuggers (like Google Cloud Debugger or non-breaking breakpoints in VS Code) allow you to set “logpoints.” A logpoint injects a dynamic log statement at a specific line of code without redeploying or pausing the app. This is invaluable for remote debugging when you need to inspect the value of a variable in a live user flow.

Section 4: Best Practices and Testing Strategies

Debugging shouldn’t start when an alert fires; it starts during development. The rise of non-deterministic software, such as LLM-based agents, highlights the need for a robust testing layer. Testing and debugging are two sides of the same coin. If you have zero test coverage, you are debugging via “prayer.”

Integration Testing for Edge Cases

Unit tests are great, but integration tests catch the issues that happen at the boundaries between systems. When dealing with AI agents or complex logic, you need to simulate edge cases. This ensures that when the “reality” of production hits, your system handles hallucinations or malformed data gracefully.

Here is an example of a test setup using Python’s pytest that mocks an external API response to test error-handling logic. This keeps API bugs from leaking into production.
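To make “handles malformed data gracefully” concrete, here is a minimal sketch of defensive parsing for a model reply, using only the standard library. The `parse_agent_reply` function, the `REQUIRED_FIELDS` schema, and the error codes are assumptions made for this example and do not belong to any particular framework.

```python
import json

REQUIRED_FIELDS = ("action", "arguments")  # Hypothetical schema for an agent reply.


def parse_agent_reply(raw: str) -> dict:
    """Validate a model reply; return an error envelope instead of raising."""
    try:
        payload = json.loads(raw)
    except json.JSONDecodeError as exc:
        # Truncated or non-JSON output, a common failure mode for LLM responses.
        return {"status": "error", "code": "parsing_error", "detail": exc.msg}

    if not isinstance(payload, dict):
        return {"status": "error", "code": "schema_error",
                "detail": "expected a JSON object"}

    missing = [name for name in REQUIRED_FIELDS if name not in payload]
    if missing:
        return {"status": "error", "code": "schema_error",
                "detail": f"missing fields: {missing}"}

    return {"status": "ok", "data": payload}


print(parse_agent_reply('{"action": "refund", "arguments": {"order": 42}}'))
print(parse_agent_reply("Incomplete JSON { data: ..."))
print(parse_agent_reply('{"action": "refund"}'))
```

Returning a structured error rather than raising gives callers a single shape to branch on, and the `code` field gives tests and dashboards something stable to assert against.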

    import pytest
    import requests
    from unittest.mock import patch, MagicMock

    from my_app import fetch_user_data

    # Mocking external dependencies is crucial for deterministic testing
    @patch('my_app.requests.get')
    def test_fetch_user_data_timeout(mock_get):
        # Simulate a network timeout. requests raises its own Timeout type,
        # which is not a subclass of the built-in TimeoutError.
        mock_get.side_effect = requests.exceptions.Timeout("Connection timed out")

        # The function should handle the error gracefully, not crash
        result = fetch_user_data("user_123")

        assert result['status'] == 'error'
        assert result['message'] == 'Service unavailable, please try again later'

    @patch('my_app.requests.get')
    def test_fetch_user_data_malformed_json(mock_get):
        # Simulate a 200 OK but with broken JSON (common in AI/LLM responses)
        mock_response = MagicMock()
        mock_response.status_code = 200
        mock_response.text = "Incomplete JSON { data: ..."
        mock_response.json.side_effect = ValueError("Expecting value")
        mock_get.return_value = mock_response

        result = fetch_user_data("user_123")

        # Ensure the system catches the parsing error
        assert result['status'] == 'error'
        assert 'parsing_error' in result['code']

CI/CD Debugging and Feature Flags

A well-instrumented CI/CD pipeline is meant to stop bad code before it reaches production. However, if a bug does slip through, you need a kill switch. Feature flags allow you to toggle functionality on or off without a new deployment. If a new “AI Assistant” feature starts throwing 500 Internal Server Errors, you can simply disable the flag. This decouples deployment from release and is a cornerstone of safe full-stack debugging.
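The kill-switch idea can be sketched in a few lines of Python. Everything named here is hypothetical: `FlagStore` is an in-memory stand-in for whatever flag service or config store a team actually uses, and `ai_assistant_reply` is a placeholder for the real feature.

```python
class FlagStore:
    """In-memory stand-in for a remote flag service or config store."""

    def __init__(self, initial=None):
        self._flags = dict(initial or {})

    def set(self, name: str, enabled: bool) -> None:
        self._flags[name] = enabled

    def is_enabled(self, name: str, default: bool = False) -> bool:
        # Unknown flags default to off so new features fail closed.
        return self._flags.get(name, default)


flags = FlagStore({"ai_assistant": True})


def ai_assistant_reply(prompt: str) -> str:
    # Placeholder for the real (hypothetical) model call.
    return f"assistant: {prompt}"


def handle_chat(prompt: str) -> dict:
    """Serve the feature only while its flag is on; otherwise degrade gracefully."""
    if not flags.is_enabled("ai_assistant"):
        return {"status": "disabled",
                "message": "The assistant is temporarily unavailable."}
    return {"status": "ok", "message": ai_assistant_reply(prompt)}


print(handle_chat("hello"))

# Flip the kill switch at runtime; the code path changes without a deploy.
flags.set("ai_assistant", False)
print(handle_chat("hello"))
```

The flag is checked on every request rather than once at startup, which is what lets a change in the store take effect without redeploying the service.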

Conclusion

Production debugging is not just about fixing bugs; it is about system resilience. By moving away from ad-hoc fixes and embracing a culture of observability, structured logging, and proactive testing, engineering teams can navigate the chaos of live environments with confidence. Whether you are debugging Java in a legacy monolith or TypeScript in a serverless stack, the principles remain the same: instrument your code, centralize your logs, and test your edge cases.

As software becomes more autonomous and complex, the “testing layer” becomes the most critical part of the stack. Don’t wait for the 3 AM page to realize your debugging tools are insufficient. Invest in static analysis, set up your error monitoring today, and treat debuggability as a first-class citizen in your development lifecycle.