I caught a junior engineer trying to mount the host filesystem on a production node last Tuesday. It was 2 PM, middle of a deployment, and alerts were firing. He wasn’t trying to be malicious. He just wanted to check a log file that wasn’t streaming to our aggregator.

He typed kubectl debug node/worker-3... and almost blew a hole in our compliance posture wide enough to drive a truck through.

This is the problem with kubectl debug. It is simultaneously the best thing that ever happened to Kubernetes troubleshooting and a loaded gun pointing directly at your cluster’s foot. We treat it like a casual utility—a slightly sharper knife in the drawer. It’s not. It’s a backdoor. A sanctioned, authenticated backdoor, sure, but a backdoor nonetheless.

The “Magic” is Just Linux Namespaces

Let’s strip away the abstraction for a second. When you run a debug command against a pod, you aren’t just “entering” it. Kubernetes is adding an ephemeral container to the running pod. That’s cool. With --target, it shares the target container’s process namespace. That’s useful.

But when you target a node? kubectl creates a brand-new pod on that node, running in the host’s PID, network, and IPC namespaces, with the host filesystem mounted at /host. If you use the flags everyone copies from Stack Overflow, that pod is privileged on top of all that.

I see this command in shell histories way too often:

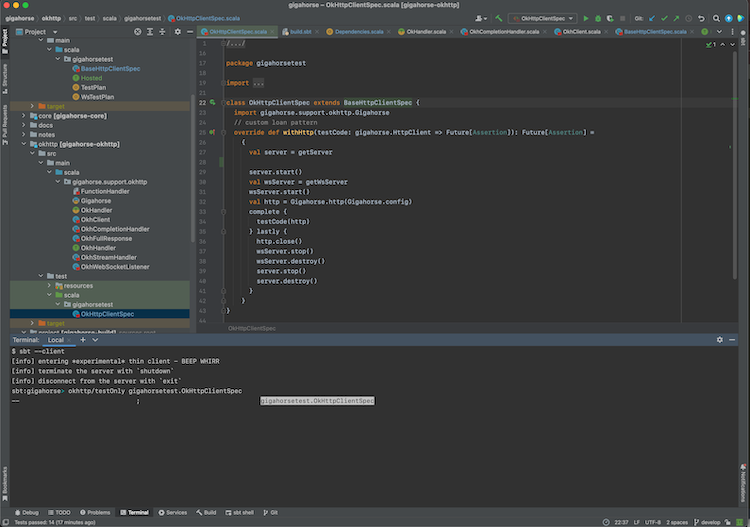

    kubectl debug node/k8s-worker-1 \
      -it \
      --image=ubuntu \
      --profile=sysadmin

That --profile=sysadmin flag? It’s basically sudo. You are root on the box. You can kill the kubelet. You can read secrets from other pods if you know where to look in /var/lib/kubelet. You can mess with iptables.
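To make the "it's just namespaces" point concrete, here is a rough sketch of the pod that command asks the API server to create. This is an approximation of kubectl's behavior, not a dump from a real cluster: the pod name suffix and the volume name are placeholders, and exact fields can vary between kubectl versions.

```yaml
# Approximate pod created by:
#   kubectl debug node/k8s-worker-1 -it --image=ubuntu --profile=sysadmin
apiVersion: v1
kind: Pod
metadata:
  name: node-debugger-k8s-worker-1-xxxxx  # placeholder; kubectl generates the suffix
spec:
  nodeName: k8s-worker-1   # pinned straight to the node
  hostPID: true            # sees every process on the host
  hostNetwork: true        # shares the host's network stack
  hostIPC: true
  restartPolicy: Never
  tolerations:
    - operator: Exists     # tolerates any taint on the node
  containers:
    - name: debugger
      image: ubuntu
      stdin: true
      tty: true
      securityContext:
        privileged: true   # this is what the sysadmin profile adds
      volumeMounts:
        - name: host-root  # placeholder volume name
          mountPath: /host
  volumes:
    - name: host-root
      hostPath:
        path: /            # the node's entire root filesystem
```

Nothing in there is exotic. It is an ordinary pod spec, which is exactly why ordinary pod-level controls are what stop it.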

I’ve had arguments with devs who think they need this level of access to debug a Java memory leak. You don’t. But because we haven’t given them the right tools (or the right sidecars), they reach for the nuclear option.

RBAC is Usually the Culprit

The permissions for debugging are weirdly granular and often misunderstood. To use kubectl debug effectively, you need permission to patch the pods/ephemeralcontainers subresource, plus create on pods/attach if you want an interactive session.

Most default ClusterRoles are too broad here. If you give someone edit access, they can probably attach a debug container. If that debug container runs as root (which it often does by default unless your Pod Security Admission blocks it), they have escalated privileges instantly.

Here is the scary part: Ephemeral containers bypass the original container’s startup command and readiness probes. They just start. If your security context constraints aren’t tight, I can attach a container with CAP_SYS_ADMIN to a restricted pod.

I spent a week last year auditing our RBAC because we realized our “read-only” support team could actually execute code in production via debug sidecars. We had to lock it down to a specific role that only SREs can assume during an incident.

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: restricted-debugger
    rules:
      # kubectl debug adds the ephemeral container with a PATCH on this subresource.
      - apiGroups: [""]
        resources: ["pods/ephemeralcontainers"]
        verbs: ["patch"]
      # Needed to look up the target pod and to attach to the debug container (-it).
      - apiGroups: [""]
        resources: ["pods"]
        verbs: ["get", "list"]
      - apiGroups: [""]
        resources: ["pods/attach"]
        verbs: ["create"]
      # Note: This doesn't limit WHAT image they can use.
      # That requires admission controllers.

The “Break-Glass” Reality

So, do we ban it? No. That’s stupid. When production is burning down at 3 AM, I don’t want to be fighting with permissions. I want a shell.

But we need to treat node debugging as a “break-glass” procedure. It shouldn’t be part of the daily workflow. If you are debugging nodes daily, your automation is broken.

In my current setup, we use a specific “Debug” namespace for tools, and we force the use of curated debug images. You don’t get to pull ubuntu:latest. You get our sre-tools:v4 image which has netshoot, delve (the debugger, not the banned word, ha), and some custom scripts pre-loaded.
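If you want a starting point for the "curated images only" rule, a ValidatingAdmissionPolicy is one way to do it without running a webhook. Treat this as a sketch under assumptions, not our actual config: it assumes a cluster new enough to serve admissionregistration.k8s.io/v1 (1.30+), and the registry path registry.example.com/sre-tools and the policy name are made up for illustration.

```yaml
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingAdmissionPolicy
metadata:
  name: curated-debug-images   # hypothetical name
spec:
  failurePolicy: Fail
  matchConstraints:
    resourceRules:
      # Adding an ephemeral container reaches admission as an UPDATE
      # on the pods/ephemeralcontainers subresource.
      - apiGroups: [""]
        apiVersions: ["v1"]
        operations: ["UPDATE"]
        resources: ["pods/ephemeralcontainers"]
  validations:
    # Every ephemeral container must come from the curated repository.
    # The registry path below is an assumption; substitute your own.
    - expression: >-
        !has(object.spec.ephemeralContainers) ||
        object.spec.ephemeralContainers.all(c,
          c.image.startsWith('registry.example.com/sre-tools:'))
      message: "Ephemeral containers must use the curated sre-tools image."
---
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingAdmissionPolicyBinding
metadata:
  name: curated-debug-images
spec:
  policyName: curated-debug-images
  validationActions: ["Deny"]
```

This only covers ephemeral containers. Node debug pods and --copy-to copies are regular pod creations, so they need their own rule on pods if you want the same image restriction there.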

Also, Pod Security Admission (PSA) is your friend here. We enforce the baseline standard globally, but we have a specific exemption for the SRE group during incidents. It’s annoying to set up. It breaks things initially. But it prevents “accidental root.”
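For reference, a cluster-wide PSA default with an incident exemption looks roughly like the file below, passed to the API server via --admission-control-config-file. One caveat worth knowing before you design around it: PSA exemptions are keyed on usernames, runtime classes, and namespaces, not groups, so a "group" exemption has to be mapped onto one of those. The username sre-breakglass and the namespace debug are placeholders, and the audit/warn levels are just one reasonable choice.

```yaml
apiVersion: apiserver.config.k8s.io/v1
kind: AdmissionConfiguration
plugins:
  - name: PodSecurity
    configuration:
      apiVersion: pod-security.admission.config.k8s.io/v1
      kind: PodSecurityConfiguration
      defaults:
        enforce: "baseline"          # blocks privileged pods, hostPID, hostPath, etc.
        enforce-version: "latest"
        audit: "restricted"          # assumption: log what a stricter level would reject
        audit-version: "latest"
        warn: "restricted"
        warn-version: "latest"
      exemptions:
        usernames: ["sre-breakglass"]  # placeholder identity used only during incidents
        runtimeClasses: []
        namespaces: ["debug"]          # placeholder for the tools namespace
```

With baseline enforced, the node debug pod sketched earlier gets rejected outright for everyone who isn't exempt, which is the "accidental root" case you care about.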

Copying Files is a Trap

Another thing that drives me nuts: people using debug containers to patch live code.

“I’ll just attach a container, install vim, edit the config file, and restart the process.”

Stop it. You are creating state drift that no one will remember in two days. The ephemeral container dies, but if you modified a shared volume or a file on the host? That change persists. I once spent three days chasing a bug that turned out to be a manual /etc/hosts edit someone made via a debug session three months prior.

A Better Workflow

If you need to inspect a crashing pod, don’t debug it in place if you can avoid it. Copy it.

    kubectl debug pod/crashing-app \
      --copy-to=app-debug \
      --share-processes \
      --image=my-debug-tools

This command creates a copy of the pod with the debug sidecar attached. You aren’t touching live traffic. You aren’t risking the production PID namespace. You can mess around, crash it again, inspect the memory dump, and then delete it. No trace left behind.

For distroless images (which everyone seems to use now, making debugging a nightmare), this --copy-to approach combined with --share-processes is the only way to stay sane. You get your tools in one container, looking at the processes of the other, without needing a shell in the target container.

Audit Everything

If you take nothing else away from this rant: Turn on audit logging for ephemeral containers.

I want to know who spun up a debug container, when they did it, and what image they used. If I see kubectl debug node/... in the logs, that triggers a P2 alert for us. Not because it’s always wrong, but because someone needs to verify why we needed root access to a node.
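A minimal audit policy that captures this might look like the sketch below. It is an assumption about what you would want logged, not a drop-in for your cluster: recording pod creation at Request level can get noisy, so you may want to narrow it by namespace. Rules are evaluated in order and the first match wins, which is why the specific rules come before the catch-all.

```yaml
apiVersion: audit.k8s.io/v1
kind: Policy
omitStages:
  - "RequestReceived"
rules:
  # Ephemeral containers: keep the request body so the image is recorded
  # alongside the user and timestamp.
  - level: Request
    verbs: ["patch", "update"]
    resources:
      - group: ""
        resources: ["pods/ephemeralcontainers"]
  # Node debugging and --copy-to both show up as plain pod creation.
  - level: Request
    verbs: ["create"]
    resources:
      - group: ""
        resources: ["pods"]
  # Everything else: metadata only.
  - level: Metadata
```

As far as I know, kubectl names node debug pods with a node-debugger- prefix by default, so that is a reasonable string to alert on in the pod-creation events. Don't rely on it as a security boundary, though, since anyone can hand-write the same pod under a different name.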

Kubernetes gives us sharp tools. kubectl debug is a scalpel. Just make sure you aren’t running with it.