In the world of software development, writing code is often the easy part. The true test of a developer’s mettle lies in Bug Fixing. It is an intricate dance of logic, patience, and systematic analysis. Whether you are dealing with a silent failure in a Python script, a race condition in Node.js, or a rendering glitch in React, the ability to effectively diagnose and resolve issues is what separates junior developers from senior engineers. Debugging is not merely a reactive process of patching holes; it is a proactive discipline that involves understanding system architecture, data flow, and the subtle nuances of your language’s runtime environment.

The cost of a bug rises steeply the further it travels down the deployment pipeline. A syntax error caught by a linter costs pennies; a logic error found during Unit Test Debugging costs dollars; but a critical failure found during Production Debugging can cost a company its reputation and significant revenue. This article explores the depths of Software Debugging, moving beyond simple print statements to advanced strategies involving Static Analysis, Memory Debugging, and Remote Debugging. We will dissect the methodology required to turn confusing Stack Traces into actionable solutions and ensure that your code reviews focus on architecture rather than arguing over broken logic.

The Scientific Method of Debugging: Core Concepts

Effective bug fixing is essentially the scientific method applied to computer science. It requires a shift in mindset from “why isn’t this working?” to “what is the current state of the system, and how does it diverge from the expected state?” The first step in any debugging session is Reproducibility. If you cannot reliably reproduce a bug, you cannot verify its fix. This is often the most challenging phase, particularly with Async Debugging where timing and network latency introduce non-deterministic behavior.
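One common source of non-determinism is randomness, and it can be brought under control by injecting the random generator instead of using a hidden global one. The following is a minimal sketch under that assumption; `shuffle_batch`, `reproduce`, and the seed value are hypothetical names chosen for illustration. Timing-related flakiness in async code needs other techniques, but the principle of controlling the nondeterministic input is the same.

```python
import random
from typing import List, Optional


def shuffle_batch(items: List[int], rng: Optional[random.Random] = None) -> List[int]:
    """Return a shuffled copy of items, drawing randomness from an injectable generator."""
    if rng is None:
        rng = random.Random()  # unseeded: a different order on every run
    shuffled = list(items)
    rng.shuffle(shuffled)
    return shuffled


def reproduce(seed: int) -> List[int]:
    # Record the seed when a failure occurs; replaying it yields the identical ordering.
    return shuffle_batch(list(range(10)), random.Random(seed))


first_run = reproduce(seed=1234)
replay = reproduce(seed=1234)
assert first_run == replay  # same seed, same order, so the bug reproduces on demand
```

In practice, the seed would be generated once per run and written to the log, so a failure report carries everything needed to replay it.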

Understanding the Stack Trace

The Stack Trace is the map that leads to the treasure (or the disaster). In Java Development or Python Development, the stack trace shows the exact chain of calls that led to the crash. However, novices often skim past these logs. A seasoned developer looks for the “first cause”: the deepest frame that belongs to your own code, usually just before execution enters library code. Orientation matters here, because Python prints the most recent call last, while Java prints the innermost frame first. In JavaScript Debugging, particularly with minified production code, stack traces point into unreadable bundles unless Source Maps are available.
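Finding that first cause can be automated in Python with the standard `traceback` module. This is a sketch that assumes "your code" can be identified by a substring of the file path; in a real project, the marker would typically be your package directory. `first_cause`, `load_config`, and `demo` are hypothetical helpers.

```python
import json
import traceback
from typing import Optional


def first_cause(exc: BaseException, project_marker: str) -> Optional[traceback.FrameSummary]:
    """Return the innermost frame whose file path contains project_marker.

    Python tracebacks list the most recent call last, so we walk them in
    reverse and stop at the first frame that belongs to our own code.
    """
    for frame in reversed(traceback.extract_tb(exc.__traceback__)):
        if project_marker in frame.filename:
            return frame
    return None


def load_config(raw: str) -> dict:
    # Our code hands the string to the standard library, which raises inside json's own modules.
    return json.loads(raw)


def demo() -> Optional[traceback.FrameSummary]:
    try:
        load_config("{not valid json")
    except json.JSONDecodeError as exc:
        # Treat frames from this file as "our code"; everything else is library code.
        return first_cause(exc, project_marker=load_config.__code__.co_filename)
    return None


frame = demo()
if frame is not None:
    print(f"{frame.filename}:{frame.lineno} in {frame.name}: {frame.line}")
```

The frames inside `json` are skipped, and the reported frame is the `load_config` call that passed bad input to the library. This is usually where the fix belongs.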

One of the most common pitfalls in Python Debugging is relying on `print()` statements for introspection. While quick, they clutter the code and offer no context regarding severity or timestamps. A robust debugging strategy uses a proper logging framework, such as Python's built-in `logging` module.

import logging

# Configure logging to capture the severity and the message

logging.basicConfig(

level=logging.DEBUG,

format='%(asctime)s - %(name)s - %(levelname)s - %(message)s'

)

logger = logging.getLogger(__name__)

def process_user_data(user_data):

logger.info(f"Starting processing for user: {user_data.get('id')}")

try:

# Simulating a potential KeyError or logic error

if 'email' not in user_data:

raise ValueError("Missing email address")

# Simulating complex logic

processed_value = user_data['score'] * 1.5

logger.debug(f"Calculated score: {processed_value}")

return processed_value

except Exception as e:

# Capturing the stack trace is crucial for Error Tracking

logger.error("Failed to process user data", exc_info=True)

return None

# Simulation

data = {'id': 101, 'score': 50} # Missing email

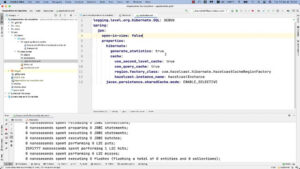

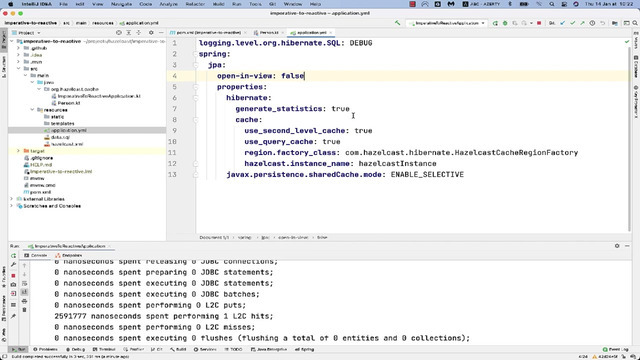

process_user_data(data)In the example above, using `exc_info=True` ensures that the full traceback is recorded in the logs. This is essential for Backend Debugging where you might be using tools like Sentry or Datadog for Error Monitoring. Without structured logging, you are flying blind in a production environment.
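The format string above produces human-readable text lines. Log pipelines often handle machine-parseable output better, with one JSON object per line. The following is a minimal sketch using only the standard library. The field names and the `user_id` extra field are assumptions chosen for illustration, not a schema that Sentry or Datadog require.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "timestamp": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Fields passed via `extra=` become attributes on the record (user_id is hypothetical).
        user_id = getattr(record, "user_id", None)
        if user_id is not None:
            payload["user_id"] = user_id
        if record.exc_info:
            payload["traceback"] = self.formatException(record.exc_info)
        return json.dumps(payload)


def build_json_logger(name: str = "app") -> logging.Logger:
    handler = logging.StreamHandler(sys.stdout)
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger(name)
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False  # avoid duplicate lines from the root logger
    return logger


if __name__ == "__main__":
    log = build_json_logger()
    log.info("processed user", extra={"user_id": 101})
```

Because every line is valid JSON, an aggregator can filter by `level` or `user_id` without fragile regular expressions.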

Frontend and Browser-Based Debugging Strategies

Frontend Debugging presents a unique set of challenges because the code runs on the client’s machine, an environment you do not control. Whether you are doing React Debugging, Vue Debugging, or Angular Debugging, the browser’s developer tools are your primary weapon. Chrome DevTools has evolved into a sophisticated IDE in its own right, allowing for Network Debugging, performance profiling, and memory analysis.

Breakpoints Over Console Logs

While `console.log` is ubiquitous in JavaScript Development, it is inefficient for complex state analysis. Using the `debugger` statement or setting conditional breakpoints in the browser lets you pause execution and inspect the variables in scope in real time. This is critical when debugging TypeScript or modern frameworks, where data flows in one direction and state changes can be subtle.

A common issue in Web Development is handling asynchronous API calls. When an API fails, the UI might enter an inconsistent state. Here is how you might handle and debug a fetch operation in a modern JavaScript environment, ensuring that JavaScript Errors are caught and analyzed.

```javascript
async function fetchDashboardData(userId) {
  const apiUrl = `/api/v1/dashboard/${userId}`;

  try {
    // Uncomment to pause execution here while DevTools is open
    // debugger;

    const response = await fetch(apiUrl);

    if (!response.ok) {
      // Including the status code in the message makes these failures easy to filter in logs
      throw new Error(`HTTP error! status: ${response.status}`);
    }

    const data = await response.json();
    console.table(data); // A more readable way to visualize arrays/objects
    return data;
  } catch (error) {
    // This block is critical for API Debugging
    console.group('Dashboard Data Fetch Failed');
    console.error('Endpoint:', apiUrl);
    console.error('Timestamp:', new Date().toISOString());
    console.error('Error Details:', error);
    console.groupEnd();

    // In a real app, send this to your Error Tracking service
    // reportErrorToService(error);
    return null;
  }
}
```

By using `console.group` and `console.table`, you make the debugging output readable. For Network Debugging, the “Network” tab lets you inspect request headers, payloads, and response bodies. Often, a bug reported as a “Frontend Issue” is actually a “Backend Issue”, such as an API sending malformed JSON. In that case, `response.json()` rejects, and the catch block above logs the failure.

Advanced Techniques: Backend, Async, and Memory

As we move to Backend Debugging and Full Stack Debugging, the complexity increases. We deal with concurrency, memory management, and microservices. Node.js Debugging is particularly notorious for “Unhandled Promise Rejections” and for memory leaks caused by closures or event listeners that are never removed.

Memory Leaks and Performance

Memory Debugging is the process of identifying code that allocates memory but never releases it. In garbage-collected languages such as Java, Python, and JavaScript, the runtime reclaims most unused memory, but developers can still hold references that prevent collection. In Node.js Development, a common pattern causing leaks is attaching event listeners to long-lived objects, such as a global emitter, without detaching them.
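The same listener leak can be reproduced in Python. This sketch assumes a hypothetical `EventBus` that keeps strong references to its callbacks. A subscribed bound method keeps its owning object alive. `weakref` is used here only to observe whether the widget was actually freed.

```python
import gc
import weakref
from typing import Callable, List


class EventBus:
    """Hypothetical long-lived event bus holding strong references to listeners."""

    def __init__(self) -> None:
        self._listeners: List[Callable[[str], None]] = []

    def subscribe(self, listener: Callable[[str], None]) -> None:
        self._listeners.append(listener)

    def unsubscribe(self, listener: Callable[[str], None]) -> None:
        self._listeners.remove(listener)

    def emit(self, event: str) -> None:
        for listener in list(self._listeners):
            listener(event)


class Widget:
    def __init__(self, bus: EventBus) -> None:
        self.bus = bus
        self.payload = bytearray(1024 * 1024)  # 1 MiB so the leak is noticeable
        bus.subscribe(self.on_event)  # the bound method references self

    def on_event(self, event: str) -> None:
        pass

    def close(self) -> None:
        # Detach the listener so the bus no longer keeps this widget alive
        self.bus.unsubscribe(self.on_event)


def is_collected(close_first: bool) -> bool:
    bus = EventBus()
    widget = Widget(bus)
    ref = weakref.ref(widget)
    if close_first:
        widget.close()
    del widget
    gc.collect()
    return ref() is None  # True only if nothing else still references the widget


if __name__ == "__main__":
    print("without close():", is_collected(close_first=False))
    print("with close():", is_collected(close_first=True))
```

Detaching in `close()` is the fix shown here. An alternative design stores `weakref.WeakMethod` references in the bus, so subscriptions never keep their owners alive.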

Profiling Tools are essential here. For Node.js, you can start the process with the `--inspect` flag and attach Chrome DevTools to your server instance. For Python Debugging, `cProfile` reports CPU time per function, and `memory_profiler` reports line-by-line memory usage, which helps locate hot paths and memory growth.
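The following is a minimal `cProfile` sketch that relies only on the standard library (`cProfile`, `pstats`). The `slow_sum` function is a hypothetical, deliberately quadratic hot path, and `profile_call` is an illustrative helper rather than a library API.

```python
import cProfile
import io
import pstats
from typing import Any, Callable, Tuple


def slow_sum(n: int) -> int:
    """Hypothetical hot path: rebuilds and sums a list on every iteration (quadratic)."""
    total = 0
    for i in range(n):
        total += sum(list(range(i)))
    return total


def profile_call(func: Callable[..., Any], *args: Any, limit: int = 5) -> Tuple[Any, str]:
    """Run func under cProfile and return (result, text report of the top entries)."""
    profiler = cProfile.Profile()
    profiler.enable()
    try:
        result = func(*args)
    finally:
        profiler.disable()
    stream = io.StringIO()
    stats = pstats.Stats(profiler, stream=stream)
    stats.sort_stats("cumulative").print_stats(limit)  # most expensive call trees first
    return result, stream.getvalue()


if __name__ == "__main__":
    value, report = profile_call(slow_sum, 2000)
    print(value)
    print(report)
```

Sorting by cumulative time surfaces the call tree that dominates the run. Sorting by `"tottime"` instead highlights functions that are expensive in their own bodies.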

Debugging Microservices and Containers

In modern Cloud Native architectures, you are likely doing Docker Debugging or Kubernetes Debugging. A bug might only manifest when services interact. Distributed Tracing (using tools like Jaeger or Zipkin) becomes vital to trace a request as it hops from an Nginx load balancer to a Django service, and finally to a Postgres database.
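Full distributed tracing is what tools like Jaeger or Zipkin provide. The core idea, attaching one request ID to every log line as a request moves through the system, can be sketched with the standard library. This is a minimal sketch, not the OpenTelemetry API. It assumes that an upstream service forwards the ID, for example in a hypothetical `X-Request-ID` header, and that this service would forward the same ID on its own outgoing calls.

```python
import contextvars
import io
import logging
import uuid
from typing import Optional, TextIO

# Correlation ID for the request currently being handled; "-" when outside a request
request_id_var = contextvars.ContextVar("request_id", default="-")


class RequestIdFilter(logging.Filter):
    """Copy the current correlation ID onto every log record."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.request_id = request_id_var.get()
        return True


def build_logger(stream: TextIO) -> logging.Logger:
    logger = logging.getLogger("tracing_demo")
    logger.setLevel(logging.INFO)
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter("%(request_id)s %(name)s %(message)s"))
    handler.addFilter(RequestIdFilter())
    logger.handlers = [handler]
    return logger


def handle_request(logger: logging.Logger, incoming_id: Optional[str] = None) -> str:
    # Reuse the ID forwarded by the upstream service (hypothetical X-Request-ID header);
    # otherwise this service starts a new one.
    request_id = incoming_id or uuid.uuid4().hex
    token = request_id_var.set(request_id)
    try:
        logger.info("querying database")  # every log line now carries the ID
        return request_id
    finally:
        request_id_var.reset(token)


if __name__ == "__main__":
    buffer = io.StringIO()
    handle_request(build_logger(buffer), incoming_id="abc123")
    print(buffer.getvalue(), end="")  # abc123 tracing_demo querying database
```

`contextvars` keeps the ID isolated per asyncio task or thread context, so concurrent requests do not overwrite each other's IDs.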

Here is an example of a Node.js pattern that sends route errors to a single error handler and logs unhandled promise rejections instead of letting them crash a containerized service. This is a key aspect of System Debugging.

```javascript
const express = require('express');

const app = express();

// Middleware for request logging
app.use((req, res, next) => {
  console.log(`[${new Date().toISOString()}] ${req.method} ${req.url}`);
  next();
});

app.get('/risky-operation', async (req, res, next) => {
  try {
    // Simulating an async operation that fails
    await performHeavyCalculation();
    res.send('Success');
  } catch (error) {
    // Forward the error to the centralized error-handling middleware below
    next(error);
  }
});

// Centralized Error Handling Middleware
// Express recognizes error handlers by their four-argument signature
app.use((err, req, res, next) => {
  if (res.headersSent) {
    // A response is already in progress; let Express's default handler close it
    return next(err);
  }

  // Critical for API Development: don't leak internal error details to the client in production
  const isProduction = process.env.NODE_ENV === 'production';
  console.error('CRITICAL ERROR:', err.stack);

  res.status(500).json({
    error: 'Internal Server Error',
    message: isProduction ? 'An unexpected error occurred' : err.message
  });
});

// Global safety net for Node.js
process.on('unhandledRejection', (reason, promise) => {
  console.error('Unhandled Rejection at:', promise, 'reason:', reason);
  // In Kubernetes, we might want to exit so the pod restarts
  // process.exit(1);
});

function performHeavyCalculation() {
  return new Promise((resolve, reject) => {
    setTimeout(() => reject(new Error("Out of memory simulation")), 100);
  });
}

app.listen(3000, () => console.log('Server running on port 3000'));
```

This code demonstrates two Express Debugging best practices: centralized error handling and global rejection monitoring. Every route that forwards its errors with `next(error)` gets the same logging and the same safe response. A rejection that no code handles is logged by the process-level listener. Without that listener, Node.js 15 and later terminates the process on an unhandled rejection by default. In Express 4, however, a forgotten try/catch in an async route still leaves the request hanging, because the rejection never reaches `next()`. Express 5 forwards rejected promises from async handlers to the error middleware automatically.

Best Practices: Prevention and Optimization

The ultimate form of bug fixing is bug prevention. This is where Code Analysis and Static Analysis tools come into play. Linters such as ESLint for JavaScript and Pylint for Python, along with platforms like SonarQube that cover many languages, can flag likely bugs, resource leaks, and code smells. Type checkers such as the TypeScript compiler or mypy catch type errors. All of these run before the code ever reaches a Pull Request.
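To show how static analysis works without executing the code, here is a toy check built on Python's standard `ast` module. It flags bare `except:` clauses, which swallow every exception (including `KeyboardInterrupt`) and hide bugs. Pylint and flake8 ship comparable built-in rules; this sketch only illustrates the mechanism, and `find_bare_excepts` is a hypothetical name.

```python
import ast
from typing import List


def find_bare_excepts(source: str) -> List[int]:
    """Return the line numbers of bare `except:` clauses without running the code."""
    tree = ast.parse(source)
    return sorted(
        node.lineno
        for node in ast.walk(tree)
        # A bare `except:` is an ExceptHandler with no exception type
        if isinstance(node, ast.ExceptHandler) and node.type is None
    )


SAMPLE = '''
def load(path):
    try:
        return open(path).read()
    except:
        return None
'''

print(find_bare_excepts(SAMPLE))  # [5]
```

Real linters apply hundreds of rules like this one to the same syntax tree, which is why they can run on every commit at little cost.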

Automated Testing and CI/CD Debugging

Test-Driven Development (TDD) is one way to build debugging into your workflow, and Unit Test Debugging is significantly faster than manual testing. When a bug is reported, start by writing a test case that reproduces it and confirm that the test fails. Then fix the code until the test passes. Keeping that test in the suite guards against regression.
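The following sketch applies this workflow with Python's built-in `unittest`. The `parse_price` function and the thousands-separator bug it fixes are hypothetical. The regression test is written first from the bug report, fails against the original code, and then passes once the fix is in place.

```python
import unittest


def parse_price(text: str) -> float:
    """Hypothetical helper: convert a price string such as "$1,299.99" to a float.

    Assumed bug: the original version called float(text) directly and crashed
    on the currency symbol and thousands separator.
    """
    cleaned = text.strip().lstrip("$").replace(",", "")
    return float(cleaned)


class ParsePriceRegressionTest(unittest.TestCase):
    def test_plain_price(self) -> None:
        self.assertEqual(parse_price("19.99"), 19.99)

    def test_thousands_separator_regression(self) -> None:
        # Reproduces the reported bug; this failed before the fix above.
        self.assertEqual(parse_price("$1,299.99"), 1299.99)


if __name__ == "__main__":
    unittest.main()
```

Naming the test after the bug makes it obvious in future CI output which past defect has resurfaced.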

In CI/CD Debugging, failures often happen in the pipeline but not locally due to environmental differences. Using Docker to replicate the CI environment locally is a crucial skill. Furthermore, ensuring your tests provide verbose output on failure helps diagnose why a pipeline broke.
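When a failure only happens in the pipeline, recording the environment on both sides makes the difference visible. The following is a minimal sketch using only the standard library. The list of environment variables is an assumption and is not exhaustive; `environment_fingerprint` and `diff_fingerprints` are hypothetical helper names.

```python
import json
import os
import platform
import sys
from typing import Dict, Iterable, Optional, Tuple

# Variables that often differ between a laptop and a CI runner (assumed, non-exhaustive)
DEFAULT_VARS = ("CI", "TZ", "LANG", "LC_ALL", "PYTHONHASHSEED")

Fingerprint = Dict[str, Optional[str]]


def environment_fingerprint(var_names: Iterable[str] = DEFAULT_VARS) -> Fingerprint:
    """Collect interpreter, OS, and selected environment details for comparison."""
    info: Fingerprint = {
        "python_version": platform.python_version(),
        "implementation": platform.python_implementation(),
        "platform": platform.platform(),
        "executable": sys.executable,
        "cwd": os.getcwd(),
    }
    for name in var_names:
        info[f"env.{name}"] = os.environ.get(name)  # None when the variable is unset
    return info


def diff_fingerprints(local: Fingerprint, ci: Fingerprint) -> Dict[str, Tuple[Optional[str], Optional[str]]]:
    """Return only the keys whose values differ, as (local, ci) pairs."""
    keys = sorted(set(local) | set(ci))
    return {key: (local.get(key), ci.get(key)) for key in keys if local.get(key) != ci.get(key)}


if __name__ == "__main__":
    # Print as JSON so the CI log output can be saved and diffed against a local run
    print(json.dumps(environment_fingerprint(), indent=2, sort_keys=True))
```

Running the script as an early pipeline step, and once locally, turns a vague "works on my machine" into a concrete list of differences.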

Below is an example of a TypeScript unit test using Jest. Writing tests that explicitly check for error conditions is a vital part of Application Debugging.

```typescript
// userService.ts
export class UserService {
  private users: Map<string, string> = new Map();

  register(username: string): void {
    if (!username || username.trim() === "") {
      throw new Error("Username cannot be empty");
    }
    if (this.users.has(username)) {
      throw new Error("User already exists");
    }
    this.users.set(username, "active");
  }
}
```

```typescript
// userService.test.ts
import { UserService } from './userService';

describe('UserService Debugging', () => {
  let service: UserService;

  beforeEach(() => {
    service = new UserService();
  });

  test('should throw error when registering empty username', () => {
    // We expect the function to throw, validating our validation logic
    expect(() => service.register(" ")).toThrow("Username cannot be empty");
  });

  test('should prevent duplicate registrations', () => {
    service.register("debug_master");
    // This test confirms our "bug fix" for duplicates works
    expect(() => service.register("debug_master")).toThrow("User already exists");
  });
});
```

The Human Element of Debugging

Finally, it is vital to acknowledge that Bug Fixing is a collaborative effort. When submitting fixes, clarity is paramount. A fix that works but is unintelligible is a “future bug” waiting to happen. Clean, well-documented code reduces the friction in code reviews. If you find yourself debating the validity of a fix with a peer, the solution is often to improve the code’s readability or add a comprehensive test case that proves the logic is sound. When the code clearly demonstrates the solution through tests and clean architecture, the debate evaporates, and the focus returns to engineering excellence.

Conclusion

Mastering Bug Fixing requires a blend of technical knowledge, tool proficiency, and disciplined process. From utilizing Chrome DevTools for Web Debugging to implementing structured logging for Python Errors, and leveraging Static Analysis to prevent issues upstream, the landscape is vast. By adopting these Debugging Best Practices, you not only improve the stability of your applications but also streamline the development lifecycle.

Remember, a bug is simply a discrepancy between your mental model of the code and the reality of its execution. Use Profiling Tools, check your Stack Traces, write regression tests, and automate your checks. The goal is to move from “debugging the conversation” to debugging the code, ensuring that your software is robust, performant, and ready for production.