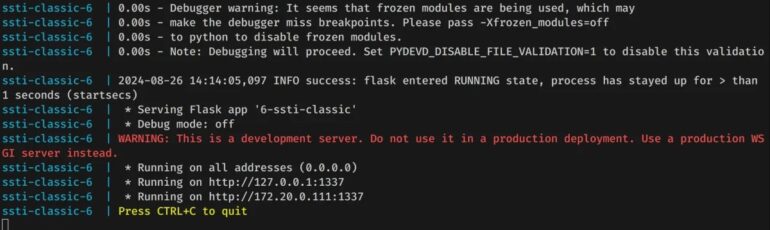

| Error Name | Description | Solution |
|---|---|---|
| NotImplementedError: Cannot convert a symbolic tensor (lstm_2/strided_slice:0) to a numpy array. | This error happens when there is an attempt to convert a symbolic tensor to a NumPy array. It is a fairly common issue when using the Keras API of TensorFlow for deep learning models. It is usually caused by applying an operation that needs concrete values to a tensor that is not immediately executable (a symbolic tensor). | One way to get rid of this error is to make sure eager execution is active in TensorFlow. Another approach is to avoid mixing NumPy operations with Keras tensors. A more refined solution is to delay the conversion of tensors into NumPy arrays until the data is concrete rather than symbolic. |


Describing it further, a symbolic tensor is a placeholder that holds no specific values; instead, it describes a computation that will produce a value when the tensor is evaluated, for example during training or prediction. Many TensorFlow operations work on symbolic tensors, but NumPy functions need concrete values and cannot operate on them.

While a model is being defined or compiled, the tensors inside it have not yet been given concrete values. People often run into this issue with LSTM layers in TensorFlow, for example when trying to inspect results right after defining the model. Because a symbolic tensor is only a node in TensorFlow's computational graph, there is nothing for NumPy to convert until the graph is actually run on data.

To demonstrate, if you were building a Keras model and attempted to convert a tensor to a numpy array inside the model like this:

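    from tensorflow import keras
    from tensorflow.keras import layers

    def Model_maker():
        inputs = keras.Input(shape=(32,))
        x = layers.Dense(64, activation="relu")(inputs)
        predictions = layers.Dense(10)(x)
        # Fails: `predictions` is symbolic and has no concrete values yet
        np_array = predictions.numpy()
        return keras.Model(inputs=inputs, outputs=predictions)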

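You would encounter an error on the conversion line. Depending on the TensorFlow release, it is the `NotImplementedError` above or a closely related error such as an `AttributeError`. Here, we are trying to convert a symbolic tensor into a NumPy array while the model is still being defined, before any data has flowed through it.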

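A potential workaround is to make sure eager execution is active. In TensorFlow 2.x it is on by default; in TensorFlow 1.x you enable it with `tf.enable_eager_execution()` at the beginning of your script (the same call is available as `tf.compat.v1.enable_eager_execution()` in 2.x). However, eager execution can be slower than graph execution, so it should be used cautiously.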

You are far less likely to hit this error if you wait to convert tensors to NumPy arrays until after you fit your model, or if you work only within the framework's own operations and avoid unnecessary conversions.

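    model = Model_maker()  # built without the failing .numpy() line
    model.compile(optimizer="adam", loss="mse")  # compile before fitting
    model.fit(data, labels)  # Assume we have some existing data and labels
    # predict() already returns a NumPy array, so no .numpy() call is needed
    np_array = model.predict(data)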

This allows TensorFlow to handle the intermediate operations efficiently, without forcing conversions on tensors that have no values yet.

Let's delve deeper into `NotImplementedError` and its causes in relation to the "NotImplementedError: Cannot convert a symbolic Tensor (lstm_2/Strided_Slice:0) to a Numpy array" problem.

The `NotImplementedError` exception is raised when an abstract method that needs to be implemented in an inherited class is not actually implemented. In other words, it signals that the requested operation has not been defined for the object at hand.
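To make the general Python meaning concrete, here is a minimal sketch. The class and function names (`Converter`, `ListConverter`, `try_convert`) are hypothetical and exist only for this illustration; they are not part of TensorFlow.

```python
class Converter:
    """Hypothetical base class: subclasses decide how to convert a value."""

    def to_array(self, value):
        # The base class has no generic conversion, so it says so explicitly.
        raise NotImplementedError(
            f"{type(self).__name__} cannot convert {value!r} to an array"
        )


class ListConverter(Converter):
    def to_array(self, value):
        return list(value)


def try_convert(converter, value):
    """Return the converted value, or a message if conversion is unsupported."""
    try:
        return converter.to_array(value)
    except NotImplementedError as exc:
        return f"unsupported: {exc}"


print(try_convert(ListConverter(), (1, 2)))  # [1, 2]
print(try_convert(Converter(), (1, 2)))      # unsupported: Converter cannot convert (1, 2) to an array
```

TensorFlow uses the same mechanism: a symbolic tensor is an object for which "convert to a NumPy array" has no meaningful implementation, so the attempt raises the exception.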

In the context of TensorFlow and neural network layers such as Long Short-Term Memory (LSTM), the problem revolves around how TensorFlow 2.x versions handle the conversion of a symbolic tensor to a numpy array.

A symbolic tensor is a type of tensor that acts as a placeholder for TensorFlow. It doesn't contain any actual values until the graph it belongs to is run on real data, for example when you call the model on a batch. NumPy, on the other hand, is a widely used Python library for working with arrays of concrete values.

TensorFlow 2.x uses eager execution by default, which runs operations immediately as they are called from Python. Yet some parts of the API, such as model construction in `tf.keras`, are still graph-based and use symbolic tensors. When we attempt to convert such a symbolic tensor directly to a NumPy array, for example with `numpy()`, it leads to the error:

    NotImplementedError: Cannot convert a symbolic Tensor (lstm_2/Strided_Slice:0) to a Numpy array

This error occurs because a symbolic tensor has no values to convert, so TensorFlow does not implement this conversion.

So, here are some steps you can take to avoid or resolve this problem:

- Avoid calling the `numpy()` method directly on symbolic tensors.
- If you need the numerical data from the tensor in graph mode, consider running the tensor through a `Session` object first.

For comparison, here is a conversion that does work:

    import tensorflow as tf

    # Create a tensor
    a = tf.constant([1.0, 2.0])
    print(a)

    # Convert the TensorFlow tensor to a NumPy array
    b = a.numpy()

Please note that in the above example, `a` is a constant tensor containing actual values, not a symbolic tensor. Thus, calling `numpy()` on it will not raise a NotImplementedError.

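For dealing with symbolic tensors in graph mode, we could use a session. In TensorFlow 2.x these TensorFlow 1.x APIs live under `tf.compat.v1`:

    import tensorflow as tf

    tf.compat.v1.disable_eager_execution()  # placeholders require graph mode
    x = tf.compat.v1.placeholder(tf.float32, shape=(1, 1))
    y = tf.add(x, x)
    with tf.compat.v1.Session() as session:
        # session.run returns the concrete result as a NumPy array
        result = session.run(y, feed_dict={x: [[1]]})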

You can read more about the deep functionality of TensorFlow including Tensors in their official guide.

Ensuring proper handling of tensors plays a crucial role in leveraging TensorFlow’s capability to build complex machine learning models. While NotImplementedErrors can sometimes be baffling, understanding them provides us an opportunity to better comprehend the architecture and dynamics of our coding environment.

In the Python TensorFlow library, while developing Deep Learning models, you can come across the error “Cannot convert a symbolic Tensor (lstm_2/strided_slice:0) to a numpy array”. This is typically coupled with “NotImplementedError”.

To decode this error message, we first need to understand what a symbolic tensor is. Internally, TensorFlow works by building a computation graph. The nodes in this graph are called operations, and the edges represent tensors that flow between operations. Initially, these tensors are just symbolic – they don’t hold any values; they only specify the type of computation to perform.
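The idea of a graph whose tensors "only specify the computation" can be sketched with a toy model in plain Python. This is an illustration under stated assumptions, not TensorFlow's implementation: `SymbolicValue` and its `evaluate` method are hypothetical names invented for this example.

```python
class SymbolicValue:
    """Toy stand-in for a symbolic tensor: a recipe, not a number."""

    def __init__(self, name, op=None, inputs=()):
        self.name = name
        self.op = op          # function that combines the evaluated inputs
        self.inputs = inputs  # upstream SymbolicValue nodes

    def __add__(self, other):
        # Adding two symbolic values creates a new graph node; nothing is computed.
        return SymbolicValue(
            f"add({self.name},{other.name})",
            op=lambda a, b: a + b,
            inputs=(self, other),
        )

    def __array__(self, dtype=None, copy=None):
        # Mirrors the spirit of the real error: there is no value to convert yet.
        raise NotImplementedError(
            f"Cannot convert a symbolic value ({self.name}) to an array"
        )

    def evaluate(self, feed):
        """Compute a concrete value once every placeholder has been fed."""
        if self.op is None:
            return feed[self.name]
        return self.op(*(node.evaluate(feed) for node in self.inputs))


x = SymbolicValue("x")
y = x + x                    # builds the graph only
print(y.name)                # add(x,x)
print(y.evaluate({"x": 3}))  # 6
```

Only `evaluate`, which plays the role of running the graph on data, produces a number; any earlier attempt to treat `y` as an array has nothing to work with.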

The name in the message, `lstm_2/strided_slice:0`, identifies the symbolic tensor involved: the output of a `strided_slice` (slicing) operation inside the layer named `lstm_2`. Why is it symbolic? LSTM layers can return whole sequences as well as the prediction at an individual timestep, and they slice their inputs and outputs internally to do so. While the model is being built, those slices are still symbolic, so any step that needs their actual contents at that moment runs into this problem.

Your program is trying to convert this Symbolic Tensor into a NumPy array, which results in the said error because TensorFlow doesn’t know how to convert a non-evaluated, symbolic value into an actual number or a sequence of numbers (a NumPy array, in this case).

    from tensorflow import keras
    from tensorflow.keras import layers

    model = keras.Sequential()
    model.add(layers.LSTM(1, return_sequences=True, input_shape=(1, 1)))
    model.add(layers.Lambda(lambda x: x[:, -1:, :]))
    model.summary()

On an affected setup, this could yield:

    NotImplementedError: Cannot convert a symbolic Tensor (lstm/strided_slice:0) to a numpy array...

To solve this issue, there are a couple of approaches:

Avoid using code sections that require immediate evaluation:

You can redesign your model so that any extraction or reshaping of the output from your LSTM layer occurs within TensorFlow operations. Avoid anything that requires examining the content of the tensor immediately, such as Python loops or if statements that depend on tensor values, because those values do not exist while the model is being built.
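As a minimal sketch of keeping value-dependent logic inside TensorFlow, the example below replaces a Python `if` on tensor contents with `tf.where`. The function name `clip_negative` is a hypothetical choice for this illustration.

```python
import tensorflow as tf


@tf.function
def clip_negative(x):
    # tf.where selects element-wise inside the graph, so no Python `if`
    # ever has to look at the (not yet available) tensor values.
    return tf.where(x < 0.0, tf.zeros_like(x), x)


result = clip_negative(tf.constant([-1.0, 2.0]))
print(result.numpy())  # [0. 2.]
```

The returned tensor is an ordinary eager tensor, so converting it with `.numpy()` after the call is safe.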

Delay Conversion:

Often the conversion to a NumPy array is not needed right after the symbolic tensor is created or while the model is being configured. Postpone it until the tensor holds concrete values.

Use the Layer function:

Instead of converting symbolic tensors to NumPy arrays, subclass `tf.keras.layers.Layer`, which the Keras API provides for defining custom layers when the standard LSTM does not suffice.

For more information about TensorFlow modeling, refer to [TensorFlow documentation](https://www.tensorflow.org/tutorials).

In the realm of machine learning and data science, tensors and NumPy arrays are two fundamental data structures. In most cases, they interact well with each other. However, an error can occur when trying to convert a symbolic tensor to a NumPy array, as in this case: `NotImplementedError: Cannot convert a symbolic Tensor (lstm_2/strided_slice:0) to a numpy array`.

Untangling Tensors:

Tensors are n-dimensional arrays used in TensorFlow for handling all types of data, from a set of floating-point numbers to a batch of images. They are characterized by three properties:

- A data type
- A rank (how many dimensions the tensor has)
- A shape (for example, a tensor with height x and width y has shape (x, y))

Tensors can also be symbolic, describing a computation rather than holding its result, which lets TensorFlow optimize that computation. But this feature has a downside when it comes to working with NumPy arrays.

Incompatibility with NumPy Arrays:

NumPy is a python library that supports large multidimensional arrays and matrices. It was not designed with symbolic computation in mind. As such, it fails to recognize symbolic tensors, which are standard in TensorFlow.

This leads to complexities in converting a symbolic tensor into a numpy array, as elicited by the NotImplementedError in question.

Tackling The Error:

This particular `NotImplementedError` is tied to the way TensorFlow 2.x mixes two modes. It executes eagerly by default, so operations called outside a function wrapper return actual values immediately, whereas Keras works with symbolic placeholders while a model is being built, compiled, or trained. Avoiding the error involves ensuring that no NumPy operation is applied to tensors inside Keras layers.

Consider this erroneous example, which applies a NumPy function to a symbolic Keras tensor:

    import numpy as np
    import tensorflow as tf

    input1 = tf.keras.layers.Input(shape=(1,))
    # Fails: np.sin needs concrete values, but input1 is symbolic
    output = np.sin(input1)
    model = tf.keras.models.Model(inputs=input1, outputs=output)

Prefer something like this, which keeps the computation inside TensorFlow:

    class MyLayer(tf.keras.layers.Layer):
        def __init__(self):
            super(MyLayer, self).__init__()

        def call(self, inputs):
            return tf.math.sin(inputs)

    input1 = tf.keras.layers.Input(shape=(1,))
    output = MyLayer()(input1)
    model = tf.keras.models.Model(inputs=input1, outputs=output)

Remember, TensorFlow offers equivalents of many NumPy functions that work directly on tensors, for example:

    numpy_function = np.sum
    tensor_function = tf.reduce_sum

Careful usage of these libraries is crucial for performing effective machine learning tasks.

The error you are facing is typically thrown when trying to convert a symbolic tensor, perhaps from a TensorFlow LSTM layer, into a NumPy array. Although TensorFlow and NumPy both handle numeric computations efficiently, they do not process tensors in the same way. TensorFlow models work with symbolic tensors, which act like placeholders awaiting actual values at runtime, while NumPy arrays always hold concrete values.

Moreover, with TensorFlow 2.0, eager execution is enabled by default and usually coexists smoothly with TensorFlow's graph components. But issues can emerge when symbolic tensors are passed to other Python libraries such as NumPy, which expect values to be available immediately.

Let’s take a look at some practical solutions.

Solution 1: Using `tf.make_ndarray` and `tf.make_tensor_proto`

You can convert a tensor to a NumPy array using TensorFlow's built-in functions `tf.make_tensor_proto` and `tf.make_ndarray`. Note that `tf.make_tensor_proto` needs concrete values, so this route applies to tensors that already hold data and does not rescue a purely symbolic tensor.

Here's an illustration:

    import tensorflow as tf

    # Assuming 'a' is a tensor with concrete values
    proto_tensor = tf.make_tensor_proto(a)
    np_array = tf.make_ndarray(proto_tensor)

Solution 2: Using `.numpy()`

If eager execution is enabled, consider using the `.numpy()` method to get the NumPy array of a tensor.

    # Assuming 'a' is your tensor
    np_array = a.numpy()

Solution 3: Applying `.eval()`

Another approach is the `.eval()` method, which requires graph mode and an active TensorFlow session. The `eval()` function computes and returns the value of the tensor.

    # Start a TensorFlow session (graph mode, TensorFlow 1.x style)
    sess = tf.compat.v1.Session()
    # Assuming 'a' is a tensor from that session's graph
    np_array = a.eval(session=sess)

Please note that these solutions should only be applied where converting tensors to NumPy makes sense. Often, you don't need this conversion, and performing operations directly on TensorFlow tensors can be more efficient because TensorFlow can optimize the computation. Learn more in the official documentation.

More information about how to solve this problem in specific use cases can also be found in these resources: StackOverflow thread, tensorflow GitHub issue.

Firstly, let's understand what this error means: `NotImplementedError: Cannot convert a symbolic Tensor (lstm_2/strided_slice:0) to a numpy array`. This error appears when a TensorFlow symbolic tensor is used where a NumPy operation expects concrete values. Since NumPy operations are not supported on symbolic tensors, the `NotImplementedError` gets raised.

Now let's move on to the LSTM (Long Short-Term Memory) case and the `strided_slice` tensor named in the message. In most cases, these errors arise from a version mismatch between TensorFlow and the packages around it, such as NumPy or Keras. A widely reported example is NumPy 1.20 or newer installed alongside a TensorFlow 2.x release that was built against NumPy 1.19. As such, upgrading or downgrading your packages could offer a potential solution.

For instance, if you’re working with TensorFlow 2.4 and Keras-applications 1.0.8, try to downgrade Keras-applications to version 1.0.7:

pip install keras-applications==1.0.7

After successfully downgrading, make sure to re-run your LSTM model program:

    from tensorflow.keras.models import Sequential
    from tensorflow.keras.layers import LSTM, Dense

    # X and y are assumed to be existing training arrays
    model = Sequential()
    model.add(LSTM(50, activation='relu', input_shape=(3, 1)))
    model.add(Dense(1))
    model.compile(optimizer='adam', loss='mse')
    history = model.fit(X, y, epochs=300, validation_split=0.2, verbose=0)

In case the error persists despite trying to synchronize the software versions, then it could be due to incompatible operations within your LSTM model which encompasses ‘Strided Slice’. In simple terms, ‘strided slice’ extracts a part of a tensor along some specified axes, with certain strides.

The root cause of the issue could be traced back to how particular lines of code dealing with Strided Slices have been implemented within LSTM layers. To fix this, you might need to directly modify those sections or utilize built-in functions and classes provided by TensorFlow.

Remember that while performing operations involving multiple packages, it is crucial to keep them updated and aligned with each other’s supported versions for maximum compatibility.
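Before changing versions, it helps to know what is actually installed. The sketch below uses only the standard library (`importlib.metadata`, Python 3.8+); the helper name `installed_versions` and the list of package names are assumptions made for this illustration.

```python
from importlib import metadata


def installed_versions(packages):
    """Map each package name to its installed version, or None if absent."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


# Report the packages most often involved in this error.
for name, version in installed_versions(["tensorflow", "numpy", "keras"]).items():
    print(f"{name}: {version or 'not installed'}")
```

Comparing this output with the version requirements listed for your TensorFlow release is a quick way to spot a mismatch before downgrading or upgrading anything.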

For complex scenarios and further resources, don't hesitate to seek advice from active community forums like the TensorFlow StackOverflow page.

While we're focusing on an error thrown by the Keras/TensorFlow library, `NotImplementedError: Cannot convert a symbolic Tensor (lstm_2/strided_slice:0) to a numpy array`, I'll guide you through the possible reasons and the corresponding solutions.

The error stated above precisely refers to the inability of the system to convert a TensorFlow tensor into a numpy array. Why would something like this happen? The answer lies in understanding how TensorFlow operates. TensorFlow 2.x implements a concept called Eager execution which provides an imperative interface for operations inside TensorFlow’s runtime.

In eager execution mode, TensorFlow operations are computed immediately as they’re called within Python which was not the case with older versions. Prior to TensorFlow 2.0, operations used to be defined in a static computation graph that was then run within TensorFlow’s runtime. This made operations incompatible with interactive Python operations.

However, TensorFlow tensors now can be converted into NumPy arrays and vice versa smoothly due to these developments. Yet, if the tensors you’re trying to operate on are still part of yet-to-be-run computations or ‘symbolic’ in nature, the conversion to numpy arrays fails, thus resulting in the aforementioned error.
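The difference between the two modes can be observed directly. This is a minimal sketch assuming TensorFlow 2.x with default settings; the names `double` and `trace_modes` are hypothetical and chosen for this illustration.

```python
import tensorflow as tf

a = tf.constant([1.0, 2.0])
print(tf.executing_eagerly())  # True at the top level in TensorFlow 2.x
print(a.numpy())               # eager tensors convert without trouble

trace_modes = []


@tf.function
def double(x):
    # This body runs while the function is traced into a graph;
    # at that point x is symbolic and eager execution is off.
    trace_modes.append(tf.executing_eagerly())
    return x * 2.0


result = double(a)
print(trace_modes)     # [False]
print(result.numpy())  # the returned tensor is concrete again
```

The conversion is safe before the function is called and after it returns, but not inside the traced body, which is where symbolic tensors live.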

By assessing the communication between numpy and TensorFlow, two primary strategies can be implemented to resolve this issue:

Firstly, values can be extracted from the symbolic tensor using TensorFlow operations before attempting any conversion. For instance, if you have an LSTM model named `model`, the following code can be used:

    from keras import backend as K

    get_layer_output = K.function([model.layers[0].input], [model.layers[1].output])
    layer_output = get_layer_output([x])[0]

Here,

- `K.function` creates a function that returns the output of a specified layer.
- `x` is the input you want to feed to your network.
- You get the output as a list even when there is only one output returned. Hence, `[0]` at the end is used to extract the actual array.

Secondly, because NumPy cannot work with symbolic tensors, it is advisable to ensure all TensorFlow functions run in eager mode. Avoid using NumPy functions on TensorFlow tensors whenever possible.

To force eager execution across the application, the following snippet can be applied at the beginning:

    import tensorflow as tf

    # Set eager execution mode (already the default in TensorFlow 2.x)
    tf.compat.v1.enable_eager_execution()

These are the high-level approaches to tackle such issues in your coding environment. Remember debugging is a paradoxical journey where the frustrations of yesterday become the success stories of tomorrow.


Handling this error, `NotImplementedError: Cannot convert a symbolic Tensor (lstm_2/Strided_Slice:0) to a numpy array`, requires taking specific steps after it appears to ensure your code is effectively debugged.

To comprehend this error, it's essential to appreciate that TensorFlow 2.x allows Keras operations on symbolic tensors and NumPy operations on eager tensors. Thus, when you perform a NumPy operation on a symbolic tensor, you can encounter this error.

A practical way to debug this error is to review your code progressively around the point where the exception is raised. Model-building code in TensorFlow 2.x runs line by line like any other Python code, so you can inspect the tensor at that specific point and check what kind of tensor it is.

    tensor = layer.output  # `layer` is any layer of your model
    print(type(tensor))  # Shows whether it is a symbolic (Keras) tensor or an eager tensor

The common areas to look into include:

- The data used: Does it involve a combination of symbolic tensors and real-valued tensors?
- Function calls: Do they involve operations only applicable to eager tensors being performed on symbolic tensors?
- Updating TensorFlow/Keras: The problem may stem from using outdated versions of these frameworks.

Let’s dive deeper into each specific approach:

Data Used:

Check on what your model uses as inputs. If you’re combining symbolic tensors (Keras’ way of operating over batch tensors) with real valued tensors (numpy array compatible tensors), then it will lead to this issue. Make sure to unify the type of data used so that operations performed don’t end in a conflict.

Function calls:

Look for any method or function call that applies NumPy operations to symbolic tensors. An example is the `np.argmax()` function, which won't work on symbolic tensors.

Instead, use methods that can operate on symbolic tensors, such as `K.argmax()` (that is, `tf.keras.backend.argmax`), which is the counterpart of `np.argmax()`.

    # Implementation example
    argmax_values = tf.keras.backend.argmax(output_tensor, axis=-1)
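To show that the TensorFlow and NumPy versions agree on concrete data, here is a small sketch using `tf.argmax`, TensorFlow's core equivalent. The sample `scores` array and the function name `predicted_class` are assumptions made for this illustration.

```python
import numpy as np
import tensorflow as tf

scores = np.array([[0.1, 0.7, 0.2],
                   [0.8, 0.1, 0.1]], dtype=np.float32)


@tf.function
def predicted_class(t):
    # tf.argmax works on the symbolic tensor seen inside the traced function.
    return tf.argmax(t, axis=-1)


tf_result = predicted_class(tf.constant(scores)).numpy()
np_result = np.argmax(scores, axis=-1)  # fine here: `scores` is a concrete array

print(tf_result)  # [1 0]
print(np_result)  # [1 0]
```

The rule of thumb is to use the TensorFlow function wherever the tensor may be symbolic, and to reserve the NumPy function for data that is already concrete.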

Updating TensorFlow/Keras:

At times, mismatch between versions might cause conflicts, hence leading to errors. You can try updating your TensorFlow and Keras packages to their most recent stable version.

Code to update TensorFlow and Keras:

    pip install --upgrade tensorflow
    pip install --upgrade keras

Also, make sure the TensorFlow version you install matches your CUDA version.

These approaches to the `NotImplementedError: Cannot convert a symbolic Tensor (lstm_2/Strided_Slice:0) to a numpy array` error should help smooth out your debugging process. Essentially, the biggest takeaway is understanding the distinction between symbolic and eager tensors and adjusting your code and operations accordingly.

For further insight, I recommend the TensorFlow guide on working with eager execution and the Keras guide on mixing computation and symbolics.

The `NotImplementedError: Cannot convert a symbolic Tensor (lstm_2/strided_slice:0) to a numpy array` message is a common error that appears when you try to convert a TensorFlow/Keras tensor, which in this case could be the output of an LSTM layer in your neural network model, into a NumPy array.

This issue is tied to how TensorFlow 2.0 and later run operations eagerly, executing them immediately as they are called, a change from the 1.x versions, where operations had to be built into a graph first. However, NumPy conversion cannot deal with symbolic tensors, such as those created while a Keras model is built or while a `tf.function` is traced, because they have no concrete values.

There are usually two main scenarios that may give rise to this error:

- If you are trying to print a tensor's value during symbolic execution, for example while your model is being built or traced for training.
- If you are explicitly trying to convert a tensor to a NumPy array.

Here’s how you might resolve the “NotImplementedError”.

If you are encountering this issue during model building or training, it's advisable not to print tensor values during these phases or attempt to convert them to NumPy arrays. Instead, try using TensorFlow's own `tf.print` function, which can handle symbolic tensors, or use Keras callbacks to log information during training.

    import tensorflow as tf

    class MyCustomCallback(tf.keras.callbacks.Callback):
        def on_epoch_end(self, epoch, logs=None):
            # Print every model variable at the end of each epoch
            for var in self.model.variables:
                tf.print(var.name, var)

    model.fit(..., callbacks=[MyCustomCallback()])

However, if you need to convert tensors to NumPy arrays after your model has been trained, call the `.numpy()` method on the tensor object:

    import numpy as np

    tensor = ...  # your tensor here (it must hold concrete values)
    numpy_array = tensor.numpy()
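As a sketch of where concrete values come from, the example below builds a tiny model and obtains arrays in two ways. The layer sizes and the all-zeros `batch` are arbitrary assumptions made for this illustration, and the model is left untrained to keep the example short.

```python
import numpy as np
import tensorflow as tf

# A tiny functional model; the tensors used to build it are symbolic.
inputs = tf.keras.Input(shape=(4,))
outputs = tf.keras.layers.Dense(2)(inputs)
model = tf.keras.Model(inputs, outputs)

batch = np.zeros((3, 4), dtype=np.float32)

# Calling the model on real data returns a tensor with concrete values.
eager_out = model(batch)
as_array = eager_out.numpy()

# predict() goes one step further and returns a NumPy array directly.
predicted = model.predict(batch, verbose=0)

print(as_array.shape)   # (3, 2)
print(type(predicted))
```

In both cases the conversion happens only after data has flowed through the model, which is the point at which the values exist.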

In general, the key is understanding that TensorFlow tends to work best with its own native data types and functions rather than with other libraries’ data types. Working within the confines of TensorFlow’s ecosystem can usually prevent errors like the one discussed herein.