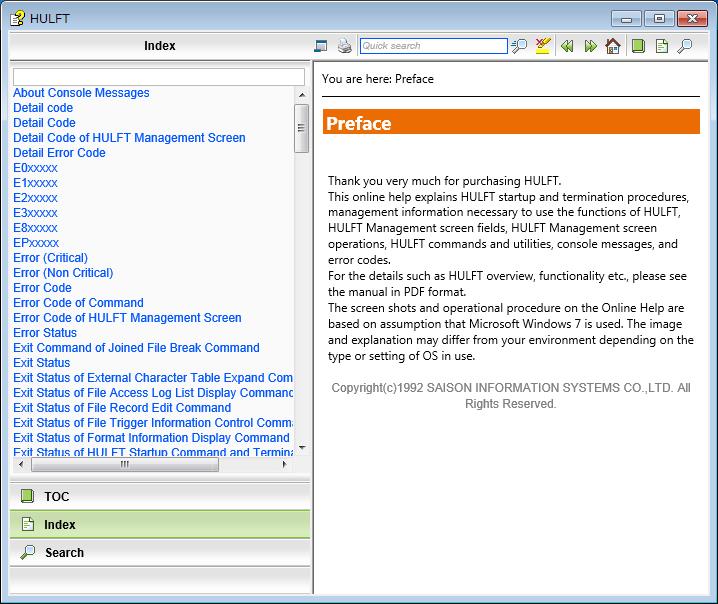

| Reason | Solution |
|---|---|
| Network Latency | Consider optimizing the network conditions. |
| Inefficient Code | Improve code efficiency using caching and other techniques. |
| Server Response Time | Contact server host or switch to faster server. |
| Timeout & Retry settings not optimized | Correctly set timeout and retry parameters whilst making requests. |
| SSL/TLS Handshake Delays | Minimize SSL negotiation overheads by reusing SSL sessions. |

Let’s dissect these points one by one for better understanding.

Network Latency

The most common issue slowing down HTTP requests is simply network latency. If your machine is geographically far from the server you’re connecting to, or if there is general internet congestion, your request could take longer than usual to return. Optimizing network conditions can help improve the speed of your requests.

Inefficient Code

Another possible reason for slow requests may lie in your Python script itself. If the script is written inefficiently, this can slow down the request process. Best practices include using caching when you can and taking advantage of concurrent.futures for running many requests at once across different threads.
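As a rough illustration of the caching idea, here is a minimal sketch of a time-limited in-memory cache. The `TTLCache` class and the `fetch` callable are hypothetical names introduced for this example; `fetch` stands in for whatever function performs the real request (for instance a small wrapper around `requests.get`).

```python
import time


class TTLCache:
    """Tiny in-memory cache that forgets entries after `ttl` seconds."""

    def __init__(self, fetch, ttl=60.0, clock=time.monotonic):
        self._fetch = fetch   # hypothetical callable doing the real (slow) request
        self._ttl = ttl
        self._clock = clock   # injectable so the cache can be tested without waiting
        self._store = {}      # url -> (expires_at, value)

    def get(self, url):
        now = self._clock()
        hit = self._store.get(url)
        if hit is not None and hit[0] > now:
            return hit[1]     # still fresh: skip the network entirely
        value = self._fetch(url)
        self._store[url] = (now + self._ttl, value)
        return value
```

This only helps when the same URL is requested repeatedly and slightly stale data is acceptable; the right `ttl` depends on how often the remote data changes.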

Server Response Time

Slow server response times can also be a contributing factor to slow Python requests. This could be due to the server simply being overloaded, underpowered, or misconfigured. You might want to contact your server host about increasing your server capabilities or consider switching to a more capable provider.

Timeout & Retry Settings

In some situations, a Python script may be waiting unnecessarily long due to poorly optimized timeout and retry settings. It’s important to correctly set your timeout and retry parameters when making requests to avoid wasting valuable processing time on unsuccessful attempts.

For example, tweaking the timeout settings:

response = requests.get('https://yoururl.com', timeout=1)

SSL/TLS Handshake Delays

HTTPS requests incur extra latency from the SSL/TLS handshake, because certificates must be verified and keys exchanged before any data is sent. Reusing an already-established connection avoids repeating that handshake for every request, which can give a significant speed-up when you call the same host repeatedly. In the Python Requests library, a Session object does this for you:

s = requests.Session()

r = s.get('https://yoururl.com')

Dealing with slow Python requests can feel like a baffling experience, partly because there are several factors that could potentially contribute to the slowness. The key is to understand where the actual problem lies and then formulate a fix accordingly. Here are some common reasons for delays in HTTP or HTTPS requests and ways to optimize the speed.

Issue 1: Network Latency

One of the most common causes of slow HTTP or HTTPS requests is network latency. This delay occurs due to the physical distance between your server and client or due to poor internet connection.

You could use Python’s `requests` library to measure the time taken to get a response:

import requests

import time

start_time = time.time()

response = requests.get('https://www.example.com')

end_time = time.time()

timer = end_time - start_time

print(f'Time: {timer}')

This code measures the total time taken by the request. If the value is significantly high, this could indicate an issue with network latency.
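A single measurement can be noisy, so it may help to repeat the call a few times and compare the spread. The sketch below is one way to do that; `time_calls` and `summarize` are hypothetical helper names, and `fn` is any zero-argument callable, for example `lambda: requests.get('https://www.example.com')`.

```python
import statistics
import time


def time_calls(fn, repeats=5):
    """Call fn() `repeats` times and return the per-call durations in seconds."""
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()  # monotonic, high-resolution timer
        fn()
        durations.append(time.perf_counter() - start)
    return durations


def summarize(durations):
    """Reduce a list of durations to min / median / max."""
    return {
        "min": min(durations),
        "median": statistics.median(durations),
        "max": max(durations),
    }
```

If the first call is much slower than the rest, connection setup (DNS, TCP, TLS) is a likely suspect; if every call is slow, look at the network path or the server. Requests also exposes `response.elapsed`, which covers the time from sending the request until the response headers are parsed.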

Issue 2: Server Side Processing Time

Another possible reason why your requests might be taking longer than expected is server-side processing time. If the server your program is hitting requires a large amount of time to process data before it returns a response, the entire operation will seem slow.

If your application is hitting an API endpoint that has rate limiting, you may experience slowed responses as well. Rate limits help protect against spammy behaviors or DDoS attacks and you should hence respect these limits.
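Many rate-limited APIs signal how long to wait through a `Retry-After` response header, often alongside a 429 status. The following is a minimal sketch of reading it; `retry_after_seconds`, its `default`, and its `cap` are assumptions made for this example, and only the numeric form of the header is handled.

```python
def retry_after_seconds(headers, default=1.0, cap=60.0):
    """Return how many seconds to wait before retrying, based on Retry-After.

    `headers` is any mapping of header names to values. Only the
    "number of seconds" form is parsed; the HTTP-date form falls back
    to `default` in this sketch.
    """
    raw = headers.get("Retry-After")
    if raw is None:
        return default
    try:
        seconds = float(raw)
    except ValueError:
        return default
    # Clamp to a sane range so a bad header cannot stall the program.
    return max(0.0, min(seconds, cap))
```

With Requests you could pass `response.headers` directly; it is a case-insensitive mapping, whereas a plain dict needs the exact header spelling.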

Issue 3: DNS Lookups

Another point of consideration could be time spent on DNS lookups. Each time you make a request using a URL, a DNS lookup is performed to resolve the domain to an IP address.

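The Requests library does not cache DNS results itself. Repeat lookups are usually fast because the operating system or a local resolver caches them, and a Session that reuses an open connection skips the lookup entirely. The very first call to a host may still see a slight increase in latency.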
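To check whether name resolution is a meaningful part of the delay, you can time the lookup on its own with the standard library. This is a minimal sketch; `dns_lookup_seconds` is a hypothetical helper name, and the default port of 443 is an assumption for HTTPS hosts.

```python
import socket
import time


def dns_lookup_seconds(host, port=443):
    """Time a single address lookup for `host` using the system resolver."""
    start = time.perf_counter()
    socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)
    return time.perf_counter() - start
```

Calling it twice for the same host and comparing the two numbers gives a rough idea of how much a cold lookup costs on your system.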

Optimizing Python Requests

So, how do we increase the speed of Python HTTP requests? Here are some strategies:

Use Sessions: A Session object in Python’s requests module maintains a pool of persistent connections. For example:

import requests

session = requests.Session()

response = session.get('https://www.example.com')

A Session object uses urllib3’s connection pooling. So if you’re making several requests to the same host, the underlying TCP connection will be reused, which can result in a significant speed-up.

Bypassing DNS resolution: DNS resolution can be bypassed by including entries in your /etc/hosts file for commonly used servers.

Parallelism: Instead of sending requests one by one, incorporate parallelism into your networking code. Use the standard library’s concurrent.futures, or an asynchronous framework such as Tornado or aiohttp, to manage multiple HTTP requests concurrently.
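As a sketch of the parallelism strategy using only the standard library, the helper below runs a fetch function over many URLs on a thread pool. `fetch_all` is a hypothetical name, and `fetch` is whatever callable performs one request, for example the `get` method of a shared `requests.Session`.

```python
from concurrent.futures import ThreadPoolExecutor


def fetch_all(fetch, urls, max_workers=8):
    """Run fetch(url) for every URL on a thread pool, preserving input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() yields results in the order of `urls`, not completion order
        return list(pool.map(fetch, urls))
```

Threads suit this workload because each one spends most of its time waiting on the network. A modest `max_workers` is usually enough, and it keeps the load on the target server reasonable.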

In conclusion, speed issues with Python requests can have various origins and a number of potential solutions exist. These methods must be carefully evaluated and implemented based on their feasibility and sustainability for the overall application.

There could be several reasons why HTTP or HTTPS requests in Python take a long time to complete. When you make an HTTP or HTTPS request using the `requests` library in Python, it can occasionally become slow due to network issues, server-side problems, issues with your code, or even due to inefficiencies in the Python Requests library itself.

Here are the most common causes:

1. Network Issues:

If the network in which you’re operating has a slow connection speed, then it will inevitably result in slower HTTP or HTTPS requests. This follows the law of physics: if the physical distance between client and server is large or the link quality is poor, more time will be consumed in transmitting data.

2. Server-side problems:

Another aspect that might slow down your request completion time could be issues on the server side to which you’re making the HTTP/HTTPS requests. The server may have its resources fully occupied or may internally process the request in a slow manner.

3. Issues with your script:

Sometimes the delay could originate from within your Python script itself. This could be due to unoptimized code or inefficient handling of the data transmitted during these requests. If your program includes inefficient processing post the HTTP/HTTPS request, it might seem like the request is taking longer than normal.

4. Python Requests Library Inefficiency:

In some cases, overhead in the Requests library or in Python itself contributes to slower completion times, usually when you are handling large amounts of data. For most workloads the network and the server dominate, so measure before rewriting anything. If profiling shows that processing speed is the bottleneck, consider moving that part of your code to a faster language like C or Go.

You can potentially speed up your HTTP or HTTPS requests by doing the following:

1. Increase the Connection Speed:

The faster your internet connection, the quicker your HTTP/HTTPS requests will complete. While you cannot control the internet speed at all times, always check if low network speeds could be the factor reducing the performance of your script.

2. Optimize Your Code:

Where possible, streamline your code to consume less memory and execute more quickly. Consider techniques such as batch processing of data, threading, and asynchronous programming. For instance, instead of using the `requests` module for multiple HTTP requests, use `aiohttp`, which supports asynchronous requests and thus can significantly increase the efficiency of your program. Here’s a basic example:

import aiohttp
import asyncio

async def fetch(session, url):
    # Reuse the shared session so connections are pooled across requests
    async with session.get(url) as response:
        return await response.text()

async def main():
    urls = ['http://python.org', 'https://google.com', 'http://someapi.com']
    async with aiohttp.ClientSession() as session:
        tasks = []
        for url in urls:
            tasks.append(fetch(session, url))
        results = await asyncio.gather(*tasks)
        # process results here

# python 3.7+
asyncio.run(main())

3. Use a Faster Language for Processing:

Consider using a faster language to process the data received from HTTP/HTTPS requests. Python is not the most efficient language when it comes to dealing with a large quantity of data. Despite being easier to use, Python trades off some speed. To resolve this, you can integrate parts of your Python program that involve intensive data processing with faster languages like C or Go.


The slow execution speed of Python requests, particularly those involving HTTP or HTTPS, can be attributed to several factors. In particular, these performance issues can result from network latency, server response time, and the way you’ve structured your programming code.

import requests

def send_request():
    response = requests.get('https://example.com')
    print(response.status_code)

Network Latency

One major element affecting the efficiency of your Python requests is network latency: the time it takes data to travel between the client making the request and the server fulfilling it. If the server is geographically distant, latency increases because packets travel longer distances. Even if your code is optimized, network latency might still slow down your request.

Server Response Time

Another vital component that could slow down your Python requests is the server response time. If the server processing your request is slow, it directly impacts the time taken for request completion. You may optimize your code excellently, but if the target server’s response time is high, it’ll still take a substantial while to complete your request.

Blocking vs Non-blocking Requests

Python’s ‘requests’ library works on a blocking model: when you make a request, your program waits (blocks) until it receives a response from the server before proceeding. For programs making numerous requests, this can significantly slow down the overall process.

Consider implementing non-blocking requests using libraries such as gevent or tools like concurrent.futures. These provide concurrent execution which means the program makes a request and continues with other operations while waiting for the response:

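from gevent import monkey
monkey.patch_all()  # patch before importing requests so its sockets cooperate with gevent

import gevent
import requests

urls = ['https://example1.com', 'https://example2.com', 'https://example3.com']

def fetch(url):
    return requests.get(url).status_code

jobs = [gevent.spawn(fetch, url) for url in urls]
gevent.joinall(jobs, timeout=5)

for job in jobs:
    print(job.value)  # None if the job failed or did not finish in time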

Payload Size

If you’re sending or receiving large amounts of data, it might be slowing down your Python requests. Large amounts of data require more time for transmission and greater processing power. Thus, consider compressing the data where possible.
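To get a feel for how much compression can save, the sketch below gzips a JSON payload with the standard library and restores it. `compress_json` and `decompress_json` are hypothetical helper names. Sending a compressed request body only works if the server accepts it (typically signalled with a `Content-Encoding: gzip` header), which is an assumption you would need to verify for your API.

```python
import gzip
import json


def compress_json(payload):
    """Serialize `payload` to JSON and return (raw_bytes, gzipped_bytes)."""
    raw = json.dumps(payload).encode("utf-8")
    return raw, gzip.compress(raw)


def decompress_json(blob):
    """Inverse of the gzip step: bytes back to a Python object."""
    return json.loads(gzip.decompress(blob).decode("utf-8"))
```

Repetitive data such as lists of similar records tends to compress well, while small or already-compressed payloads gain little. On the receiving side, Requests transparently decodes gzip-encoded responses.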

Timeouts and Retries

If the target server does not respond, a request with no timeout can hang indefinitely, so it is crucial to define appropriate timeout values.

Retries can also cause delays. By default, Requests does not retry failed requests; retries happen only when you configure them, for example through an HTTPAdapter. Setting a hard limit on retries keeps your program from spending too long on a server that is not going to answer.

import requests
from requests.adapters import HTTPAdapter
from requests.exceptions import ConnectionError

session = requests.Session()
adapter = HTTPAdapter(max_retries=3)  # retry failed connections up to 3 times
session.mount('http://', adapter)
session.mount('https://', adapter)

try:
    session.get('https://example.com')
except ConnectionError as ce:
    print(ce)
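If you prefer to keep the retry limit and the waiting time visible in application code, a small wrapper can do the same job. This is a minimal sketch rather than a replacement for the adapter approach; `call_with_retries` and its parameters are hypothetical names, and `fn` is any zero-argument callable that performs the request.

```python
import time


def call_with_retries(fn, attempts=3, base_delay=0.5,
                      retry_on=(Exception,), sleep=time.sleep):
    """Call fn() up to `attempts` times, doubling the wait after each failure."""
    for attempt in range(1, attempts + 1):
        try:
            return fn()
        except retry_on:
            if attempt == attempts:
                raise  # hard limit reached: surface the last error
            # Exponential backoff: base_delay, 2 * base_delay, 4 * base_delay, ...
            sleep(base_delay * 2 ** (attempt - 1))
```

Passing a narrow `retry_on`, such as the connection and timeout exceptions from Requests, avoids retrying errors that will never succeed. `HTTPAdapter` also accepts a urllib3 `Retry` object for `max_retries` if you want backoff handled at the transport level.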

By understanding the technical concepts behind how Python requests execute, we can write efficient code that minimizes the chances of encountering time-consuming HTTP or HTTPS requests. The strategies include accounting for network latency and server response times, using non-blocking requests, managing payload size, and setting timeouts and retries properly. Always remember to test your application thoroughly to confirm the improvement.

When Python Requests takes too long to complete HTTP or HTTPS requests, one effective solution is optimizing connection pooling. Connection pooling means keeping a set of open network connections so that future requests can reuse them instead of opening new ones, which can improve the speed of your Requests calls.

A key tool for connection pooling in Python is the Requests library’s `Session` object. This object persists certain parameters across requests and can reuse underlying TCP connections, resulting in a significant performance gain.

Here is an example of how you can use the `Session` object:

import requests

session = requests.Session()

response = session.get('https://www.example.com')

Because the Session keeps connections open, repeat requests to the same host skip DNS resolution as well as the TCP and SSL handshakes, leading to faster requests.

Sessions do have a downside:

* Since sessions keep connections active even when not in use, they may consume more resources than necessary.

To address this, the HTTPAdapter class in Requests provides several options for tuning the connection pool used by the `Session` object:

* `pool_connections`: The number of per-host connection pools to cache.

* `pool_maxsize`: The maximum number of connections kept alive in each pool. Increasing this value can speed up Requests calls when many threads share one session, because a pooled connection is more likely to be available for each outgoing request.

* `pool_block`: If set to True, a caller that needs a connection while all pooled connections are in use blocks until one is freed. If left at False, the default, an extra connection is opened instead and discarded after use, which adds connection setup overhead.

Here’s how you can tune these settings:

import requests

from requests.adapters import HTTPAdapter

session = requests.Session()

adapter = HTTPAdapter(pool_connections=100, pool_maxsize=100, max_retries=3, pool_block=True)

session.mount('http://', adapter)

session.mount('https://', adapter)

response = session.get('https://www.example.com')

Remember that multiple elements of your system, such as network configuration, DNS server speed, and target server response times, contribute to the overall response time of your HTTP/HTTPS requests. Profile your application properly to identify the real bottlenecks.

Referencing Python requests documentation for advanced transport adapters might provide additional insights on how to tweak these settings according to your specific needs.

By optimizing how the `Session` object pools connections, you can make your Python Requests calls run quicker and become less prone to slowing down under load. At the same time, fine-tuning these settings requires careful consideration of both your specific application requirements and your broader system environment. As ever in coding, there’s no such thing as a “one-size-fits-all” solution.

When it comes to Python requests, especially HTTP or HTTPS requests, one of the challenges you might encounter is slow performance: a request might take too long to complete. In such situations, setting the timeout parameter keeps a slow or unresponsive server from stalling your program.

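Let’s start by understanding what a timeout in HTTP requests with Python is. Essentially, a timeout limits how long the client will wait for a response from the server. When working with Python’s popular `requests` library, the `timeout` argument is either a single number, applied to both the connect and the read phase, or a (connect, read) tuple. It is not a limit on the total duration of the request: the read timeout bounds how long the client waits between bytes from the server.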

Adjusting your timeouts can improve your request performance in several ways:

- Saves Resources: If a connection takes too long, it often indicates an underlying problem. Lingering connections use system resources that could otherwise be used elsewhere.

- Saves Time: Rather than waiting indefinitely for a slow connection, you can terminate the request after a reasonable period and spend that time on more productive tasks.

- Error Debugging: Immediate feedback about connectivity issues due to timeout exceptions enables earlier error detection and debugging.

By default, Python’s `requests` module applies no timeout, so a request can wait indefinitely. Here is how you can add a timeout to your requests:

import requests

response = requests.get('https://www.yoursite.com', timeout=5)

In the example above, if a connection cannot be established within 5 seconds, a `requests.exceptions.ConnectTimeout` exception is raised; if the server then goes 5 seconds without sending data, a `requests.exceptions.ReadTimeout` exception is raised. Both are subclasses of `requests.exceptions.Timeout`.

If you want to separately set the connect and read timeouts, you can supply a tuple as the timeout parameter. Here is an example:

import requests

response = requests.get('https://www.yoursite.com', timeout=(2, 5))

This code waits up to 2 seconds to establish a connection and then up to 5 seconds for the server to send data. If either limit is exceeded, a `requests.exceptions.ConnectTimeout` or `requests.exceptions.ReadTimeout` exception is raised, respectively.
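The `timeout` argument in Requests bounds the connect and read phases separately, not the whole exchange, so a server that trickles data slowly can still keep a call running for a long time. If you need a cap on total duration, one option is to wait on the call from another thread. The sketch below assumes a hypothetical helper `call_with_deadline`; `fn` is any zero-argument callable that performs the request.

```python
import concurrent.futures


def call_with_deadline(fn, deadline_seconds):
    """Run fn() in a worker thread and wait at most `deadline_seconds` for it.

    Raises concurrent.futures.TimeoutError if the deadline passes. The worker
    thread is not killed; it keeps running in the background until fn() ends.
    """
    executor = concurrent.futures.ThreadPoolExecutor(max_workers=1)
    future = executor.submit(fn)
    try:
        return future.result(timeout=deadline_seconds)
    finally:
        # Do not block here waiting for a call that may still be in flight.
        executor.shutdown(wait=False)
```

Because the abandoned call continues in the background, it is still worth passing a regular `timeout` to the request itself so that the worker thread eventually finishes.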

Remember, adjusting timeouts is only a part of the solution. Other factors affecting the performance of HTTP/HTTPS requests include network latency, server response time, and DNS resolution. Therefore, thorough troubleshooting is needed to identify the root cause of slow requests.

For a deeper dive into optimizing request performance in Python, you might want to refer to a detailed guide on Python Requests that also covers timeouts and exception handling.

Working with Python and handling HTTP or HTTPS requests can sometimes feel arduous, especially if your code seems to be running slower than expected. If you’re experiencing slow completion times, it may be because the Python Requests library operates synchronously: each request has to finish before the next one starts, which can significantly increase total execution time. The good news is that we can implement an asynchronous approach using asyncio, which can help speed up HTTP/HTTPS requests.

asyncio is Python’s built-in module for asynchronous I/O, most commonly network communication such as calling web APIs over HTTP. It is built on coroutines, a generalization of subroutines designed for cooperative (non-preemptive) multitasking, which lets a single thread make progress on many tasks while each one waits on I/O.

Now, let’s get into how we can harness the power of AsyncIO to make our Python HTTP/HTTPS requests more efficient:

The first step is to install the `aiohttp` package, which serves as an async alternative to the requests package. asyncio itself ships with Python, so there is nothing else to install.

pip install aiohttp

Next, we create a co-routine function using `async def` that performs the GET request:

import aiohttp

async def get_url(url):
    async with aiohttp.ClientSession() as session:
        async with session.get(url) as response:
            return await response.text()

The logic of the `get_url` function is quite simple: we create a client session, request the content of the URL, and use `await` to wait for the HTTP response asynchronously. While a coroutine is suspended at an `await`, the event loop is free to run other coroutines, so waiting on I/O does not block them.

To perform multiple requests, we loop through our list of URLs and call our `get_url` function each time:

import asyncio

urls = ["http://example.com", "http://example1.com", "http://example2.com"]

async def main():
    tasks = []
    for url in urls:
        tasks.append(get_url(url))
    return await asyncio.gather(*tasks)

results = asyncio.run(main())

In the above snippet, we first create an empty list called `tasks`. For every URL, we append a new task (our `get_url` function) to this list. Then within our main function, we can gather all tasks and execute them concurrently with `asyncio.gather()`. Finally, `asyncio.run(main())` wraps up everything, executes our coroutine and returns the completed results.

By adapting this method, you can efficiently send multiple HTTP/HTTPS requests without each having to wait for the last to be completed, ultimately resulting in faster script execution times.


Please note: While using Asyncio with aiohttp can greatly increase the speed of your API calls, it’s important to be aware of rate limits imposed by the server you’re hitting. Making too many requests too quickly can cause your IP to be blocked! Therefore, always check the API documentation for usage guidelines before sending out large volumes of requests.
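One common way to stay within such limits is to cap how many requests are in flight at once. The sketch below does this with `asyncio.Semaphore`; `gather_limited` is a hypothetical helper name, `coro_fn` is any coroutine function taking one item (for example a function that fetches one URL with aiohttp), and the default limit of 5 is an arbitrary assumption.

```python
import asyncio


async def gather_limited(coro_fn, items, limit=5):
    """Run coro_fn(item) for every item with at most `limit` running at once."""
    semaphore = asyncio.Semaphore(limit)

    async def run_one(item):
        async with semaphore:  # waits here while `limit` calls are in flight
            return await coro_fn(item)

    # gather() returns results in the same order as `items`
    return await asyncio.gather(*(run_one(item) for item in items))
```

This bounds concurrency rather than requests per second, so an API with a strict per-second quota may also need a delay between calls.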

Properly used, asyncio can lead to significant performance gains, making Python’s HTTP/HTTPS requests faster and more efficient. When your code needs to issue many requests quickly, it can be a great option.

The gevent library can also boost the speed of HTTP/HTTPS requests in Python. When you’re dealing with numerous requests and find that Python’s Requests library is taking too long to complete them, gevent can shorten overall processing time.

Before we dive into how gevent improves speed, some terminology. Traditionally, when a program needs to work on two tasks at once, it uses threads. However, threads can be expensive in terms of memory and require careful synchronization to prevent conflicts. gevent provides a lighter alternative in the form of greenlets. A greenlet is a lightweight, cooperatively scheduled unit of execution: each has its own execution flow, but all greenlets run inside a single OS thread, share the same global state, and switch only when one of them waits on I/O.

Interestingly, the gevent library revolves around two primary concepts:

- Greenlets for manageable micro-threads,

- An event loop (libev or libuv) for asynchronous I/O.

Let’s look at an example where we use gevent along with Python’s Requests library:

from gevent import monkey

# Patch the standard library first, before requests is imported,
# so that its blocking socket calls cooperate with gevent
monkey.patch_all()

import gevent
import requests

def print_requests(url):
    res = requests.get(url)
    print(res.text)

urls = ["http://www.google.com", "https://www.python.org",
        "https://docs.python.org/3/library/gevent.monkey.html"]

# spawn greenlets
greenlets = [gevent.spawn(print_requests, _url) for _url in urls]

# Wait for them to finish
gevent.joinall(greenlets)

In this code snippet, the main feature at play is `monkey.patch_all()`. It modifies Python’s standard library modules to become ‘gevent-friendly’, so that operations that would ordinarily block (like network communication via requests) yield to other greenlets instead. This allows our code to continue executing without being blocked.

Ultimately, gevent addresses the speed issue by enabling concurrent execution: multiple requests can be in flight almost ‘simultaneously’, significantly reducing total completion time. Instead of waiting for each request to finish before starting the next, `gevent.spawn()` starts a new greenlet for each HTTP request, and they all wait on I/O concurrently. As a result, no time is wasted idling between requests.

A note of caution: make sure you have installed gevent from PyPI using the command `pip install gevent` before trying to run the above script.

With the right set of tools, including gevent, we can tackle latency issues and improve overall system performance. For a full list of gevent modules and capabilities, visit its official documentation site.

If your Python requests are taking an overly long time to fulfill HTTP or HTTPS requests, various solutions can be investigated to boost efficiency.

| Problem Cause | Solution |
|---|---|
| Inefficient Network Connection | Check if your network connection is stable. Unstable connectivity might result in slower responses. |
| Inefficient Use of Sessions feature | Make use of Sessions in your Python script, which will reuse the TCP connection instead of creating a new one on every request. |
| Long Server Response Time | Depending on your ability, consider negotiating with your chosen service provider for quicker server responses. |
| Large Dataset Size | Fine-tune how you’re interacting with the data; break it down into small chunk items and process them accordingly rather than in bulk. |

Sometimes the problem is simply poor internet connectivity, so first make sure your connection is strong and stable. If your Python script doesn’t already use the ‘Session’ functionality, try incorporating it: it enables connection pooling, which reuses existing connections rather than creating a new one for each HTTP request.

Here is a simple code example of using Sessions in Python requests:

import requests

s = requests.Session()

s.get('https://httpbin.org/get')

On top of those factors, check the speed of the servers – some servers naturally have longer response times. Depending on the amount of control you have over these external factors, it may be beneficial to make inquiries to your service provider about improving this aspect. Lastly, examine your data size – large datasets can often slow down the process considerably. Instead of reading all the data once, divide it into small chunks and process it as batches.
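The batching advice above can be sketched with a small generator that yields fixed-size chunks from any iterable. `chunked` is a hypothetical helper name, and the batch size you pick is an assumption that depends on your data and memory budget.

```python
from itertools import islice


def chunked(iterable, size):
    """Yield successive lists of up to `size` items from `iterable`."""
    if size < 1:
        raise ValueError("size must be at least 1")
    iterator = iter(iterable)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return  # input exhausted
        yield batch
```

Each batch can then be processed or sent on its own, so memory use stays bounded. For large downloads, Requests offers a similar pattern through `stream=True` together with `response.iter_content(chunk_size=...)`.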

For continued research and understanding, further resources can be found at python’s own documentation on Session Objects.

By combining these methods, not only will you see improvements in the response times of your Python requests but you’d also open the door to more efficient programming habits.