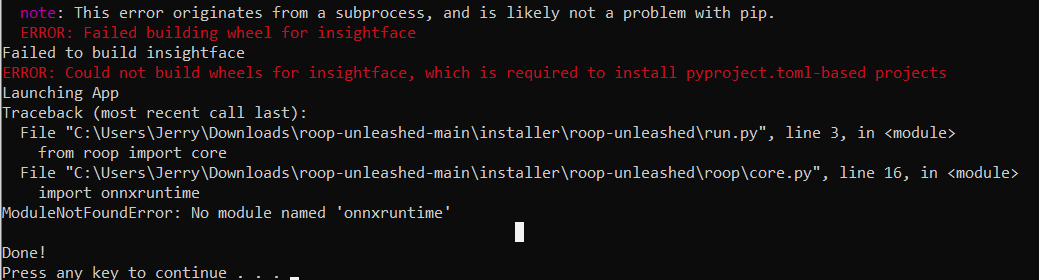

Now let’s turn our focus to the specific error.

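| Error | Description | Possible Reasons | Recommended Solutions |
|---|---|---|---|
| `CUBLAS_STATUS_ALLOC_FAILED` when calling `cublasCreate(handle)` | Typically returned when the API function `cublasCreate()` cannot allocate the required memory on the GPU. | Lack of free GPU memory; a memory leak; improper thread synchronization | Monitor GPU memory with `nvidia-smi`; free GPU allocations after use; synchronize with CUDA events and streams |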

`CUBLAS_STATUS_ALLOC_FAILED` when calling `cublasCreate(handle)` indicates that the call to the `cublasCreate()` API function failed because it could not allocate the memory it needs on the GPU. CUDA applications manage GPU memory explicitly, so the performance benefits of general-purpose GPUs for data-intensive computation come with the responsibility of managing GPU resources efficiently. The error may signal a lack of free memory on the GPU, a memory leak, or improper thread synchronization.

To rectify this issue, start by keeping an eye on GPU memory usage. NVIDIA provides a tool called `nvidia-smi` that can be used to monitor it. If the issue stems from a memory leak, debug the code carefully and make sure all allocated memory is deallocated after use; a good CUDA practice is to always free GPU memory resources explicitly once you are done with them. If the problem is improper thread synchronization, use the synchronization utilities CUDA provides, such as events and streams.

If you’re a developer using CUDA for parallel computing tasks and you’ve been seeing `CUBLAS_STATUS_ALLOC_FAILED`, particularly when calling `cublasCreate(handle)`, this section will help you understand the situation better.

CUDA, which is a parallel computing platform and application programming interface model developed by NVIDIA, gives developers direct access to the virtual instruction set and memory of the parallel computational elements in CUDA GPUs.

The error mentioned can be very frustrating, but by comprehending what’s going on under the hood, it will be easier to handle such situations.

A `CUBLAS_STATUS_ALLOC_FAILED` error typically occurs because of a memory allocation failure: there isn’t enough GPU memory available for the requested operation at that moment. When it is returned by `cublasCreate(handle)`, it means there was insufficient memory to initialize the cuBLAS library context.

Here are some things you could check or consider:

– Are you operating too many simultaneous processes/tasks that are eating up all your GPU memory?

Examine your program, and any other processes sharing the GPU, for tasks that consume memory unnecessarily. A monitoring tool such as `nvidia-smi` shows the current usage.

    # Run this command in a terminal to view GPU activity
    nvidia-smi
    # The output shows GPU utilization, memory used, and so on

Along the same lines, efficient memory management helps: free memory after every use and avoid wasteful allocations.

    // Release GPU resources once they are no longer needed
    cublasDestroy(handle);
    cudaFree(device_pointer);

– Ensure that your GPU supports the current version of CUDA you are using

Mixing a recent CUDA version with older hardware can lead to incompatibilities and therefore errors. Check NVIDIA’s list of CUDA-enabled GPUs and the CUDA versions they support to confirm or rule out this cause.
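A related check is whether the installed driver is at least as new as the CUDA runtime the program was built against. The sketch below only decodes and compares version numbers. It assumes the integers come from `cudaDriverGetVersion()` and `cudaRuntimeGetVersion()`, which report a version as `1000 * major + 10 * minor`. The helper names are hypothetical, and the comparison is a conservative rule of thumb rather than NVIDIA's full compatibility policy.

```cpp
#include <string>

// Decoded form of the integer reported by cudaRuntimeGetVersion() or
// cudaDriverGetVersion(), which encode a version as 1000 * major + 10 * minor.
struct CudaVersion {
    int major_part;
    int minor_part;
};

// Split an encoded CUDA version such as 12040 into {12, 4}.
inline CudaVersion decode_cuda_version(int encoded) {
    return CudaVersion{encoded / 1000, (encoded % 1000) / 10};
}

// Conservative rule of thumb (an assumption of this sketch): the driver
// should report a version at least as high as the runtime's.
inline bool driver_supports_runtime(int driver_version, int runtime_version) {
    return driver_version >= runtime_version;
}

// Human-readable form, for example "12.4".
inline std::string to_string(const CudaVersion& v) {
    return std::to_string(v.major_part) + "." + std::to_string(v.minor_part);
}
```

In a real program you would pass the two integers obtained from the CUDA runtime into `driver_supports_runtime` and log both decoded versions if the check fails.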

If these basic checks do not solve your problem, double-check your code. Especially review the CUDA API functions for memory allocation and deallocation and see if they have been properly implemented. Also, try reducing the size of the data sets involved to see if that resolves the issue.

A deeper understanding of GPU memory architecture can also potentially help mitigate similar issues. To get started with that, the CUDA Fortran Programming Guide and Reference by NVIDIA is a great resource.

Remember, digging into errors is a fundamental part of being a developer. It gives valuable insight into the internals of APIs and helps you write more efficient programs in the future. Knowing how to track down and handle specific errors like `CUBLAS_STATUS_ALLOC_FAILED` is one of the skills you pick up along the way.

Before looking at what triggers `CUBLAS_STATUS_ALLOC_FAILED` when calling `cublasCreate(handle)`, recall that CUDA is a parallel computing platform and application programming interface (API) model created by NVIDIA. It gives developers direct access to the virtual instruction set and memory of the parallel computational elements in CUDA GPUs.

cuBLAS is the part of CUDA used for linear algebra operations on the GPU, and one key function in its API is `cublasCreate()`, which initializes the cuBLAS library context. When that initialization cannot allocate the resources it needs, the call returns `CUBLAS_STATUS_ALLOC_FAILED`.

Here are some of the common triggers that cause the “Cublas_Status_Alloc_Failed” error:

Memory issues:

Memory-related problems are among the most common causes of this error. Over-allocating GPU memory, or attempting to allocate more than is available, can lead to `CUBLAS_STATUS_ALLOC_FAILED`. To troubleshoot, check memory utilization at intervals during execution with functions like `cudaMemGetInfo()`, which helps you confirm that you are not exceeding the memory available.

Incorrect launch parameters:

Another possible root cause is incorrect kernel launch parameters. Too many blocks or threads per block can exceed the limits of your CUDA device and raise an error. Cross-check the values you pass against the technical specifications of your device.
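As a rough illustration of that cross-check, the sketch below builds a one-dimensional launch configuration and rejects block sizes above a device limit. It is plain host-side C++. `make_launch_config` and `LaunchConfig` are hypothetical names, and the limit is assumed to be read from `cudaDeviceProp::maxThreadsPerBlock` elsewhere in the program.

```cpp
#include <cstddef>
#include <stdexcept>

// One-dimensional launch configuration: the two values that would go
// inside the <<<blocks, threads_per_block>>> launch syntax.
struct LaunchConfig {
    std::size_t blocks;
    std::size_t threads_per_block;
};

// Build a 1-D configuration covering n elements. max_threads_per_block is
// assumed to come from cudaDeviceProp::maxThreadsPerBlock.
inline LaunchConfig make_launch_config(std::size_t n,
                                       std::size_t threads_per_block,
                                       std::size_t max_threads_per_block) {
    if (threads_per_block == 0 || threads_per_block > max_threads_per_block) {
        throw std::invalid_argument("threads_per_block outside device limits");
    }
    // Round up so that a partial final block still covers the tail elements.
    std::size_t blocks = (n + threads_per_block - 1) / threads_per_block;
    return LaunchConfig{blocks, threads_per_block};
}
```

Validating the configuration on the host means a bad value fails early with a clear message instead of surfacing later as an unrelated-looking CUDA error.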

Inappropriate destruction of contexts:

Destroying contexts improperly can also lead to this error. Make sure every handle created with `cublasCreate()` is released with `cublasDestroy()` so that its resources are returned.

Let us now see how we can get around these pitfalls effectively:

Check for any CUDA errors:

    cudaError_t cuda_stat = cudaDeviceSynchronize();
    if (cuda_stat != cudaSuccess) {
        printf("device failed with error %s\n", cudaGetErrorString(cuda_stat));
    }

Keep track of GPU memory usage:

    size_t free_bytes, total_bytes;
    cudaMemGetInfo(&free_bytes, &total_bytes);
    // %zu is the printf conversion for size_t
    printf("GPU memory usage: used = %zu MB, free = %zu MB, total = %zu MB\n",
           (total_bytes - free_bytes) / 1024 / 1024,
           free_bytes / 1024 / 1024,
           total_bytes / 1024 / 1024);

Ensure cuBLAS handles are properly destroyed:

    cublasDestroy(handle);

Also consult NVIDIA’s official documentation for comprehensive details, and watch for recent updates or changes that might affect the behavior of your application.

One more piece of advice: never overlook coding conventions and error-handling practice in CUDA. They play an essential role in avoiding many problems when running CUDA programs.

Let’s now look at how to resolve the CUDA error `CUBLAS_STATUS_ALLOC_FAILED`. This error occurs when a call to `cublasCreate(handle)` fails, most probably because the CUDA system does not have enough memory to initialize the cuBLAS context, which is a prerequisite for running cuBLAS computations on the GPU.

First, here is the likely sequence of events, step by step:

| Step | Event |
|---|---|
| 1 | User triggers a function containing `cublasCreate(handle)` |
| 2 | The CUDA system tries to allocate memory to initialize the cuBLAS handle for GPU computation |
| 3 | The CUDA system fails to allocate that memory |
| 4 | `CUBLAS_STATUS_ALLOC_FAILED` is returned |

To fix this, two practical solutions come to my mind:

• Reduce the GPU Memory Demand Before Initialization:

The issue may be caused by a heavy load on the GPU before cuBLAS initialization, leaving insufficient device memory for further operations. To debug this, check for other applications or processes that consume a lot of GPU memory, and either stop them or delay them until after cuBLAS initialization. Below is a code snippet showing how you might prepare the GPU before the call:

    // cudaFree(0) frees nothing; it forces the CUDA runtime to create its
    // context, so any initialization error surfaces here
    cudaError_t status = cudaFree(0);
    if (status != cudaSuccess)
    {
        std::cout << "Initialization failed: " << cudaGetErrorString(status) << "\n";
    }
    cublasHandle_t handle;
    cublasStatus_t stat = cublasCreate(&handle); // the CUDA context already exists at this point

• Decrease the Data Size:

Sometimes the problem lies in the size of the data being used. If your allocations approach the memory capacity of your GPU, a subsequent `cublasCreate(handle)` is likely to fail. Try operating on smaller data sets, or divide your data set into manageable mini-batches for processing. For instance,

    // Instead of allocating the full data set at once:
    float* full_data;
    cudaMallocManaged(&full_data, FULL_DATA_SIZE * sizeof(float));
    // try allocating one batch at a time:
    float* batch_data;
    cudaMallocManaged(&batch_data, BATCH_DATA_SIZE * sizeof(float));
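The mini-batch idea can be sketched on the host side as a small planning helper that slices the full data set into offsets and counts. This is only an illustration: `Batch` and `plan_batches` are hypothetical names, and the batch size is a tuning value you would pick to fit your GPU.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// A contiguous slice [offset, offset + count) of the full data set.
struct Batch {
    std::size_t offset;
    std::size_t count;
};

// Split total_elems elements into batches of at most batch_elems each.
// Returns an empty plan when batch_elems is zero.
inline std::vector<Batch> plan_batches(std::size_t total_elems,
                                       std::size_t batch_elems) {
    std::vector<Batch> batches;
    if (batch_elems == 0) return batches;
    for (std::size_t offset = 0; offset < total_elems; offset += batch_elems) {
        // The final batch may be shorter than batch_elems.
        batches.push_back({offset, std::min(batch_elems, total_elems - offset)});
    }
    return batches;
}
```

Each `Batch` can then drive one copy-compute-copy cycle that reuses a single device buffer of `batch_elems` elements, so peak device memory stays bounded regardless of the total data size.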

In both scenarios, always clean up GPU memory with `cudaFree()` once each allocation is no longer needed. Failing to do so can cause intermittent allocation errors, because GPU memory remains held by earlier tasks.

For more details about GPU memory management, you can visit NVIDIA's developer documentation, the CUDA C Best Practices Guide, Section III Memory Optimizations. There you'll learn more about improving CUDA performance by optimizing memory usage.

The `CUBLAS_STATUS_ALLOC_FAILED` error often occurs when calling `cublasCreate(handle)` because of a lack of available memory on your GPU device. Here are several recommended ways to prevent this error from recurring:

Freeing Up GPU Memory

If your GPU is running out of memory, take these steps to free up some space:

- Always destroy or free any CUDA objects (like pointers) that you create but no longer need.

- Try to properly manage the data transfer between host and device. Avoid unnecessary data transfer.

- Ensure you call `cublasDestroy(handle)` at the end of your program, after all cuBLAS operations are finished.

Optimizing GPU Utilization

You can apply practices for better optimization of GPU resources:

- Make use of shared memory effectively. While shared memory is limited and slower than registers, it is faster than global memory.

- Use batch operations if possible. This will increase the degree of parallelism and improve GPU utilization.

Example of a batched operation:

    cublasSgemmBatched(handle, CUBLAS_OP_N, CUBLAS_OP_N, m, n, k,
                       &alpha, devPtrA, lda, devPtrB, ldb,
                       &beta, devPtrC, ldc, batchSize);

Reducing Memory Footprint

Here are some recommendations to reduce memory footprint of your algorithms:

- Optimize the algorithm to use memory judiciously.

- Decompose complex operations into multiple simple operations where each operation requires less memory.

- Perform computation in float precision rather than double when possible. A single precision floating point requires half the space of a double precision floating point.
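To make the precision point concrete, the arithmetic can be sketched with a small helper that computes the storage needed for a dense matrix. The name `matrix_bytes` is hypothetical, and the figures assume the usual 4-byte `float` and 8-byte `double`.

```cpp
#include <cstddef>

// Bytes needed to store a dense rows x cols matrix of element type T.
template <typename T>
constexpr std::size_t matrix_bytes(std::size_t rows, std::size_t cols) {
    return rows * cols * sizeof(T);
}
```

Under those assumptions, an 8192 x 8192 matrix takes 256 MiB in single precision and 512 MiB in double precision, so switching precision alone can free a substantial share of device memory.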

Handle Error Appropriately

Make sure to include appropriate error checking around your CUBLAS and CUDA APIs. This makes it easier to debug problems related to resource handling.

    // Example of error checking
    cublasHandle_t handle;
    cublasStatus_t stat = cublasCreate(&handle);
    if (stat != CUBLAS_STATUS_SUCCESS)
    {
        printf("CUBLAS initialization failed\n");
        return EXIT_FAILURE;
    }

Remember to follow these principles and watch out for potential memory limitations when working with CUDA and CUBLAS. Even though GPUs provide large amounts of parallelism that can accelerate many computational workloads, they do have finite resources. Designing efficient algorithms that make good use of these resources is crucial to get the best performance.

For further reading, refer to NVIDIA's own cuBLAS documentation and the GPU Gems guide on effective GPU computing.

When working with CUDA libraries such as cuBLAS, errors can occur that are obscure and hard to understand without proper troubleshooting. The specific error we are dealing with is `CUBLAS_STATUS_ALLOC_FAILED` when calling the function `cublasCreate(handle)`. This error usually occurs because there is insufficient memory on the GPU for resource allocation.

Maintenance Techniques for GPU

There are several essential techniques that you can use to maintain the health of your GPU and help it perform optimally:

- Regular Cleaning: Like any hardware component, the GPU also requires regular cleaning. Dust and dirt can cause overheating which could lead to performance degradation or even hardware failure.

- Updating Drivers: Manufacturers frequently release driver updates to fix bugs, improve performance, or support new hardware. Keeping your drivers up to date can help prevent issues that could cause errors like `CUBLAS_STATUS_ALLOC_FAILED`.

- Monitor GPU Temperature: High temperatures can be detrimental to the health of a GPU. Monitoring GPU temperature regularly and taking appropriate steps whenever it's running too hot can prolong its lifespan.

Debugging CUBLAS_STATUS_ALLOC_FAILED Error

This specific error, `CUBLAS_STATUS_ALLOC_FAILED`, comes from CUDA's cuBLAS library and, as mentioned earlier, typically results from running out of memory. Here are a few potential reasons and solutions for this error.

- Inadequate GPU Memory: Check if the GPU has adequate memory available for the operation being performed. You can monitor the memory usage in real time using NVIDIA's nvidia-smi tool.

- Memory Fragmentation: Over time, the continuous allocation and deallocation of memory can cause fragmentation. If this turns out to be causing the problem, one possible solution is to restart the machine thereby resetting the GPU state.

- Error in Earlier CUDA Call: Sometimes this error may occur because another CUDA call before cublasCreate encountered an error but did not properly report it. Look out for any previous error messages before this one.

Below is a simple example of checking a CUDA API call for an error:

    cudaError_t err = cudaMalloc(&devPtr, size);
    if (err != cudaSuccess)
    {
        printf("%s in %s at line %d\n", cudaGetErrorString(err), __FILE__, __LINE__);
        exit(EXIT_FAILURE);
    }

Additionally, using NVIDIA’s profiling tools like Visual Profiler and Nsight Systems can also provide more in-depth information about where your application may be using excessive memory or missing opportunities for optimization. Also, ensure you have the right compatibility settings in line with your CUDA toolkit version.

In conclusion, fixing the `CUBLAS_STATUS_ALLOC_FAILED` error requires a blend of good GPU maintenance habits and thorough debugging practices.

Errors when calling CUDA's `cublasCreate` function, especially `CUBLAS_STATUS_ALLOC_FAILED`, are quite common. This error tells you that the allocation of GPU memory failed during the creation of a cuBLAS handle.

Quick Overview of the cublasCreate Function

An integral part of CUDA's BLAS (Basic Linear Algebra Subprograms) library, the `cublasCreate` function initializes the cuBLAS library and creates a cuBLAS context that is tied to a device. Here is a simple example of how it is called:

    cublasHandle_t handle;
    cublasStatus_t status = cublasCreate(&handle);

Possible Causes for the Error

- Device Overload: One of the possible reasons could be that your device or GPU is running out of memory or overloaded with processes. Also, using multiple threads to allocate cuBLAS handles simultaneously on the same device without synchronization can lead to resource sharing issues causing this error.

- Incorrect Device Context: This error might also appear if there’s an incorrect context for the device where cuBLAS handle is trying to instantiate.

How to debug and fix the issue?

- Check GPU utilization: Use NVIDIA's System Management Interface tool (`nvidia-smi`) to track the memory usage and processes on your GPU and make sure it has enough resources to execute `cublasCreate`. The tool provides real-time monitoring of your GPU applications.

- Synchronizing Thread Allocation: If you are using multiple threads, ensure that they are correctly synchronized so they do not clash while allocating cuBLAS handles.

- Correcting Device Context: Set the current CUDA device explicitly before creating a new cuBLAS handle by calling `cudaSetDevice()`, or use the appropriate device management API functions.

- Free up memory: If possible, reduce the memory used by your application by moving some data to host (CPU) memory, freeing GPU memory for cuBLAS handle creation.

For example, run the monitoring tool from a terminal:

    nvidia-smi

and select the device explicitly before creating the handle:

    int deviceId = 0;
    cudaSetDevice(deviceId);
    cublasHandle_t handle;
    cublasStatus_t status = cublasCreate(&handle);
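If concurrent handle creation from several host threads is suspected, one way to rule it out is to serialize the creation step behind a mutex. The sketch below is generic host-side C++ and makes no claim about cuBLAS internals. `create_serialized` and `handle_creation_mutex` are hypothetical names, and `create_fn` stands in for whatever callable sets the device and calls `cublasCreate`.

```cpp
#include <mutex>
#include <utility>

// Process-wide lock used to serialize handle creation across host threads.
inline std::mutex& handle_creation_mutex() {
    static std::mutex m;
    return m;
}

// Run create_fn while holding the lock and return whatever it returns.
// create_fn is a placeholder for a callable such as:
//   [&] { cudaSetDevice(0); return cublasCreate(&handle); }
template <typename CreateFn>
auto create_serialized(CreateFn&& create_fn) -> decltype(create_fn()) {
    std::lock_guard<std::mutex> lock(handle_creation_mutex());
    return std::forward<CreateFn>(create_fn)();
}
```

Each worker thread would call `create_serialized` once at startup and keep its own handle afterwards. If the error disappears with this in place, unsynchronized creation was a likely contributor.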

Relevant Links

You can read more on cuBLAS and its functionality from the official CUDA documentation.

Remember, debugging GPU memory problems requires persistence and meticulous observation. Different applications may encounter the same error for different reasons. Always follow good coding practices and keep an eye on your GPU's health.

Real-life development scenarios often present issues that, while challenging, accelerate your learning once overcome. One such problem you may encounter while using CUDA APIs on NVIDIA GPUs is the `CUBLAS_STATUS_ALLOC_FAILED` error returned when you call the `cublasCreate(handle)` function.

This error usually arises from resource exhaustion on the GPU. Here we'll explore the error, the reasons you might run into it, and how you can address it in real-world coding situations.

Understanding the Issue

The first step towards resolving an issue is understanding what causes it. In our case, `CUBLAS_STATUS_ALLOC_FAILED` occurs primarily because the GPU fails to allocate the space required when calling `cublasCreate(handle)`.

Here's a sample snippet that creates the handle and reports whether the call succeeded:

    cublasHandle_t handle;
    cublasStatus_t status = cublasCreate(&handle);
    if (status != CUBLAS_STATUS_SUCCESS) {
        printf("!! cublasCreate failed!!\n");
    }
    else {
        printf("Successful creation of cublas\n");
    }

Reasons for Failure

• One chief reason revolves around excessive memory demands of your program exceeding the available GPU memory.

• Another potential culprit could be a synchronization problem especially if you are working in a multi-thread/MPI environment.

Solving the Problem

Addressing this issue mostly involves managing your GPU's memory proficiently or ensuring proper thread synchronization. Some methods include:

• Optimizing memory: Revisit the allocations in your code and pare them down where possible. Remove redundant allocations and superfluous variables. Alternatively, consider using the shared memory CUDA offers, which provides faster access than global memory. Remember, though, that it is limited and requires careful partitioning among blocks.

Here's an example of how you can check the remaining memory before calling `cublasCreate`, where `REQUIRED_SIZE` is a threshold in bytes that you choose:

    size_t free_bytes;
    size_t total_bytes;
    cudaMemGetInfo(&free_bytes, &total_bytes);
    if (free_bytes > REQUIRED_SIZE) {
        cublasCreate(&handle);
    }
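A slightly more defensive version of that check keeps a reserve on top of the required size, so the handle is not created when memory is only barely sufficient. This is a minimal host-side sketch. `has_headroom` is a hypothetical helper, `free_bytes` is assumed to come from `cudaMemGetInfo()`, and both thresholds are estimates you choose for your application.

```cpp
#include <cstddef>

// Decide whether to attempt cublasCreate given a free-memory reading.
// required_bytes and reserve_bytes are application-chosen estimates.
inline bool has_headroom(std::size_t free_bytes,
                         std::size_t required_bytes,
                         std::size_t reserve_bytes) {
    // Subtract instead of adding the two sizes, so the check cannot overflow.
    if (free_bytes < required_bytes) return false;
    return free_bytes - required_bytes >= reserve_bytes;
}
```

When the check fails, the program can free caches, shrink its batch size, or report a clear message instead of letting `cublasCreate` fail.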

• Thread Synchronization: Synchronization is vital for avoiding data races. Call `cudaDeviceSynchronize()` before creating the cuBLAS handle to ensure all previously issued GPU work has finished before the next step begins.

Example:

    cudaDeviceSynchronize();
    cublasCreate(&handle);

In conclusion, the `CUBLAS_STATUS_ALLOC_FAILED` error when calling `cublasCreate(handle)` is surmountable with proper memory optimization and thread synchronization techniques. Understanding these strategies also prepares you for similar problems and strengthens your proficiency in CUDA programming.

The CUDA error `CUBLAS_STATUS_ALLOC_FAILED` when calling `cublasCreate(handle)` is quite common and, if not handled properly, can hinder your project's progress. Its root cause is typically a lack of sufficient GPU memory to allocate for the handle.

Primarily, there are two key circumstances under which you may encounter this particular bug:

- You're operating on larger data sets. When the dataset is too big for your GPU to manage, an allocation failure usually takes place.

- Your device doesn’t have enough available GPU memory when it attempts to call cublasCreate.

Solving Cublas_Status_Alloc_Failed Error

Beyond surface analysis, there are a few steps we can take to resolve the CUBLAS_STATUS_ALLOC_FAILED error.

Firstly, ensure you have sufficient GPU memory available. For this, it's recommended to monitor the memory status of your GPU during runtime and to make sure the GPU is not utilizing most of its available memory before you execute cublasCreate().

For instance, you can use the `nvidia-smi` command to check the status of GPU usage:

    nvidia-smi

Secondly, aim at optimizing your code to use less GPU memory or consider utilizing a GPU that has more memory. CUDA programming abilities largely depend on efficient memory management. So understanding and efficiently using shared and texture memory can significantly reduce your application's global memory traffic and thus increase its performance considerably.

Thirdly, in cases where the problem regards larger data sets, it’s generally advised to partition the data set into manageable chunks. Then process these parts separately to prevent over-burdening the GPU.

Overall, avoiding the 'Cublas_Status_Alloc_Failed' error mostly boils down to efficient memory management within the GPU, which should be a core consideration while structuring and writing your CUDA codes.

Consider investing time exploring in-depth CUDA documentation and guide on memory optimizations techniques, as proper grasp of such developmental procedures can help mitigate such errors beforehand by following best practices. Remember, efficient coding requires understanding the limits of your tools and working within their capacity to deliver results without exceeding those capacities.

Referenced example:

    // Initialization
    cublasStatus_t status;
    cublasHandle_t handle;
    status = cublasCreate(&handle);
    if (status != CUBLAS_STATUS_SUCCESS) {
        fprintf(stderr, "!!!! CUBLAS initialization error\n");
        return EXIT_FAILURE;
    }

    // Allocate device memory
    float* d_A = 0;
    cudaMalloc((void**)&d_A, n2 * sizeof(d_A[0]));

This snippet creates a new cuBLAS context by calling `cublasCreate()` and includes error handling to catch failures such as `CUBLAS_STATUS_ALLOC_FAILED`. Let's keep striving for thoughtful, efficient coding practices to prevent such errors when building software with CUDA.