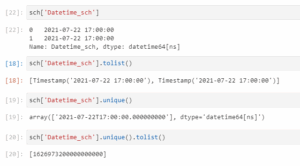

| Step | Description |
|---|---|
| Install pytest-cov and Coverage.py | These two Python packages generate the coverage reports. Install them with pip: `pip install pytest-cov coverage`. Installing pytest-cov also pulls in Coverage.py as a dependency. |
| Parametrize test cases (optional) | In your plugin's test suite, use `pytest.mark.parametrize` to run the same test function with several sets of arguments, so the code under test is exercised under different scenarios. |
| Run the tests with `--cov` | Run pytest with the `--cov` option to measure coverage alongside the test results, for example `pytest --cov=my_plugin tests/`. |
| Generate a report | pytest-cov prints a terminal summary by default. For more detail, run `coverage report -m` to list missed line numbers, or `coverage html` for an HTML report that highlights uncovered lines. |

When it comes to thoroughly verifying the functionality and reliability of your pytest plugin, coverage reporting is essential. Code coverage tools such as Coverage.py and pytest-cov give you an efficient way to assess how comprehensive your test suite is.

Coverage measures which portion of your codebase actually runs while your tests execute. It shows which behaviors your tests exercise and, just as importantly, which parts they never reach. This makes it a useful metric for deciding where more testing effort is needed. Integrating Coverage.py into your pytest runs can reveal gaps in your test scenarios and help you reach higher confidence in your plugin's quality and stability.
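To make "which lines ran" concrete, here is a toy line recorder built on the standard library's `sys.settrace`. It only illustrates the idea. Coverage.py uses its own, far more complete and faster measurement machinery. The names `record_lines` and `classify` are hypothetical.

```python
import sys


def record_lines(func, *args):
    """Call func(*args) and return the line offsets (relative to its `def` line) that executed."""
    code = func.__code__
    executed = set()

    def tracer(frame, event, arg):
        # Ignore every frame except the one running the target function.
        if frame.f_code is not code:
            return None
        if event == "line":
            executed.add(frame.f_lineno - code.co_firstlineno)
        return tracer  # keep receiving line events for this frame

    sys.settrace(tracer)
    try:
        func(*args)
    finally:
        sys.settrace(None)  # always switch tracing off again
    return executed


def classify(n):
    if n < 0:
        return "negative"
    return "non-negative"


# A "test suite" that only ever passes non-negative numbers never runs offset 2,
# the `return "negative"` line. That is exactly the kind of gap a coverage report shows.
print(sorted(record_lines(classify, 5)))
```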

The pytest-cov package integrates Coverage.py with pytest. It adds command-line options that control what is measured, plus the level of detail and output format of the coverage report. When testing your plugin, use the `--cov` option to tell pytest-cov which modules or packages to measure.

By marking your test cases with `pytest.mark.parametrize`, you define multiple sets of arguments for a single test function. pytest then runs your plugin code once per set of arguments, and pytest-cov records every line those runs execute. The result is a broader view of how well your tests exercise the plugin.
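Here is a sketch of a parametrized test for a helper that might live inside a plugin. The helper `normalize_marker_name`, its behavior and the `my_plugin/utils.py` location are all hypothetical. The point is that each tuple in the `parametrize` list becomes a separate test case, and every line those cases execute is recorded by pytest-cov.

```python
import pytest


# Hypothetical helper that might live in your plugin package (e.g. my_plugin/utils.py).
def normalize_marker_name(name: str) -> str:
    """Strip whitespace, lower-case, and replace dashes with underscores."""
    return name.strip().lower().replace("-", "_")


# Each (raw, expected) pair below becomes its own test case in the pytest report.
@pytest.mark.parametrize(
    ("raw", "expected"),
    [
        ("Slow", "slow"),
        ("needs-network", "needs_network"),
        ("  GPU  ", "gpu"),
    ],
)
def test_normalize_marker_name(raw, expected):
    assert normalize_marker_name(raw) == expected
```

Parametrizing multiplies the number of test cases. However, it only raises the coverage figure when the new inputs drive execution down code paths that the existing cases miss.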

Lastly, don't forget to generate the final report after running the tests. It summarizes your test coverage and shows precisely where any gaps lie. `coverage report -m` produces a console report that lists the missed line numbers, while `coverage html` generates an HTML report with a more visual presentation of the results. By reviewing these reports regularly, you can track your progress over time and keep improving your tests.

Pytest is a robust testing tool for Python, and much of its power comes from its wide range of plugins. When you're developing a pytest plugin, it's crucial to verify through comprehensive testing that it works correctly. As part of this process, good test coverage and coverage reports are invaluable because they show which areas of the code your tests examine and which they miss.

One way to get test coverage when testing a pytest plugin is to use `pytest-cov`, a Pytest plugin itself used for measuring code coverage. It conveniently integrates with the Pytest framework and uses “Coverage.py” under the hood.

### Pytest-cov Installation

You can install pytest-cov by running the following pip command:

```bash
pip install pytest-cov
```

After installation, enable the plugin with the `--cov` flag, followed by the module, package, or directory whose coverage should be measured.

### Getting Coverage Reports

Running the test suite with pytest-cov produces a coverage report in one or more formats, depending on the arguments you pass. The formats include terminal text, XML and HTML.

Here’s how you can generate a report:

Use the `--cov` option to tell pytest-cov which part of your code to measure:

```bash
pytest --cov=myplugin tests/
```

Then, add `--cov-report=term` to print the coverage report in the terminal output:

```bash
pytest --cov=myplugin --cov-report=term tests/
```

You can get an even more detailed report with the `term-missing` option, which lists the line numbers that were not executed:

```bash
pytest --cov=myplugin --cov-report=term-missing tests/
```

And if you prefer, you can generate an HTML report:

```bash
pytest --cov=myplugin --cov-report=html tests/
```

Running this produces an HTML report in a directory named `htmlcov`.

### Using the coverage report

The coverage report provides helpful insights, such as which parts of your pytest plugin are not covered by the tests, and thus where extra tests might need to be written for improved coverage. Analyzing the report helps in writing more effective tests and increases confidence in the plugin’s reliability.

### Excluding certain parts from coverage reports

Sometimes, you might have blocks of code that you intentionally don’t want to test – for example error handling branches that are difficult to test properly. In those cases, use a `# pragma: no cover` comment in your source code to exclude them from coverage.
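Here is a minimal sketch of the pragma in use, assuming a hypothetical plugin helper `resolve_color_mode` that maps an option value of `yes` or `no` to a boolean. The final branch is purely defensive, so it is excluded on purpose. Coverage.py's default exclusion rule matches the `# pragma: no cover` comment. When the comment sits on a line that opens a block, such as the `else:` below, the whole block is excluded.

```python
def resolve_color_mode(value: str) -> bool:
    """Hypothetical plugin helper: map a "yes"/"no" option value to a bool."""
    if value == "yes":
        return True
    elif value == "no":
        return False
    else:  # pragma: no cover
        # Defensive only: declaring the option with choices=["yes", "no"] should
        # already reject anything else, so this block is excluded from coverage.
        raise ValueError(f"unexpected color mode: {value!r}")
```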

For more detailed documentation, refer to the official pytest-cov documentation.

Remember, while aiming for high code coverage is a good rule of thumb, it isn't always practical or possible to reach 100% coverage. Focus on covering the critical paths and edge cases in your logic rather than blindly striving for full coverage.

Getting coverage reports for your pytest plugins is an important step in the development process, as it shows how much of your plugin's code is executed during testing.

One solution is `pytest-cov`, a pytest plugin that produces coverage reports using the Coverage.py library. Below are the steps for getting coverage reporting when testing a pytest plugin:

First, you'll need to install the `pytest-cov` plugin. You can do this with pip:

```bash
pip install pytest-cov
```

Once `pytest-cov` is installed, you can use pytest to run your tests and generate a coverage report. To do this, use the `--cov` option followed by the name of the module you're testing:

```bash
pytest --cov=myplugin tests/
```

In this case, replace `myplugin` with the name of your pytest plugin's package, and replace `tests/` with the directory containing your test files.

When you run this command, pytest executes your tests and then prints a coverage report showing how many of your plugin's statements were executed. The output may look something like this:

```
------------------------------- coverage: platform linux, python 3.7.0-final-0 -------------------------------
Name                     Stmts   Miss  Cover
--------------------------------------------
myplugin/__init__.py         2      0   100%
myplugin/myplugin.py        50     10    80%
tests/test_myplugin.py      30      5    83%
--------------------------------------------
TOTAL                       82     15    82%
```

In this example output, the `Stmts` column is the number of executable statements in each file. This roughly corresponds to lines of code, excluding blank lines and comments. The `Miss` column shows how many of those statements were not executed during testing, and the `Cover` column shows the percentage of statements that were executed.

This gives you a clear picture of which parts of your code were exercised during the test run and which were not.
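The `Cover` column in the sample report is simply the share of statements that ran: (Stmts − Miss) / Stmts, shown as a whole percent. This sketch recomputes the sample rows. It covers statement coverage only. When branch measurement is enabled, Coverage.py also folds branches into the percentage.

```python
# Rows copied from the sample report: (file name, statements, missed statements).
ROWS = [
    ("myplugin/__init__.py", 2, 0),
    ("myplugin/myplugin.py", 50, 10),
    ("tests/test_myplugin.py", 30, 5),
]


def cover_percent(stmts: int, miss: int) -> float:
    """Statement coverage as a percentage: executed statements / total statements."""
    if stmts == 0:
        return 100.0  # a file with no statements counts as fully covered
    return 100.0 * (stmts - miss) / stmts


for name, stmts, miss in ROWS:
    print(f"{name:<24} {stmts:>5} {miss:>5} {cover_percent(stmts, miss):>5.0f}%")

# The total is computed from the summed counts, not by averaging the per-file percentages.
total_stmts = sum(s for _, s, _ in ROWS)
total_miss = sum(m for _, _, m in ROWS)
print(f"{'TOTAL':<24} {total_stmts:>5} {total_miss:>5} {cover_percent(total_stmts, total_miss):>5.0f}%")
```

Summing first matters. Averaging the three file percentages (100, 80 and 83.3) would give about 88%. The real total is 67 of 82 statements, which is about 82%.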

If you need the results as HTML for easy browsing, or as XML for sharing with other tools, pass the `--cov-report` option:

HTML (written to the `htmlcov/` directory):

```bash
pytest --cov=myplugin tests/ --cov-report html
```

XML (written to `coverage.xml`):

```bash
pytest --cov=myplugin tests/ --cov-report xml
```

References where you can learn more:

1. [Pytest-cov Plugin Documentation](https://pytest-cov.readthedocs.io/en/latest/)

2. [Coverage.py User Guide](https://coverage.readthedocs.io/en/coverage-5.5/)

Testing your pytest plugin with adequate coverage is crucial for your codebase's reliability and maintainability. Ideally, you want high test coverage, with your tests exercising as much of the code base as possible.

Pytest: a robust testing framework for writing simple, scalable test cases. It also supports advanced features such as fixtures and hooks, which make unit testing your plugin more versatile (source: official pytest documentation).

Coverage.py: a tool for measuring how much of your code your tests exercise. Combined with pytest, it gives detailed reports on which parts of your code are not covered (source: official Coverage.py documentation).

Let’s see how one can combine both these libraries to get a test coverage report.

Firstly, install pytest and pytest-cov if you haven’t already done so.

```bash
pip install pytest pytest-cov
```

Next, run the test suite with coverage.

```bash
pytest --cov=myplugin
```

Here, replace `myplugin` with the name of your plugin's package. This prints a coverage report in the terminal.

Follow these steps in order to get a better view of your plugin’s test coverage:

### Create Detailed Reports

By default, the `--cov` option prints a terminal report with per-file totals. For a more detailed report, specify a report type, like this:

```bash
pytest --cov-report term-missing --cov=myplugin
```

The `term-missing` report shows each missed line number, which can be useful to directly know which lines are not covered.

### Specify a File/Folder

To run only a particular test file or folder while still measuring coverage for your plugin, pass its path along with the `--cov` option:

```bash
pytest my_tests.py --cov=myplugin
```

or

```bash
pytest myfolder/ --cov=myplugin
```

This is useful when you want to see how much of the plugin a particular subset of your tests exercises.

### Create an HTML Report

Sometimes, it helps to have an HTML report because it lets you visually drill down into the codebase and see exactly which lines are covered and which aren’t. You can create an HTML report like this:

```bash
pytest --cov-report html --cov=myplugin
```

This command will create a folder called `htmlcov`. Open `index.html` in a browser to see the state of your code coverage.

Lastly, while chasing 100% code coverage can be helpful in some situations, it's not always required. The focus should be on testing critical paths and unlikely edge cases rather than on achieving a perfect score. Aim for thorough coverage, not just a high coverage number.

Getting coverage reporting while testing a pytest plugin involves several factors. First and foremost, you need accurate measurements of the areas your tests hit and miss in order to be sure your code works as expected. To get such a report, we use tools like Coverage.py, which is designed specifically for Python code: just what we need for pytest plugins.

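Coverage.py can measure statement coverage: how much of our code runs during the tests. It can also measure branch coverage: whether our tests take both paths of each conditional. Branch coverage has to be enabled, either with `--branch` or with `branch = True` in its configuration. This extra scrutiny gives a more complete picture of how thoroughly the plugin is exercised, though it still cannot prove that every behavior is tested.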
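The difference between the two kinds of coverage is easiest to see on an `if` without an `else`. Take the hypothetical plugin helper below. A test that only passes `verbose=True` executes every line of the function body, so statement coverage reports it as fully covered. Yet the path where the `if` is false never runs. With branch measurement enabled (`coverage run --branch -m pytest`, or `--cov-branch` with pytest-cov), that `if` line is reported as a partial branch until a second case exercises it.

```python
def status_line(verbose: bool) -> str:
    """Hypothetical plugin helper that builds a one-line status message."""
    prefix = ""
    if verbose:
        prefix = "[my_plugin] "
    return prefix + "collection finished"


# Statement coverage: this test alone executes every line of status_line.
def test_verbose_status():
    assert status_line(True) == "[my_plugin] collection finished"


# Branch coverage: this extra case is needed to exercise the "if is false" path.
def test_quiet_status():
    assert status_line(False) == "collection finished"
```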

Let’s delve into how we can harness this tool in our test environment. Here’s a demonstration on how to install Coverage.py using pip:

```bash
pip install coverage
```

Then run the test suite under coverage measurement and print a report:

```bash
# Run the test suite while measuring coverage
coverage run -m pytest
# Print the report, including missed line numbers
coverage report -m
```

Using Coverage.py with Pytest requires some additional attention. Certain considerations include:

### Configuration Files

Coverage.py reads its settings from a configuration file: `.coveragerc` by default, though it can also read settings from `setup.cfg`, `tox.ini`, or `pyproject.toml`. Configuration is handy in complex projects where we want to exclude certain lines, files, or entire directories. An example `.coveragerc` file looks like this:

```ini
[run]
omit =
    */tests/*
```

With this setup, Coverage.py skips any file whose path matches `*/tests/*`, so the test files themselves do not appear in the report.

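### Hooks

Hooks are central to pytest plugins. The modules that define them are often loaded by pytest itself, through an entry point, rather than imported by your own code. Unless you tell Coverage.py which source code to measure, it only reports on files that were actually imported during the run. Say you have a hook defined in a `my_hooks.py` module that isn't directly imported anywhere. Without further configuration, that file is simply missing from the report. To make sure it is counted, update your `.coveragerc` file:

```ini
[run]
source_pkgs = my_plugin
```

Here, `my_plugin` should be the actual name of your plugin package. With `source_pkgs` set, Coverage.py also reports modules from that package that were never imported. They appear with 0% coverage instead of being left out.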
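For a concrete picture, here is a minimal sketch of a plugin module that defines two standard pytest hooks, `pytest_addoption` and `pytest_report_header`. The module path `my_plugin/plugin.py`, the `--shout` option and the header text are hypothetical.

Pytest imports entry-point plugins very early, before pytest-cov starts measuring. As a result, the module-level lines of such a file (imports and `def` statements) may show up as missed. Running the suite with `coverage run -m pytest` avoids this, because measurement starts before pytest imports anything.

```python
"""my_plugin/plugin.py: a hypothetical plugin module registered through the
"pytest11" entry-point group, for example in pyproject.toml:

    [project.entry-points.pytest11]
    my_plugin = "my_plugin.plugin"
"""


def pytest_addoption(parser):
    # Standard pytest hook: register a command-line option for this plugin.
    group = parser.getgroup("my_plugin")
    group.addoption(
        "--shout",
        action="store_true",
        default=False,
        help="Print the report header in upper case.",
    )


def pytest_report_header(config):
    # Standard pytest hook: return an extra line for the header of the test run.
    header = "my_plugin: active"
    if config.getoption("shout"):
        return header.upper()
    return header
```

Because the hooks are plain functions, their bodies can also be unit-tested with a stand-in `config` object. Alternatively, you can exercise them end to end with pytest's `pytester` fixture.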

### HTML Reporting

For further visualization, you can generate an HTML report, which provides line-by-line analysis:

```bash
# Generate an HTML report
coverage html
```

This generates an `htmlcov/index.html` file. Opening it in your browser shows which lines your tests hit and which they missed.

### Integration with Continuous Integration (CI)

Integrating Coverage.py into your CI/CD pipeline makes regular, automated monitoring achievable. A CI system such as GitHub Actions or Jenkins can run your tests under Coverage.py and produce reports each time new code is pushed. This makes maintaining high-quality, reliable software far simpler.
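In CI, a common pattern is to fail the build when coverage drops below a threshold. pytest-cov already supports this with `--cov-fail-under=MIN`, and `coverage report --fail-under=MIN` does the same. A custom script is therefore only worth it when you need extra logic.

The sketch below reads the `line-rate` attribute from the root element of the Cobertura-style `coverage.xml` that `--cov-report xml` writes. The function names and the 80% default threshold are assumptions.

```python
import xml.etree.ElementTree as ET


def line_coverage_percent(root: ET.Element) -> float:
    """Overall line coverage, as a percentage, from the root <coverage> element."""
    # line-rate is stored as a fraction between 0 and 1.
    return float(root.get("line-rate", "0")) * 100


def check_threshold(xml_path: str, minimum: float = 80.0) -> int:
    """Return a process exit code: 0 if coverage meets the minimum, 1 otherwise."""
    percent = line_coverage_percent(ET.parse(xml_path).getroot())
    print(f"line coverage: {percent:.1f}% (minimum {minimum:.1f}%)")
    return 0 if percent >= minimum else 1
```

A CI step could then call `sys.exit(check_threshold("coverage.xml"))` after the test run, so that the job fails whenever the result is 1.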


Remember, code coverage isn't everything: it can't capture every scenario or edge case you need to worry about. Still, it's a powerful metric to have in your toolkit. Knowing how well your pytest plugin code is tested gives you confidence in its reliability and robustness. That knowledge, combined with proper use of coverage data, drives the development of sturdy, maintainable plugins.

Pytest plugins play an important role in software testing: put simply, they extend the functionality of the pytest framework [source]. That functionality can cover many aspects of testing, including adding command-line options, changing test collection, providing custom marks, or changing the output format.

Now, when developing such plugins, one crucial part of maintaining high-quality code is coverage reporting. Coverage reports help us identify parts of our code that have not yet been covered by tests, and to understand how thorough our testing has been.

To obtain coverage reporting with the `pytest-cov` plugin while running your tests, follow these steps:

### Step 1: Installation

Firstly, install the pytest-cov Python package. You can install it using pip:

```bash
pip install pytest-cov
```

### Step 2: Run The Tests With Coverage

Once the package is installed, run your tests with pytest and enable coverage through pytest-cov, pointing it at the module you want the report for. Here is an example where the module is named `my_module`:

```bash
pytest --cov=my_module tests/
```

### Step 3: Introduce A `pytest.ini` File

To avoid typing these options every time, create a `pytest.ini` file and put your defaults there.

Here’s an example of a configuration file: