| Keras Version | Supported TensorFlow Version |
|---|---|
| Keras <= 2.3.0 | TensorFlow >= 2.0.0 |
| Keras >= 2.4.0 | TensorFlow >= 2.2.0 |

TensorFlow’s compatibility with Keras has been a crucial element of TensorFlow’s success. Keras is a Python-based high-level neural networks API that was originally capable of running on top of TensorFlow, CNTK, or Theano. It was designed to enable fast experimentation with deep neural networks. Since TensorFlow 2.0, the core parts of Keras have been integrated directly into TensorFlow.

Keras 2.3.0 was the last multi-backend Keras version. Starting from version 2.4.0, only the TensorFlow implementation is maintained. For all versions of Keras above 2.3.0, it is highly recommended to use a TensorFlow version greater than or equal to 2.2.0.

To summarize:

- An older Keras version (<= 2.3.0) can work with TensorFlow 2.x (>= 2.0.0) thanks to backward compatibility.

- For newer versions of Keras (>= 2.4.0), it is critical to use a newer version of TensorFlow (>= 2.2.0) to get the latest features and bug fixes.

In addition, both TensorFlow and Keras follow semantic versioning (SemVer), which signals a commitment to keeping backward compatibility intact and to flagging major changes through version numbers. This underlines the importance of knowing which versions of TensorFlow your specific version of Keras supports. In general, it is preferable to use matching, up-to-date versions of both to get the best performance and the full feature set for your machine learning tasks.
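The version ranges in the table at the top of this section can be encoded as a small check. The sketch below is illustrative only: the helper names (`parse_version`, `min_tensorflow_for`, `is_supported_pair`) are made up for this example, it handles only plain numeric versions such as `2.4.3`, and it assumes that any Keras release below 2.4.0 falls under the first row of the table.

```python
def parse_version(text):
    """Turn a 'MAJOR.MINOR.PATCH' string into a 3-tuple of ints for comparison."""
    numbers = [int(part) for part in text.split(".")[:3]]
    numbers += [0] * (3 - len(numbers))  # pad "2.4" to (2, 4, 0)
    return tuple(numbers)


def min_tensorflow_for(keras_version):
    """Minimum TensorFlow version the table lists for a given Keras release.

    Assumption: releases below 2.4.0 are treated as the "<= 2.3.0" row.
    """
    if parse_version(keras_version) >= (2, 4, 0):
        return "2.2.0"
    return "2.0.0"


def is_supported_pair(keras_version, tensorflow_version):
    """Check a Keras/TensorFlow pairing against the ranges in the table."""
    required = parse_version(min_tensorflow_for(keras_version))
    return parse_version(tensorflow_version) >= required


print(is_supported_pair("2.3.0", "2.0.0"))  # True
print(is_supported_pair("2.4.3", "2.1.0"))  # False
```

Tuples compare element by element, which is why `(2, 10, 0)` correctly sorts after `(2, 9, 0)` where a plain string comparison would not.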

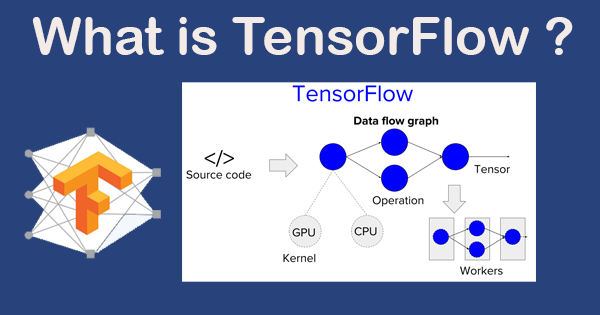

TensorFlow and Keras are both open-source software libraries for deep learning. To understand their relationship, it’s essential to first comprehend what they individually represent.

TensorFlow was developed by the Google Brain team, primarily for internal use. It was later released under the Apache 2.0 open-source license and has since become one of the most widely used libraries in the field of machine learning. TensorFlow provides a range of numerical computation features using data flow graphs. The nodes in these graphs often represent mathematical operations, while the graph edges denote multidimensional arrays (tensors) communicated between them.

import tensorflow as tf

# create tensor

hello = tf.constant('Hello, TensorFlow!')

print(hello)
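The data flow graph idea described above, with operations as nodes and tensors flowing along edges, can be sketched in plain Python. This is a conceptual toy, not how TensorFlow is implemented: the `Node` class and the `constant`, `add`, and `scale` helpers are hypothetical names, and plain lists stand in for tensors.

```python
class Node:
    """One operation in a tiny data flow graph; its inputs are the incoming edges."""

    def __init__(self, op, *inputs):
        self.op = op
        self.inputs = inputs

    def evaluate(self):
        # Evaluate upstream nodes first, then apply this node's operation.
        values = [node.evaluate() for node in self.inputs]
        return self.op(*values)


def constant(value):
    """A source node with no inputs that always yields the same value."""
    return Node(lambda: value)


def add(a, b):
    """Element-wise addition node."""
    return Node(lambda x, y: [p + q for p, q in zip(x, y)], a, b)


def scale(a, factor):
    """Node that multiplies every element by a fixed factor."""
    return Node(lambda x: [factor * p for p in x], a)


# Build the graph for 2 * (u + v); nothing is computed until evaluate() runs.
u = constant([1.0, 2.0, 3.0])
v = constant([10.0, 20.0, 30.0])
result = scale(add(u, v), 2.0)

print(result.evaluate())  # [22.0, 44.0, 66.0]
```

Separating graph construction from evaluation is the point of the sketch: the graph describes the computation first, and values only flow through it on request.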

Keras, on the other hand, was developed with a focus on enabling fast experimentation, being able to go from idea to result as swiftly as possible, which is vital for research work. It works as an interface providing high-level building blocks for developing deep-learning models. It doesn’t handle low-level operations like tensor manipulation and differentiation. Instead, it relies on another library to do that, known as the “backend engine”. This backend engine could be either TensorFlow, Theano, or CNTK.

    from keras.models import Sequential
    # Create your first MLP in Keras
    model = Sequential()
    print(model)

| Elements | Keras | TensorFlow |
|---|---|---|
| Features | User-friendly | Highly customizable |
| Level | High-level API | Both high- and low-level APIs |
| Backend Engines | TensorFlow, Theano, CNTK | N/A |
| Audience | Beginners, researchers | Developers, researchers |

As for TensorFlow’s compatibility with Keras, TensorFlow adopted Keras as its official high-level API in version 2.0. This brings robust support, easier maintenance, a rich feature set, community engagement, and TensorFlow-specific functionality available directly through familiar Keras code. Keras runs on top of TensorFlow, and TensorFlow integrates Keras within its core APIs. As a result, the import lines have changed:

    # previous style
    from keras.layers import Dense
    # updated TensorFlow style
    from tensorflow.keras.layers import Dense

This combination allows us to leverage the simplicity of Keras when designing our models and using advanced TensorFlow features without switching contexts.

Prior to this, one had to pick TensorFlow as the backend engine manually for Keras:

    # Setting TensorFlow as the backend for Keras
    import os
    os.environ['KERAS_BACKEND'] = 'tensorflow'

Since the integration in TensorFlow 2.0, this is no longer required. When writing code, you now mostly use the Keras API imported via TensorFlow, which puts the benefits of both frameworks in your hands.

As professional coders, we highly value such integrations that make coding more intuitive, smoother, and efficient, eventually improving productivity.

Understanding the features that TensorFlow and Keras share helps in assessing their compatibility and how they complement each other for machine learning tasks. While TensorFlow is an end-to-end platform, Keras is a user-friendly neural networks library designed for Python. The two became much more closely aligned when TensorFlow incorporated Keras as the tf.keras module in TensorFlow 2.0.

Shared Features of TensorFlow and Keras

Data Preparation

The first crucial characteristic common to TensorFlow and Keras is data preparation. Both libraries provide powerful tools for processing large datasets for use with machine learning models.

    # For TensorFlow
    tf.data.Dataset.from_tensor_slices((features, labels))
    # For Keras
    keras.preprocessing.image.ImageDataGenerator()

Both can stream data efficiently, preparing the next batch asynchronously while the current batch is being computed on, which helps you avoid input bottlenecks.

Model APIs

Both TensorFlow and Keras have high-level APIs for building deep learning models. While they differ in syntax, the general idea remains the same: you sequentially define layers or use a functional API for complex architectures.

    # TensorFlow
    model = tf.keras.Sequential()
    # Keras
    model = keras.Sequential()

The lines above are simple demonstrations of setting up a model with each library. Keras is well known for its user-friendly API, and TensorFlow has maintained this simplicity within tf.keras.

Training and Evaluation

TensorFlow and Keras both offer robust capabilities for training machine learning models, allowing you to customize loss functions, optimizers, metrics, and more. After your model is defined and compiled, the training process is abstracted behind a single function call. Keras became an integral part of TensorFlow, providing the same usability in tf.keras:

    # For TensorFlow and Keras
    model.compile(optimizer='adam', loss='categorical_crossentropy')
    model.fit(x_train, y_train, epochs=5)

Pretrained Models

Another area where TensorFlow and Keras converge is the handling of pretrained models. If you want to perform transfer learning or fine-tuning, access to pretrained models such as InceptionV3, VGG16, or ResNet50 can save time.

    # With TensorFlow and Keras
    base_model = tf.keras.applications.ResNet50(weights='imagenet', include_top=False)
    # Keras specific example
    base_model = keras.applications.ResNet50(weights='imagenet', include_top=False)

In both cases, these lines load up a ResNet50 model pretrained on ImageNet, excluding the top output layer.

Saving & Loading Models

Lastly, TensorFlow and Keras give great support for saving and loading models. This is a much-needed functionality to persist the model offline and load it back whenever it is needed.

# Save the model

model.save('path_to_my_model.h5')

# Recreate the same model

new_model = tf.keras.models.load_model('path_to_my_model.h5')

This approach emphasizes the serialization and deserialization simplicity shared by both the TensorFlow and Keras libraries.

In summary, tf.keras is a version of Keras included within TensorFlow that brings Keras’s user-friendliness into TensorFlow, adding some useful capabilities. Because of these shared features, understanding TensorFlow’s compatibility with Keras becomes simpler: they aren’t just compatible, but Keras now lives within TensorFlow.

To couple TensorFlow with Keras effectively, it’s essential to understand how the two are compatible. These libraries are often used together to create diverse and complex AI models. Keras is an open-source Python library for building neural networks, while TensorFlow is a free, open-source library developed by the Google Brain team to handle data-intensive computation.

TensorFlow has become a preferred choice due to its ability to run on both CPUs and GPUs, which lets it process large amounts of data quickly and efficiently. Coupling this flexibility and power with Keras makes it easier to create efficient and sophisticated deep learning models.

Keras acts as an interface for the TensorFlow library. When you’re using Keras, you’re actually making use of TensorFlow’s powerful capabilities, but in a simpler, more user-friendly way. You can build your model with high-level building blocks from Keras and then tap into the power of TensorFlow to optimize, evaluate and train your model.

Let me walk you through a basic example of coupling Keras with TensorFlow:

Start by importing necessary modules:

import tensorflow as tf

from tensorflow import keras

Define your model:

model = keras.Sequential()

model.add(keras.layers.Dense(units=1, activation='linear', input_shape=[1]))

Compile your model:

model.compile(optimizer='sgd', loss='mean_squared_error')

Train the model:

xs = [1, 2, 3, 4]

ys = [1, 2, 3, 4]

model.fit(xs, ys, epochs=500)
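To make the `model.fit` call above less opaque, here is a minimal pure-Python sketch of the same idea: fitting `y = w * x + b` by gradient descent on mean squared error. It is not what Keras executes internally. The function name `fit_line` is hypothetical, the update uses the full batch on every epoch, and the learning rate of 0.05 is chosen for this example rather than taken from the Keras `'sgd'` default.

```python
def fit_line(xs, ys, epochs=500, learning_rate=0.05):
    """Fit y = w * x + b by full-batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction errors for the current parameters.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of mean((w * x + b - y) ** 2) with respect to w and b.
        grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2.0 * sum(errors) / n
        # Step against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


w, b = fit_line([1, 2, 3, 4], [1, 2, 3, 4])
print(w, b)  # w should end up close to 1 and b close to 0
```

Because the training data lies exactly on the line `y = x`, the single weight converges towards 1 and the bias towards 0, which is the relationship the one-unit `Dense` layer is asked to learn.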

In terms of compatibility, since September 2020, Keras officially supports TensorFlow v2 or above only (as per their official GitHub page). Prior to that, it did support older versions of TensorFlow, but to make the most of new features and improvements, using the latest version of both TensorFlow and Keras is advisable.

It’s equally important to note that, starting from TensorFlow 2.0, Keras is TensorFlow’s official high-level API. This means that TensorFlow is not just compatible with Keras: the two share a close connection in which each enhances the other. This interdependency results in a seamless system where you can build flexible, fast models with strong computational backend capabilities.

When it comes to deep learning libraries, both TensorFlow and Keras stand out for their features, ease of use, and compatibility. Indeed, they are so closely related that from TensorFlow 2.0 onwards, Keras is available as tf.keras, part of the core TensorFlow library.

Comparing the two helps to explore the differences and synergy between them. Although each is distinct, Keras and TensorFlow are highly compatible and work hand-in-hand on most tasks.

TensorFlow:

TensorFlow, originally developed by Google Brain, is one of the most popular open-source machine learning libraries due to its comprehensive and flexible ecosystem of tools, libraries, and resources. The following key points elaborate on TensorFlow’s strengths:

- Flexibility – TensorFlow can handle low- to high-level computations involving multi-dimensional arrays, enabling users to build different types of models from scratch.

- Distributed computing support – With TensorFlow, you can distribute complex computations across multiple GPUs or even devices.

- Production readiness – TensorFlow is not just a development tool; it’s designed with deployment and scale-up in mind, covering everything from training to serving models.

- Community – Used by researchers, businesses, and developers worldwide, TensorFlow has a large community backing it with support and additional resources.

Here’s an example demonstrating the creation of a simple model using TensorFlow:

import tensorflow as tf

model = tf.keras.models.Sequential([

tf.keras.layers.Dense(5, activation=tf.nn.relu, input_shape=(3,)),

tf.keras.layers.Dense(2, activation=tf.nn.softmax)

])

model.compile(optimizer='adam',

loss='sparse_categorical_crossentropy',

metrics=['accuracy'])
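The `sparse_categorical_crossentropy` loss in the compile call above expects integer class labels rather than one-hot vectors. The sketch below shows, for a single sample, that the sparse and one-hot forms compute the same quantity. It is a simplified illustration under stated assumptions: the function names mirror the Keras loss names but are plain Python written for this example, and the clipping and batch averaging a real framework applies are left out.

```python
import math


def sparse_categorical_crossentropy(label, probabilities):
    """Loss for one sample: negative log of the probability given to the true class."""
    return -math.log(probabilities[label])


def categorical_crossentropy(one_hot, probabilities):
    """Same loss, but with the label supplied as a one-hot vector."""
    return -sum(t * math.log(p) for t, p in zip(one_hot, probabilities))


# Example output of a 2-unit softmax layer for one input.
probabilities = [0.25, 0.75]

sparse_loss = sparse_categorical_crossentropy(1, probabilities)
dense_loss = categorical_crossentropy([0, 1], probabilities)

print(math.isclose(sparse_loss, dense_loss))  # True
```

The practical difference is only in how labels are stored: integer labels avoid building a one-hot matrix, which matters when the number of classes is large.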

Keras:

Keras, on the other hand, is a user-friendly neural network library written in Python. This high-level API runs on top of TensorFlow, providing an easier and quicker interface for developing and testing. Some major advantages of Keras include:

- User-friendliness – Keras is designed for human beings, not machines, encapsulating complexity for a better user experience.

- Modularity – In Keras, a model can be understood as a sequence or a graph of standalone, fully-configurable modules.

- Easy Prototyping – Its simplicity makes it quick to go from an idea to a working model.

- Broad Adoption – Keras is used in both academia and industry, and it supports convolutional networks, recurrent networks, and combinations of the two, among others.

Here’s an example of building the same simple model using Keras:

    import keras
    from keras.models import Sequential
    from keras.layers import Dense

    # Same architecture as the TensorFlow example: 3 input features, 2 output classes
    model = Sequential([
        Dense(5, activation='relu', input_shape=(3,)),
        Dense(2, activation='softmax')
    ])

    model.compile(optimizer='adam',
                  loss='sparse_categorical_crossentropy',
                  metrics=['accuracy'])

Going by these examples, it’s evident that Keras provides a simpler and more comprehensible syntax, which is an advantage when dealing with small datasets and developing smaller models. Things change when large-scale models are considered: here, TensorFlow brings robustness and scalability into play, making it powerful for intricate computations and model development. This also suggests why the combination of TensorFlow with Keras often gives the best result. It allows for fast and convenient modeling with Keras while retaining the power of TensorFlow for advanced and demanding tasks.

Thus, combining TensorFlow’s ability to facilitate sophisticated models and Keras’s user-friendly interface makes for a powerful pairing in machine and deep learning applications.

In the field of machine learning and deep learning, TensorFlow and Keras play pivotal roles in facilitating easy model design and implementation. TensorFlow, an open-source library introduced by the Google Brain team, is ideal for complex numerical computations. Keras, a high-level neural networks API written in Python, is well known for enabling fast experimentation with deep neural networks. While it can run on top of TensorFlow, migrating from pure TensorFlow code to Keras may be a bit daunting. Hence, let’s dive into some helpful tips for a smooth transition.

An initial yet vital step during this shift is becoming familiar with the Keras API. Understanding what it can do is the foundation for using it effectively.

import tensorflow as tf

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense

model = Sequential([

Dense(32, activation='relu', input_shape=(10,)),

Dense(32, activation='relu'),

Dense(10, activation='softmax')])

In the sample code above, you can see how Keras reduces complexity by letting you stack layers into your model like Lego blocks.

Another key advantage of Keras over lower-level TensorFlow code is its user-friendliness. Keras also provides easy-to-use APIs for complicated processes such as data augmentation.

For example:

tf.keras.preprocessing.image.ImageDataGenerator(horizontal_flip=True,

width_shift_range = 0.2,

height_shift_range = 0.2,

rotation_range=30)

In contrast, accomplishing the equivalent task in TensorFlow requires quite a few more lines of code and understanding of underlying mathematical transformations. This showcases that integrating Keras into your TensorFlow code effectively reduces time and complexity.
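To give a sense of the transformations involved, here is a minimal pure-Python sketch of two of them: a horizontal flip and a horizontal shift. It is an illustration only. The helper names `horizontal_flip` and `shift_right` are hypothetical, images are represented as nested lists of pixel values, and the random sampling and fill behaviour of `ImageDataGenerator` are not reproduced.

```python
def horizontal_flip(image):
    """Mirror an image (a list of pixel rows) left to right."""
    return [row[::-1] for row in image]


def shift_right(image, pixels, fill=0):
    """Shift every row right by `pixels`, padding the vacated columns with `fill`."""
    width = len(image[0])
    pixels = max(0, min(pixels, width))  # keep the shift within the image
    return [[fill] * pixels + row[:width - pixels] for row in image]


image = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
]

print(horizontal_flip(image))  # [[4, 3, 2, 1], [8, 7, 6, 5]]
print(shift_right(image, 1))   # [[0, 1, 2, 3], [0, 5, 6, 7]]
```

A full augmentation pipeline would also need rotation, vertical shifts, and per-batch random parameters, which is the work the one-line generator call takes care of.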

Moreover, to make the most of both tools, it is crucial to understand how they interoperate. This comes in handy when you have parts of your model developed in TensorFlow and want to leverage Keras functionality for other components.

Observe the following code:

    num_classes = 10  # placeholder value; set this to the number of classes in your dataset

    base_model = tf.keras.applications.ResNet50(weights='imagenet',
                                                include_top=False)

    # Freeze the pretrained layers so only the new head is trained
    for layer in base_model.layers:
        layer.trainable = False

    x = base_model.output
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    predictions = tf.keras.layers.Dense(num_classes, activation='softmax')(x)

    model = tf.keras.Model(inputs=base_model.input, outputs=predictions)

Here, we incorporate a pretrained Keras model (ResNet50) into our existing TensorFlow code. This compatibility between the two is what makes migrating from one to the other seamless.

Furthermore, Keras provides the benefit of allowing model serialization and deserialization, making it suitable for saving and loading trained models without recompiling them.

As evidenced by:

# Save the model

model.save('path_to_my_model.h5')

# Load the model

from tensorflow.keras.models import load_model

new_model = load_model('path_to_my_model.h5')

In comparison, saving and restoring a model with lower-level TensorFlow APIs typically takes more manual steps.

However, some cases remain tricky, such as custom layers and models created in TensorFlow that won’t work out of the box with Keras. These issues can usually be handled by wrapping your custom implementation in a Keras layer and using TensorFlow ops within it.

For instance:

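    class CustomLayer(tf.keras.layers.Layer):
        def __init__(self, **kwargs):
            # Add your own constructor arguments here; standard Layer
            # arguments (e.g. name) are forwarded to the base class
            super().__init__(**kwargs)

        def call(self, inputs):
            # Use any TensorFlow operation you need
            return tf.nn.relu(inputs)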

Remember, this interoperability is essential as it lets you keep your old TensorFlow code (if necessary) and progressively envelop sections with Keras APIs.

Finally, do not overlook resources such as the Google Machine Learning Bootcamp or Stack Overflow for real-life use cases and practical solutions during this migration. They can be excellent guides to better ways of rewriting TensorFlow code using Keras.

Like anything new, shifting paradigms may seem overwhelming at first. However, a careful and curious approach will lead to a successful migration from TensorFlow to Keras, letting you experience the best of both worlds.

Given the vast array of programming libraries, choosing the right one for data analysis and predictive modeling can be a daunting task, especially when the project at hand involves complex computations such as machine learning algorithms and large-scale data processing. Two prominent names in this field are TensorFlow and Keras. Used individually, each offers significant benefits. Combining them, however, can take your machine learning projects to new heights.

TensorFlow

Developed by Google, TensorFlow has emerged as a highly popular open-source software library for numerical computation using data flow graphs. It’s been extensively used for diverse applications from neural networks to quantum computing. Its ability to process large data sets across hundreds of multi-GPU servers makes it a good fit for any sizeable computational project.

Keras

Unlike TensorFlow, which is suitable for complex mathematical operations, Keras is primarily used for deep learning models. It’s noted for its ease of use, making deep learning accessible to both beginners and experts alike. Keras abstracts many details involved with building a deep learning model, allowing you to construct advanced models just using a few lines of code.

The benefits of combining TensorFlow with Keras are numerous:

Compatibility

Simply put, Keras and TensorFlow complement each other well. Keras was initially designed to be user-friendly, while TensorFlow targeted performance and scalability. The TensorFlow team recognized the benefits of including a high-level API and integrated Keras into TensorFlow as part of the TensorFlow 2.0 release.

Now, developers do not have to choose between the simplicity of Keras and the power of TensorFlow. They can enjoy the best of both worlds by using Keras as an interface for TensorFlow. This allows developers to use Keras’ simple APIs to define layers, losses, metrics and optimizers and still leverage TensorFlow’s power to run computations efficiently on CPUs, GPUs or TPUs.

An example would be defining a simple sequential model in Keras and using TensorFlow for training and evaluating it:

import tensorflow as tf

model = tf.keras.Sequential()

# Adds a densely-connected layer with 64 units to the model:

model.add(tf.keras.layers.Dense(64, activation='relu'))

# Add another:

model.add(tf.keras.layers.Dense(64, activation='relu'))

# Add an output layer with 10 output units:

model.add(tf.keras.layers.Dense(10))

model.compile(optimizer=tf.keras.optimizers.Adam(0.01),

loss=tf.keras.losses.CategoricalCrossentropy(from_logits=True),

metrics=['accuracy'])

# Train and evaluate.

model.fit(data, labels, batch_size=32, epochs=10)

model.evaluate(test_data, test_labels, batch_size=32)
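The example above ends in a `Dense(10)` layer with no activation and passes `from_logits=True` to the loss, so the loss receives raw scores (logits) rather than probabilities. The pure-Python sketch below illustrates what that flag means for a single sample: cross-entropy computed directly from logits matches cross-entropy computed on the softmax of those logits. The function names are hypothetical and this is not the Keras implementation.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def crossentropy_from_logits(one_hot, logits):
    """Cross-entropy computed directly on logits via the log-sum-exp identity."""
    peak = max(logits)
    log_sum_exp = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return -sum(t * (z - log_sum_exp) for t, z in zip(one_hot, logits))


def crossentropy_from_probabilities(one_hot, probabilities):
    """Cross-entropy computed on probabilities that were already normalized."""
    return -sum(t * math.log(p) for t, p in zip(one_hot, probabilities))


logits = [2.0, 0.5, -1.0]
target = [1, 0, 0]

loss_a = crossentropy_from_logits(target, logits)
loss_b = crossentropy_from_probabilities(target, softmax(logits))

print(math.isclose(loss_a, loss_b))  # True
```

Working from logits avoids taking the logarithm of a probability that has been rounded to zero, which is one reason to leave the softmax out of the final layer and let the loss handle it.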

Greater Flexibility

Pairing TensorFlow with Keras gives developers greater flexibility. While Keras simplifies the process of building deep learning models, TensorFlow’s capabilities can handle a broader scope of complex calculations. By scaling and extending the work you’ve done with Keras, TensorFlow allows for further customization and precision.

Seamless Workflow

Merging TensorFlow with Keras results in a seamless workflow: developing, validating, configuring, running, and debugging all take place within a single ecosystem. You avoid switching contexts and the potential conflicts that may arise from using different tools for these tasks.

It’s clear that the combination of Keras’ user-friendliness and TensorFlow’s scalability provides an excellent environment for building, testing, and deploying machine learning models. Hence, mastering the combination of these two tools can significantly enhance your machine learning work.

Let’s now dive into the details of performance metrics and how they are used to evaluate efficiency when using TensorFlow with Keras, keeping in mind TensorFlow’s compatibility with Keras.

The initial focus should be on the TensorFlow-Keras interface. TensorFlow is an open-source library developed by the Google Brain team and is widely used for machine and deep learning tasks. Keras, on the other hand, serves as a user-friendly neural network library written in Python. A notable feature of Keras is its capability to run on top of TensorFlow, allowing users to write simple and intuitive code whilst benefiting from the power of TensorFlow underneath.

Performance metrics are where this connection becomes concrete: they are functions you call within the Keras environment, but the underlying computation is carried out by TensorFlow.

When evaluating a model’s effectiveness, metrics like accuracy, precision, recall, and AUC-ROC come into play. Here is an example of how a custom metric such as recall can be defined and passed in when compiling a model in Keras:

    from keras import backend as K

    def recall(y_true, y_pred):
        # Count predicted positives that are actually positive
        true_positives = K.sum(K.round(K.clip(y_true * y_pred, 0, 1)))
        # Count all actual positives
        possible_positives = K.sum(K.round(K.clip(y_true, 0, 1)))
        # K.epsilon() guards against division by zero
        return true_positives / (possible_positives + K.epsilon())

    model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy', recall])

This shows how Keras capitalizes on TensorFlow’s computational capabilities to calculate the recall.

The table below summarizes some standard performance metrics frequently used in AI:

| Metrics | Description |
|---|---|
| Accuracy | Ratio of correct predictions to total predictions made |
| Precision | Ratio of correctly predicted positive observations to the total predicted positives |
| Recall (Sensitivity) | Ratio of correctly predicted positive observations to all observations in the actual positive class |
| AUC-ROC | Area under the Receiver Operating Characteristic curve; measures how well the model distinguishes between classes |
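The first three definitions in the table can be checked by hand with a few lines of plain Python. The sketch below assumes binary labels encoded as 0 and 1 and uses hypothetical helper names; AUC-ROC is left out because it needs predicted scores rather than hard 0/1 predictions.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for binary labels (0 or 1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, tn, fp, fn


def accuracy(tp, tn, fp, fn):
    """Correct predictions divided by all predictions."""
    return (tp + tn) / (tp + tn + fp + fn)


def precision(tp, fp):
    """Correct positive predictions divided by all positive predictions."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """Correct positive predictions divided by all actual positives."""
    return tp / (tp + fn) if (tp + fn) else 0.0


y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 0, 0]

tp, tn, fp, fn = confusion_counts(y_true, y_pred)
print(tp, tn, fp, fn)                 # 2 3 1 2
print(accuracy(tp, tn, fp, fn))       # 0.625
print(round(precision(tp, fp), 3))    # 0.667
print(recall(tp, fn))                 # 0.5
```

The example data is chosen so that the three metrics differ, which shows why accuracy alone can hide how a model treats the positive class.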

Efficiency in AI relates to speed and resource utilization: operations should take less time and fewer resources without compromising performance. TensorFlow’s tf.function comes in handy for this purpose. By wrapping compute-intensive sections of code with tf.function, you let TensorFlow optimize the computation through graph execution.

Example:

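    # Assumes model, loss_object, and optimizer are defined elsewhere
    @tf.function
    def train_step(imgs, lbls):
        # Record the forward pass so gradients can be computed
        with tf.GradientTape() as tape:
            predictions = model(imgs)
            loss = loss_object(lbls, predictions)
        # Compute gradients of the loss and apply them to the model's weights
        gradients = tape.gradient(loss, model.trainable_variables)
        optimizer.apply_gradients(zip(gradients, model.trainable_variables))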

Overall, TensorFlow’s compatibility with Keras perfectly blends simplicity with power, providing an effective platform to create robust neural networks and deliver enhanced AI solutions.

From a coding perspective, it’s essential to know the compatibility of TensorFlow with Keras as both are commonly used libraries in machine learning. TensorFlow is a free and open-source software library for dataflow, differentiable programming and machine learning applications such as neural networks. It was developed by Google and released in 2015.

On the other hand, Keras is a high-level API written in Python built on top of TensorFlow, Theano, and CNTK. It was developed as a user-friendly interface for artificial neural networks and can leverage TensorFlow’s capabilities.

    import tensorflow as tf
    from tensorflow import keras

    print(tf.__version__)
    print(keras.__version__)

The code snippet above will display the versions of TensorFlow and Keras installed in your environment. With that information, you can ensure you’re working within a compatible environment.
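If you want to check versions without importing the libraries (for example, in an environment where one of them may be missing), the standard library can read the installed package metadata instead. This is a minimal sketch: `installed_version` is a hypothetical helper name, and it assumes the packages were installed under the distribution names `tensorflow` and `keras`, which may not hold for variant builds.

```python
from importlib import metadata


def installed_version(package_name):
    """Return the installed version string of a package, or None if it is absent."""
    try:
        return metadata.version(package_name)
    except metadata.PackageNotFoundError:
        return None


for name in ("tensorflow", "keras"):
    print(name, installed_version(name) or "not installed")
```

Reading metadata this way avoids the start-up cost of importing TensorFlow and does not fail when a package is not present.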

The merger of the two entities, TensorFlow and Keras, has been a significant boon for the AI and ML community because:

- TensorFlow provides multiple levels of abstraction which allows individuals to build and train models.

- Keras provides a higher level of simplicity when writing code. By using it, users can remove many cumbersome aspects of model creation.

- The ability to run Keras on TPUs (Tensor Processing Units), hardware accelerators specialised for machine learning tasks, comes from its integration in TensorFlow. This saves time and resources during model training.

In conclusion, it is important to understand that TensorFlow 2.0 integrated the Keras API directly into its codebase under the name tf.keras. An independent Keras package still exists, but TensorFlow’s implementation of Keras (tf.keras) is the recommended focus because:

- tf.keras is better maintained and has better integrations with TensorFlow features (for instance, eager execution).

- It supports all major types of neural networks.

- It integrates tightly with the rest of the TensorFlow ecosystem, including tf.data.

In essence, if you are creating a new project, it is highly recommended to use tf.keras as it is more up-to-date and versatile. GitHub repositories related to this topic include: Keras-Team/Keras, and TensorFlow/TensorFlow.

This deep-rooted intertwinement of TensorFlow and Keras makes understanding TensorFlow’s compatibility with Keras integral for coders in the fields of Artificial Intelligence and Machine learning, thus providing them with versatile, powerful tools for their projects.