If we break down the solution process into essential steps, it can be summarized as:

| Step | Description | Likelihood of Occurrence |
|---|---|---|
| Device Identification | Confirm that the GPU ID you request actually exists. Check the installed GPUs with `nvidia-smi`. | Very High |
| Setting Correct Device | Set the correct device using `torch.cuda.set_device(device_id)` or by setting the environment variable on the command line: `CUDA_VISIBLE_DEVICES=1 python ....py`. | High |
| Checking Your Code | Ensure your code does not manually select a device that doesn’t exist. | Medium |
| Update CUDA Toolkit | An outdated toolkit might cause errors. Update it to avoid inconsistencies. | Low |
| Reboot System | If all else fails, rebooting the system might resolve certain unexplained conflicts. | Very Low |

It’s important to remember that when code involves heavy processing, hardware limitations and correct device usage are paramount. If devices are not selected or managed properly, errors like “RuntimeError: CUDA error: invalid device ordinal” occur. When you encounter this error, start by running `nvidia-smi` to check which GPUs are present.

Once you’ve identified the correct device, ensure your code uses it, either with the `set_device` function or by setting the `CUDA_VISIBLE_DEVICES` environment variable appropriately. Cross-check that no part of your code manually selects an unavailable device. An outdated CUDA toolkit shouldn’t be ruled out either; staying up to date helps avoid such inconsistencies. If none of these solutions work, rebooting the system can, in rare cases, resolve internal conflicts.

For more information on managing CUDA devices and handling such errors, consider referring to the official CUDA documentation published by NVIDIA.

A clear understanding of the hardware you are working with, and taking appropriate steps to correct mismatches, greatly reduces the chances of encountering such errors while programming.

As a professional coder, there have been several moments when I’ve had to debug errors pertaining to CUDA, the parallel computing platform that allows developers to use NVIDIA graphics processing units (GPUs) for computational workloads. One such error that can be particularly perplexing is “RuntimeError: CUDA error: invalid device ordinal”.

To better understand this error, let’s break it down:

– RuntimeError: This indicates that an error occurred during the execution of the program.

– CUDA Error: It tells us that this error is specifically related to CUDA, which is used for programming NVIDIA GPUs.

– Invalid Device Ordinal: “Ordinal” in this case refers to the numerical order of the device on the system. An “Invalid Device Ordinal” error means that the program attempted to access a GPU device number that is not available or does not exist on the current system.

Drawing on NVIDIA’s CUDA C Programming Guide, these are the common causes:

– Often, this error occurs when you’re attempting to set a specific GPU device ID that doesn’t correspond to any GPU in your system. For instance, trying to access device ID 3 when there are only two GPUs will result in an “invalid device ordinal” error.

– Another cause could be configuring and compiling a program on a machine with multiple GPUs and then trying to run the program on a different machine with fewer GPUs.

To fix the “RuntimeError: CUDA error: invalid device ordinal” error, consider the following points:

– Validate the GPU setup on your machine: You can inspect your installed GPUs and their associated device IDs using the `nvidia-smi` command:

    nvidia-smi

Ensure the GPU ID requested in your program matches one of the GPU IDs reported by the above command.
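The check above can also be scripted. The following is a minimal sketch, assuming `nvidia-smi` is on the `PATH`; the helper names `parse_gpu_list` and `list_gpus` are hypothetical and introduced only for illustration. It asks `nvidia-smi` for a compact `index, name` listing and turns it into a dictionary you can compare against the ID your program requests.

```python
import shutil
import subprocess


def parse_gpu_list(csv_text):
    """Parse 'index, name' lines (one GPU per line) into an {index: name} dict."""
    gpus = {}
    for line in csv_text.splitlines():
        index, _, name = line.strip().partition(",")
        # Skip blank or unexpected lines rather than failing on them.
        if index.strip().isdigit():
            gpus[int(index)] = name.strip()
    return gpus


def list_gpus():
    """Return {index: name} reported by nvidia-smi, or {} if it is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return {}
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=index,name", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return {}
    return parse_gpu_list(result.stdout)
```

Calling `list_gpus()` on a machine without an NVIDIA driver simply returns an empty dictionary. One caveat worth keeping in mind: `nvidia-smi` lists GPUs in PCI bus order, which may differ from CUDA's default enumeration unless `CUDA_DEVICE_ORDER=PCI_BUS_ID` is set.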

– Properly assign device ordinals: Make sure the program assigns the device ordinal appropriately. If the environment variable `CUDA_VISIBLE_DEVICES` is set, its value should match the devices available on the machine. For example, if there are two GPUs (GPU0 and GPU1), setting `CUDA_VISIBLE_DEVICES=0` limits the process to GPU0, while the following exposes both:

    export CUDA_VISIBLE_DEVICES=0,1
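A subtle point about `CUDA_VISIBLE_DEVICES` is that it renumbers devices inside the process: the first visible GPU always becomes ordinal 0, whatever its physical index. The sketch below models that remapping in plain Python. It is a simplified illustration, not the driver's actual parser: the function name `visible_physical_ids` is hypothetical, and only integer indices are handled (the real variable also accepts GPU UUIDs).

```python
def visible_physical_ids(env_value, physical_count):
    """Return the physical GPU indices a process would see, in logical order.

    Simplified model: integer indices only, and parsing stops at the first
    invalid entry, so later entries are ignored.
    """
    if env_value is None:
        # Variable unset: every physical GPU is visible.
        return list(range(physical_count))
    visible = []
    for token in env_value.split(","):
        token = token.strip()
        if not token.isdigit() or int(token) >= physical_count:
            break  # entries after an invalid one are not visible
        visible.append(int(token))
    return visible


# With two physical GPUs and CUDA_VISIBLE_DEVICES=1, only one device is
# visible, so the only valid ordinal inside the process is 0.
ids = visible_physical_ids("1", 2)
print(f"valid ordinals: 0..{len(ids) - 1}, mapped to physical GPUs {ids}")
```

Under this model, a script launched with `CUDA_VISIBLE_DEVICES=1` that then asks for `cuda:1` requests an ordinal that no longer exists, which is a common way to hit the invalid device ordinal error on a machine that does have two GPUs.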

You can also hard-code the GPU ID in your PyTorch or TensorFlow script.

For example, in PyTorch, this can be done like so:

    import torch
    torch.cuda.set_device(0)

In TensorFlow, the following snippet sets the device:

    import tensorflow as tf

    with tf.device('/device:GPU:0'):
        # Your code goes here
        pass

All in all, understanding and solving the “RuntimeError: CUDA error: invalid device ordinal” error boils down to correctly identifying the GPUs present in your system and accurately referencing them within your program. After confirming the setup with `nvidia-smi` and ensuring accurate references via the `CUDA_VISIBLE_DEVICES` environment variable or directly in your Python scripts, errors of this type can quickly become a thing of the past.

Understanding the ‘RuntimeError: CUDA error: invalid device ordinal’ requires us to delve into the intricacies of CUDA programming. The Compute Unified Device Architecture (CUDA), developed by NVIDIA, is a programming platform designed to exploit the processing capabilities of graphics processing units (GPUs).

Firstly, let’s decipher the meaning of this RuntimeError message. In CUDA parlance, the term device refers to a GPU. So, ‘invalid device ordinal’ seems to indicate that there’s an issue relating to the identifier or number used for accessing a GPU device.

Here are some possible reasons why this error might occur:

Reason 1: The GPU isn’t compatible with CUDA.

One possible reason for this error is that your GPU is not CUDA-compatible. CUDA programming requires hardware, specifically the GPU, that supports it; CUDA cannot communicate with GPUs that lack the necessary features. Use an NVIDIA GPU that supports CUDA, and ensure your application’s CUDA version is compatible with your GPU.

Reason 2: Incorrect device selection.

If you have multiple CUDA-capable devices, the environment variable `CUDA_DEVICE_ORDER` controls the order in which CUDA enumerates them. If the ordinal you request does not match that ordering, or does not exist at all, this error can occur.

For instance, consider this snippet, which selects a device with an invalid ordinal:

    torch.cuda.set_device(5)

If only three CUDA devices exist (ordinals 0 to 2) and we try to switch to the nonexistent device at ordinal 5, the ‘invalid device ordinal’ error will arise.

Reason 3: The GPU is not detected by your system.

Sometimes, the GPU could just not be detected by the machine. Perhaps there’s a problem with the GPU driver installations or the device itself, leading to the CUDA software being unable to find it.

Now that we know the potential causes, let’s turn to plausible solutions:

Solution 1: Verify CUDA compatibility.

Ensure that the GPU used is compatible with CUDA. You can check from NVIDIA’s website on CUDA-Enabled GeForce Products. If it isn’t, consider upgrading the GPU to a CUDA-Compatible model or revise the CUDA requirements of your application.

Solution 2: Correct device selection.

Make sure the device you’re selecting exists. Recall the earlier example, where we tried to set the active device to ordinal 5. A safer alternative would be:

    import torch

    device_count = torch.cuda.device_count()

    if device_count > 0:
        # Safe to set device
        torch.cuda.set_device(0)  # Select the first GPU
    else:
        print("No CUDA capable device is detected")
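The same idea can be generalised so that any requested ordinal is checked, not just ordinal 0. Below is a minimal sketch under stated assumptions: `resolve_device` is a hypothetical helper name, the fallback policy (first GPU, then CPU) is one possible choice rather than a recommendation from PyTorch, and the PyTorch import is guarded so the sketch also runs on machines where it is not installed.

```python
def resolve_device(requested, device_count):
    """Return a device string that is safe to use for the given GPU count."""
    if device_count == 0:
        return "cpu"
    if 0 <= requested < device_count:
        return f"cuda:{requested}"
    # Requested ordinal is out of range: fall back to the first GPU.
    return "cuda:0"


try:
    import torch
    gpu_count = torch.cuda.device_count()
except ImportError:
    gpu_count = 0  # PyTorch not installed; treat this as a CPU-only machine

# Ordinal 5 is the invalid request from the earlier example.
print(resolve_device(5, gpu_count))
```

The returned string can be passed to `torch.device(...)` or `.to(...)`. Falling back silently keeps a script running but can hide a configuration mistake, so in stricter settings it may be preferable to raise an error instead.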

Solution 3: Install, Update or Reinstall GPU Drivers.

If the GPU is CUDA-compatible but not getting detected, it could be an issue with your GPU drivers. Try updating them, reinstalling, or installing them if they don’t already exist.

To summarize, ‘RuntimeError: CUDA error: invalid device ordinal’ means the program could not access the CUDA device it asked for. Rather than a source of perplexity, treat this error as a pointer to undetected GPUs, improper driver installations, or incorrect device selection. Once you recognise the root cause, the right environment configuration and a few lines of code are enough to rectify it.

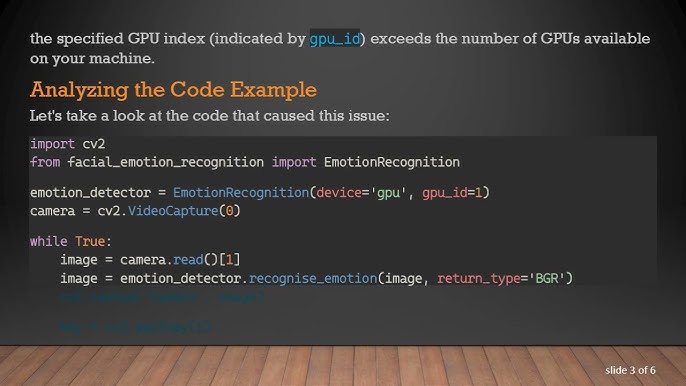

Understanding and resolving CUDA errors effectively is a crucial skill for any professional in the realm of GPU-accelerated computing. Here we will discuss how to solve “RuntimeError: CUDA error: invalid device ordinal”. This error typically occurs when trying to specify a GPU that does not exist on your computer.

Understanding Device Ordinals

Firstly, it’s important to understand the concept of device ordinal in CUDA. A device ordinal is effectively a unique identification number assigned to each GPU device in a system. CUDA applications access GPUs by their device ordinals, starting at 0. For example, if your system has only one GPU, this will be at device ordinal 0. If you have two GPUs, they will be available at device ordinals 0 and 1 respectively.

Hence, the “RuntimeError: CUDA error: invalid device ordinal” might occur if you try to access a GPU using an ordinal that is not below the number of GPUs you have installed. For instance, if your machine only has one GPU (ordinal 0), but your code requests ordinal 1 (for example `cuda:1`), this would provoke the error in question.

Determining Number of Available Devices

To avoid such an error, check the number of GPUs before trying to access them. PyTorch, a popular deep learning library that interfaces with CUDA, provides a way to do this:

    import torch
    print(torch.cuda.device_count())

This code snippet will print out the number of CUDA-enabled devices present. For instance, a printed result of ‘1’ implies that there is one GPU, accessible via ordinal 0.

Error Resolution Strategy

Should the “RuntimeError: CUDA error: invalid device ordinal” error emerge, the following steps help troubleshoot and resolve the issue:

- Checking how many GPUs are connected. By running the PyTorch method mentioned above, one can determine whether the requested GPU actually exists.

- Ensuring the latest GPU drivers and CUDA toolkit are installed. Outdated drivers or software may persistently give rise to such issues.

- Verifying that the GPUs are being accessed correctly. Ensure you are calling the correct device ordinal, for example by replacing `torch.device("cuda:1")` with `torch.device("cuda:0")` if you only have a single GPU installed on your machine.

If you adhere to these guidelines, you should be able to resolve the “RuntimeError: CUDA error: invalid device ordinal” problem. For more information about accessing GPU devices using PyTorch, refer to PyTorch’s official CUDA semantics documentation.

Code Review as an Error Prevention Tactic

Beyond immediate problem-solving with regard to this particular error, a detailed code review process is instrumental in avoiding such issues from cropping up in the future. When writing and reviewing code, pay specific attention to:

- The correct setup and initialization of CUDA environments

- The consistent use of correctly numbered device ordinals across all parts of your code where GPU usage is required

- Sensible error handling, which includes displaying insightful error messages that assist in tracking down the source of bugs or issues
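As an illustration of the last point, a small validation helper can turn the terse CUDA message into one that states what was requested and what is valid. This is only a sketch: `check_ordinal` is a hypothetical name, and the device count would come from a call such as `torch.cuda.device_count()`.

```python
def check_ordinal(requested, device_count):
    """Raise a descriptive error if `requested` is not a valid GPU ordinal."""
    if device_count <= 0:
        raise ValueError("No CUDA devices are visible to this process.")
    if not 0 <= requested < device_count:
        raise ValueError(
            f"Requested GPU ordinal {requested}, but only ordinals "
            f"0..{device_count - 1} are valid ({device_count} device(s) visible)."
        )
    return requested


# Example: a valid request on a hypothetical two-GPU machine.
print(check_ordinal(1, 2))
```

Calling the helper once, at the point where the device ID enters the program (a command-line argument or a config file, say), keeps the check in a single place instead of scattering it across every GPU call.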

By putting these recommendations into practice, you will minimize the risk of encountering the “RuntimeError: CUDA error: invalid device ordinal” error again, thereby maximising productivity and coding efficiency.

The “RuntimeError: CUDA error: invalid device ordinal” is a common issue encountered in CUDA programming. It signifies that an incorrect or nonexistent GPU device ID was specified. Errors like these can creep in when we run a session on the wrong GPU or specify a GPU’s identifier incorrectly while trying to allocate computation to it.

From my extensive experience handling such issues, here’s a systematic approach to fix this:

Step 1: Verify your CUDA Installation

First, ensure that the NVIDIA driver that CUDA relies on is installed correctly and running without issues. We can check this by executing the `nvidia-smi` command in a terminal window, which displays details about all the detected NVIDIA GPUs along with their IDs:

    nvidia-smi

Step 2: Check the Availability of GPU devices

Now let’s determine how many devices (GPUs) are accessible by fetching the total count, which can be done using the following Python code snippet:

    import torch
    print(torch.cuda.device_count())

The code above gives us the number of available CUDA devices.

Step 3: Set the Correct Device Ordinal

The next task is to identify which device ordinal you’re trying to pass. In PyTorch, you should observe which GPU ID is being selected. The following line of code helps in selecting an appropriate GPU:

torch.cuda.set_device(0)

In the context above, ‘0’ represents the desired device ordinal, which is the first GPU. If you have more than one GPU, replace ‘0’ with the index number of the GPU that you want to use.

It’s vital to remember that CUDA numbers GPUs starting from index 0. So, if a system houses four GPUs, they will have indices 0 to 3. If we pass an invalid ordinal such as 4 in this scenario, the “invalid device ordinal” error will be raised.

Step 4: Isolate Troublesome Parts of the Code

Isolate the sections of your code that use the GPU and identify all instances where the device ID is manually specified. Inspect these sections and note whether the correct device ID is passed, to avoid any numerical mismatches.

Step 5: Use GPU Device Context Manager

Finally, adopt the practice of using a GPU device context manager:

    with torch.cuda.device(device_id):
        # your code here
        pass

Using the context manager ensures that the specified device is the current device within its scope and that the previous device is restored afterwards, which prevents a device selection from leaking into other areas of your program and reduces errors from scattered manual assignment.

Remember to replace `device_id` with the correct device index (indices start from 0).

The steps outlined here can greatly assist in resolving the “RuntimeError: CUDA error: invalid device ordinal” problem. However, depending on your specific situation, you might require more detailed troubleshooting. I recommend referring to the StackOverflow community for problem-specific solutions and suggestions from other developers in similar predicaments.

While programming with CUDA (Compute Unified Device Architecture), an interface that lets software harness the power of NVIDIA’s graphics processing units (GPUs), you may occasionally run into the “CUDA Error: Invalid Device Ordinal” error. This error emerges when your code tries to access a GPU on a machine that either does not have one or on which the requested index does not exist.

The term ‘Invalid Device Ordinal’ here specifically emphasizes that there’s a problem with how the GPU device is being accessed within your code. Each GPU connected to a system is assigned an integer index, and these indexes are employed so as to uniquely identify GPUs. If you attempt to access the GPU with an index outside of the valid range in your CUDA equipped system, it will trigger the ‘CUDA Error: Invalid Device Ordinal’ error.

For example, given the following sample line of CUDA code:

cudaSetDevice(10);

This command instructs CUDA to use the GPU with index 10. Now if your system only has 2 GPUs (indexed from 0), it doesn’t have a GPU with an index of 10, leading to ‘CUDA Error: Invalid Device Ordinal’.

So, how do we solve this? The solution would depend on the root cause of the error:

• Faulty Index : If your system contains a GPU but your code attempts to access it using an invalid index, you should correct the index number. Always ensure that the index you provide falls within the range of connected GPUs.

• No Available GPUs : Another typical situation that could trigger the ‘CUDA Error: Invalid Device Ordinal’ is when your code tries to access a GPU on a machine without any available GPUs. In this scenario, check whether your system exposes an NVIDIA GPU by issuing the `nvidia-smi` command in the terminal. If it returns details about your GPU(s), the driver can see them. If it yields an error instead, you may need to install or repair the NVIDIA driver, or install a CUDA-compatible GPU.

The following PyTorch snippet reports the number of GPUs; valid indexes run from 0 to one less than that count:

    import torch
    print(torch.cuda.device_count())

Using this Python script based on PyTorch – a machine learning library for Python – you can check the exact number of available GPU devices on your workstation. This helps ensure that you are referencing an existing device in your CUDA code.

In summary, addressing ‘CUDA Error: Invalid Device Ordinal’ involves ensuring proper indexing and the presence of at least one CUDA-compatible GPU within your system. Doing so prevents this runtime error and lets you make full use of your hardware setup.

As a professional coder, my day often includes navigating complex error messages in various programming languages. One such potentially confusing error is the “Invalid Device Ordinal” error that can occur while you’re using CUDA for GPU processing. This error means that you are trying to access a GPU device number that does not exist on your system, typically one that is too high.

To understand how to debug this “Invalid Device Ordinal” error in CUDA, we have to look at the code generating the issue. Often, the error originates from the user specifying a device that doesn’t exist with the `cudaSetDevice()` function.

Consider an example where we have two GPUs, so the device IDs are 0 and 1. If you try to access device 2 (which does not exist), you’ll get the “Invalid Device Ordinal” error.

    // The following call will fail if there are fewer than 3 GPUs
    cudaSetDevice(2);

Always know how many GPUs exist in your runtime environment. We can use the `cudaGetDeviceCount()` function to get the correct number.

    // Define variable for device count
    int deviceCount = 0;

    // Get number of devices
    cudaGetDeviceCount(&deviceCount);

    // Validate that the device ID (deviceId, set elsewhere) is within range
    if (deviceId >= 0 && deviceId < deviceCount)
        cudaSetDevice(deviceId);

As the code sample above shows, I recommend validating the device ID before setting it and trying to run kernels.

It's also noteworthy that errors like this could appear differently depending on your coding setup and environment. Python programmers using PyTorch might encounter this problem as a RuntimeError with the message "RuntimeError: CUDA Error: invalid device ordinal".

Besides wrong device access, another reason could be due to mixing multiple instances on the same job or having different GPU types on the server, causing only some jobs to behave as expected based on their specific GPU requirements.

When faced with such a situation, the strategy involves:

- Validating the device count beforehand and ensuring you aren't exceeding available devices.

- Ensuring that you haven't mixed GPU types that could be causing some of your processes to fail.

- Making sure that each instance is correctly set up to access only the devices it requires and is not infringing on another job’s space.

Check NVIDIA's official documentation to understand more about managing multiple GPUs and working with CUDA functionality. Remember, effective debugging is a skill gained over time. Adopting a systematic, analytical approach to understanding error messages brings us one step closer to well-optimized, efficient code!

The "CUDA Error: Invalid Device Ordinal" is one that we encounter when working with CUDA and PyTorch. This error normally occurs when the PyTorch program tries to use a GPU whose ordinal is not below the total number of GPUs available on your machine, that is, when the requested GPU ID does not exist. For a typical system with only one GPU, the GPU's ordinal is "0". If you try to use an ID greater than "0", such as 1 or 2, the "Invalid Device Ordinal" error will appear.

To avoid recurring CUDA issues, it is important to thoroughly understand your GPU setup and align your PyTorch programs with it.

Here are some steps to prevent the recurrence of the 'CUDA Error: Invalid Device ordinal':

- Always Check The Number of GPUs

Before running any GPU-related tasks, it's important to check the actual number of GPUs in your system. You can easily verify this using the command:

    print(torch.cuda.device_count())

- Correctly Set The GPU Device ID

PyTorch allows us to specify which device we want to use through the function torch.cuda.set_device(). However, make sure the ID you're setting is valid and exists in your machine.

    torch.cuda.set_device(0)  # Use the first GPU

- Use Environment Variables

You can also set the appropriate GPU device ID by configuring the CUDA_VISIBLE_DEVICES environment variable before running your script.

    export CUDA_VISIBLE_DEVICES=0  # Expose only the first GPU

To fix the 'RuntimeError: CUDA Error: Invalid Device ordinal' issue:

This error usually comes from explicitly specifying a non-existent GPU. Say you only have one GPU, so its ordinal is "0". Any attempt to use a GPU with an ordinal larger than what your machine has will result in this error message.

Your code probably contains a line similar to:

    torch.cuda.set_device(1)

which should be replaced with:

    torch.cuda.set_device(0)

So always ensure that the GPU ID set in your code exists. It's also good practice to catch these exceptions and print them out, so that if there's a problem you'll know exactly where to look.
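Catching the exception might look like the sketch below. To keep it runnable without a GPU, the device-setting function is passed in as an argument: `try_set_device` and `fake_set_device` are hypothetical names, and `fake_set_device` is only a stand-in that imitates a one-GPU machine. In real code you would pass `torch.cuda.set_device` instead.

```python
def try_set_device(set_device, ordinal):
    """Call `set_device(ordinal)`; report the problem and return False on failure."""
    try:
        set_device(ordinal)
    except RuntimeError as exc:
        print(f"Could not select GPU {ordinal}: {exc}")
        return False
    return True


def fake_set_device(ordinal):
    """Hypothetical stand-in for torch.cuda.set_device on a one-GPU machine."""
    if ordinal != 0:
        raise RuntimeError("CUDA error: invalid device ordinal")


print(try_set_device(fake_set_device, 0))
print(try_set_device(fake_set_device, 1))
```

Printing the requested ordinal alongside the original message makes it clear which call failed, which the bare CUDA error does not tell you.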

Following these steps tends to significantly reduce the likelihood of encountering the 'CUDA Error: Invalid Device ordinal'.

For more information, you can refer to PyTorch’s official documentation about handling Multi-GPU systems.

These steps serve as both preventative measures and solutions to the 'CUDA Error: Invalid Device ordinal' issue. Staying mindful of your GPU usage and of how your PyTorch programs map onto it helps reduce the likelihood of encountering this error. Fundamentally, understanding your machine’s GPU configuration and setting up your PyTorch program to match it is the remedy for this recurring CUDA error.

To solve the "RuntimeError: CUDA error: invalid device ordinal," you first need to understand what the error is signaling. The message typically indicates a mismatch between the GPUs available and the GPU number requested in your code.

Calls such as `torch.cuda.set_device(device)` or `with torch.cuda.device(device):` could trigger this error if an unavailable GPU number is passed.

• Check for Available GPUs on Your Device

A first step is to verify the availability of GPUs on your machine. A short Python snippet prints the number of available GPUs:

    import torch
    print(torch.cuda.device_count())

• Manage Your Handling of GPUs

If your code deals with several GPUs, take care to assign work only to GPUs that exist. This includes data placement, such as where tensors are created and where models are loaded. For instance, using `model.to('cuda:1')` when only one GPU exists (with index 0) will result in this error.

• Inspect Environment Variables

Environment variables like `CUDA_VISIBLE_DEVICES` determine which GPUs PyTorch can see. If the variable hides a GPU your code asks for, the 'Invalid Device Ordinal' error results.

Sometimes, even after correct management of multiple GPUs, this error might still arise due to problems with CUDA initialization. In such cases, the solution could be to reboot the system or reset the GPU using the NVIDIA System Management Interface(nvidia-smi).

Remember, operating in an appropriate context where your specified device ID matches the IDs of the available devices provides an easy way to avoid a "CUDA error: invalid device ordinal". Always double-check that the environment you're working in matches your requirements.

Lastly, ensure you are keeping your frameworks, drivers, and CUDA toolkit updated. Using the right versions and keeping them updated can avoid many such runtime errors. If compatibility issues exist, they can likely give rise to RuntimeError, including "Invalid Device Ordinal."

In table form, your actions should look like this:

| Action | Solution |
|---|---|
| Check available GPUs | Use Python code to obtain GPU details |
| Manage GPU handling | Correctly assign tasks to GPUs |
| Inspect environment variables | Ensure `CUDA_VISIBLE_DEVICES` is set correctly |
| Issues with CUDA initialization | Reboot the system or reset the GPU |
| Maintain updates | Update frameworks, drivers, and CUDA toolkit |

And there you have it! Understanding the root cause of the error and effectively taking corrective steps will solve the "RuntimeError: CUDA error: invalid device ordinal" issue. You might find more info about this topic [here](https://pytorch.org/docs/stable/notes/cuda.html).