ConvergenceWarning: LBFGS failed to converge (status=1): STOP: TOTAL NO. of ITERATIONS REACHED LIMIT


| Error Type | Cause | Solution |
|---|---|---|
| ConvergenceWarning: LBFGS failed to converge (status=1) | This occurs when the L-BFGS algorithm in a machine learning model has reached the maximum number of iterations without converging on a solution. | Increase the maximum number of iterations or adjust other hyperparameters that could help the model reach convergence. |

The table above has three columns: Error Type, Cause, and Solution. Its single row summarizes one typical problem: the L-BFGS algorithm failing to converge, which scikit-learn reports as “ConvergenceWarning: LBFGS failed to converge (status=1)”.

L-BFGS (Limited-memory Broyden-Fletcher-Goldfarb-Shanno) is an optimization algorithm in the family of quasi-Newton methods that approximates the Broyden-Fletcher-Goldfarb-Shanno (BFGS) algorithm using a limited amount of computer memory. It’s widely used in machine learning and data science models because of its lower memory requirements, especially for high-dimensional optimization problems.

When the model fails to converge and you get a warning like `ConvergenceWarning: LBFGS failed to converge (status=1): STOP: TOTAL NO. of ITERATIONS REACHED LIMIT`, it implies that the optimizer ended its process due to reaching the limit of iterations, but it did not find the minimum for the function being optimized, which was its primary task.

One prevalent cause for this warning relates to having a model hyperparameter setup that doesn’t allow efficient convergence within the given iteration limit. This means your model may require more time or additional steps to find a solution.

If you encounter this warning, increase the maximum number of iterations for model training, or adjust other hyperparameters that affect convergence. For scikit-learn’s L-BFGS solver the relevant ones are the regularization strength and the tolerance; a learning rate applies only to gradient-descent-based methods. Note, however, that a large number of iterations costs more computation, so it’s important to strike a balance between adequate model training and computational efficiency.
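
Before changing anything, it can help to confirm that the iteration limit really was hit. The following is a minimal sketch, assuming scikit-learn and NumPy are installed; the synthetic data, the deliberately tiny `max_iter=5`, and the variable names are illustrative choices, not part of any real project.

```python
import warnings

import numpy as np
from sklearn.datasets import make_classification
from sklearn.exceptions import ConvergenceWarning
from sklearn.linear_model import LogisticRegression

# Synthetic data with deliberately mismatched feature scales (illustrative only).
X, y = make_classification(n_samples=500, n_features=20, random_state=0)
X = X * np.logspace(0, 4, X.shape[1])

# A very low iteration cap, chosen to provoke the warning.
clf = LogisticRegression(solver="lbfgs", max_iter=5)

# Record warnings instead of printing them so they can be inspected.
with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")
    clf.fit(X, y)

hit_limit = any(issubclass(w.category, ConvergenceWarning) for w in caught)
print("ConvergenceWarning raised:", hit_limit)
print("iterations used:", clf.n_iter_, "with max_iter =", clf.max_iter)
```

Comparing `n_iter_` with `max_iter` after fitting is a quick way to tell whether the solver stopped because it ran out of iterations.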

Understanding `ConvergenceWarning: LBFGS failed to converge (status=1): STOP: TOTAL NO. of ITERATIONS REACHED LIMIT` requires a good grasp of what LBFGS is and why it might fail to converge.

What is LBFGS?

The Limited-memory Broyden–Fletcher–Goldfarb–Shanno algorithm (LBFGS) is a popular optimization strategy employed in machine learning and data analysis. It’s an iterative method for solving unconstrained non-linear optimization problems, and it specifically excels when dealing with large datasets due to its memory efficiency.

Causes of the ConvergenceWarning message

When you see the `ConvergenceWarning`, your model is essentially telling you that it has reached the maximum number of iterations defined and still has not found an optimal solution. Here are some major causes:

- Too Many Features: Having too many features compared to the number of instances can sometimes lead to convergence issues, a symptom often associated with the “curse of dimensionality”. A potential solution could be to reduce the dimensionality of your dataset.

- Highly Non-Linear Problem: If your problem is highly non-linear, LBFGS might struggle. You might want to explore other optimization algorithms that work better for highly non-linear problems.

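- High Learning Rate: With gradient-descent-style optimizers, a high learning rate can make the optimizer overshoot the minimum over and over, so it never settles on the optimal point. L-BFGS itself picks its step length with a line search, so scikit-learn’s `lbfgs` solver exposes no learning rate; for it, poor feature scaling and weak regularization play the comparable role. Tuning hyperparameters properly is important.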

Solutions to the ‘ConvergenceWarning’

There are several strategies generally employed to fix this warning. These include:

- Increase the Maximum Number of Iterations: Raise the `max_iter` option of your estimator to give the LBFGS solver more time to find an optimal solution.

      from sklearn.linear_model import LogisticRegression

      logistic = LogisticRegression(max_iter=10000)

- Data Scaling: Normalizing or standardizing the feature set can help all features contribute equally to the solution.

      from sklearn.preprocessing import StandardScaler

      scaler = StandardScaler()
      X_scaled = scaler.fit_transform(X)

- Use Different Solver: Changing the solver used by your model to something other than LBFGS can potentially overcome the convergence issue, though this must be done with the trade-offs of the different solvers in mind.

      logistic = LogisticRegression(solver='sag')

Ultimately, understanding the underlying cause of a `ConvergenceWarning` and acting appropriately for your specific context are key to overcoming issues related to `LBFGS failed to converge (status=1): STOP: TOTAL NO. of ITERATIONS REACHED LIMIT`. I hope these tips can help.

The `ConvergenceWarning: LBFGS failed to converge` warning is a common cautionary message indicating that the L-BFGS optimization algorithm has failed to satisfactorily optimize a function or model. There can be several reasons for its occurrence, and several ways to rectify the situation in Python.

One primary cause of L-BFGS convergence failure is the total number of iterations reaching its limit before convergence is achieved. In the scikit-learn library, there’s a `max_iter` parameter that you can increase to give the optimizer more chances to converge.

For instance, consider this code excerpt:

    from sklearn.linear_model import LogisticRegression

    clf = LogisticRegression(solver='lbfgs', max_iter=1000)
    clf.fit(X_train, y_train)

In this snippet, by setting `max_iter=1000`, you are allowing the L-BFGS solver 1,000 iterations to find the minimum of the objective function. If convergence isn’t reached within the specified number, you may encounter the `ConvergenceWarning`.

Another cause behind the warning is the inability of the L-BFGS method to deal efficiently with ill-conditioned problems. Ill-conditioned problems are those in which a minute change in the input causes a significant variation in the result. This explains why increasing `max_iter` sometimes does not solve the problem: the optimizer makes very slow progress through these problematic regions and may still not reach an optimum.
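
One rough way to see ill-conditioning, assuming it comes from mismatched feature scales, is to compare the condition number of the matrix X^T X before and after standardization. The sketch below uses only NumPy and synthetic data; X^T X is used here as a proxy for the curvature the optimizer faces, not as the exact Hessian of a logistic regression loss.

```python
import numpy as np

rng = np.random.default_rng(0)

# Three independent features whose scales differ by factors of 100 (illustrative).
X = rng.normal(size=(200, 3)) * np.array([1.0, 100.0, 10000.0])

# Standardize by hand: zero mean and unit variance per column.
X_std = (X - X.mean(axis=0)) / X.std(axis=0)

# A large condition number means the problem is badly conditioned.
cond_raw = np.linalg.cond(X.T @ X)
cond_std = np.linalg.cond(X_std.T @ X_std)

print(f"condition number, raw features:          {cond_raw:.3g}")
print(f"condition number, standardized features: {cond_std:.3g}")
```

A drop of several orders of magnitude after standardization suggests that scaling, rather than a higher iteration cap, is the more effective fix.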

The regularization parameter, termed `C` in scikit-learn’s logistic regression, is another contributing factor. `C` is the inverse of the regularization strength, so a high value means weaker regularization; this can lead to overfitting and to a flatter objective on which L-BFGS needs many more iterations to converge.

So, to possibly overcome the convergence issue, you could try reducing `C`, which strengthens the regularization.

Here’s an example code script:

    from sklearn.linear_model import LogisticRegression

    clf = LogisticRegression(solver='lbfgs', C=0.5, max_iter=1000)
    clf.fit(X_train, y_train)

In this case, we have set `C=0.5`, which strengthens the model’s regularization relative to the default `C=1.0` and may therefore help convergence.
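
To see how `C` affects the work the solver has to do, one option is to fit the same data with a few values and read `n_iter_` afterwards. This is a sketch on synthetic data; the chosen values of `C` and the generous `max_iter=5000` are arbitrary assumptions, and the counts will differ on real data.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import StandardScaler

# Synthetic, standardized data (illustrative only).
X, y = make_classification(n_samples=500, n_features=20, random_state=0)
X = StandardScaler().fit_transform(X)

# Map each C value to the number of L-BFGS iterations actually used.
iterations = {}
for C in (0.01, 1.0, 100.0):
    clf = LogisticRegression(solver="lbfgs", C=C, max_iter=5000).fit(X, y)
    iterations[C] = int(clf.n_iter_[0])

for C, n in iterations.items():
    print(f"C={C:<6} iterations used: {n}")
```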

You can also consider the feature scaling aspect. L-BFGS, like many other optimization algorithms, performs competently when the features are on the same scale. Thus, preprocessing your data through normalization or standardization methodologies could aid in mitigating convergence issues.

Here’s how you could implement feature scaling using StandardScaler from scikit-learn:

    from sklearn.preprocessing import StandardScaler

    scaler = StandardScaler()
    X_train_scaled = scaler.fit_transform(X_train)
    X_test_scaled = scaler.transform(X_test)

This adds an extra layer of processing before feeding the data to your logistic regression classifier, giving L-BFGS a better shot at converging successfully.
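
The scaling step and the classifier can also be bundled so that the scaler is always fitted on the training data only. The sketch below uses scikit-learn’s `make_pipeline`; the synthetic dataset and the split are assumptions made purely for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic data and a train/test split (illustrative only).
X, y = make_classification(n_samples=400, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# fit() standardizes X_train and then trains the classifier on the result;
# score() reuses the training statistics to scale X_test before predicting.
model = make_pipeline(
    StandardScaler(),
    LogisticRegression(solver="lbfgs", max_iter=1000),
)
model.fit(X_train, y_train)

accuracy = model.score(X_test, y_test)
n_iter = int(model.named_steps["logisticregression"].n_iter_[0])
print(f"test accuracy: {accuracy:.3f}, iterations used: {n_iter}")
```

Keeping both steps in one object avoids accidentally fitting the scaler on test data or forgetting to scale at prediction time.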

Keep in mind though, despite trying out all these techniques, depending upon the complexity of your problem statement, L-BFGS might inherently fail to converge because of its sensitivity towards noise, poor performance with non-smooth functions, and susceptibility to being trapped in local minima for non-convex situations.

Therefore, if the problem persists, it might be worth considering alternative optimization methods such as SGD (Stochastic Gradient Descent) or the `newton-cg` solver, depending on what suits your data best.

Reference:

Scikit-learn Documentation – Logistic Regression

The LBFGS Convergence Warning

The `ConvergenceWarning: LBFGS failed to converge (status=1): STOP: TOTAL NO. of ITERATIONS REACHED LIMIT.` warning can often be observed when you’re using a machine learning library like sklearn, specifically when you’re fitting a model with the LBFGS solver. Essentially, this message signals that the maximum number of iterations was reached before convergence could be attained.

Potential Solutions to Address This

Addressing this issue calls for an analytical approach; certain parameters need adjustments, which, if performed accurately, can counteract and ultimately eliminate the warning being generated.

Working solution examples include:

1. Increasing the Number of Iterations

The most straightforward way to alleviate the situation is to simply increase the number of iterations. You can achieve this by setting the `max_iter` parameter to a higher value, but keep in mind that setting it too high might consume significant computational resources. Here’s how you can do it:

clf = LogisticRegression(solver='lbfgs', max_iter=1000)

2. Adjusting the Tolerance

Increasing the tolerance might let the solver stop earlier by allowing a larger error margin. The `tol` parameter sets the stopping criterion, i.e., the model will cease iterating once its convergence measure is equal to or less than the specified value. The example below raises it from the default of 1e-4 to 1e-3.

clf = LogisticRegression(solver='lbfgs', tol=1e-3)
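
To get a feel for the effect of `tol`, the sketch below fits the same synthetic data with a loose and a tight tolerance and compares the iteration counts. The helper name `iterations_for` and the two tolerance values are hypothetical choices for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import StandardScaler

# Synthetic, standardized data (illustrative only).
X, y = make_classification(n_samples=500, n_features=20, random_state=0)
X = StandardScaler().fit_transform(X)


def iterations_for(tol):
    """Fit with the given tolerance and return the iterations L-BFGS used."""
    clf = LogisticRegression(solver="lbfgs", tol=tol, max_iter=5000).fit(X, y)
    return int(clf.n_iter_[0])


loose = iterations_for(1e-2)   # stops early, less precise solution
tight = iterations_for(1e-6)   # keeps going until the solution is more precise

print("iterations with tol=1e-2:", loose)
print("iterations with tol=1e-6:", tight)
```

A looser tolerance trades precision of the fitted coefficients for fewer iterations, so it is worth checking that the model’s accuracy does not suffer.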

3. Scaling Your Data – Standardization

Scaling your dataset may also facilitate smoother convergence by bringing all features into a similar range. You can use sklearn’s `StandardScaler` class for this purpose. Failing to standardize your data may make it harder for the LBFGS solver to find the optimal solution.

    from sklearn.linear_model import LogisticRegression
    from sklearn.preprocessing import StandardScaler

    scaler = StandardScaler()
    X_train_scaled = scaler.fit_transform(X_train)
    clf = LogisticRegression(solver='lbfgs')
    clf.fit(X_train_scaled, y_train)

4. Checking for Multicollinearity

For Logistic Regression, the existence of multicollinearity in your features may hinder the correct estimate of coefficients – leading towards non-convergence. Using VIF (Variance Inflation Factor) can help identify and remove such variables.
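
A VIF check can be sketched with NumPy alone, using the identity that the VIFs are the diagonal entries of the inverse of the feature correlation matrix. The function name `variance_inflation_factors` and the synthetic columns are hypothetical; a dedicated statistics package may be preferable in practice.

```python
import numpy as np


def variance_inflation_factors(X):
    """Return one VIF per column: the diagonal of the inverse correlation matrix."""
    corr = np.corrcoef(X, rowvar=False)
    return np.diag(np.linalg.inv(corr))


rng = np.random.default_rng(0)
a = rng.normal(size=300)
b = rng.normal(size=300)
c = a + 0.05 * rng.normal(size=300)  # nearly a copy of `a`, so collinear with it

X = np.column_stack([a, b, c])
vif = variance_inflation_factors(X)

for name, value in zip("abc", vif):
    print(f"VIF for feature {name}: {value:.1f}")
```

A common rule of thumb treats values above roughly 5 to 10 as a sign of problematic collinearity; here the near-duplicate columns `a` and `c` should stand out while `b` stays close to 1.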

5. Switching to another Solver

If you’ve tried everything and yet the problem persists, resort to switching from ‘lbfgs’ to another solver like ‘liblinear’ or ‘saga’.

To find detailed documentation about the Logistic regression in Scikit-Learn and relevant solver parameters, follow this link: scikit-learn Logistic regression.

Please note, all solutions suggested are potential fixes but are not guaranteed to work every single time as the success can largely be data-dependent. It may take testing different combinations of the methods mentioned above before you attain worthwhile results.

Never forget, persistent warnings in your code might be indicative of underlying problems that need reassessment and amendment. Adopting proactive debugging measures will maintain robustness in your programming activities.

The L-BFGS (Limited-memory Broyden-Fletcher-Goldfarb-Shanno) approach is a popular optimization algorithm in the field of machine learning. It’s an adaptation of the original BFGS method, specifically designed to deal with large-scale problems. To truly grasp the impact of the total number of iterations on this approach and understand how it relates to the “ConvergenceWarning: LBFGS failed to converge (status=1): STOP: TOTAL NO. of ITERATIONS REACHED LIMIT” message, we first need to comprehend the fundamentals of the L-BFGS method and then delve into the significance of iteration limits.

When we talk about the L-BFGS method, it falls under the category of iterative methods for solving unconstrained optimization problems. What’s peculiar about these methods is that they don’t require the direct computation of the Hessians (second partial derivatives) but only their approximations. This leads to less memory usage and faster convergence speed, making them highly efficient, especially when dealing with large-scale optimization tasks.
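
For reference, the standard BFGS update that L-BFGS approximates can be written as follows. This is the textbook form rather than anything specific to scikit-learn; here $f$ is the objective, $x_k$ the current parameters, $\alpha_k$ the step length chosen by a line search, and $H_k$ the running approximation of the inverse Hessian.

```latex
\begin{aligned}
x_{k+1} &= x_k - \alpha_k H_k \nabla f(x_k), \\
s_k &= x_{k+1} - x_k, \qquad
y_k = \nabla f(x_{k+1}) - \nabla f(x_k), \qquad
\rho_k = \frac{1}{y_k^{\top} s_k}, \\
H_{k+1} &= \left(I - \rho_k s_k y_k^{\top}\right) H_k \left(I - \rho_k y_k s_k^{\top}\right) + \rho_k s_k s_k^{\top}.
\end{aligned}
```

L-BFGS never stores $H_k$ explicitly: it keeps only the most recent $m$ pairs $(s_k, y_k)$ and applies the update implicitly, which is where the memory saving comes from.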

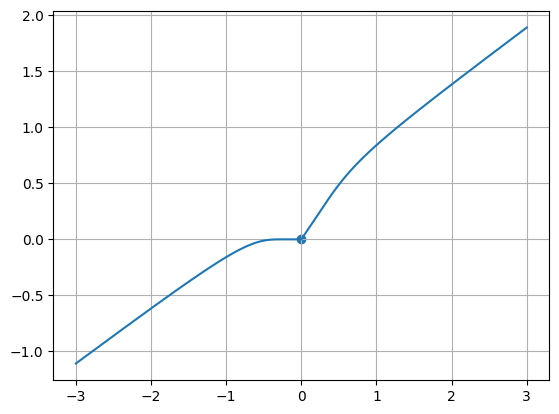

The crux of L-BFGS lies within its iterations. Starting from an initial guess, the algorithm seeks the ‘best’ or optimal solution by continuously improving its guess based on previous iterations. In full-batch training, each iteration evaluates the cost function and its gradient over the whole training dataset at least once, then updates the model parameters to move towards the global (or local) minimum.

The goal of iterations in L-BFGS, like all iterative optimization methods, is to achieve convergence. Convergence refers to the property of an algorithm to reach a point where the results of the process have fully developed and no longer significantly change upon subsequent iterations. If an algorithm achieves convergence, it means that it has found the optimal results to the problem at hand, given the model parameters.

Irrespective of how perfect this might sound; there’s a catch – Not all algorithms achieve convergence. They sometimes keep iterating endlessly, trying to find an optimal solution but failing miserably. Herein lies the role of the “total number of iterations”. By setting a hard limit on the total number of iterations, we ensure that the algorithm doesn’t get stuck in an infinite loop.

However, there can be moments when the specified maximum number of iterations isn’t enough to achieve convergence. The “ConvergenceWarning: LBFGS failed to converge (status=1): STOP: TOTAL NO. of ITERATIONS REACHED LIMIT” warning emitted by scikit-learn explicitly signifies this scenario.

This warning is essentially indicating that the L-BFGS algorithm has reached the maximum iterations limit, but hasn’t attained convergence. Meaning, even though the current output might be the best approximation available, it’s still not the optimal solution.
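
The `status=1` code can be reproduced outside scikit-learn, whose `lbfgs` solver relies on SciPy’s L-BFGS-B routine. The sketch below minimizes SciPy’s built-in Rosenbrock test function with a deliberately small and then a generous iteration cap; the caps of 5 and 1000 are arbitrary illustrative values.

```python
from scipy.optimize import minimize, rosen, rosen_der

# Classic starting point for the two-dimensional Rosenbrock function.
x0 = [-1.2, 1.0]

# Too few iterations: the optimizer stops at the cap without converging.
short = minimize(rosen, x0, jac=rosen_der, method="L-BFGS-B",
                 options={"maxiter": 5})

# Plenty of iterations: the optimizer stops because it converged.
full = minimize(rosen, x0, jac=rosen_der, method="L-BFGS-B",
                options={"maxiter": 1000})

print("short run:", short.status, short.success, short.nit, short.message)
print("full run: ", full.status, full.success, full.nit, full.message)
```

In the short run, `status` is 1 and `success` is `False`, which is the condition scikit-learn turns into the `ConvergenceWarning`; the returned point is still usable, just not optimal.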

Resolving this issue generally involves increasing the maximum number of iterations by adjusting the appropriate parameter in the L-BFGS function call:

clf = LogisticRegression(solver='lbfgs', max_iter=1000)

By increasing the max_iter value (from say 100 to 1000), we are providing the algorithm more room to reach an optimal solution.

However, be mindful that more iterations can lead to marginal losses in terms of computational time and resources. So, it’s crucial to monitor performance and to experiment with different amounts of iterations to strike a balance between computational expenditure and model accuracy.

To sum up, the total number of iterations plays a critical role in the performance of L-BFGS and many other similar optimization methods. Understanding its impact helps control the training process, ensuring both efficiency and effectiveness, and mitigating any potential risks of non-convergence. Therefore, be sure to keep an eye on those ConvergenceWarning messages; they’re doing you more good than harm!

For more detailed information regarding the usage and underlying operations of the L-BFGS method, feel free to visit the Wikipedia page. You can also check out the official SciPy documentation for a deep dive into its Python implementation.

Addressing the `status=1` warning in optimization algorithms, particularly L-BFGS (Limited-memory Broyden–Fletcher–Goldfarb–Shanno), requires a good understanding of the algorithm’s operation and of the reasons why convergence problems might occur. In many cases, the message `ConvergenceWarning: LBFGS failed to converge (status=1): STOP: TOTAL NO. of ITERATIONS REACHED LIMIT` is emitted when the maximum number of iterations set for the algorithm is reached before it finds a solution that fulfills the convergence criteria.

Essentially, L-BFGS is an optimization algorithm in the family of quasi-Newton methods that approximates the BFGS algorithm using a limited amount of computer memory. It is used in various applications, including machine learning, when the number of parameters to optimize becomes too large for standard BFGS to handle.

Here are some ways to potentially address this issue:

Adjusting the Maximum Number of Iterations:

The `status=1` warning may be caused by not giving the algorithm enough time or iterations to reach a decent approximation to the true minimum. This can often be addressed by increasing the number of iterations provided for fitting your model. Here’s how you do that with Python’s Scikit-Learn library:

    from sklearn.linear_model import LogisticRegression

    # Increase max_iter to give the model more iterations to converge
    clf = LogisticRegression(solver='lbfgs', max_iter=1000)

Rescaling Features:

Another possible cause of the problem is scale disparity in features. If one feature takes values in thousands and another takes values in fractions, such disparities might cause issues during optimization. The effect of a change in one parameter may become insignificant when compared to the change in another. Therefore, scaling all input features to have similar orders of magnitudes can often help the optimization process.

You can use something like StandardScaler from Scikit-Learn:

    from sklearn.preprocessing import StandardScaler

    scaler = StandardScaler()
    X_scaled = scaler.fit_transform(X)

Once scaled, you can proceed with using `X_scaled` in your model training process.

Keep in mind that dealing with numerical optimization problems requires a really good understanding of the specifics of your algorithm and how it tackles the task of searching through the mathematical landscape of your parameter/objective function space. There’s no one-size-fits-all solution as different data will respond differently to modifications in the optimization process, so always be ready to experiment and adapt to newly arising challenges.

At the end of the day, machine learning isn’t just about deploying robust algorithms – it’s also about knowing your data thoroughly and being clever in your attack on these kinds of errors and warnings. They’re usually signposts pointing towards deeper realities about your data that could lead you to greater insights.

The warning “ConvergenceWarning: LBFGS failed to converge (status=1): STOP: TOTAL NO. of ITERATIONS REACHED LIMIT” can influence your machine learning model’s efficiency and accuracy. It comes from the LBFGS (Limited-memory Broyden-Fletcher-Goldfarb-Shanno) optimization algorithm, which couldn’t achieve convergence within the predefined number of iterations. It strongly suggests that more iterations, or a tweak in model parameters, are required to enhance performance.

Let’s explore diverse techniques to circumvent non-convergence and enhance the machine learning modeling procedure’s effectiveness and precision:

Increasing the Number of Iterations

A neat way of resolving non-convergence warnings is to increase the maximum number of iterations using the `max_iter` parameter of the sklearn estimator.

Take into account this example:

    from sklearn.linear_model import LogisticRegression

    model = LogisticRegression(solver='lbfgs', max_iter=1000)

This modifies the maximum iteration limit from the default 100 set in Logistic Regression to a potentially improved value.

Standardize Features

Another approach to aid convergence is feature standardization. Features on very different scales can hamper the optimizer’s convergence, resulting in slow progress and eventually non-convergence. Use `sklearn.preprocessing.StandardScaler` for this.

Here’s how you might use it:

    from sklearn.preprocessing import StandardScaler

    scaler = StandardScaler()
    X_train_scaled = scaler.fit_transform(X_train)

During feature scaling, check for outliers, because they can noticeably skew the scaling.

Tuning Tolerance Parameter

Modifying the tolerance parameter (`tol`) can also expedite convergence. It is the threshold below which further improvement is considered too small to justify additional iterations. Increasing `tol` (for example from the default of 1e-4 to 1e-3) makes the algorithm less exacting about when it accepts a solution.

model = LogisticRegression(solver='lbfgs', tol=1e-3)

Changing the Optimizer

When other options don’t improve convergence, consider changing the optimizer altogether. Scikit-learn provides alternatives including ‘liblinear’ and ‘newton-cg’. Every optimizer fits different problem types uniquely so it might yield better results.

For example:

model = LogisticRegression(solver='newton-cg')

| Solver | Description |
|---|---|
| ‘newton-cg’ | Handles L2 or no penalty |
| ‘lbfgs’ | Handles L2 or no penalty; the default solver |
| ‘liblinear’ | Supports L1 and L2 penalties; a good choice for small datasets |
| ‘sag’ | Handles L2 or no penalty; faster on large datasets |
| ‘saga’ | A good choice for large datasets; supports L1, L2, and elastic-net penalties |
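
To compare the solvers in the table on your own data, a simple loop is usually enough. The sketch below uses synthetic, standardized binary data; the dictionary `results` and the generous `max_iter=5000` are illustrative assumptions, and the numbers it prints say nothing about how the solvers rank on other datasets.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import StandardScaler

# Synthetic binary classification data, standardized (illustrative only).
X, y = make_classification(n_samples=500, n_features=20, random_state=0)
X = StandardScaler().fit_transform(X)

solvers = ("lbfgs", "newton-cg", "liblinear", "sag", "saga")

# Map each solver to (iterations used, training accuracy).
results = {}
for solver in solvers:
    clf = LogisticRegression(solver=solver, max_iter=5000, random_state=0)
    clf.fit(X, y)
    results[solver] = (int(clf.n_iter_[0]), clf.score(X, y))

for solver, (n_iter, accuracy) in results.items():
    print(f"{solver:<10} iterations: {n_iter:<5} training accuracy: {accuracy:.3f}")
```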

Remember that improving your model involves trial and error, but with these tips, you’re off to a promising start. Online platforms like StackOverflow (https://stackoverflow.com/) and the official scikit-learn documentation (https://scikit-learn.org/stable/) can provide you with additional insights and examples.

When addressing risk mitigation strategies for the “iteration limit reached” warning, it is important to first understand what the warning signifies. In the case of ConvergenceWarning: LBFGS failed to converge (status=1): STOP: TOTAL NO. of ITERATIONS REACHED LIMIT, it indicates that the optimization procedure in your machine learning or statistical method was not able to find an optimal solution within the specified iteration limit.

Risk mitigation for this involves strategies like:

Increasing the Number of Iterations:

The first go-to strategy is to increase the number of iterations allowed for your model. This is often done by adjusting the ‘max_iter’ parameter in sklearn methods. Here is a simple demonstration using logistic regression:

    from sklearn.linear_model import LogisticRegression

    clf = LogisticRegression(max_iter=10000)

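Note that raising the iteration limit increases training time. For a regularized model such as logistic regression, extra iterations only bring the optimizer closer to the same optimum; if the fully converged model generalizes poorly to unseen data, the usual remedy is stronger regularization rather than a lower iteration cap.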

Scaling the Features:

In many scenarios, especially with distance-based methods, unscaled features may result in poor convergence or longer training times. Feature scaling aids in standardizing the independent variables within a certain range which can accelerate the convergence process.

Here’s an example:

    from sklearn.preprocessing import StandardScaler

    scaler = StandardScaler()
    scaled_features = scaler.fit_transform(features)

Modifying the Tolerance:

The tolerance (`tol`) parameter defines the stopping criterion: roughly, LBFGS stops optimizing once the progress between two iterations falls below this threshold. Hence, you may raise the tolerance value so that the optimization stops sooner, though at the cost of potentially less optimal results.

    from sklearn.linear_model import LogisticRegression

    clf = LogisticRegression(tol=0.01)

Selecting Different Solvers:

Different solvers converge at different rates depending on the problem. Therefore, trying different solvers might help with faster convergence. If LBFGS fails to converge, you may want to try alternatives like ‘liblinear’, ‘newton-cg’, or ‘saga’, depending on your model and the problem at hand.

    from sklearn.linear_model import LogisticRegression

    # The solver name must match scikit-learn's spelling exactly: 'newton-cg'
    clf = LogisticRegression(solver='newton-cg')

In all the cases, however, a word of caution – altering these parameters should be implemented diligently as they may affect your model’s learning process and subsequently the overall predictive performance. Hence, experimentation under careful monitoring remains key.

For further insights on risks associated with “ConvergenceWarning: LBFGS failed to converge”, Stack Overflow provides extensive community-based discussions.

Very often in machine learning, you can come across this particular warning: ‘ConvergenceWarning: LBFGS failed to converge (status=1): STOP: TOTAL NO. of ITERATIONS REACHED LIMIT’.

This alarming sentence is an informative warning rather than an error message. It is the programming world’s way of saying that the Limited-memory Broyden-Fletcher-Goldfarb-Shanno (LBFGS) optimization algorithm you’re using didn’t reach a solution within the allotted number of iterations.

    # Example where the given warning might occur
    from sklearn.linear_model import LogisticRegression

    Logistic_reg = LogisticRegression(solver='lbfgs', max_iter=100)
    Logistic_reg.fit(X_train, Y_train)

Misunderstandings about the LBFGS optimizer may stem from not knowing its defining features, such as how it sits between gradient descent and the Newton-Raphson method for high-dimensional optimization problems. It offers a hybrid of the two: gradient descent is reliable but somewhat slow, while Newton’s method is very fast but only reliable close to the optimal solution.

To tackle this warning, here are a few recommended steps:

* Increase the maximum number of iterations. This gives the solver more chance to converge but takes more computational time.

* Scale your data appropriately, allowing optimizers to traverse efficiently in all directions towards the minimum.

* If standardizing doesn’t work, try normalizing the dataset and see if the solution converges.

    # Example corrective measures
    from sklearn.linear_model import LogisticRegression
    from sklearn.preprocessing import StandardScaler

    scaled_X_train = StandardScaler().fit_transform(X_train)
    Logistic_reg = LogisticRegression(solver='lbfgs', max_iter=700)
    Logistic_reg.fit(scaled_X_train, Y_train)

Remember that, despite trying these solutions, you may still get a failure to converge. Other possible causes include noisy or incorrect data, for which no finite minimum may exist, or a non-convex objective function. Machine learning involves much fine-tuning, and iterative numeric algorithms like L-BFGS are no exception. The primary intent behind warnings like ‘ConvergenceWarning’ is not to give developers indigestion but to draw attention to avenues for potential improvement. Keep experimenting and refining those models!