| Error Issue | Causes | Potential Solutions |
|---|---|---|
| `RuntimeError: Expected all tensors to be on the same device` | The tensors involved in an operation are not all on the same device at runtime in PyTorch. | Ensure that all tensors are on the same device, either the CPU or the GPU. |

`RuntimeError: Expected all tensors to be on the same device` is an error you might encounter while using PyTorch, a popular machine learning library. It typically occurs when you perform an operation involving multiple tensors and at least two of them reside on different devices (the CPU and a GPU are different devices).

In PyTorch, every tensor is associated with a device, such as the CPU or a specific GPU. PyTorch generally does not move data between devices implicitly, so if your code performs an operation on tensors that live on different devices, it raises this error. Recent versions also report the devices involved, for example: `Expected all tensors to be on the same device, but found at least two devices, cuda:0 and cpu!`

Fortunately, the issue has a clear fix: make sure all tensors involved in the operation are on the same device before performing it. You can do this with the `tensor.to(device)` method, where `device` is either the CPU (`torch.device("cpu")`) or a GPU (`torch.device("cuda")` for the current GPU, or an indexed device such as `torch.device("cuda:0")` for a specific one), depending on where you want the tensor data to reside.

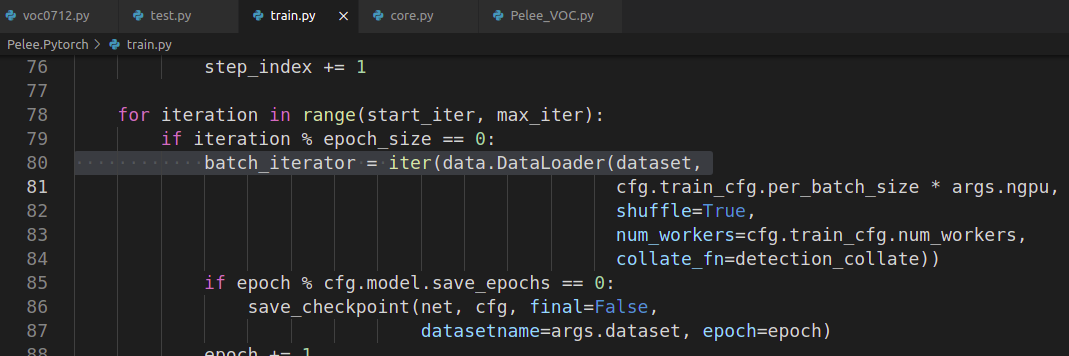

Here’s a basic example of how to carry out the device matching process:

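```python
import torch

device = torch.device("cuda")

# tensor1 and tensor2 are existing tensors, possibly on different devices
tensor1 = tensor1.to(device)
tensor2 = tensor2.to(device)
```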

Always remember: the key is keeping all tensors involved in an operation on the same device, either the CPU or the GPU. Doing this consistently will make your code far easier to work with.

The `RuntimeError: Expected all tensors to be on the same device` error typically occurs while working with PyTorch tensors. It is common during training, prediction, or analysis, where the tensors, along with the model, should be on the same device (CPU or GPU) to perform computations.

Causes of this RuntimeError

- Inconsistent placement of tensors and model: The primary cause is that your data (tensors) and your model are not on the same device, for example when your tensor is on the CPU and your PyTorch model is on a GPU, or vice versa.

- Device availability and selection: On machines with several GPUs, tensors can end up on different GPUs (for example `cuda:0` and `cuda:1`), which also triggers this error. Targeting a GPU that is not present at all usually fails earlier, with a different CUDA error.

- Coding mistakes: Sometimes a simple slip leads to this error. A common one is calling `tensor.to(device)` without assigning the result: unlike `nn.Module.to()`, which moves the module in place, `Tensor.to()` returns a new tensor and leaves the original unchanged. Double-check the code that moves your tensors and model to the same device.
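One frequent coding mistake behind this error is calling `tensor.to(device)` without assigning the result. The sketch below is a minimal illustration, assuming a recent PyTorch install; to stay runnable on a CPU-only machine it converts dtypes instead of devices, since `Tensor.to()` and `nn.Module.to()` follow the same return-value rules either way. The helper name `show_to_semantics` is hypothetical.

```python
import torch
import torch.nn as nn


def show_to_semantics():
    """Hypothetical helper contrasting Tensor.to() with nn.Module.to()."""
    # Tensor.to() is out-of-place: it returns a converted tensor and
    # leaves the original untouched.
    t = torch.tensor([1.0])
    t.to(torch.float64)          # result discarded, so t is unchanged
    unchanged = t.dtype          # still torch.float32
    t = t.to(torch.float64)      # reassign to keep the converted tensor
    converted = t.dtype          # now torch.float64

    # nn.Module.to() is in-place: it converts the module's parameters and
    # buffers and returns the same module object.
    model = nn.Linear(1, 1)
    returned = model.to(torch.float64)
    return unchanged, converted, returned is model, model.weight.dtype


if __name__ == "__main__":
    print(show_to_semantics())
```

The same applies to devices: `x.to("cuda")` on a line by itself leaves `x` where it was, whereas `model.to("cuda")` moves the model even if the return value is ignored.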

Here’s an illustrative example:

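```python
import torch
import torch.nn as nn

model = nn.Linear(1, 1)       # stand-in for your own model
tensor = torch.tensor([1.0])  # created on the CPU
model = model.to('cuda')      # model parameters move to the GPU
output = model(tensor)        # raises the RuntimeError
```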

This code raises the RuntimeError because the model's parameters were moved to the GPU with `'cuda'`, while the input tensor was left on the CPU.
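When the model and its inputs disagree, a common idiom is to read the device from the model's own parameters and move the inputs there. The sketch below assumes the model has at least one parameter and that all of its parameters share one device; `model_devices` and `to_model_device` are hypothetical helper names, and `nn.Linear(1, 1)` stands in for your model.

```python
import torch
import torch.nn as nn


def model_devices(model: nn.Module) -> set:
    """Return the set of devices holding the model's parameters and buffers."""
    tensors = list(model.parameters()) + list(model.buffers())
    return {t.device for t in tensors}


def to_model_device(model: nn.Module, x: torch.Tensor) -> torch.Tensor:
    """Move x to the device of the model's first parameter."""
    device = next(model.parameters()).device
    return x.to(device)


model = nn.Linear(1, 1)
if torch.cuda.is_available():
    model = model.to("cuda")

x = torch.tensor([1.0])          # created on the CPU
x = to_model_device(model, x)    # now on the same device as the model
output = model(x)
print(model_devices(model), x.device, output.shape)
```

If `model_devices` returns more than one device, the model itself is split across devices, which is worth fixing before looking at the inputs.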

Resolution techniques

To avoid this error, keep your data and your model consistently placed on the same device.

- Your tensors and model should both reside on the CPU or both on the GPU.

- Check that the target device is available before moving tensors and the model to it.

- Finally, follow the documentation guidelines and double-check the code that places your tensors and model.

For instance, let’s say you want to use the GPU. Then your code sample should look like this:

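```python
import torch
import torch.nn as nn

model = nn.Linear(1, 1)                  # stand-in for your own model
tensor = torch.tensor([1.0]).to('cuda')  # input moved to the GPU
model = model.to('cuda')                 # model moved to the GPU
output = model(tensor)                   # same device: no error
```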

Before attempting to move your data or model to a GPU, check that one actually exists and is available, for example with the `torch.cuda.is_available()` function in PyTorch.
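Putting the availability check to work, a typical pattern is to choose the device once, move the model a single time, and move each batch as it comes out of the data loader. This is a minimal sketch: the toy `TensorDataset`, the `nn.Linear(4, 1)` model, and the loss and optimizer choices are placeholders for illustration, not recommendations.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset

# Choose the device once, falling back to the CPU when no GPU is present.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Toy data: 32 samples with 4 features each (placeholder values).
features = torch.randn(32, 4)
targets = torch.randn(32, 1)
loader = DataLoader(TensorDataset(features, targets), batch_size=8)

model = nn.Linear(4, 1).to(device)   # move the model once, before training
loss_fn = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

num_batches = 0
for batch_x, batch_y in loader:
    # These batches come out of the DataLoader on the CPU; move each one.
    batch_x, batch_y = batch_x.to(device), batch_y.to(device)
    optimizer.zero_grad()
    loss = loss_fn(model(batch_x), batch_y)
    loss.backward()
    optimizer.step()
    num_batches += 1

print(f"trained on {device} for {num_batches} batches, last loss {loss.item():.4f}")
```

Because the model is moved before the optimizer is built and every batch is moved inside the loop, the same script runs unchanged on machines with or without a GPU.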

For a more comprehensive treatment of this topic, see the official PyTorch documentation, which covers device handling, avoiding this RuntimeError, and writing robust code in detail.

In machine learning tasks implemented in Python, PyTorch is a widely used library known for its support for tensors. A tensor can be thought of as an array with any number of dimensions; tensors are used to feed data into models, especially in deep learning.

PyTorch also has built-in support for Graphics Processing Units (GPUs), which offer computational advantages over standard Central Processing Units (CPUs) through parallel computation: many operations run at once. This can greatly shorten neural network training times and speed up iteration, which is especially useful when tuning a model.

However, useful as this feature is, it occasionally produces `RuntimeError: Expected all tensors to be on the same device`, which means your tensors are not all located on the same device (GPU or CPU).

So why does ‘device compatibility’ matter when working with Tensors? Well,

– Parallel computation: GPUs contain many cores that can run thousands of threads simultaneously, which makes them well suited to the large datasets common in machine learning. An operation running on a GPU can only work on data in that GPU's memory, so all of its input tensors must already be on that device (and likewise for the CPU).

– Data transfer overhead: Copying data from the CPU to the GPU or back isn't free. Each transfer takes time, so unnecessary copies slow down your computations.

You generally see this error (‘RuntimeError: Expected all tensors to be on the same device’) when one tensor is on the CPU and another is on the GPU, and you’re trying to perform some operation involving both.

Here is an example of what it looks like in code:

```python
import torch

x = torch.tensor([1.0])
x = x.cuda()             # moves `x` to the GPU
y = torch.tensor([2.0])  # `y` stays on the CPU
print(x + y)             # adds `x` (GPU) and `y` (CPU): raises the RuntimeError
```

This will throw an error similar to RuntimeError: Expected all tensors to be on the same device.

The fix is straightforward: ensure you place all your tensors on the same device before attempting operations involving them.

For instance:

```python
import torch

x = torch.tensor([1.0]).cuda()  # `x` is on the GPU
y = torch.tensor([2.0]).cuda()  # `y` is moved to the GPU
print(x + y)                    # no error: both are on the same device
```

In conclusion, device compatibility matters when dealing with tensors: operations need all their inputs on one device, and keeping data where it is computed avoids unnecessary transfer delays.

Remember to keep all your tensors on the same device to prevent potential issues during runtime.

More details about PyTorch, tensors, and device management can be found in the official PyTorch tutorials.

One of the challenges you might encounter while working with PyTorch tensors is a RuntimeError saying “expected all tensors to be on the same device”. This error means that you are attempting an operation on tensors that reside on different devices: some on your CPU, others on your GPU.

To avoid this RuntimeError and keep your tensor operations running smoothly, adopt the strategies outlined below, each shown with a code snippet.

1. Use the .to(device) Strategy

Always specify tensor devices explicitly using `.to(device)`. Create a `device` object and move all your tensors to the chosen device (CPU or GPU) before any operations. For instance, to run operations on a GPU:

```python
import torch

device = torch.device("cuda")

# tensorA and tensorB are existing tensors
tensorA = tensorA.to(device)
tensorB = tensorB.to(device)
```

In the code snippet above, we first create the device and assign it to the variable `device`. Then we move `tensorA` and `tensorB` to that device.

2. Use the nn.Module’s .to(device) Strategy

If you are using models built on `torch.nn.Module`, you can call the `.to(device)` method with the same `device` object. This method moves all of the model's parameters and buffers to the specified device. For a GPU:

```python
import torch

device = torch.device("cuda")
model = model.to(device)  # `model` is an existing nn.Module
```

This moves every parameter of `model` to the GPU designated by `device`.
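One subtlety: `model.to(device)` moves parameters and registered buffers, but not tensors stored as plain attributes, which is a frequent source of this error inside a custom `forward`. The sketch below uses a hypothetical `ScaledLinear` module to show the difference; registering the tensor with `register_buffer` is the standard way to have it moved along with the model.

```python
import torch
import torch.nn as nn


class ScaledLinear(nn.Module):
    """Hypothetical module with one registered buffer and one plain tensor."""

    def __init__(self):
        super().__init__()
        self.linear = nn.Linear(2, 2)
        # Registered buffer: moved (and cast) by model.to(...).
        self.register_buffer("scale", torch.tensor([2.0, 2.0]))
        # Plain attribute: ignored by model.to(...), stays where it was created.
        self.offset = torch.tensor([1.0, 1.0])

    def forward(self, x):
        # On a GPU this line fails, because self.offset never left the CPU.
        return self.linear(x) * self.scale + self.offset


device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = ScaledLinear().to(device)
print(model.scale.device, model.offset.device)
```

Replacing the plain attribute with a second `register_buffer` call would make `model.to(device)` move both tensors.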

3. Create Tensors Directly on the Target Device

PyTorch lets you create a tensor directly on the desired device, which avoids moving it between devices afterwards. It looks like this:

```python
import torch

device = torch.device("cuda")
tensorA = torch.tensor([10., 20.], device=device)
tensorB = torch.tensor([15., 25.], device=device)
```

Here, `tensorA` and `tensorB` are created directly on the GPU, as specified by the `device` argument.

Keep in mind, though, that these measures only help prevent the runtime error. Whenever an operation involves more than one tensor, confirm that they all reside on the same device before running it.
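A quick way to confirm this before an operation is to collect the devices of all the tensors involved. The helper below, `check_same_device`, is a hypothetical name, not a PyTorch API; it raises a readable error listing the devices it found. The usage lines rely on the `meta` device (available in recent PyTorch releases) so that a mismatch can be shown without a GPU.

```python
import torch


def check_same_device(*tensors: torch.Tensor) -> torch.device:
    """Return the shared device of all tensors, or raise if they differ."""
    devices = {t.device for t in tensors}
    if len(devices) != 1:
        found = ", ".join(sorted(str(d) for d in devices))
        raise ValueError(f"tensors are on different devices: {found}")
    return devices.pop()


a = torch.tensor([1.0, 2.0])
b = torch.tensor([3.0, 4.0])
print(check_same_device(a, b))      # both on the CPU

c = torch.empty(2, device="meta")   # placeholder tensor on a different device
mismatch_message = None
try:
    check_same_device(a, c)
except ValueError as err:
    mismatch_message = str(err)
print(mismatch_message)
```

Calling such a check at the top of a function that combines tensors turns a deep stack trace into a message that names the offending devices.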

Reference: PyTorch Official Documentation: torch.device.

Lastly, efficient GPU computing requires careful data management, and these strategies help you use the GPU's potential effectively while minimizing errors. Use the device's resources sparingly: transferring large datasets back and forth between devices wastes time and, if copies pile up, memory.

Dealing with the `RuntimeError: Expected all tensors to be on the same device` error is a common situation in PyTorch when tensors end up on mismatched devices. To clarify the concept, let’s first look at tensors and GPUs.

In deep learning programming, particularly with the PyTorch library, tensors are the main data structure: multi-dimensional arrays whose elements share a single type (integers, floats, etc.). Tensors can be placed on either a CPU or a GPU for computation.

When you use different computing devices such as CPUs and GPUs, keeping tensors on compatible devices is important; otherwise you run into errors such as this one.

At the core of the `Expected all tensors to be on the same device` error is tensor-device alignment. When you perform computations involving multiple tensors, whether through operations such as addition and multiplication or by feeding them to a model for training, PyTorch expects all of those tensors to be on the same device. A typical trigger is moving some tensors to the GPU while leaving others on the CPU.

Let’s illustrate this with an example. Assume you have two tensor objects ‘a’ and ‘b’, and you’re trying to add them together:

```python
import torch

a = torch.tensor([1.0, 2.0], device=torch.device('cpu'))
b = torch.tensor([3.0, 4.0], device=torch.device('cuda'))

c = a + b  # raises the RuntimeError: `a` is on the CPU, `b` on the GPU
```

Here, the tensors `a` and `b` live on different devices (`cpu` and `cuda`, respectively). When you perform the addition, PyTorch raises `RuntimeError: Expected all tensors to be on the same device`.

To handle this issue, consistently track which device your tensors are on and move all of them to the same device before performing any operations. Modifying the code above:

```python
a = a.to('cuda')
b = b.to('cuda')

c = a + b
```

Now both tensors `a` and `b` are on the GPU, so the addition runs without error. The `.to()` method transfers a tensor to the given device, here the GPU denoted by `'cuda'`; for `b`, which is already there, it simply returns the tensor unchanged.

It’s also worth noting that sometimes, this problem can emerge when you forget to move your model to the appropriate device before starting the training process.

The bottom line is to handle operations involving multiple tensors in PyTorch carefully when several devices are in play. In particular, make sure all tensors involved in an operation are on the same device, be it the CPU or a GPU, to avoid this runtime error. This practice not only prevents errors but also keeps your deep learning code efficient.

For more detailed information about tensor operations in PyTorch, see the PyTorch documentation.

When dealing with PyTorch and similar libraries, it’s not uncommon to run into the RuntimeError “expected all tensors to be on the same device”. It generally arises in GPU-based computation when you try to combine tensors located on different devices (i.e., CPU and GPU). Keeping tensors aligned on one device is therefore crucial to avoid this pitfall.

To see how this happens, consider the following scenario:

```python
import torch

# some tensor definitions
tensor1 = torch.tensor([0, 1]).cuda()
tensor2 = torch.tensor([2, 3])

# computation attempting to combine tensors from different devices
result = tensor1 + tensor2
```

Here, `tensor1` lives on the GPU because of the `.cuda()` call, while `tensor2` resides on the CPU by default. Executing `tensor1 + tensor2` therefore raises a runtime error, because the tensors are not on the same device.

There are two main strategies for avoiding this kind of issue:

* Derive device information from existing tensors.

```python
device = tensor1.device
tensor2 = torch.tensor([2, 3], device=device)
```

In the snippet above, we read the device of an existing tensor through its `.device` attribute and use it to create `tensor2` on the same device. When both tensors reside on the same device, computations between them proceed smoothly.

* Explicitly send tensors to the desired device.

```python
device = torch.device("cuda")

tensor1 = torch.tensor([0, 1]).to(device)
tensor2 = torch.tensor([2, 3]).to(device)
```

Here, we define the device explicitly and use the `.to(device)` method to move each tensor to it. Note that repeatedly sending large tensors between the CPU and GPU can add significant overhead, so place all tensors on the same device before the operation begins, ideally once.
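Deriving the device from an input is particularly useful inside functions and `forward` methods, where you do not know in advance which device the caller is using. The sketch below, built around a hypothetical `add_bias_and_mask` function, creates every new tensor on the input's device, either by passing `device=x.device` or by using helpers such as `torch.zeros_like` and `Tensor.new_ones`, which inherit the source tensor's device and dtype by default.

```python
import torch


def add_bias_and_mask(x: torch.Tensor) -> torch.Tensor:
    """Hypothetical example: every new tensor follows x's device."""
    # Explicit: pass the input's device (and dtype) when constructing a tensor.
    bias = torch.arange(x.shape[-1], device=x.device, dtype=x.dtype)
    # *_like factories copy x's device and dtype by default.
    mask = torch.zeros_like(x)
    mask[..., 0] = 1.0
    # new_* methods inherit the device and dtype of the tensor they are called on.
    scale = x.new_ones(x.shape[-1])
    return (x + bias) * mask * scale


x = torch.tensor([[1.0, 2.0, 3.0]])
if torch.cuda.is_available():
    x = x.cuda()
result = add_bias_and_mask(x)
print(result.device, result)
```

Because nothing inside the function names a device, it works the same whether the caller passes a CPU or a GPU tensor.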

Because your model may need to run on systems with different configurations (for example, a system without a GPU), it is often helpful to select the device dynamically based on what is available.

This succinct code could serve as a template:

```python
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

tensor1, tensor2 = torch.tensor([0, 1]).to(device), torch.tensor([2, 3]).to(device)
```

With the above steps, you will ensure that tensors and operations line up accurately, and evade the common pitfall of “Expected all tensors to be on the same device” error. Remember, aligning the tensors and operations on the same device plays a vital role in exploiting the efficiency gains obtained through GPU-accelerated computing.

When programming with tensors, especially in deep learning frameworks such as PyTorch, you may encounter the error `RuntimeError: Expected all tensors to be on the same device`. The root cause is usually an inconsistency between the devices where your tensors are located. PyTorch allows computation on both CPUs and GPUs (represented by device types such as `'cpu'` and `'cuda'`). This provides flexibility, but also opens the door to errors if devices are not managed carefully.

Error Handling Techniques for Managing Off-Device Tensors

Here’s how you can handle these off-device tensor issues to eliminate the RuntimeError:

- Ensure Consistency in Devices: First, confirm that all the tensors involved in an operation are on the same device, either CPU or GPU. If some tensors reside on a CPU while others are on a GPU, the error will appear. Use methods like `tensor.to(device)` to place tensors consistently.

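- Set a Default Device: You can set a default device at the beginning of your code so that tensors created by factory functions such as `torch.zeros` are placed on it; in PyTorch 2.0 and later this is done with `torch.set_default_device()`. (Older code used `torch.set_default_tensor_type()` with a CUDA type such as `'torch.cuda.FloatTensor'`, which is now deprecated.) This uniformity prevents disparity in tensor placement.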

- Cross-Device Operations: When data starts out on different devices, decide which device should perform the operation and move all inputs there. Afterwards, move the result back to its original device if needed.

- Check Model and Input Tensor Device Compatibility: If a model is on GPU and the inputs are on CPU (or vice versa), it will result in a mismatch. Make sure both the model and input are on the same device.

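```python
# Ensure consistency in devices (tensor_a and tensor_b are existing tensors)
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
tensor_a = tensor_a.to(device)
tensor_b = tensor_b.to(device)
```

```python
# Set a default device (PyTorch 2.0+): later factory calls allocate on it
torch.set_default_device(device)
```

```python
# Cross-device operation: compute on the GPU, then move the result back
tensor_a = tensor_a.to('cuda')
tensor_b = tensor_b.cpu()
result = tensor_a + tensor_b.to('cuda')  # perform the operation on the GPU
result = result.to('cpu')                # move the result back to the CPU
```

```python
# Check model and input device compatibility
model = model.to(device)
inputs = inputs.to(device)
output = model(inputs)
```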
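For the default-device approach, PyTorch 2.0 and later also let a `torch.device` object be used as a context manager, which scopes the default to a block instead of the whole program. This is a minimal sketch assuming PyTorch 2.0+; factory functions such as `torch.zeros` and `torch.randn` follow the default, but tensors produced other ways (for example, `torch.from_numpy`, which wraps CPU memory) may still need an explicit `.to(device)`.

```python
import torch

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Scoped default: factory functions inside the block allocate on `device`.
with device:
    weights = torch.randn(3, 3)
    bias = torch.zeros(3)

# Outside the block, the previous default (the CPU, unless changed) applies.
outside = torch.zeros(3)

print(weights.device, bias.device, outside.device)

# Global alternative, affecting all later factory calls in the process:
# torch.set_default_device(device)
```

The scoped form is handy in libraries and tests, where changing a process-wide default could surprise other code.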

These techniques will help you handle off-device tensor issues reliably and prevent “RuntimeError: Expected all tensors to be on the same device.”

Remember, PyTorch’s flexibility in device management comes with responsibility [PyTorch Docs].

`RuntimeError: Expected all tensors to be on the same device` is an error message commonly encountered when building or using PyTorch models. It tells the developer that every tensor used in an operation must be on the same device.

The concept behind this error is straightforward: your tensors are not where they need to be. More specifically, when you perform operations between tensors, those operations expect all of the tensors to be on the same device.

Understanding Device Context in PyTorch:

In PyTorch, a tensor's `.device` attribute identifies the device it is on. The two most common kinds of devices are:

* CPU: Central Processing Unit

* GPU: Graphics Processing Unit

The attribute reflects where the tensor is currently located. The RuntimeError therefore means that one or more tensors are not on the same device (CPU or GPU) as the other tensors in the computation.
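To see this information for yourself, inspect the attribute directly. The sketch below prints a tensor's device and related properties; constructing a `torch.device` object such as `cuda:1` only describes a device and does not require that GPU to exist, so this runs on any machine.

```python
import torch

t = torch.tensor([1.0, 2.0])          # created on the CPU by default
print(t.device)                        # the full device object
print(t.device.type, t.is_cuda)        # device type string and a CUDA flag

# A device object can name a specific GPU by index; no GPU is touched here.
second_gpu = torch.device("cuda:1")
print(second_gpu.type, second_gpu.index)
```

Tensors on different GPUs, such as `cuda:0` and `cuda:1`, also count as different devices and trigger the same error.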

Here is how we can manually control which device a tensor resides on:

```python
# Create a tensor on the CPU
tensor_cpu = torch.tensor([1.0, 2.0], device='cpu')

# Create a tensor on the GPU
tensor_gpu = torch.tensor([1.0, 2.0], device='cuda')
```

Solving the Error:

To fix this RuntimeError, ensure that all tensors in the operation reside on the same device, typically by moving tensors to the CPU or to the GPU as required.

* Moving a Tensor to CPU:

```python
tensor_gpu = tensor_gpu.to('cpu')
```

* Moving a Tensor to GPU:

```python
tensor_cpu = tensor_cpu.to('cuda')
```

Note that moving a tensor to `'cuda'` assumes you have a working CUDA-enabled GPU and a CUDA build of PyTorch. If you try to move a tensor to a GPU on a machine without one, you’ll get a different error. In that case, fall back to the CPU.

It’s also worth mentioning that if you’re doing an operation involving multiple tensors, ensure all of them are on the same device. Suppose you’re summing two tensors. Both must be on the same device.

```python
# both on the CPU
result = tensor_cpu1 + tensor_cpu2

# both on the GPU
result = tensor_gpu1 + tensor_gpu2
```

If you attempt to add a CPU tensor to a GPU tensor, you will encounter the expected all tensors to be on the same device runtime error.

For more insights into tensor manipulation and PyTorch operations, refer to the official PyTorch documentation. Understanding how devices work in PyTorch helps you manage tensor operations and prevent such errors from interrupting your work.

`RuntimeError: Expected all tensors to be on the same device` is a common issue in PyTorch. It arises when a computation involves tensors from different devices, because PyTorch requires all tensors involved in an operation to be on the same device, either CPU or GPU.

Let’s look at an example. Suppose you’ve created one tensor on the CPU and then try to combine it with a GPU tensor:

```python
import torch

x = torch.tensor([1.0])         # This tensor is on the CPU
y = torch.tensor([1.0]).cuda()  # This tensor is on the GPU
z = x + y                       # RuntimeError is raised here
```

This results in a RuntimeError because the ‘x’ tensor is on CPU, while the ‘y’ tensor is on GPU.

To resolve this issue, one of the possible solutions would be to ensure that all tensors involved in the operation are on the same device. Here’s how to achieve it:

```python
x = x.cuda()  # Move the tensor to the GPU
z = x + y     # Now this won't raise a RuntimeError
```

Alternatively, if you want to conduct operations on the CPU:

```python
y = y.cpu()  # Move the tensor to the CPU
z = x + y    # This also won't raise a RuntimeError
```

In summary, for any computation involving multiple tensors, all tensors should be on the same device. Many developers, particularly newcomers to PyTorch and CUDA programming, run into this issue. The error enforces a basic rule of GPU computing: an operation works on data held in one device's memory. Aligning your computations with this rule will keep you clear of the ‘RuntimeError: expected all tensors to be on the same device’ problem.

Would you like to dig deeper? I suggest reading [PyTorch’s CUDA semantics documentation] for a comprehensive overview of managing devices in PyTorch.

Remember, working through these minor hiccups deepens your understanding of how PyTorch works and gradually makes you a more seasoned PyTorch programmer. Happy coding!