| Error Type | Possible Cause | Solution |
|---|---|---|
| ValueError: numpy.ndarray size changed | The NumPy version used to build a package differs from the one used to run it. | Recompile or reinstall the affected package. |
| ValueError: numpy.ndarray size changed | The package was compiled against a different (typically newer) version of NumPy than the one you’re currently using. | Install a NumPy version compatible with the one used at compile time; usually this means upgrading NumPy. |
| ValueError: numpy.ndarray size changed | A module with C extensions was compiled against NumPy headers whose `ndarray` struct is larger than the one in the installed NumPy. | Upgrade NumPy, or recompile the extension modules against the installed NumPy. |

The “ValueError: numpy.ndarray size changed” error is one of those issues NumPy users hit because of version conflicts or mismatches. The “size” in the message is not the number of elements in an array: it is the size in bytes of the C struct behind `numpy.ndarray`, NumPy’s N-dimensional array object. Compiled extensions record that size when they are built, and if the installed NumPy reports a smaller struct at import time, the import fails with a ValueError.

Hence, suppose you have a Python package that relies on NumPy and has been compiled against a specific version. If you then install a NumPy whose C struct layout differs, the compiled code no longer matches it. For example, if the compiled code expects the array object to be larger than the one the installed NumPy actually provides, importing the package raises a ValueError of the form “numpy.ndarray size changed, may indicate binary incompatibility. Expected N from C header, got M from PyObject”, where N and M are the two struct sizes in bytes.
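To make the size comparison concrete, here is a minimal Python sketch of the kind of check a Cython-built module performs when it imports the `numpy.ndarray` type. It assumes the check compares a size recorded from the C header at build time with the runtime type’s size, which Python exposes as `np.ndarray.__basicsize__`. The function `check_ndarray_size` and the simulated header sizes are hypothetical; the real check is generated C code, not Python.

```python
import numpy as np


def check_ndarray_size(expected_from_header: int) -> None:
    """Hypothetical Python re-creation of an import-time struct size check."""
    # Size of the ndarray object struct in the NumPy that is actually installed
    runtime_size = np.ndarray.__basicsize__
    # A runtime struct smaller than the header promised means the extension
    # was built against a NumPy whose ndarray struct is larger
    if runtime_size < expected_from_header:
        raise ValueError(
            "numpy.ndarray size changed, may indicate binary incompatibility. "
            f"Expected {expected_from_header} from C header, "
            f"got {runtime_size} from PyObject"
        )


# Built against headers that match the installed NumPy: passes silently
check_ndarray_size(np.ndarray.__basicsize__)

# Simulated build against headers with a struct 8 bytes larger: fails
try:
    check_ndarray_size(np.ndarray.__basicsize__ + 8)
except ValueError as err:
    print(err)
```

Running this prints the simulated error message; the actual numbers depend on your NumPy build.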

There are a number of ways you can tackle this issue:

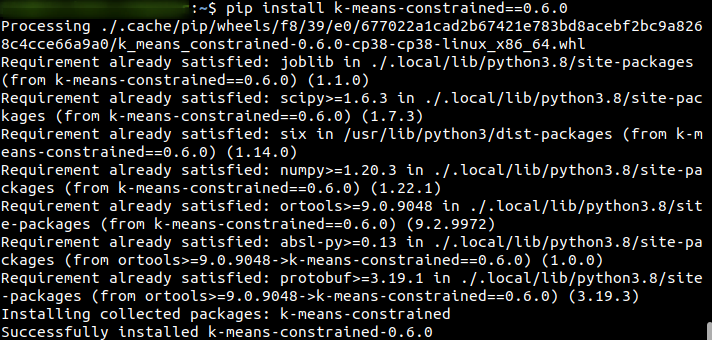

– You could recompile or reinstall the affected packages so that they match the version of NumPy you’re using. This fixes incompatibilities caused by mismatched versions.

– Alternatively, and more robustly, use one shared environment definition for both execution and deployment. Virtual environments (for instance, Conda environments) keep all dependencies under one umbrella and prevent such conflicts.
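Before choosing between reinstalling and isolating environments, it helps to confirm which import fails and which NumPy is actually loaded. The sketch below assumes you know the name of the module that triggers the error; `diagnose_import` is a hypothetical helper, not part of NumPy.

```python
import importlib

import numpy as np


def diagnose_import(module_name: str) -> str:
    """Try importing a module and report whether it hits the NumPy size check."""
    try:
        importlib.import_module(module_name)
    except ValueError as err:
        if "size changed" in str(err):
            return (
                f"{module_name}: binary incompatible with NumPy {np.__version__} "
                f"loaded from {np.__file__}; reinstall or rebuild it"
            )
        raise  # an unrelated ValueError: let it propagate
    return f"{module_name}: imported fine with NumPy {np.__version__}"


print(diagnose_import("json"))
```

Replace `"json"` with the package whose import fails; the reported path shows which NumPy installation the interpreter picked up.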

Stack Overflow provides an excellent and thorough discussion of this topic should you be interested in learning more about the potential concerns and solutions to “ValueError: numpy.ndarray size changed”. Having preventive measures in place saves time and keeps your project running smoothly. Remember – preemptive troubleshooting makes the most efficient coder!

# Here is a simple code example demonstrating the usage of ndarray
import numpy as np

a = np.array([1, 2, 3])  # Create a simple 1-dimensional array
print(type(a))
print(a.shape)
print(a[0], a[1], a[2])
a[0] = 5  # Change an element of the array
print(a)

An error such as `ValueError: numpy.ndarray size changed` results from a mismatch between the NumPy version used when a shared C object (a compiled extension module) was built and the version used when it is loaded. The root cause lies in how NumPy exposes its array structure to C extensions.

Numpy Version Conflict

The error typically surfaces when a compiled extension that was built against one version of NumPy is imported alongside a different version. In practice, the ValueError appears when the extension was built against a newer NumPy than the one installed, for instance after downgrading NumPy. The reverse direction, building against an older NumPy within the same major release series and running on a newer one, is the case NumPy’s binary compatibility is designed to support.

Shared C Objects

NumPy allows C extensions that use NumPy’s C API for improved performance. When these C extensions are compiled, many aspects of the NumPy array structure are encoded into the shared object. One such aspect is the size (`sizeof(PyArrayObject)`) of the array object.

If `sizeof(PyArrayObject)` changes (which can happen between releases), then any compiled shared objects will expect the wrong size. This can lead to segfaults or incorrect calculations if the compiled code accesses the array struct directly.

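To guard against such problems, NumPy records its ABI and C-API version numbers in its header files, and these are hardcoded into the shared object at compile time. When an extension calls `import_array()`, NumPy compares them with its own and, on a mismatch, fails with a separate error that reports the compiled and current versions. The “size changed” ValueError itself comes from a related check that Cython-generated modules perform when they import the `numpy.ndarray` type: they compare the struct size recorded from the C header at build time with the size reported by the runtime type object and, if the runtime object is smaller, raise the ValueError with both sizes.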

Solution

This error can be disruptive for developers who rely heavily on Python libraries, particularly in data science. A commonly suggested approach is not to install NumPy on its own as a standalone package, but to let the packages that need it declare it as a requirement, so that pip resolves a NumPy version that satisfies all of them. Keep in mind that a single environment holds only one NumPy version, so this reduces dependency conflicts rather than giving each package its own copy.

# Incorrect way (Don't do this)
pip install numpy
pip install PackageThatNeedsNumpy

# Correct way (Do this instead)
pip install PackageThatNeedsNumpy

This means you don’t need to install NumPy manually first; let pip resolve it as a dependency instead.
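To see which installed packages declare NumPy as a requirement, and with what version constraint, you can query the standard-library `importlib.metadata` module. This is a sketch that assumes Python 3.8+. The helper name `numpy_requirements` is hypothetical, and the requirement-string matching is deliberately simplistic.

```python
import re
from importlib.metadata import distributions, version


def numpy_requirements() -> dict:
    """Map installed distribution names to their declared NumPy requirement strings."""
    found = {}
    for dist in distributions():
        name = dist.metadata.get("Name") or "unknown"
        for req in dist.requires or []:
            # Matches "numpy>=1.22", "numpy (>=1.17)", "numpy; extra == 'test'"
            # but not "numpydoc" or "numpy-financial"
            if re.match(r"numpy(?![\w.-])", req.strip(), re.IGNORECASE):
                found.setdefault(name, []).append(req)
    return found


print("installed numpy:", version("numpy"))
for pkg, reqs in sorted(numpy_requirements().items()):
    print(f"{pkg}: {reqs}")
```

If the installed NumPy falls outside a package’s declared range, that package is a good first suspect.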

However, if none of these solutions work, or if NumPy itself forms a major part of your program, you might have to create isolated environments with virtualenv or Anaconda. Each environment can then hold its own NumPy version without interfering with the others.

For more details about `numpy.ndarray size changed`, see the related issue on NumPy’s GitHub page.

Recompile Extensions

A final option is to explicitly recompile the C extensions that use NumPy’s C API. Note that this requires access to the source code and the ability to build it successfully, which may not always be possible or practical.

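The `ValueError: numpy.ndarray size changed` error is typically encountered while importing a library. Four explanations are commonly offered for it: serialization issues, binary incompatibility, changes made to an ndarray at runtime, and system-specific complications. As the discussion below shows, binary incompatibility between a compiled extension and the installed NumPy is what actually produces this message; the other factors matter only insofar as they lead to that incompatibility.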

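Firstly, let’s consider serialization. With Python libraries such as pickle or joblib, we can save and load objects such as machine learning models quickly. They store a copy of an array’s data together with metadata such as its dtype and shape, not pointers to memory, so modifying an array after saving it neither affects the file nor raises this ValueError. Serialization can still surface the error indirectly: unpickling an object whose class lives in a compiled extension (a trained scikit-learn model, for example) imports that extension, and if it was built against an incompatible NumPy, the import fails.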

Let me illustrate with a quick code snippet:

import numpy as np
import joblib  # sklearn.externals.joblib was removed from scikit-learn; use the standalone package

x = np.array([1, 2, 3])
file_name = "test.pkl"
joblib.dump(x, file_name)

modified_x = np.append(x, [4, 5])  # returns a new array; x and the file are unchanged

# No ValueError here: test.pkl holds a copy of x's data, not a pointer to it
loaded_x = joblib.load(file_name)
print(loaded_x)  # [1 2 3]

The second reason, binary incompatibility, is the actual source of this error. It happens when a compiled extension in your environment was built against a different NumPy than the one installed. For example, a package may have been built from source against one NumPy and NumPy later changed, or wheels built for incompatible NumPy releases may have been mixed in one environment.

The third suggested cause is accidentally changing one of NumPy’s ndarrays inside a module, for instance by resizing it. This does not trigger the error. Resizing produces an array with a different shape and data buffer, but the ndarray C struct that compiled extensions check at import time stays the same size. The following code therefore runs without any ValueError:

import numpy as np

def faulty_function():
    array_1 = np.zeros((10, 2))
    # changing the original array shape
    array_1 = np.resize(array_1, (20, 2))

faulty_function()

System-specific complications can contribute indirectly. One example is an incorrectly set environment variable such as `PYTHONPATH` that makes one interpreter import packages built for a different NumPy installation.

To conclude, understanding the causes behind `ValueError: numpy.ndarray size changed` guides us toward better, error-free development practices. We can prevent this ValueError by using serialization libraries appropriately, keeping package versions consistent across systems, rebuilding compiled modules when NumPy changes, and setting up a coherent environment.

Diving into the core of ValueError issues, particularly the `ValueError: numpy.ndarray size changed` message, it’s crucial to first understand what a ValueError is. In Python, a ValueError occurs when an operation or function receives an argument of the correct data type but with an inappropriate value. In the case of `numpy.ndarray size changed`, this ValueError typically emerges when code compiled against a newer version of NumPy is run with an older version.
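A small illustration of that definition using only built-ins: `int()` accepts a string, but not every string is a valid integer literal. The helper `parse_count` is a made-up name used only for this example.

```python
def parse_count(text: str) -> int:
    """Convert text to int, showing the ValueError raised for a bad value."""
    try:
        return int(text)
    except ValueError as err:
        # Right type (str), inappropriate value
        print(f"ValueError: {err}")
        return -1


print(parse_count("42"))   # 42
print(parse_count("abc"))  # prints the ValueError message, then -1
```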

So, let’s get analytical about our problem and cook up some solutions:

Solution One: Upgrade Your Numpy Package

The easiest solution to this ValueError is simply upgrading your NumPy package. To do so, you can use pip in your terminal as follows:

pip install --upgrade numpy

This command will ensure your numpy package is updated to the latest stable version.

Solution Two: Downgrade Your Numpy Package

Some libraries require a specific version of the numpy library to function correctly. In this scenario, a downgrade could be necessary. You can downgrade your numpy version by using pip in your terminal:

pip install numpy==1.19.3

Here, `numpy==1.19.3` signifies that we want to install numpy version 1.19.3. Replace this with the version compatible with your library.
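Whether to upgrade or downgrade depends on how the installed NumPy compares with the version the failing package was built against. The rough sketch below assumes you know that build version, for example from the package’s release notes or build requirements. `version_tuple` and `is_at_least` are hypothetical helpers that ignore pre-release tags. For real projects, the `packaging` library’s version parsing is more robust.

```python
import re

import numpy as np


def version_tuple(v: str) -> tuple:
    """Extract the leading numeric release segment, e.g. '2.0.0rc1' -> (2, 0, 0)."""
    match = re.match(r"(\d+(?:\.\d+)*)", v)
    if match is None:
        raise ValueError(f"unrecognized version string: {v!r}")
    return tuple(int(part) for part in match.group(1).split("."))


def is_at_least(installed: str, built_against: str) -> bool:
    """True if the installed NumPy is at least as new as the build-time NumPy."""
    return version_tuple(installed) >= version_tuple(built_against)


# Hypothetical build-time version; replace it with the real one for your package
built_against = "1.19.3"
if not is_at_least(np.__version__, built_against):
    print(f"NumPy {np.__version__} is older than {built_against}: consider upgrading")
```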

Solution Three: Recompiling the Code

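An alternative approach is to recompile the problematic extension against the currently installed NumPy version. This can come in handy when a module was built in a different environment. With pip, one way is to force a source build of the affected package without build isolation, so that it compiles against the NumPy already installed (its build dependencies, such as Cython, must then already be installed):

# package_name is a placeholder for the package whose import fails
pip install --force-reinstall --no-deps --no-build-isolation --no-binary package_name package_name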

Solution Four: Applying a Clean Installation

A clean install involves entirely removing and reinstalling a problematic package. For instance, eliminating numpy before freshly installing it again can sometimes help to resolve particular ValueErrors:

First, uninstall numpy:

pip uninstall numpy

Then reinstall it:

pip install numpy

Solution Five: Utilizing Virtual Environments

Virtual environments are worth considering in such circumstances. They let us maintain separate spaces for our projects, in which we can manage individual dependencies without clashes. Using Python’s built-in venv module (the third-party virtualenv package works similarly), we create isolated environments as shown below:

Creating a virtual environment:

python3 -m venv env_name

Activating the environment:

On Windows:

.\env_name\Scripts\activate

On Unix/MacOS:

source env_name/bin/activate

In this newly formed virtual environment, install only the needed packages individually, mitigating any compatibility issues from the start.
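You can also create the same environment from Python with the standard-library `venv` module, which is handy in setup scripts. This is a minimal sketch. The directory name `env_name` mirrors the commands above and is a placeholder, and `with_pip=False` skips bootstrapping pip only to keep the example fast.

```python
import tempfile
import venv
from pathlib import Path


def make_env(parent: Path, name: str = "env_name") -> Path:
    """Create an isolated virtual environment and return its directory."""
    env_dir = parent / name
    # with_pip=True would also install pip into the environment (slower)
    venv.create(env_dir, with_pip=False)
    return env_dir


with tempfile.TemporaryDirectory() as tmp:
    env = make_env(Path(tmp))
    # Every venv records its configuration in pyvenv.cfg
    print((env / "pyvenv.cfg").exists())
```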

Remember that these are potential solutions for different root causes of the ValueError at hand. Depending on what triggers the error in the first place, such as environment conflicts or outdated or incompatible versions, you may need to work through several of them to find the one that fits your situation.

For reference and a more detailed understanding, consult the official NumPy documentation and the pip documentation.

The `numpy.ndarray` object is a multi-dimensional container of items of the same type and size. Its power lies in its versatility for mathematical work: it lets you perform operations on whole arrays as if they were regular Python values.
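You can see the “same type and size” property directly. NumPy converts mixed Python numbers to one common dtype, and every element then occupies the same number of bytes.

```python
import numpy as np

# Mixed int and float inputs are upcast to a single dtype
arr = np.array([1, 2.5, 3])
print(arr.dtype)     # float64
print(arr.itemsize)  # 8 bytes per element
print(arr.nbytes)    # 24 = 3 elements * 8 bytes
```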

After some updates, you may encounter an error message like

“ValueError: numpy.ndarray size changed”

in the console. Despite the wording, “size” here refers to the size of the ndarray’s underlying C struct, not to the number of elements in any particular array.

| Error Message |
|---|
| ValueError: numpy.ndarray size changed, may indicate binary incompatibility |

The cause usually lies in the code’s environment: library versions, or a mismatch between the NumPy C API an extension was compiled against and the one installed.

To get into this, let’s first discuss how ndarray works. In essence, arrays are usually created with the `numpy.array` function. You can refer to the official NumPy documentation for an extensive guide. Typically, your code will look like this:

import numpy as np

arr = np.array([1, 2, 3])
print(arr)

Modifying such an array, for example by inserting extra elements that change how many it holds, does not provoke this ValueError. Functions such as `np.insert` and `np.append` return a new array, and the ndarray C struct has the same size for every array regardless of its element count.
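You can check directly whether an array’s element count affects the ndarray struct size. Compare the type-level `__basicsize__`, the C struct size that compiled extensions care about, with `nbytes`, the size of the data buffer.

```python
import numpy as np

small = np.zeros(3)
large = np.append(small, np.zeros(999_997))  # returns a new, larger array

# Same ndarray type, so the same C struct size, regardless of element count
print(type(small).__basicsize__ == type(large).__basicsize__)  # True

# Only the data buffer grows
print(small.nbytes, large.nbytes)  # 24 8000000
```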

Returning to our predicament: a traceback containing “numpy.ndarray size changed” generally indicates that the NumPy headers used to compile the .pyd/.so file don’t match the NumPy loaded at runtime. Code compiled against NumPy v1.x could misread an array structure created by NumPy v1.y at runtime, because the ndarray C structures can change over time.

Resolving this usually involves recompiling the offending code against the NumPy version installed at runtime. For example, if a struct’s size changed between v1.x and v1.y, recompile any code built against v1.x with v1.y instead. This ensures that the C structure layout matches between your binary and the NumPy your interpreter actually loads. The snippet below is a hypothetical illustration of how a compile-time version check fixes a struct’s layout, and therefore its size, inside a binary. The struct `PyArrayInterfaceObject` and its `flags` field are not part of NumPy’s headers.

/* numpy >= 1.7 check, resolved once at compile time */
#include <Python.h>
#include <numpy/arrayobject.h>

#if NPY_API_VERSION >= NPY_1_7_API_VERSION
/* Newer layout: the extra field makes the struct larger */
typedef struct {
    PyObject_HEAD
    PyArray_Descr *descr;
    int flags;
} PyArrayInterfaceObject;
#else
/* Older layout */
typedef struct {
    PyObject_HEAD
    PyArray_Descr *descr;
} PyArrayInterfaceObject;
#endif

We have seen that an error like “numpy.ndarray size changed” usually traces back to a mismatch between the NumPy version used at compile time and the one used at runtime. It is therefore important to keep NumPy versions in sync across all environments. The problem stems from NumPy’s configuration rather than random chance, and keeping that configuration consistent minimizes the chance of the error recurring.

The error “ValueError: numpy.ndarray size changed” is sometimes blamed on resizing a NumPy array in a way the original memory block cannot accommodate. Resizing does change the structure and values of an ndarray, which can lead to unexpected behavior in your code. It does not, however, produce this particular error, which concerns the size of the ndarray C struct rather than the number of elements in an array.

Resizing Numpy.ndarrays

When resizing NumPy arrays, the `numpy.resize()` function is typically employed, as follows:

import numpy as np

# Create a numpy array
a = np.array([[1, 2, 3], [4, 5, 6]])

# resize the array
b = np.resize(a, (3, 2))

print("Resized array : ", b)

The result will be:

Resized array :  [[1 2]
 [3 4]
 [5 6]]

This operation has implications: it doesn’t preserve the original shape, and it can change which values end up where. Whilst the original matrix was 2×3, after resizing it became 3×2. The elements keep their flattened (row-major) order, 1 through 6, but are regrouped to fill the new dimensions, so the rows of the result no longer correspond to the rows of the original. When the new shape holds more elements than the original, `np.resize` repeats the data, and when it holds fewer, it truncates it.
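You can check the regrouping, repetition, and truncation behaviour of `np.resize` directly. Note that `np.resize` repeats the data to fill a larger shape, whereas the in-place `ndarray.resize` method pads with zeros instead.

```python
import numpy as np

a = np.array([[1, 2, 3], [4, 5, 6]])

# Same number of elements: C-order data regrouped into a new shape
print(np.resize(a, (3, 2)).tolist())  # [[1, 2], [3, 4], [5, 6]]

# Larger: the data is repeated to fill the new shape
print(np.resize(a, (2, 4)).tolist())  # [[1, 2, 3, 4], [5, 6, 1, 2]]

# Smaller: the data is truncated
print(np.resize(a, (1, 4)).tolist())  # [[1, 2, 3, 4]]

# The original array is untouched; np.resize returns a new array
print(a.shape)  # (2, 3)
```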

Implication of Resizing on ValueError

Now let’s consider whether resizing can trigger the ValueError. A claim sometimes made is that compiled Python bytecode (*.pyc files) records how many bytes an ndarray should occupy, so that resizing at runtime creates a discrepancy. It does not: arrays are created at runtime, and bytecode stores no array sizes. The size check behind this error compares the ndarray C struct size recorded in compiled extension modules (.so/.pyd files) with that of the installed NumPy, and resizing an array never changes that struct.

Consider a function like this:

import numpy as np

def return_array():
    arr = np.zeros((10,))
    # any process here
    arr = np.resize(arr, (20,))
    return arr

Let’s imagine that we had initially compiled and used this function without the resizing operation, and later added it. This changes how much memory `arr` uses, but nothing recorded the old footprint. Python regenerates the .pyc file automatically when the source changes, and the bytecode never stored the size of `arr` anyway. Adding the resize therefore cannot raise this ValueError.

Solutions

Running Python scripts with the -B option (which only tells the interpreter not to write .pyc files on import) will not resolve this error, and neither will avoiding resizing arrays returned from functions, because neither touches its cause. To resolve it:

- Upgrade NumPy so that it is at least as new as the version the failing extension was built against.

- Reinstall or rebuild the failing package against the NumPy version you have installed.

Keeping the structure of your NumPy arrays consistent throughout your operations is still good practice, as unexpected resizing causes bugs of its own, but it is unrelated to this ValueError.

References

For a more detailed understanding, see:

- NumPy resize documentation

- Stack Overflow discussion on a similar issue

Almost every data-savvy Python programmer has applauded the wonders of NumPy, especially for data manipulation. However, there are times when NumPy errors can be quite baffling. A common one is `ValueError: numpy.ndarray size changed`, which, despite its name, is not a dimensionality error.

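Let’s break down this error. The message might suggest that the dimensions of a `numpy.ndarray` object were altered somewhere they weren’t supposed to change. It actually reports a difference in the size of the ndarray C struct between the NumPy a compiled extension was built against and the one installed. Genuine dimension mistakes, such as indexing an array out of bounds, reshaping an array into a shape that does not fit, or combining arrays with incompatible shapes, raise different errors (an IndexError, or a ValueError with its own message).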

Tracking down those dimension errors usually requires a careful look at where and how your NumPy arrays are initialized and manipulated. To illustrate, let’s look at reshaping, which adjusts the number of elements along each axis (dimension).

At first, suppose we’ve got a 1-dimensional array with 5 elements:

import numpy as np

a = np.array([1, 2, 3, 4, 5])

# Print the shape
print(a.shape)  # Output: (5,)

Now, if you try reshaping it into something incompatible, like (3, 2), you’ll get a ValueError, because you’re effectively trying to fill 6 slots with only 5 elements. Its message is “cannot reshape array of size 5 into shape (3,2)”, not the size-changed message:

# Inappropriate reshape
a.reshape(3, 2)  # Output: ValueError

This kind of pitfall raises a ValueError right away. Still, it’s good practice to confirm structural compatibility before reshaping or slicing arrays; knowing the shape before a reshape helps. Other preventive measures include:

– Making sure your array manipulations stay within bounds.

– Verifying initial array definitions match their intended use throughout the code.

– Using NumPy tools that add axes explicitly when needed, such as `numpy.newaxis` and `numpy.expand_dims`.
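You can fold those checks into a small helper. This sketch assumes you want to fail early with a clearer message than NumPy’s own. `safe_reshape` is a hypothetical name, and it does not handle the `-1` placeholder that `reshape` supports.

```python
import math

import numpy as np


def safe_reshape(arr: np.ndarray, shape: tuple) -> np.ndarray:
    """Reshape only if the target shape holds exactly arr.size elements."""
    needed = math.prod(shape)
    if needed != arr.size:
        raise ValueError(
            f"shape {shape} needs {needed} elements, but the array has {arr.size}"
        )
    return arr.reshape(shape)


a = np.array([1, 2, 3, 4, 5])
print(safe_reshape(a, (5, 1)).shape)  # (5, 1)

# Adding an axis explicitly instead of reshaping
print(a[np.newaxis, :].shape)           # (1, 5)
print(np.expand_dims(a, axis=1).shape)  # (5, 1)
```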

Most importantly, the size-changed error itself arises from an inconsistency between two NumPy versions: the one a compiled package was built against and the one installed. Upgrading NumPy, or less often downgrading it to match, usually resolves it. Keeping track of software dependency versions on complex projects is crucial.

Dealing with these errors takes a thorough knowledge of arrays’ characteristics, careful manipulation, and disciplined management of software dependencies. These are essential tools for any Python debugger and help ensure efficient, high-quality program delivery.

Checking some online resources like the official NumPy documentation can further enhance your understanding of array manipulations and error prevention. Your journey doesn’t end here. Keep exploring, keep coding!

The `ValueError` with the message “numpy.ndarray size changed” is a common issue Python developers encounter when working with the NumPy library. It can cost hours of hair-pulling if not understood well. Here, I will walk you through some cases where this error might occur and how to debug it.

Case 1: Mismatch between the installed NumPy version and the version a compiled extension was built against

import numpy as np

a = np.array([1, 2, 3])
b = np.array([4, 5, 6])
np.dot(a, b)

Code like this never raises the error by itself; plain NumPy operations work fine. The `ValueError: numpy.ndarray size changed` appears when the program imports a compiled extension built against a different NumPy than the one installed. This can happen if you changed (especially downgraded) NumPy after installing packages with compiled extensions. Pure Python code is not affected by such changes, but compiled extensions depend on the exact layout of NumPy’s C data structures, so binary incompatibility leads to errors like `ValueError: numpy.ndarray size changed`.

To rectify this situation:

– Uninstall numpy and install it again.

– Re-install all packages using numpy or containing compiled code that depends on numpy.

Case 2: Using Pickle to load an object serialized with a different version of NumPy

For instance, suppose we used pickle to save an ndarray:

import pickle
import numpy as np

my_array = np.arange(10)
with open('my_array.pickle', 'wb') as f:
    pickle.dump(my_array, f)

Now suppose that, after saving the file, you upgraded NumPy and loaded the saved ndarray:

with open('my_array.pickle', 'rb') as f:
    my_new_array = pickle.load(f)

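A plain ndarray pickle like this does not, by itself, raise `ValueError: numpy.ndarray size changed`. The error appears when unpickling imports a compiled extension (for example, the module defining a pickled scikit-learn model) that is binary-incompatible with the installed NumPy. To avoid version-related surprises, ensure that pickling and unpickling use the same version of NumPy. Alternatively, avoid using pickle to serialize ndarray objects and use dedicated functions such as `numpy.save` or `numpy.savetxt` instead.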
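Here is a minimal sketch of the NumPy-native alternative using `numpy.save` and `numpy.load`. It writes to an in-memory buffer so nothing touches the disk; a filename such as `my_array.npy` works the same way. The `.npy` format stores the dtype and shape alongside the data, and `allow_pickle=False` makes sure no pickled objects are loaded.

```python
import io

import numpy as np

my_array = np.arange(10, dtype=np.int32)

buffer = io.BytesIO()
np.save(buffer, my_array)  # writes the .npy header (dtype, shape) and raw data
buffer.seek(0)

my_new_array = np.load(buffer, allow_pickle=False)
print(my_new_array.dtype, my_new_array.shape)  # int32 (10,)
```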

To sum up, `ValueError: numpy.ndarray size changed` usually pops up when there is binary incompatibility between the NumPy a compiled package was built against and the NumPy installed in your environment. To safeguard against this, keep your libraries consistent and up to date, especially those your application relies on heavily, like NumPy. When serializing NumPy data, consider avoiding pickle and using NumPy’s own serialization functions for compatibility across NumPy versions.

Further documentation can be found on NumPy’s official website.

If you encounter the `ValueError: numpy.ndarray size changed` error, it’s most likely due to a mismatch between the NumPy version a compiled package was built against and the one installed in the current environment. It can surface while loading pickled objects, because unpickling imports the compiled modules those objects depend on. The potential troubleshooting methods include:

- Checking your environment and confirming the Numpy version compatibility,

- Regenerating the pickled files with the same Numpy version,

- Avoiding pickling Numpy arrays directly – instead, convert them into a format like lists before pickling.

It’s crucial to understand the runtime environment where your code executes to prevent such anomalies. Always package the application together with the dependencies to minimize the risk of version conflicts. A popular way to handle this is through requirements.txt or a container setup using technologies like Docker.(source)

Investing time into mastering Numpy techniques will lead to significant returns, considering how much it can simplify computations involving large datasets. The growing prominence of data science and machine learning intensifies the relevance of handling large dataset efficiently, thus elevating the significance of mastering tools like Numpy.(source)

Let’s take a look at a simple example demonstrating how to pickle and unpickle a Numpy array:

# Required Libraries
import pickle
import numpy as np

# Create a numpy array
original_array = np.array([1, 2, 3])

# Pickling numpy array
with open('nump_array.pkl', 'wb') as f:
    # Convert ndarray to list before pickling.
    pickle.dump(original_array.tolist(), f)

# Unpickling numpy array
with open('nump_array.pkl', 'rb') as f:
    # Convert the pickled list back to ndarray after loading.
    data = np.array(pickle.load(f))

print("Recovered Array: ", data)

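This workaround ensures that your array data remains readable even if different NumPy versions are used for pickling and unpickling, since the file contains only built-in Python objects. Note that it discards the dtype (a float32 array comes back as float64, for example), and it does not fix a size-changed error raised by an incompatible compiled extension.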
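If you do take the list route, you can keep the dtype by pickling it alongside the data. The dictionary layout with `data` and `dtype` keys is an assumption of this example, not a standard format.

```python
import pickle

import numpy as np

original_array = np.array([1.5, 2.5, 3.5], dtype=np.float32)

# Store plain Python objects only: the values and the dtype name
payload = pickle.dumps({"data": original_array.tolist(), "dtype": str(original_array.dtype)})

restored = pickle.loads(payload)
data = np.array(restored["data"], dtype=restored["dtype"])
print(data.dtype)  # float32

# Without the stored dtype, the list round trip yields float64
print(np.array(restored["data"]).dtype)  # float64
```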

| Action | Code Snippet |
|---|---|
| Pickle numpy array | `pickle.dump(original_array.tolist(), f)` |
| Unpickle numpy array | `np.array(pickle.load(f))` |

The `ValueError: numpy.ndarray size changed` error may be a minor hiccup on your data science journey. Yet it underscores essential aspects of programming: adhering to the principles of software development, keeping runtime environments consistent, and understanding the libraries we use.