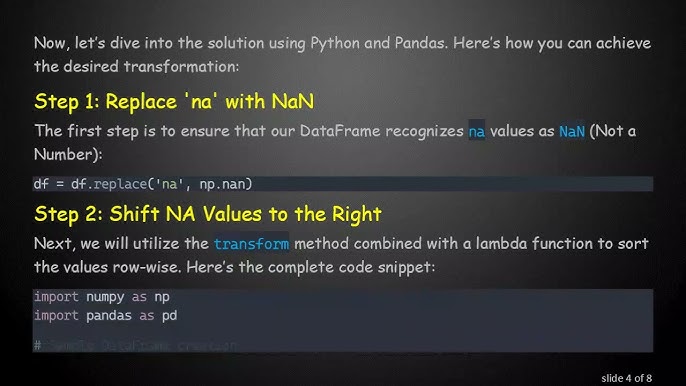

| pd.NA | np.nan | |

|---|---|---|

| Data Type | Pandas’s new NA value (Pandas 1.0.2 onwards) | Numpy’s scalar for missing values. |

| Type | Object type irrespective of array dtype | Float type irrespective of array dtype |

| Inclusive Operation | A property such as

df['column'].isna()

will include pd.NA |

A property such as

df['column'].isna() will include np.nan |

| Performance issue | No performance problem. Use for large dataframe as dtype=’Int64′, ‘boolean’ etc. Supports nullable integer | Its a float – May cause memory and performance issues in a large dataframe |

Both `pd.NA` and `np.nan` can be detected and managed by utilising efficient functions like `isna()`. However, significant differences lie in their impacts on the array datatype and overall memory consumption.

While `np.nan` always upcasts to float types despite the dataset’s original data type, pd.NA maintains the same data type. Furthermore, `np.nan` might lead to performance degradation when managing large scale datasets as it requires larger memory storage due to upcasting. On the contrary, `pd.NA` persists the data type and therefore takes care of this shortcoming, making it a more efficient choice for bigger data sets while maintaining optimal performance.

By considering these pivotal factors, coders can better handle data operations when working with missing or null data values in any programming-scenario. Always remember, understanding these subtle differences provide great benefit in implementing the most effective data management strategies.Understanding the basics of

pd.NA

and

np.NaN

in Pandas is critical for anyone well-versed in data analysis. These two are key aspects when it comes to handling missing values in data sets within Pandas.

Let’s examine more closely:

pd.NA

is a relatively new introduction in Pandas, suitable for any data type. This “missingness” indicator provides a consistent approach to handle null or missing values, irrespective of whether you’re dealing with numerical, object, or boolean data.

Here is an example usage:

import pandas as pd

# Creating a simple DataFrame with some missing elements using pd.NA.

df = pd.DataFrame({

'Column1': [1, 2, pd.NA],

'Column2': [pd.NA, 'b', 'c'],

})

print(df)

Whilst

np.NaN

(Not A Number) is an older method from NumPy library, mainly intended for float data. It morphs the behaviour of an entire data set or array to floating point arithmetic, which can lead to unexpected results.

For instance, consider the snapshot below:

import numpy as np # An integer array arr = np.array([1,2,np.NaN]) print(arr.dtype) #You'll find the array changed to float; 'float64'.

Now, comparing `pd.NA` and `np.NaN`:

– Data Type Handling:

| pd.NA | np.NaN |

|---|---|

| Provides a consistent approach across several data types. | Limited primarily to float type, even coerces integer arrays to float. May result in loss of precision. |

– Functionality:

| pd.NA | np.NaN |

|---|---|

| Particularly helpful for logical operations. For instance, when used with Boolean data, evaluating to True or False returns pd.NA if the condition cannot be determined because of a missing value. | May behave strangely when used in logical operations. Since NaN != NaN in numpy, logically inspecting np.NaN might deliver incorrect outcomes. |

Despite these distinctions, they both serve alike in crucial situations – filling in the blanks in data processing workflows. Understanding where to use each of these can optimize your data handling process.

To augment your understanding on this topic, you can visit

Pandas Documentation on Missing Data.If you’re using Python for data analysis, pandas is one of those libraries you can’t afford to ignore. And when it comes to pandas, the way we handle missing data can greatly influence the result of our analysis. One of the things that often pop up in data is the concept of “null” or “missing” values. It’s critical how we deal with these values. Among others, pandas provides us two ways pd.NA and np.nan.

To begin with,

pd.NA

is a newer approach introduced in version 1.0.0 with an objective to provide a standard “missing” value marker. The benefit is, across different data types like integer, boolean which were not supported by NAN,

pd.NA

can be used consistently.

On the other hand,

np.nan

is the classic way of handling missing data but with some limitations. It’s undefined over integers, booleans and it tends to slow down computation as every arithmetic operation involving

np.nan

results in a float output.

Let’s see how they behave differently:

#Import Pandas and Numpy

import pandas as pd

import numpy as np

#Creating DataFrame with np.nan and pd.Na

df = pd.DataFrame({

'With_NaN': pd.Series([4, 5, np.nan]),

'With_NA': pd.Series([1, 2, pd.NA])

})

print(df)

#Output will be:

With_NaN With_NA

0 4.0 1

1 5.0 2

2 NaN

Notice, how integer values combined with

np.nan

got converted to float due to upcasting, and how well

pd.NA

worked with integer.

In comparison to

np.nan

,

pd.Na

aids in efficient memory usage as it doesn’t unnecessarily convert integers to floats when combined with missing value – this helps especially when dealing with large datasets where every bit of efficiency counts.

Moreover,

pd.NA

operates similarly like NULL in SQL in logical operations. You can use bitwise operators (‘|’ for OR, ‘&’ for AND) with NA and get expected outputs unlike with

np.nan

.

Let’s see how:

# Logical operation with pd.NA print(True | pd.NA) #Output:# Logical operation with np.nan print(True | np.nan) #Output: True # You'd rather expect the first case behaviour from a missing value.

Although, There are still some pandas operations where

np.nan

is treated as the default missing value marker (like in GroupBy.nth). But, pandas ensures compatibility by propagating

np.nan

values.

While either approach has its own advantages,

pd.NA

provides a more standardized way to deal with missing values across different datatypes including the extension types.

To learn more you may check out Pandas official documentation on missing data.In the realms of data analysis and treatment, it is quite commonplace to stumble upon missing or undefined data points. Two common methods implemented in Python for handling such data are

pd.NaT

from Pandas and

np.nan

from NumPy [source] .

Comparatively,

np.nan

(NaN meaning “Not a Number”) is the older method stemming from NumPy’s influence by the IEEE floating-point standard [source], which identifies missing or undefined number data.

Yet, if we closely analyze, within Pandas’ ecosystem, the usage of

np.nan

poses certain limitations:

- It might not work correctly with non-floating types.

- When performing operations on integers or boolean data fields, the dataset gets converted into floats, thereby losing initial type information.

- Type Flexibility: A notable distinction can be noticed in their type flexibility. While

np.NaN

is a floating point value, thus implicitly converting any integer or boolean arrays to float arrays when used, on the other hand,

pd.NA

represents a “missing” value, regardless of the array’s data type. Hence,

pd.NA

can be used in arrays of any data type without altering them.

- Arithmetic Operations: With regards to arithmetic operations, both function differently. When

np.NaN

encounters with numbers during computation, it results in another NaN but if

pd.NA

is engaged in an operation with a number, then it gives

pd.NA

.

- Logical Operations: Also, logical comparisons involving

np.NaN

are always False, except NaN != NaN which is True. However, in case of

pd.NA

, all logical comparisons returns

pd.NA

.

Here is a quick glimpse of how this could provide misleading results:

import numpy as np

import pandas as pd

# Create a DataFrame containing integer and np.nan

df = pd.DataFrame({'A': [1, 2, np.nan]})

# Check what type the column "A" is

print(df["A"].dtype)

The answer will be

float64

even though you initially started with an integer.

That being said, on the other hand, Pandas introduced a new NA scalar, penned as

pd.NaT

, intending to become more flexible when dealing with different data types.

pd.NaT

stands for ‘Not a Time’ and is used to represent missing time data. Yet, its significance transcends its basic functionality- it serves as a general-purpose “missing” marker that can handle all data types [source]. That resolves the constraints observed with

np.nan

.

Check out this following snippet:

# Create a DataFrame containing integer and pd.NA

df = pd.DataFrame({'A': [1, 2, pd.NA]})

# Check what type the column "A" is

print(df["A"].dtype)

Surprisingly, the answer will be

Int64

, thus reflecting the maintaining of original data types while including missing or undefined data.

Furthermore, the additional advantage stretching with

pd.NaT

is its compatibility with Boolean data, cleverly overcoming yet another stumbling block poised by traditional NaN – here’s how it works:

# Introduce Boolean values

df = pd.DataFrame({'B': [True, False, pd.NA]})

# Test the dtype

print(df['B'].dtype)

You will get

boolean

as a result, signifying that the sync with both Integer and Boolean data works effortlessly well, hereby advocating for the preference of

pd.NaT

as the ideal ‘missing’ marker.

Therefore, through our engaging study, we unravel that while working within the Pandas library, opting for

pd.NaT

over

np.nan

enables us to comfortably handle varied data types without wreaking havoc on the original data format.Here’s a deep dive comparing two essential elements in data analysis with Python, specifically with Pandas library: the designation of missing or null values. The two alternatives we’re looking at are

Pd.NA

and

np.nan

.

The Basics: Pd.NA and np.nan

Both

Pd.NA

and

np.nan

serve the purpose of denoting missing or undefined values in our data. NaN means ‘Not a Number’. However, they come from different libraries and their behavior can somewhat vary.

Utilisation

Pd.NA

is specific to the Pandas library. It was introduced recently in version 1.0.0 (released in January 2020) as an experimental feature.

“The goal of pd.NA is to provide a “missing” indicator that can be used consistently across data types.”source

On the other hand,

np.nan

comes from numpy library, which underpins many other Python data libraries, including pandas.

Difference in Operation

Let’s consider sum, mean, and equality operations performed using both

Pd.NA

and

np.nan

.

Sum and Mean

In general, when performing an operation like a sum or a mean with

np.nan

, the result is also

np.nan

. This ensures consistency and reduces errors when operating over arrays including missing values.

However,

Pd.NA

works differently. When calculating the mean or sum, if the operation encounters

Pd.NA

, it ignores this value and continues the operation.

Consider the following example:

import pandas as pd import numpy as np # With np.nan data_nan = np.array([1, np.nan, 3]) print(data_nan.sum()) # Output: nan print(data_nan.mean()) # Output: nan # With pd.NA data_NA = pd.array([1, pd.NA, 3], dtype="Int64") print(data_NA.sum()) # Output: 4 print(data_NA.mean()) # Output: 2

Equality

For the equality comparison,

np.nan

is not equal to anything, even itself. In contrast,

Pd.NA

: is not equal to anything, but it doesn’t assert it either. It returns a

Pd.NA

when compared.

Consider the following example:

print(np.nan == np.nan) # Output: False print(pd.NA == pd.NA) # Output:

Memory Usage

Pd.NA

uses less memory than

np.nan

. This is because Pandas does not have to create a float object for each missing value.

The Verdict: Pd.NA vs np.nan

When working strictly within the Pandas ecosystem,

Pd.NA

offers advantages thanks to its more consistent behavior, lower memory usage, and better handling of missing values in aggregation functions.

The downside of

Pd.NA

is that it is still marked as ‘experimental’, thus backwards compatibility is not guaranteed, although it is reasonably stable according to Pandas documentation.

On the other hand,

np.nan

has been around longer, so you will encounter this much more frequently; especially when working with packages outside of Pandas that are yet to support

Pd.NA

. Moreover, certain NumPy operations are not available when using

Pd.NA

.

Ultimately, your choice between

Pd.NA

or

np.nan

should take into account these differences and the larger context of your project. Both have their roles, advantages and limitations in the realm of data analysis.Sure, let’s have a discussion about using

pd.NA

versus

np.nan

for handling missing data in Pandas. We’ll focus on certain aspects such as their characteristics, how to use them effectively in Pandas, and certain scenarios where one might be preferred over the other.

The Characteristics of pd.NA and np.nan

– The

np.nan

is a special floating-point value recognized by all systems that implement the standard IEEE floating-point arithmetic. As a result, it has a type of float. It’s used in Pandas to represent missing or undefined numerical data.

– The

pd.NA

, introduced recently in Pandas 1.0, stands for ‘Pandas NA’. It’s more versatile than

np.nan

because it can be used with any type of data, not just numerical data. It’s meant to make operations involving missing data more consistent.

Effective Implementation Tips When Using pd.NA and np.nan

– If you’re working with non-numerical data (e.g., boolean, string), you might consider using

pd.NA

. It was created to address the need to mark missing data regardless of whether it is a float, integer, boolean or object datatype. With

pd.NA

, you are able to maintain the dtype of your array.

Here’s an example:

import pandas as pd

s = pd.Series(["dog", "cat", None, "rabbit"], dtype="string")

print(s)

– On the other hand, if you’re dealing with numerical data and older functions/packages that may not recognize

pd.NA

,

np.nan

can be more appropriate. In this regard, while

pd.NA

may offer greater consistency, there’s still a major advantage to

np.nan

‘s widespread recognition and compatibility across different areas of the Python ecosystem.

A usage case could be:

import numpy as np

import pandas as pd

s = pd.Series([1, 2, np.nan, 4])

print(s)

Favoring pd.NA Over np.nan in Pandas

Here’s why you might favor

pd.NA

over

np.nan

:

– It supports more dtypes

– It introduces consistent behaviour, which leads to fewer surprises when performing operations on missing data

Considering these factors, my advice is to start adapting to the

pd.NA

paradigm. Nevertheless, for backward compatibility, smooth transition, and adaption over time, both

np.nan

and

pd.NA

will continue to co-exist in the Pandas universe[^1^].

[^1^]: Missing data: pandas User GuideSure, let’s take a deep dive into the intricacies of

Pd.NA

and

Np.NaN

, both significant entities in the realm of data handling using the powerful Python libraries: Pandas and NumPy.

To clarify for anyone unfamiliar with these terms:

–

Pd.NA

is a newer representation of missing values introduced by the Pandas library.

– On the other hand,

Np.NaN

(standing for *Not a Number*), is a more traditional way of representing missing numeric values, coming from the Numerical Python (NumPy) library.

For striking a comprehensive comparison between

Pd.NA

and

Np.NaN

, I will spotlight their primary differences across three main respects:

– **Representation**

– **Data Types Compatibility**

– **Operations Handling**

Understanding these distinctions thoroughly is vital for data practitioners, as it significantly affects how the missing values are handled during data cleaning, manipulation, and analysis.

REPRESENTATION:

Np.NaN

originates from the IEEE floating-point standard denoting a “Not a Number” value. It was first adopted in data-handling programming due to its property of propagating through all computations without raising an exception. However, one significant limitation of using

Np.NaN

is that it upcasts other types, such as integers, to floats. This behavior leads to some unintuitive results, particularly when working with integer or boolean arrays.

Contrarily,

Pd.NA

symbolizes a “missing” value of any type, transparently propagating through operations just like

Np.NaN

. But unlike NaN,

Pd.NA

doesn’t force upcasting and can exist alongside any data type, enhancing its versatility and usage.

DATA TYPES COMPATIBILITY:

Due to its base in the float type,

Np.NaN

proposes issues when utilized with various non-floating types. Using the missing value NaN within an array of integers or booleans enforces those elements to be cast to a float, which might not always be desirable.

On the contrary,

Pd.NA

is designed to coexist seamlessly alongside any data type, including numeric, string, and boolean, without imposing casting and retaining the original data types.

Pd.NA

is essentially a “type-less” NA value, bolstering its dexterity over

Np.NaN

.

OPERATIONS HANDLING:

Pandas has historically used

Np.NaN

as a marker for missing data, ensuring that operations (like mean or sum) ignore these missing values. Nonetheless, it also induces a certain ambiguity – for instance, operations like an equality check (

np.nan == np.nan

) would yield an unexpected False.

Pd.NA

provides an alternative placeholder for missing data and is also compatible with the

pd.NA

equality operations. A comparison of

Pd.NA

with any value (including itself) yields a

Pd.NA

. This way, it eliminates the instances of possible ambiguities.

It’s worth noting that the introduction of

Pd.NA

doesn’t spell the end for

Np.NaN

. Both representations have distinct roles in managing missing data, and their usage depends upon the precise need of the task at hand. For much of the numerical computation,

Np.NaN

remains the preferred choice due to its longer legacy and extensive application. At the same time, in cases with mixed-type data or where type casting is a concern,

Pd.NA

offers a newer, equally effective alternative.

In a practical context, consider this code snippet for exemplification:

import numpy as np

import pandas as pd

# Np.NaN in use

print(np.nan == np.nan) # output: False

arr = np.array[1,np.nan,3]

print(arr.dtype) # output: float64

# Pd.NA in use

s = pd.Series([1, pd.NA, 3])

print(s.dtype) # output: Int64

Python’s Pandas documentation also provides additional insights into handling missing data with

pandas

.

In summary,

Pd.NA

and

Np.NaN

are both instrumental in representing missing data – the former being more flexible concerning datatype retention, while the latter finds widespread use in numerical computations thanks to its longstanding heritage. Understanding when to use each correctly is key to successful data manipulation with Python.In Python, data can sometimes be non-existent or undefined. To deal with such situations, two well-known objects are used:

pd.NaT

(Pandas Not a Time) and

np.NaN

(NumPy Not a Number). Both of these allow us to handle missing or invalid data in different ways.

Firstly, we have

pd.NaT

. This Labeled Not a Time object is employed by Pandas, a popular library in Python used for data manipulation and analysis. It completes the need for a null value time indicator within the data structures available via Pandas, including Series, DataFrame, or Panel. This, however, doesn’t mean it cannot be used in other scenarios. On the contrary, we can utilize it anywhere where the data requires to display the lack of accurate timing data.

For example, if you want to signal that a particular date isn’t available or doesn’t exist, you can consider using

pd.NaT

.

import pandas as pd

s = pd.Series([pd.NaT, '2015-05-01', '2014-05-01 00:10:00'])

print(s)

However, do note that

pd.NaT

is a special type of floating point number under the hood which might cause unexpected behavior when being processed with numerical algorithms that don’t specifically account for it.

Next, we have

np.NaN

. Unlike ps.NaT that was designed for time representations, np.NaN, a special floating-point value, is designed to represent Not a Number and is provided by NumPy, another indispensable library in Python known for its mathematical abilities.

Here’s an instance where

np.NaN

is utilized:

import numpy as np

array_with_nan = np.array([1, 2, np.nan, 4, 5])

print(array_with_nan)

Critical pointers include: both

pd.NaT

and

np.NaN

can help manage voids in your dataset. While

pd.NaT

is limited to representing missing time data and operates subtly differently to a traditional NaN,

np.NaN

is capable of handling mathematical operations better, given its general nature for floating points.

Though managing both in practical code might sound complex at first glance, Python comes pre-equipped with functions for handling them effectively.

For example, if you want to remove these values from your DataFrame, you can use the

dropna()

function:

import pandas as pd

import numpy as np

df = pd.DataFrame({"A": [1, 2, np.nan], "B": [5, np.nan, 7], "C": [8, 9, 10]})

print(df)

print(df.dropna())

Unfortunately, elimination is not always the best option. Severe loss of information may occur, making the remaining values misleading or significantly biased. In such instances, you could fill them with representative values with the command

fillna()

like below:

import pandas as pd

import numpy as np

df = pd.DataFrame({"A": [1, 2, np.nan], "B": [5, np.nan, 7], "C": [8, 9, 10]})

print(df)

print(df.fillna(value=0)) # Replace all NaN elements with 0s.

Nonetheless, while tackling errors in Python involving

pd.NaT

and

np.NaN

, remember that each strategy has its unique advantages, and the context typically dictates usage. Referencing the official documentation of pandas and numpy for more details on managing missing data can also pave a smoother path during this journey!

Remember, careful error and exception management are crucial aspects of writing efficient, robust Python code, especially when dealing with external data sources that might throw unexpected wrenches into our virtual gears!Digging deep into the world of data analysis with Pandas library in Python will often lead you to handle missing or null values in your data. Pandas provide two options –

pd.NA

and

np.NaN

for managing these null values.

Let’s dive into some contrasts between pd.NA and np.NaN:

Let me provide a small snippet to depict the above difference:

import numpy as np import pandas as pd # Mathematical Operation print(np.NaN + 1) # Returns: nan print(pd.NA + 1) # Returns:# Logical Operation print(np.NaN == np.NaN) # Returns: False print(pd.NA == pd.NA) # Returns:

In simple terms, the choice between

pd.NA

and

np.NaN

depends highly on the context that you’re dealing with.

If you require compatibility with systems that expect the IEEE floating-point standard, or if your data is purely floating point where the conversion by

np.NaN

is not a concern, then

np.NaN

should be used.

Nonetheless, considering its universal application across different types, coupled with a more SQL-like behaviour for indicating missing values,

pd.NA

would be greatly efficient, providing a compelling alternative especially when dealing with integer, boolean or other non-float data.