| Error Code | Message | Description | Potential Solutions |
|---|---|---|---|
| 429 | You exceeded your current quota | The volume of requests made to the OpenAI API within a particular timeframe has outpaced the limits imposed by OpenAI. These restrictions could be daily, hourly, or even per minute, depending on the service agreement or plan. | • Reduce the frequency of API calls. • Consider upgrading your API usage plan if the existing one is too restrictive. • Implement an ‘exponential backoff’ strategy for retries, as recommended by the Google Cloud Platform guide on handling 429 errors. |

This error is common when working with OpenAI’s GPT-3.5. It means your application has sent more requests than the rate limit OpenAI sets for your account allows. These limits exist to prevent abuse of resources and to ensure fair use among all users of the service.

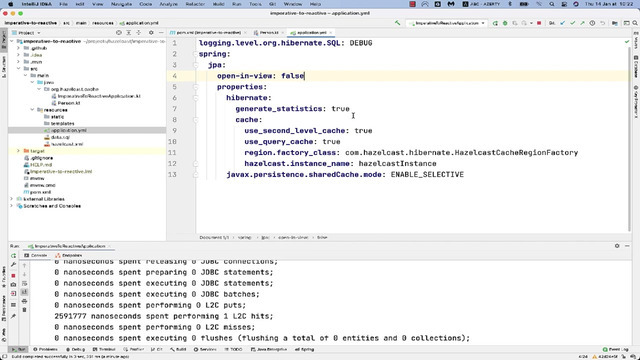

The most common fix is to reduce how often your program calls the API. You may need to revise your code to make fewer requests, and caching the results of data retrieval operations helps avoid repeating calls you have already made.

Another beneficial approach can be upgrading your API usage plan, provided OpenAI offers higher or adjustable rate limits. A higher subscription tier may afford more leeway in terms of the quantity of permissible API requests.

A third option is to apply an ‘exponential backoff’ strategy to retry attempts. More details on this technique, including code snippets, can be found in the Google Analytics Dev Guide on Error Responses. The strategy delays each successive retry exponentially longer instead of retrying immediately, which gives the service time to recover and reduces the chance of triggering further errors.

Implementing such solutions isn’t only beneficial in tackling the OpenAI ChatGPT (GPT-3.5) ‘API Error 429’; it is also useful for managing APIs in general. It makes your software more robust and lets it handle larger volumes of data without degrading the user experience.

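    // An example of using exponential backoff in JavaScript.
    // `api.call()` is a placeholder for your own promise-returning request.
    let delay = 1; // Start delay at one second
    const maxDelay = 64; // Stop retrying once the delay would exceed this

    function callApi() {
      api.call()
        .then(result => console.log(result))
        .catch(err => {
          if (delay > maxDelay) {
            console.log('Giving up.', err);
            return;
          }
          console.log('Something went wrong. Retrying...', err);
          setTimeout(callApi, delay * 1000);
          delay *= 2; // Double the delay for the next attempt
        });
    }

    callApi();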

Coping with API quota limits requires careful programming, patience, and sometimes strategic approaches to maximize the value you receive from the API within its quota limits. With the correct measures applied, you can ensure that your applications run smoothly while respecting the usage limits established by the provider.

If you’re interacting with OpenAI’s ChatGPT (GPT-3.5) API and unexpectedly encounter error 429, it means that you’ve exceeded your current quota.

Servers send status codes to tell clients how a request went. Here, the 429 status code signifies “Too Many Requests”. Defined in RFC 6585 as an additional HTTP status code, it indicates that a client has sent too many requests within a given timeframe. In OpenAI’s context, the error means you’re overstepping the capacity agreed upon for your account.

Checking the limits that apply to your account, both the quantity allowed and the timeframe it is measured over, can help resolve error 429. Different types of accounts may have different limits:

- Free trial users: They typically get lower quotas.

- Paid users: They generally benefit from higher quotas.

Refer to the OpenAI Pricing page (https://openai.com/pricing) to get detailed insights regarding these limitations.

The message accompanying the error provides more specific details about which limit you overstepped. OpenAI’s error guidance distinguishes a 429 for reaching a rate limit from a 429 for exceeding your quota, where the latter points to exhausted credits or a spending cap rather than request pace. The error could be due to exceeding rate limits or the token limit in a single API call. Two types of rate limits you need to focus on are:

- RPM (Requests Per Minute): The number of API calls you’re entitled to make per minute.

- TPD (Tokens Per Day): The total number of tokens you can use within a single day.

For example, with a rate limit of 60 RPM and 60,000 TPD, sending 70 requests in one minute or using 61,000 tokens in a day would trigger error 429.
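The arithmetic in this example can be written down as a small check. This is only a sketch: the `Limits` class and the `exceeded` function are hypothetical names introduced for illustration, and the numbers are the ones from the example above rather than real account limits.

```python
from dataclasses import dataclass


@dataclass
class Limits:
    rpm: int  # requests allowed per minute
    tpd: int  # tokens allowed per day


def exceeded(limits: Limits, requests_this_minute: int, tokens_today: int) -> list[str]:
    """Return the names of the limits that the given usage has gone past."""
    hit = []
    if requests_this_minute > limits.rpm:
        hit.append("RPM")
    if tokens_today > limits.tpd:
        hit.append("TPD")
    return hit


limits = Limits(rpm=60, tpd=60_000)
print(exceeded(limits, requests_this_minute=70, tokens_today=50_000))  # ['RPM']
print(exceeded(limits, requests_this_minute=40, tokens_today=61_000))  # ['TPD']
print(exceeded(limits, requests_this_minute=40, tokens_today=50_000))  # []
```

Running a check like this before sending a request lets an application hold back instead of finding out from a 429 response.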

Consider the following table to understand some general errors, causes and solutions:

| Error | Cause | Solution |
|---|---|---|
| Rate Limit Exceeded | Making too many API calls in a short duration | Implement cooling periods between requests |
| Tokens Limit Exceeded | Used up all allocated tokens in a day | Minimize the use of tokens or upgrade your plan |

Adjusting your usage pattern is the primary solution. You can distribute your requests more evenly throughout the application run time to avoid bombarding the server. In the case of high token consumption, consider using condensed text to allow more iterations within the same token budget.

In some cases, upgrading your subscription plan may be an effective solution if your usage needs genuinely exceed what your current plan offers. Visit the OpenAI pricing page to get more details about subscriptions.

For ready-made Python implementations, developers can refer to the OpenAI Cookbook’s guide on handling rate limits.

The OpenAI GPT-3.5 API error 429, “You exceeded your current quota,” is a common issue developers come across while using the API. The error usually appears when the number of requests you’re making surpasses the limit associated with your API key.

Let’s look at some potential reasons why you might be exceeding the quota in the GPT-3.5 API:

Excessive Requests

In most instances, sending many requests in a brief span triggers this error. Each API request counts towards your quota whether it succeeded or failed, so if you loop through numerous requests in quick succession, you may unknowingly exceed your assigned limit.

    import openai  # legacy (pre-1.0) SDK interface

    # `users` is assumed to be an iterable of objects with a `name` attribute
    for user in users:
        openai.ChatCompletion.create(
            model="gpt-3.5-turbo",
            messages=[{
                'role': 'user',
                'content': f'I want to find all posts about {user.name}.'
            }]
        )

This code snippet loops through multiple users and runs an API call for each user, which could use up the quota very quickly if there are many users.

Too Many Tokens

Another factor could be a high number of tokens per request. Although the amount of text generated may seem small, both input and output tokens count against your rate limits. Spaces and punctuation also contribute to the token count.

    openai.ChatCompletion.create(
        model="gpt-3.5-turbo",
        messages=[
            {"role": "system", "content": "This is a large block of text.........."}
        ]
    )

In many languages, even spaces or other hidden characters can count as tokens, leading to unexpectedly high token usage.

Calls Made Through Multiple Devices/Browsers

If the calls are being made from different devices or browsers simultaneously, they cumulatively count toward the API usage limit, which could result in its breach.

Frequent Refreshing Of The Application

If the methods that make API calls are misplaced (for example, in code that runs on every page refresh), each refresh of the application triggers unplanned API requests, which can exhaust the allocated quota.

Inefficient Error Handling Techniques

If the system isn’t configured to slow down or halt requests after receiving an error, repeated failing requests can drain your quota rapidly.
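One way to halt requests once errors keep recurring is to stop after a run of consecutive failures. The sketch below assumes each request is wrapped in a zero-argument callable; `TooManyFailures` and `run_with_circuit_breaker` are hypothetical names, and the threshold of three is arbitrary.

```python
class TooManyFailures(Exception):
    """Raised when the caller should stop sending requests for now."""


def run_with_circuit_breaker(tasks, max_consecutive_failures=3):
    """Run callables in order, stopping once too many fail in a row."""
    results = []
    failures = 0
    for task in tasks:
        try:
            results.append(task())
            failures = 0  # a success resets the streak
        except Exception:
            failures += 1
            if failures >= max_consecutive_failures:
                raise TooManyFailures(
                    f"stopped after {failures} consecutive failures"
                )
    return results
```

Stopping early means the remaining requests are never sent, so a persistent 429 does not keep consuming attempts.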

Overall, managing your quota effectively requires careful planning and an understanding of how your application interacts with the GPT-3.5 API. Ensure that you evenly distribute your requests, efficiently handle errors, and keep track of your token usage to avoid hitting quota limits. You can monitor your usage and adjust accordingly directly from the OpenAI platform.

Solutions to Overcome Quota Limitations in OpenAI’s GPT-3.5

Seeing an API Error 429: “You exceeded your current quota.” message from the OpenAI ChatGPT (GPT-3.5) API can initially be frustrating. This error typically means that you have surpassed the maximum number of requests allowed for a particular period. However, several solutions can address it:

1. Check Your Application’s Rate and Size Limits:

One common cause of the error is breaching the rate limit (the count of requests per minute), the size limit (the amount of data requested), or both.

Consider adjusting your request pattern to stay within these parameters. For example, instead of sending several short, SMS-like requests, you might send fewer but slightly longer messages.

    {
      "messages": [
        { "role": "system", "content": "You are a helpful assistant." },
        { "role": "user", "content": "Who won world series in 2020?" }
      ]
    }

In the above scenario, the system message and the user message travel together in a single request, which is more efficient than sending them as separate requests.

2. Upgrade Your Plan:

Often the simplest solution is to upgrade your plan, either by contacting OpenAI’s sales department or directly from your OpenAI account dashboard if that option is available. Depending on how extensively you use the OpenAI API, a higher-tier plan might prove more efficient and cost-effective in the long run.

3. Implement Exponential Backoff:

Another effective way to handle this error is to build exponential backoff into your application logic: retry failed requests after a short initial wait, then increase the time between subsequent retries.

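    // `callOpenAI` is a placeholder for your own async function that sends the request.
    // Assumes the thrown error exposes `status` and a plain `headers` object.
    async function callAPI(callOpenAI, maxRetries = 5) {
      for (let attempt = 0; ; attempt++) {
        try {
          return await callOpenAI();
        } catch (err) {
          // Only retry rate-limit errors, and give up after maxRetries attempts
          if (err.status !== 429 || attempt >= maxRetries) throw err;
          // Prefer the server's Retry-After hint (seconds); otherwise back off exponentially
          const hinted = Number(err.headers?.['retry-after']);
          const waitSeconds = hinted > 0 ? hinted : 2 ** attempt;
          await new Promise(resolve => setTimeout(resolve, waitSeconds * 1000));
        }
      }
    }

This function retries the call after a 429, waiting for the duration the server suggests when the error exposes one and otherwise doubling the wait on each attempt. Any other error, or a 429 that persists after the last retry, is rethrown to the caller.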
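The same idea can be sketched in Python. This version adds random jitter, a common refinement that this article does not itself prescribe. `RateLimited` is a hypothetical stand-in for whatever exception your client raises on a 429, and the `sleep` parameter exists only so the waits can be replaced in tests.

```python
import random
import time


class RateLimited(Exception):
    """Hypothetical stand-in for whatever 429 error your client raises."""


def retry_with_backoff(call, max_retries=5, base_delay=1.0, max_delay=60.0,
                       sleep=time.sleep):
    """Call `call()`, retrying on RateLimited with exponentially growing waits."""
    for attempt in range(max_retries + 1):
        try:
            return call()
        except RateLimited:
            if attempt == max_retries:
                raise  # out of retries: surface the error to the caller
            delay = min(max_delay, base_delay * 2 ** attempt)
            # Random jitter keeps many clients from retrying in lockstep.
            sleep(delay * random.uniform(0.5, 1.0))
```

With the defaults, the waits grow from roughly one second to at most sixty, and the error is re-raised once the retries are used up.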

4. Optimize Resource Usage:

Monitor your request usage with the quota metrics (average tokens, model used, and so on) available through OpenAI’s ‘usage’ API endpoint. Reduce the tokens used per chat request as much as possible while retaining the necessary information.

Implementing these solutions helps you use OpenAI’s resources effectively without hitting quota limits, so that you have access when you need it.

For more extensive details, you can check out the OpenAI API documentation.

With respect to the OpenAI ChatGPT (GPT-3.5) API, error 429 indicates that you have reached or surpassed the allocated quota limits. To avoid this pitfall, here are a few preemptive measures that can help:

1. Rate Limiting:

OpenAI enforces rate limits on its APIs, typically ranging from 20 to 60 requests per minute depending on the user’s tier. Check the limits shown for your own account, since they vary by model and change over time. Understanding and honoring these constraints is essential: structure your calls so that the rate doesn’t exceed the stipulated limit.

For example, if there’s a limit of 40 requests per minute:

`Delay between each request = 60 seconds/the rate limit = 60/40 = 1.5 secs.`

This implies that you should space your API calls by at least 1.5 seconds to avoid breaching the quota.
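The spacing calculation above translates directly into a small pacing helper. This is a minimal sketch: `min_interval` and `Pacer` are hypothetical names, and the clock and sleep functions are injectable only so the behaviour can be tested without real waiting.

```python
import time


def min_interval(requests_per_minute: int) -> float:
    """Seconds to leave between requests to stay at or under the limit."""
    return 60.0 / requests_per_minute


class Pacer:
    """Blocks just long enough to keep calls `interval` seconds apart."""

    def __init__(self, requests_per_minute, clock=time.monotonic, sleep=time.sleep):
        self.interval = min_interval(requests_per_minute)
        self._clock = clock
        self._sleep = sleep
        self._next_allowed = None

    def wait(self):
        now = self._clock()
        if self._next_allowed is not None and now < self._next_allowed:
            self._sleep(self._next_allowed - now)
            now = self._next_allowed
        self._next_allowed = now + self.interval


print(min_interval(40))  # 1.5
```

Calling `pacer.wait()` immediately before each API request keeps consecutive requests at least 1.5 seconds apart for a 40 RPM limit.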

2. Efficient Use of Tokens:

Every input and output in the GPT-3.5 API consumes tokens. The total number of tokens affects the cost, the time taken for the call, and whether the call works at all, since the prompt and the completion together must fit within the model’s context window (4,096 tokens for the original gpt-3.5-turbo). Reducing unnecessary text, abstracting particulars, or truncating long texts can help keep the token count under control.
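Truncating long text to a token budget might look like the sketch below. It relies on a rough rule of thumb of about four characters per token for English text, which is an assumption and not an exact count; a real tokenizer will give different numbers. Both function names are hypothetical.

```python
CHARS_PER_TOKEN = 4  # rough rule of thumb for English text, not an exact count


def estimate_tokens(text: str) -> int:
    """Very rough token estimate; a real tokenizer will give different numbers."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def truncate_to_budget(text: str, max_tokens: int) -> str:
    """Cut text so its estimated token count fits within max_tokens."""
    return text[: max_tokens * CHARS_PER_TOKEN]


prompt = "word " * 1000  # 5000 characters
short = truncate_to_budget(prompt, max_tokens=500)
print(estimate_tokens(prompt), estimate_tokens(short))  # 1250 500
```

Cutting at a character boundary can split a word or sentence, so in practice you may prefer to trim at the nearest paragraph or sentence break.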

3. Caching Responses:

For frequently made calls with identical responses, it’s worth introducing caching. A cache stores a successful response and returns it instead of making a repeated API call.
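A minimal in-memory version of such a cache could look like this. `ResponseCache` is a hypothetical name, and `fetch` stands for whatever function of yours performs the real API call; the sketch assumes the request parameters are JSON-serializable and that identical parameters should yield the same answer.

```python
import hashlib
import json


class ResponseCache:
    """In-memory cache keyed by a hash of the request parameters."""

    def __init__(self, fetch):
        self._fetch = fetch  # function that performs the real API call
        self._store = {}
        self.misses = 0

    def get(self, **params):
        key = hashlib.sha256(
            json.dumps(params, sort_keys=True).encode("utf-8")
        ).hexdigest()
        if key not in self._store:
            self.misses += 1
            self._store[key] = self._fetch(**params)
        return self._store[key]
```

Caching suits requests whose answers should not vary between calls. For prompts where fresh or varied output matters, reusing a stored response may not be what you want.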

4. API Monitoring:

Being aware of your API usage pattern, peak traffic periods, and typical request frequency can help you avoid overuse. System-level monitoring with automated alerts when you near the limit or when usage spikes dramatically keeps you ahead of problems and prevents surprises.
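An alert when usage nears the limit can be sketched as a small tracker. `UsageMonitor` is a hypothetical name, the 80% threshold is arbitrary, and `on_alert` would be swapped for your own notification hook.

```python
class UsageMonitor:
    """Tracks tokens used against a daily quota and flags when it runs low."""

    def __init__(self, daily_token_quota, alert_ratio=0.8, on_alert=print):
        self.quota = daily_token_quota
        self.alert_ratio = alert_ratio
        self.on_alert = on_alert
        self.used = 0
        self._alerted = False

    def record(self, total_tokens):
        self.used += total_tokens
        if not self._alerted and self.used >= self.alert_ratio * self.quota:
            self._alerted = True  # alert only once per quota period
            self.on_alert(f"{self.used}/{self.quota} tokens used")

    def reset(self):
        """Call at the start of each quota period."""
        self.used = 0
        self._alerted = False
```

Feeding `record` with the token count of each response keeps a running total that can be compared with your quota at any time.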

Although preemptive measures significantly reduce the risk of hitting API limits, there might still be instances where the error pops up. In those scenarios, OpenAI’s guide on handling errors comes in handy.

Remember, effective management of API calls not only aids in avoiding “Rate Limit Exceeded” errors but also promises overall better system performance, improved user experience and decreased costs.

For managing and optimizing your usage of the OpenAI ChatGPT (GPT-3.5) API, error code 429 is a crucial checkpoint. In general, this HTTP status code indicates that the user has made too many requests in a specific time frame (often called “rate limiting”). When it comes to the GPT-3.5 API, getting a 429 error signifies exceeding the operation quota set for your account.

Monitoring your GPT-3.5 API usage can provide significant benefits:

* Resource Management: You will gain insights into how much of your allocated quota you’re using, where, and when, allowing you to allocate resources effectively and avoid interruptions from repeatedly hitting quota limits.

* Avoid Excessive Costs: Every request sent to the API consumes some part of the quota, and exceeding the limit could potentially lead to additional charges. By monitoring closely, you can stay within budget.

* Optimizing Operations: Sometimes an excessively high number of requests or large text inputs might be more than you need for your application. By monitoring usage, you may identify opportunities to make the same operations more efficiently, either by reducing the frequency of requests or by making the input content smaller.

* Ensure Smooth Functioning: Too many quota hits can result in downtime for services relying on the API. Timely recognition through monitoring helps in risk prevention.

If you happen to encounter error 429 from OpenAI, the response will resemble the following (simplified):

    {
      "error": {
        "code": 429,
        "message": "You have exceeded your current quota."
      }
    }

The message is straightforward: you’ve exceeded your limit. The short-term fix is to spread out your requests over time so they do not exceed the allowed rate.

In the long run, regularly checking your usage against the quota is not just about avoiding error 429 but also about getting the most from the allotted quota. By understanding when your application generates the most API calls, you can better plan your requests. For instance, if certain times of day consistently produce higher demand, schedule non-essential calls for off-peak periods.
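Deferring non-essential calls to off-peak periods can be sketched as a simple split of pending jobs. The peak window and the `plan` function below are assumptions for illustration; the real window should come from your own usage data.

```python
PEAK_HOURS = range(9, 18)  # assumed peak window; derive yours from real usage data


def plan(jobs, hour, peak_hours=PEAK_HOURS):
    """Split (name, essential) jobs into those to run now and those to defer."""
    run_now, deferred = [], []
    for name, essential in jobs:
        if essential or hour not in peak_hours:
            run_now.append(name)
        else:
            deferred.append(name)
    return run_now, deferred


jobs = [("chat_reply", True), ("nightly_summary", False)]
print(plan(jobs, hour=10))  # (['chat_reply'], ['nightly_summary'])
print(plan(jobs, hour=2))   # (['chat_reply', 'nightly_summary'], [])
```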

To address the Error 429 specifically while using OpenAI, keep track of the ‘usage’ field returned in the API response. This field provides insight into your API utilization, detailing how many tokens were used in your recent processing actions.

Monitor usage with Python using this sample code:

    import openai  # legacy (pre-1.0) SDK interface

    openai.api_key = 'Your-API-Key'

    response = openai.Completion.create(
        engine="text-davinci-003",
        prompt="Translate the following French sentence into English: '{Le ballon est rouge}'",
        max_tokens=64,
    )

    # Tokens consumed by this request (prompt plus completion)
    print(response['usage']['total_tokens'])

In the OpenAI API documentation, there’s further clarification on how to handle such situations and suggestions for adjusting your interaction with the API to accommodate the restrictions.

Remember, monitoring your GPT-3.5 API usage will help improve efficiency, resource management, and cost-effectiveness while averting downtime. Plus, you may end up discovering new ways to better utilize the powerful capabilities of the GPT-3.5 model!

APIs play an instrumental role in the digital world, enabling applications to share data and functionality with each other easily. However, to ensure optimal performance and fair distribution of services, APIs often come with a quota or rate limit. In the context of OpenAI’s GPT-3.5 API, these limitations can sometimes lead to an Error 429, “You exceeded your current quota,” which can adversely affect your application’s performance.

Understanding Quota Limits & Error 429

API quota limits are bounds that regulate how frequently you can call an API within a specific timeframe, for instance a specified number of requests per minute. Whenever you exceed such limits, you encounter an HTTP 429 error, which signifies too many requests.

It’s essential to recognize this status code, as it denotes that the user has sent too many requests in a given amount of time (‘rate limiting’), resulting in degraded API performance.

The Impact of Quota Limits on API Performance

The API quota limits can have both beneficial and adverse impacts on API performance. The upsides include:

- Improved Quality of Service: By controlling the number of requests, servers are less likely to get overwhelmed, resulting in more reliable and responsive interactions for everyone.

- Fair Usage: Rate limiting ensures that all users get equitable access to the service, by inhibiting one user from monopolizing the server resources.

On the other hand, downsides come when these quotas are surpassed:

- Service Disruption: When you hit the API quota limit, additional requests won’t be fulfilled until the utilization period gets refreshed, causing disruptions in service.

- Reduced Throughput: Quotas can also limit the throughput—i.e., how much data can flow through—an API, potentially slowing down data-intensive operations.

Handling Error 429 with OpenAI Chat GPT (GPT-3.5) API

When you get a ‘Too Many Requests’ message from the OpenAI GPT-3.5 API, there are concrete steps you can take:

- Retry-After Header: The OpenAI API provides a `Retry-After` header in its response, suggesting how long you should wait before making another request.

- Throttling: You can implement a throttling mechanism on your end to control the rate of requests and thereby avoid hitting the quota limit.

For example, if you’re using Node.js, you could use middleware like ‘express-rate-limit’ to rate-limit the requests reaching your own Express server, which in turn caps how many calls it can forward to the API:

    const express = require("express");
    const rateLimit = require("express-rate-limit");

    const app = express();

    const limiter = rateLimit({
      windowMs: 15 * 60 * 1000, // 15 minutes
      max: 100, // limit each IP to 100 requests per windowMs
    });

    app.use(limiter);

Bear in mind that reducing the rate of requests will likely slow down the operation of your application. Yet, it’s a necessary trade-off to achieve better API functionality and comply with the API usage policy. Be sure to plan your usage accordingly, taking into account peak times of operation.
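Honoring the `Retry-After` header mentioned above means turning its value into a wait time. In standard HTTP the header carries either a number of seconds or an HTTP date, so the sketch below handles both; `retry_after_seconds` is a hypothetical helper, and the one-second default for a missing or unreadable header is an arbitrary choice.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime


def retry_after_seconds(header_value, now=None, default=1.0):
    """Convert a Retry-After header value into a non-negative wait in seconds."""
    if not header_value:
        return default
    value = header_value.strip()
    if value.isdigit():  # delay-seconds form, e.g. "120"
        return float(value)
    try:  # HTTP-date form
        when = parsedate_to_datetime(value)
    except (TypeError, ValueError):
        return default
    if when.tzinfo is None:
        when = when.replace(tzinfo=timezone.utc)
    now = now or datetime.now(timezone.utc)
    return max(0.0, (when - now).total_seconds())
```

The returned value can be passed straight to `time.sleep` before the next attempt.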

Overall, understanding quota limits is necessary to sustainably leverage the power of APIs. By gracefully handling ‘Too Many Requests’ errors, optimizing usage, and respecting the shared nature of API resources, developers can maximize their benefits while maintaining strong performance.

It seems you’ve run into Error 429, “You Exceeded Your Current Quota,” while using the OpenAI GPT-3.5 API. This often indicates that the allocated rate limit for your account has been surpassed within a specified period.

Understand the Message

This error message accompanies a standard HTTP status code. The status code ‘429’ stands for ‘Too Many Requests.’ In the context of APIs, it typically means that you have made too many requests in a given time period.

OpenAI enforces a certain number of API calls per minute (RPM) and tokens per minute (TPM) to manage server load and ensure fair usage. If the number of requests you’ve made exceeds this limit, the API will respond with the “You Exceeded Your Current Quota” message.
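To stay under both limits on the client side, one option is a sliding-window counter over the trailing 60 seconds. This is a sketch under assumptions: `SlidingWindowLimiter` is a hypothetical name, the token count per request is supplied by the caller, and the server's own accounting may differ from this local estimate.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Tracks requests and tokens over the trailing 60 seconds."""

    def __init__(self, rpm, tpm, clock=time.monotonic):
        self.rpm, self.tpm = rpm, tpm
        self._clock = clock
        self._events = deque()  # (timestamp, tokens) per accepted request
        self._tokens = 0

    def try_acquire(self, tokens):
        """Record the request and return True if it fits both limits."""
        now = self._clock()
        # Drop requests that have aged out of the 60-second window.
        while self._events and now - self._events[0][0] >= 60:
            _, old = self._events.popleft()
            self._tokens -= old
        if len(self._events) + 1 > self.rpm or self._tokens + tokens > self.tpm:
            return False
        self._events.append((now, tokens))
        self._tokens += tokens
        return True
```

When `try_acquire` returns False, the caller can wait briefly and try again rather than sending a request that is likely to be rejected.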

How to Handle this Error

There are several approaches to address this issue:

- Rate Limiting: Throttle your requests or implement some form of rate limiting on your client to reduce the RPM and TPM. One common method is an exponential backoff algorithm: when you hit the quota, increase the wait time exponentially before making another request.

- Quota Upgrade: If the current quota severely hampers your application’s functionality, you could reach out to OpenAI and request an increased quota, explaining the purpose and requirements clearly.

- Error Handling: Be sure to handle such errors properly in your code to avoid application crashes or unexpected behavior. Use try-catch blocks around your API calls to respond appropriately to different types of errors.

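Here is how you could handle such cases in Python using the `requests` library:

    import os
    import time

    import requests

    def call_api(payload, max_retries=3):
        headers = {
            'Content-Type': 'application/json',
            'Authorization': f"Bearer {os.environ['OPENAI_API_KEY']}",
        }
        for attempt in range(max_retries + 1):
            response = requests.post('https://api.openai.com/v1/chat/completions',
                                     headers=headers, json=payload)
            if response.status_code != 429:
                return response  # Process the response in the caller
            if attempt < max_retries:
                print("Rate limit exceeded. Wait and retry.")
                time.sleep(120)
        raise RuntimeError("Still rate limited after all retries")

In the code snippet above, we wait for two minutes and then reattempt the API call whenever we receive a 429 status code, giving up after a fixed number of retries. The API key is assumed to be available in the `OPENAI_API_KEY` environment variable.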

Acknowledge Rate Limits

Bear in mind that the rate limits differ with API usage – free trial users usually get lower rate limits compared to Pay-as-you-go users. Always remember to adhere to these limitations as mentioned in the OpenAI Pricing Details.

By keeping close track of your API request rate and having robust error handling mechanisms in place, you can mitigate most impacts of hitting the “You Exceeded Your Current Quota” error from the GPT-3.5 API.

When you encounter OpenAI ChatGPT (GPT-3.5) API Error 429: “You exceeded your current quota,” this error message signals that you’ve reached or surpassed the number of requests allotted to you under your current usage plan for the GPT-3.5 API. It’s important to remember:

- API Quota: Each OpenAI API usage plan comes with a distinct quota, which is essentially a cap on the amount of server resources available. The limit is set to ensure fair distribution among all users and prevent overloading the system.

- 429 Error: HTTP status code ‘429 Too Many Requests’ is returned when the user has sent too many requests in a given period.

- Rate Limiting: Resolving this issue will likely entail examining how you’re using the API and it might be necessary to adapt your rate of sending requests.

Sample representation of an HTTP 429 response:

    HTTP/1.1 429 Too Many Requests
    Content-Type: application/json
    Retry-After: 3600

    {
      "message": "You exceeded your current quota"
    }

Another solution to rectify this error would be to consider increasing your API quota. You can do this by signing up for a higher level plan. Before making this decision, understand that increasing your quota will entail further costs.

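Whichever plan you are on, it also helps to wait and retry when the limit is hit. For instance:

    # Example using Python (legacy pre-1.0 openai SDK)
    import time

    import openai

    openai.api_key = 'your-api-key'

    delay = 1  # seconds to wait before the first retry

    while True:
        try:
            # Your API call here
            break
        except openai.error.RateLimitError:
            time.sleep(delay)
            delay *= 2  # back off exponentially between attempts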

As well as handling API errors, it’s also crucial to apply best practices when dealing with APIs such as:

- Error Handling: Implementing robust error handling in your code helps prevent unexpected failures in production.

- Optimization: Try to reduce the overall volume by combining multiple operations into fewer API calls.
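Combining operations could be as simple as grouping items into one prompt per batch. The sketch below reuses the wording of the per-user prompt shown earlier; `batched`, `build_prompts`, and the batch size of 20 are assumptions for illustration, and whether a batched prompt gives answers of the same quality depends on the task.

```python
def batched(items, size):
    """Yield consecutive chunks of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def build_prompts(names, batch_size=20):
    """One prompt per batch instead of one prompt per name."""
    return [
        "Find all posts about each of these users:\n"
        + "\n".join(f"- {name}" for name in chunk)
        for chunk in batched(names, batch_size)
    ]


names = [f"user{i}" for i in range(45)]
print(len(build_prompts(names)))  # 3 requests instead of 45
```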

By understanding and applying these practices, you will not only work around these challenges but also build a more efficient interaction routine that enhances your use of OpenAI’s advanced language model, GPT-3.5.