Why Does `torch.cuda.is_available()` Return False Even After Installing PyTorch With CUDA?


| Issue | Potential reason | Solution |
|---|---|---|
| `torch.cuda.is_available()` returns `False` | GPU not supported | Make sure you have an NVIDIA GPU whose compute capability is supported by your PyTorch build. The minimum depends on the build, because recent releases have dropped the oldest architectures. |
| `torch.cuda.is_available()` returns `False` | CUDA was not correctly installed (for example, a CPU-only build was installed) | Reinstall PyTorch, making sure the correct CUDA variant is selected during install. |
| `torch.cuda.is_available()` returns `False` | Environment variables are misconfigured (for example, `CUDA_VISIBLE_DEVICES` is empty or invalid) | Unset `CUDA_VISIBLE_DEVICES` or set it to a valid device index, e.g. `export CUDA_VISIBLE_DEVICES=0`. |

Now let's dissect some reasons why `torch.cuda.is_available()` may return `False` even after installing PyTorch with CUDA.

`torch.cuda.is_available()` checks whether PyTorch can use a CUDA-capable GPU on the machine. Even with a CUDA build of PyTorch installed, it can still return `False`. The most common reasons are:

1. **Not having a CUDA-enabled GPU**: CUDA runs only on NVIDIA GPUs, so before you try to run PyTorch code on CUDA, confirm that your machine actually has one. CUDA support goes back to NVIDIA's GeForce 8 series from 2006, but each PyTorch release only ships kernels for a range of newer architectures, so an old card may support CUDA and still be unusable with your PyTorch build.

2. **Incorrect installation of CUDA**: CUDA support may not have been installed along with PyTorch, for example because the install command pulled a CPU-only build. In that case, reinstall PyTorch and select the CUDA variant that matches your system and your NVIDIA driver during installation.

3. **Incorrect environment variable setup**: The `CUDA_VISIBLE_DEVICES` variable controls which GPUs CUDA exposes to a process. You normally don't need to set it, but if it is set to an empty string or an invalid index (for example by a job scheduler or an old shell profile), PyTorch sees no GPU at all. Unset it, or set it to a valid device index with a one-line command such as `export CUDA_VISIBLE_DEVICES=0`.
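If you want to rule out this variable quickly, the check below flags the two common ways `CUDA_VISIBLE_DEVICES` hides every GPU: an empty value, or a list that starts with an invalid entry such as `-1` (CUDA only exposes the devices listed before the first invalid entry). It's a minimal sketch using only the standard library; `diagnose_visible_devices` is a hypothetical helper, and it does not verify that a given index actually exists on your machine.

```python
import os
from typing import Mapping, Optional


def diagnose_visible_devices(env: Mapping[str, str]) -> Optional[str]:
    """Return a warning if CUDA_VISIBLE_DEVICES hides every GPU, otherwise None."""
    value = env.get("CUDA_VISIBLE_DEVICES")
    if value is None:
        return None  # Unset: CUDA enumerates all GPUs.
    first = value.split(",")[0].strip()
    if first == "":
        return "CUDA_VISIBLE_DEVICES is set but empty, so no GPU is visible"
    if first.startswith("-"):
        # CUDA only exposes devices listed before the first invalid entry,
        # so a list that starts with a negative index hides everything.
        return f"CUDA_VISIBLE_DEVICES={value!r} starts with an invalid index"
    return None


if __name__ == "__main__":
    message = diagnose_visible_devices(os.environ)
    print(message or "CUDA_VISIBLE_DEVICES does not hide all GPUs")
```

Passing the environment in as a mapping, rather than reading `os.environ` inside the function, keeps the check easy to try with made-up values.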

Wherever the trouble lies, PyTorch relies on CUDA for all of its GPU work, so it's worth getting this part of the setup right to get the full benefit of PyTorch.


When we think about deep learning, it's fundamentally about using large amounts of compute to learn patterns from enormous datasets with neural networks. PyTorch, an open-source machine learning framework, makes this easier by simplifying how data is fed through these models and how their parameters are adjusted.

If you're wondering why `torch.cuda.is_available()` still returns False after installing PyTorch with CUDA, here are a few aspects to consider:

– PyTorch-CUDA Dependency:

Not every PyTorch build works with every CUDA setup. Each binary is built against a specific CUDA version; you can check the [PyTorch website](https://pytorch.org/) or its [GitHub Installation page](https://github.com/pytorch/pytorch#installation) to see which CUDA versions each release supports. For example, PyTorch 1.7.0 was published with builds for several CUDA versions, including 9.2, 10.1, 10.2 and 11.0. The prebuilt binaries bundle their own CUDA runtime, so what usually matters is whether your NVIDIA driver is recent enough for the CUDA version the build targets. If it isn't, `torch.cuda.is_available()` returns False.
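Pip wheels from the PyTorch download index usually encode the CUDA version in a local version suffix, such as `+cu121` for CUDA 12.1 or `+cpu` for a CPU-only build. The sketch below extracts that tag. `cuda_tag_from_version` is a hypothetical helper, and because some builds (for example, some conda packages) carry no suffix at all, a missing tag is inconclusive; that's why the script also prints `torch.version.cuda` for comparison.

```python
import re
from typing import Optional, Tuple


def cuda_tag_from_version(version: str) -> Optional[Tuple[int, int]]:
    """Extract (major, minor) from a version such as '2.1.0+cu121'.

    Returns None for '+cpu' builds and for versions without a CUDA tag.
    """
    match = re.search(r"\+cu(\d+)(\d)$", version)
    if match is None:
        return None
    # The last digit is the minor version: 'cu121' -> (12, 1), 'cu92' -> (9, 2).
    return int(match.group(1)), int(match.group(2))


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed in this interpreter")
    else:
        print("torch.__version__ :", torch.__version__)
        print("CUDA from tag     :", cuda_tag_from_version(str(torch.__version__)))
        print("torch.version.cuda:", torch.version.cuda)
```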

– GPU Compatibility:

Deep learning algorithms benefit greatly from parallel processing. NVIDIA's CUDA (Compute Unified Device Architecture) fits this need well, because it gives software direct access to the GPU's virtual instruction set and parallel compute elements. Your GPU must therefore support CUDA, and an incompatible GPU can also make `torch.cuda.is_available()` report False.
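To reason about GPU compatibility, you can compare your GPU's compute capability with the architectures a PyTorch build was compiled for. The sketch below encodes the usual CUDA compatibility rules as we understand them: binary code built for `sm_XY` runs on GPUs with the same major version X and a minor version of Y or higher, and PTX (`compute_XY`) can be JIT-compiled for any newer capability. `build_supports` and the example arch list are illustrative assumptions, not PyTorch APIs. On a working setup, `torch.cuda.get_arch_list()` returns the real list (it returns an empty list when CUDA is unavailable), and recent drivers can report the capability via `nvidia-smi --query-gpu=compute_cap --format=csv`.

```python
import re
from typing import Iterable, Tuple

# Matches entries such as 'sm_86' or 'compute_80'; the last digit is the minor version.
_ARCH = re.compile(r"(sm|compute)_(\d+)(\d)")


def build_supports(capability: Tuple[int, int], arch_list: Iterable[str]) -> bool:
    """Rough check: can a build compiled for `arch_list` run on `capability`?"""
    major, minor = capability
    for arch in arch_list:
        m = _ARCH.match(arch)
        if m is None:
            continue
        kind, a_major, a_minor = m.group(1), int(m.group(2)), int(m.group(3))
        # Binary code: same major version, GPU minor version >= the built one.
        if kind == "sm" and a_major == major and a_minor <= minor:
            return True
        # PTX: can be JIT-compiled for any equal or newer capability.
        if kind == "compute" and (a_major, a_minor) <= (major, minor):
            return True
    return False


if __name__ == "__main__":
    # Illustrative arch list only; real builds differ from release to release.
    example_arch_list = ["sm_50", "sm_60", "sm_70", "sm_75", "sm_80", "sm_86", "sm_90"]
    print(build_supports((8, 6), example_arch_list))  # True
    print(build_supports((3, 5), example_arch_list))  # False
```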

– Correct Installation:

Did your installation go well? Are your environment variables pointing to the right CUDA libraries? Remember that installing PyTorch never installs the NVIDIA driver, and depending on the command you used, you may have received a CPU-only build. An improper installation is a frequent cause of this issue.

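Here's a way to check for a locally installed CUDA Toolkit:

nvcc --version

This command prints the CUDA compiler version if the toolkit is installed. An error or "command not found" means the toolkit isn't installed or its `bin` directory isn't on your PATH. Note that the prebuilt pip and conda packages of PyTorch don't need `nvcc`, so its absence alone doesn't explain a False result; `nvidia-smi`, which ships with the NVIDIA driver, is the more relevant check.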
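A quick way to see which NVIDIA tools are reachable is to look them up on PATH. `nvidia-smi` ships with the driver, while `nvcc` only comes with the full CUDA Toolkit, so a missing `nvidia-smi` is the more telling result when debugging prebuilt PyTorch packages. This sketch uses the standard library's `shutil.which`; `find_cuda_tools` is a hypothetical helper that accepts a lookup function so it can be tried without touching the real PATH.

```python
import shutil
from typing import Callable, Dict, Optional


def find_cuda_tools(
    which: Callable[[str], Optional[str]] = shutil.which,
) -> Dict[str, Optional[str]]:
    """Locate nvidia-smi (installed with the driver) and nvcc (installed with the toolkit)."""
    return {tool: which(tool) for tool in ("nvidia-smi", "nvcc")}


if __name__ == "__main__":
    for tool, path in find_cuda_tools().items():
        print(f"{tool:10s} -> {path or 'not found on PATH'}")
```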

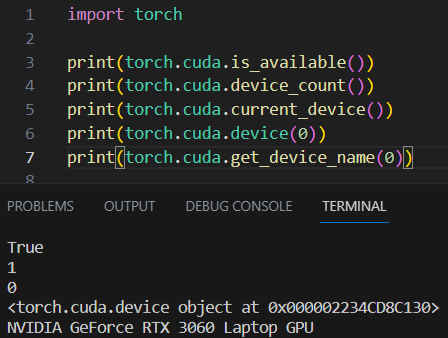

To check whether your PyTorch build was compiled with CUDA support, run:

import torch

print(torch.version.cuda)

If this prints None, you have a CPU-only build of PyTorch (assuming an NVIDIA setup), and it won't use the GPU no matter what else is installed.

Resolving these issues should make `torch.cuda.is_available()` return True, so you can make the most of your GPU in your PyTorch deep learning projects.

Getting to grips with PyTorch and CUDA can be tricky. A common issue is that `torch.cuda.is_available()` still returns False even after installing PyTorch with CUDA support. This may seem confusing, but it usually comes down to a few common causes.

Example: checking whether a CUDA device is available:

import torch

print(torch.cuda.is_available())

## What does `torch.cuda.is_available()` do?

This function returns `True` only if PyTorch can use your machine's GPU. In practice, that means this PyTorch build was compiled with CUDA support and the CUDA driver reports at least one usable device. Several pieces have to line up for that:

* PyTorch's backend is written in C++ and talks to the CUDA API directly, which requires the CUDA libraries and the NVIDIA driver.

* Your system needs at least one CUDA-capable GPU.

* The CUDA libraries PyTorch uses and the installed driver must be present and compatible with each other.
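Under the hood, `torch.cuda.is_available()` comes down to two facts you can inspect separately: whether the build was compiled with CUDA (`torch.version.cuda` is not None) and whether CUDA can see at least one device (`torch.cuda.device_count() > 0`). The sketch below turns them into a readable verdict. `explain_availability` is a hypothetical helper whose messages are rules of thumb rather than PyTorch's own diagnostics, and it assumes an NVIDIA build (ROCm builds report `torch.version.cuda` as None even when a GPU is usable).

```python
from typing import Optional


def explain_availability(build_cuda: Optional[str], device_count: int) -> str:
    """Summarize why torch.cuda.is_available() is likely True or False."""
    if build_cuda is None:
        return "CPU-only build: reinstall a CUDA-enabled PyTorch package"
    if device_count == 0:
        return (f"Built for CUDA {build_cuda}, but no usable GPU was found: "
                "check the driver, the GPU model and CUDA_VISIBLE_DEVICES")
    return f"Built for CUDA {build_cuda} with {device_count} visible GPU(s)"


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed in this interpreter")
    else:
        # device_count() returns 0 rather than raising on a CPU-only build.
        print(explain_availability(torch.version.cuda, torch.cuda.device_count()))
        print("torch.cuda.is_available():", torch.cuda.is_available())
```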

## Why `torch.cuda.is_available()` can return false

If `torch.cuda.is_available()` consistently returns `False` even though you know your machine has a working GPU and CUDA installed, the cause is likely one of the following:

### 1. Incompatible CUDA version for the installed PyTorch

Incompatibilities between the PyTorch version and the CUDA version can cause the method to return `False`. For optimal performance and compatibility:

* Use the most recent compatible versions of both PyTorch and CUDA. To see which CUDA versions each PyTorch release supports, refer to the PyTorch release notes.

Use the commands below to check your PyTorch version:

import torch

print(torch.__version__)

### 2. Incorrect Environment Variables

PyTorch may fail to find CUDA libraries if your environment variables are wrong. When you rely on a system-wide CUDA Toolkit, PATH should include the toolkit's `bin` directory and, on Linux, LD_LIBRARY_PATH should include its library directory (for example `lib64`).
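To see whether any CUDA directories actually made it into these variables, you can scan them from Python. This is a minimal sketch: `cuda_entries` is a hypothetical helper that simply looks for path entries containing "cuda", which matches common install locations such as /usr/local/cuda but may miss a toolkit installed somewhere unusual. It mainly matters when you rely on a system-wide toolkit, since pip and conda builds of PyTorch bring their own CUDA runtime.

```python
import os
from typing import List, Mapping


def cuda_entries(env: Mapping[str, str], var: str) -> List[str]:
    """Return the entries of a path-like variable that mention 'cuda'."""
    value = env.get(var, "")
    return [p for p in value.split(os.pathsep) if "cuda" in p.lower()]


if __name__ == "__main__":
    for var in ("PATH", "LD_LIBRARY_PATH"):
        entries = cuda_entries(os.environ, var)
        print(f"{var}: {entries or 'no CUDA directories'}")
```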

### 3. Issue with NVIDIA Drivers

Drivers allow your operating system and other software to communicate with your hardware. If your NVIDIA driver is outdated or not installed correctly, PyTorch can't use CUDA. You can download the latest driver for your GPU from NVIDIA's official download page; for installation instructions, refer to the CUDA Linux installation guide provided by NVIDIA.
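The banner printed by `nvidia-smi` includes both the driver version and a "CUDA Version" field, which is the newest CUDA version that driver supports. The sketch below parses both and compares the latter with the CUDA version your PyTorch build targets. `parse_smi_banner` and `driver_is_new_enough` are hypothetical helpers, and the comparison is deliberately conservative: CUDA's minor version compatibility can let a build run on a slightly older driver within the same major version.

```python
import re
import shutil
import subprocess
from typing import Optional, Tuple


def parse_smi_banner(text: str) -> Tuple[Optional[str], Optional[Tuple[int, int]]]:
    """Extract the driver version and the highest CUDA version it supports."""
    driver = re.search(r"Driver Version:\s*([\d.]+)", text)
    cuda = re.search(r"CUDA Version:\s*(\d+)\.(\d+)", text)
    return (
        driver.group(1) if driver else None,
        (int(cuda.group(1)), int(cuda.group(2))) if cuda else None,
    )


def driver_is_new_enough(driver_cuda: Tuple[int, int], build_cuda: Tuple[int, int]) -> bool:
    """Conservative rule: the driver's CUDA version should be >= the build's."""
    return driver_cuda >= build_cuda


if __name__ == "__main__":
    if shutil.which("nvidia-smi") is None:
        print("nvidia-smi not found: the NVIDIA driver is probably missing")
    else:
        result = subprocess.run(["nvidia-smi"], capture_output=True, text=True, timeout=30)
        print(parse_smi_banner(result.stdout))
```

For example, a build tagged `+cu121` corresponds to `(12, 1)`, which you would compare with the second value returned by `parse_smi_banner`.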

## Summary

To sum up, `torch.cuda.is_available()` tells you whether PyTorch can use your machine's CUDA GPU, so that work can be offloaded to it. It can return `False` because of incompatible PyTorch and CUDA versions, incorrectly set environment variables, or problems with the NVIDIA driver. Debugging means confirming that your software versions are compatible, your environment variables are set correctly, and your NVIDIA driver is compatible and properly installed.

Let's dive into the question: "Why does `torch.cuda.is_available()` return False even after installing PyTorch with CUDA?"

This situation is typically brought about by a handful of common issues. It’s essential to remember that the `torch.cuda.is_available()` function is meant to return True only if PyTorch detected your system’s GPU and its CUDA drivers correctly.

GPU Absence or Unsupported GPU

PyTorch uses CUDA, NVIDIA's parallel computing platform, to run operations on its tensors (multi-dimensional arrays) on the GPU. Unsurprisingly, CUDA requires an NVIDIA GPU to work, so if your system doesn't have one, `torch.cuda.is_available()` will return False. Furthermore, not all NVIDIA GPUs are supported: older GPUs might not support the CUDA version your PyTorch installation needs.

Incompatible Versions of CUDA and PyTorch

When you install a prebuilt PyTorch package with CUDA, the CUDA runtime and cuDNN libraries are bundled inside the package, so a different system-wide CUDA Toolkit usually isn't a problem by itself. What must be compatible is your NVIDIA driver: if it is too old for the CUDA version your PyTorch package was built with, `torch.cuda.is_available()` returns False. Since PyTorch is updated frequently, check this compatibility whenever you upgrade.

Here’s how you check for the CUDA version embedded with PyTorch:

import torch

print(torch.version.cuda)

Bypassed CUDA Installation During PyTorch Setup

You might’ve unintentionally skipped the inclusion of CUDA while setting up PyTorch. Depending upon the method you used for the process, it might not have installed CUDA by default. For example, in Anaconda you must specify the CUDA toolkit version when installing PyTorch:

conda install pytorch torchvision torchaudio cudatoolkit=10.2 -c pytorch

Absence of CUDA Drivers or Wrong Environment Path

Even though PyTorch comes bundled with the necessary CUDA (and cuDNN) libraries, you still need appropriate CUDA drivers installed on your machine. If they’re missing or incorrectly installed, `torch.cuda.is_available()` will return False.

Also, incorrectly set environment variables can keep PyTorch from finding CUDA libraries. In such cases, verifying and correcting the system's PATH and LD_LIBRARY_PATH can help. To inspect these variables, use the statements below (`os.environ.get` returns None instead of raising a KeyError when a variable such as LD_LIBRARY_PATH isn't set):

import os

print(os.environ.get('PATH'))

print(os.environ.get('LD_LIBRARY_PATH'))

Remember: settling any discrepancies in software versions, ensuring correct PATH variables and validating hardware drivers’ presence are prerequisites to making best use of PyTorch’s deep learning functionalities.

For more specific error debugging, always refer to PyTorch's official documentation.

When working with PyTorch, you may find that `torch.cuda.is_available()` returns False even after you've installed PyTorch with CUDA. This usually means CUDA support wasn't installed correctly or isn't accessible to PyTorch for some reason, and a range of problems can cause it.

Let’s start by taking a look at PyTorch installation. As you know, the preferred method of installing PyTorch is through the pip or conda command. To ensure PyTorch works with CUDA, you should use commands specific to your CUDA version. For instance, if you’re using CUDA 10.2, your PyTorch installation command would look like this:

conda install pytorch torchvision torchaudio cudatoolkit=10.2 -c pytorch

Check which CUDA versions your NVIDIA driver supports (the "CUDA Version" shown by `nvidia-smi` is the newest one) so you use the correct variant of this command.

Though it seems straightforward, quite a few things can go wrong during the installation and cause `torch.cuda.is_available()` to return False.

• One common problem is a mismatch between the CUDA versions of your system and PyTorch. You must install a variant of PyTorch compatible with your CUDA version.

• Even if the versions line up, the `cudatoolkit` package bundled with PyTorch still depends on your system's NVIDIA driver. If the driver is too old for that CUDA runtime, update the driver from the official NVIDIA website; installing a separate, standalone CUDA Toolkit won't change which runtime the prebuilt PyTorch binary uses.

• The PATH environment variable settings could affect CUDA’s interaction with PyTorch. The CUDA toolkit/binaries’ path needs to be included in the system’s PATH.

Now, let’s look at troubleshooting. Here are some steps you can take to try and solve the issue:

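– First and foremost, check which CUDA version your driver supports by running `nvidia-smi`. The "CUDA Version" it reports is the newest CUDA version the driver can handle, not necessarily an installed toolkit. Using this information, uninstall and then reinstall PyTorch, specifying a `cudatoolkit` version no newer than that.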

– Make sure all drivers are up-to-date. It’s essential to have the most recent driver version supporting your CUDA version installed.

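– Verify GPU availability. Try running the following command (the leading `!` is only needed inside a Jupyter notebook cell; omit it in a terminal):

!nvidia-smi

This command shows whether your GPU is being correctly recognized. If it lists no GPU or reports that it can't communicate with the NVIDIA driver, that signals an issue with your graphics driver.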

– Finally, if you use a system-wide CUDA Toolkit, add its `bin` directory to PATH and its library directory to LD_LIBRARY_PATH, which the dynamic linker searches. Add these lines to your .bashrc or equivalent:

export PATH=/usr/local/cuda-10.0/bin${PATH:+:${PATH}}

export LD_LIBRARY_PATH=/usr/local/cuda-10.0/lib64${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}

Remember to replace 10.0 with your CUDA version.
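The `${VAR:+:${VAR}}` expansion in these lines produces `:` followed by the old value only when the variable is already set and non-empty, so you never end up with a stray trailing colon (in PATH, an empty entry means the current directory). The small Python function below mirrors that behavior purely for illustration; `prepend_dir` is a hypothetical helper, not part of any library.

```python
import os


def prepend_dir(new_dir: str, current: str) -> str:
    """Python equivalent of new_dir${VAR:+:${VAR}}: append ':' + old value only if non-empty."""
    return new_dir + (":" + current if current else "")


if __name__ == "__main__":
    old_value = os.environ.get("LD_LIBRARY_PATH", "")
    print(prepend_dir("/usr/local/cuda-10.0/lib64", old_value))
```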

In essence, using CUDA from PyTorch depends on your system, the NVIDIA driver, and PyTorch working together. Pay close attention to version compatibility and keep all the moving parts in sync during installation and setup. Followed carefully, these measures resolve most cases of `torch.cuda.is_available()` returning False despite a PyTorch install with CUDA support.

You might have faced the issue where `torch.cuda.is_available()` returns `False` after installing PyTorch with CUDA. There can be several reasons for this, including incompatible CUDA versions. Let's break down which factors can interfere and the steps to resolve them.

Incompatible CUDA Versions: One of the leading causes is a PyTorch build that doesn't match the CUDA setup on your system. To check your PyTorch version, use:

import torch

print(torch.__version__)

And to check the version of a locally installed CUDA Toolkit, use:

nvcc --version

Compare these against the official PyTorch release notes. If they turn out to be incompatible, downgrade or upgrade your PyTorch installation to a build for the right CUDA version, for example:

pip install torch==1.x.xx+cu102 torchvision==0.x.xx+cu102 torchaudio==0.x.xx -f https://download.pytorch.org/whl/torch_stable.html

Remember to replace '1.x.xx' and '0.x.xx' with the correct PyTorch, torchvision and torchaudio versions.

Mismatch between Python and PyTorch bit versions: Official PyTorch binaries exist only for 64-bit Python, so a 32-bit interpreter can't install them at all, and a mismatched or unofficial build may leave `torch.cuda.is_available()` returning `False`. Always use a 64-bit Python, which also avoids the memory limits of 32-bit processes.
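You can confirm the interpreter's bitness from the standard library: `struct.calcsize("P")` is the size of a C pointer in bytes, so multiplying it by 8 gives 32 or 64. A minimal sketch; `python_bits` is a hypothetical helper name.

```python
import platform
import struct
import sys


def python_bits() -> int:
    """Pointer size of the running interpreter, in bits (32 or 64)."""
    return struct.calcsize("P") * 8


if __name__ == "__main__":
    print(f"Python {platform.python_version()} ({python_bits()}-bit) at {sys.executable}")
    if python_bits() != 64:
        print("Official PyTorch packages are 64-bit only; install a 64-bit Python.")
```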

CUDA Not Supported by Your GPU: Your GPU must support CUDA. Check your GPU's CUDA compute capability on NVIDIA's official website.

Incorrect Environment Path: An incorrect environment path may cause recognition problems. Add these lines to your ~/.bashrc file:

export PATH=/usr/local/cuda/bin:$PATH

export LD_LIBRARY_PATH=/usr/local/cuda/lib64:$LD_LIBRARY_PATH

After making changes, update your environment with `source ~/.bashrc`.

Make sure you understand each step before applying these changes; careless edits to files such as ~/.bashrc or to the driver installation can break your environment. Remember, troubleshooting setup problems takes patience and methodical checking.

When your PyTorch install with CUDA completes successfully but `torch.cuda.is_available()` still returns False, it can be incredibly frustrating. This issue typically arises from version or configuration conflicts between the software components involved in GPU computation. It's crucial to understand that `torch.cuda.is_available()` returning False simply means PyTorch couldn't initialize CUDA on a usable GPU, whether because of the build, the driver, or the hardware.

Why does this happen?

Various factors may come into play:

• Compatibility Issues: The installed version of PyTorch may not be compatible with the CUDA version installed on your machine.

• Incorrect CUDA Path: The CUDA toolkit may not be reachable or discoverable by PyTorch due to an incorrect PATH variable.

• GPU Driver Incompatibility: The graphics driver that CUDA relies on might be too old for the CUDA version your PyTorch build uses.

Solutions: Ensure Version Compatibility

Firstly, ensure the compatibility of your dependencies. Check if PyTorch and integrated CUDA versions are compatible. Refer to the official PyTorch Website for the recommended CUDA version.

If you find a mismatch, uninstall the PyTorch version and reinstall with the proper CUDA toolkit:

pip uninstall torch torchvision torchaudio

Following that, installation with specified CUDA version will look like this:

pip install torch==1.8.1+cu111 torchvision==0.9.1+cu111 torchaudio==0.8.1 -f https://download.pytorch.org/whl/torch_stable.html

This installs PyTorch 1.8.1 with CUDA 11.1.

Solution: Correct Your CUDA Path

You also have to ensure that your CUDA installation path is correctly set in your environment variables. If CUDA is not added to your PATH, add it using this command:

export PATH=/usr/local/cuda-10.1/bin${PATH:+:${PATH}}

Here /usr/local/cuda-10.1 is the typical default installation path of CUDA toolkit 10.1. Adjust this path as per your installation.

Solution: Update Your GPU Drivers

Make sure your GPU driver is up to date. Each CUDA Toolkit version requires a minimum driver version, listed in NVIDIA's CUDA Toolkit release notes. If your driver is outdated, download a suitable one from NVIDIA's site.

It's important to note that changes to CUDA versions or GPU drivers may require restarting your system. So if `torch.cuda.is_available()` continues to return False, try rebooting before panicking.

Remember to approach this problem patiently: these issues often come from a small misconfiguration or a version mismatch. Once you resolve these discrepancies, `torch.cuda.is_available()` should start returning True, allowing you to embark on your GPU-accelerated deep learning journey!

When diving into neural networks, machine learning, and deep learning algorithms, PyTorch is a fantastic tool, and CUDA's GPU acceleration greatly speeds up its computations. However, you may find that after installing PyTorch with CUDA, `torch.cuda.is_available()` returns false. Don't worry; I've got your back! Let's go through some strategies to get PyTorch working with CUDA and address this issue.

Ensuring Correct Installation:

Firstly, verify that your PyTorch installation includes CUDA support. If you installed a CPU-only build, for example the default pip package on Windows, CUDA support is missing. Check your PyTorch version using the following commands:

import torch

print(torch.__version__)

Cross-check the printed version with the builds listed on the official site; a version ending in +cpu is a CPU-only build. If CUDA support is missing, reinstall PyTorch and select a CUDA variant during installation.

Validate CUDA Toolkit:

One possible reason `torch.cuda.is_available()` returns false is a missing or misconfigured CUDA setup. Ensure your CUDA setup is compatible with your specific PyTorch release (the PyTorch release notes can help).

Install the necessary NVIDIA software. A suitable GPU driver is always required; the full CUDA Toolkit is only needed if you compile CUDA code yourself. To check the toolkit version in the terminal, run:

nvcc --version

Assess GPU Compatibility:

CUDA requires an NVIDIA GPU that supports it. So if `torch.cuda.is_available()` returns false, check whether your GPU appears on NVIDIA's list of CUDA-enabled GPUs. Consider upgrading your hardware if it is not compatible.

Vital System Variables:

Missing system variables could be causing trouble too. Environment variables, including PATH and LD_LIBRARY_PATH, should include the essential CUDA paths.

Make the following additions. For PATH:

export PATH=/usr/local/cuda/bin:$PATH

For LD_LIBRARY_PATH on a bash shell:

export LD_LIBRARY_PATH=/usr/local/cuda/lib64:$LD_LIBRARY_PATH

A correct CUDA path is necessary: /usr/local/cuda is a typical one, but verify it against your specific installation.

Conflict Between PyTorch and Pre-installed CUDA:

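If you already had CUDA on your device before installing PyTorch, you might suspect a conflict between the two CUDA versions. The prebuilt PyTorch binaries use their own bundled CUDA runtime, so a pre-existing toolkit rarely conflicts directly; more likely culprits are an outdated driver or LD_LIBRARY_PATH pointing at incompatible libraries. Don't install a CPU-only PyTorch build hoping it will use the existing CUDA: it can't. To compile PyTorch against your own toolkit, you would have to build it from source.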

Now armed with these strategies, your issue should be fixed. Hopefully `torch.cuda.is_available()` will return true, confirming that PyTorch can use CUDA and taking you one step closer to implementing your deep learning models. Happy learning!

Alright, so you might be experiencing a common issue where `torch.cuda.is_available()` returns false even though PyTorch has been installed with CUDA support. Let's look at some specific aspects of this situation, which should help you understand what exactly is going on under the hood.

Firstly, let's discuss why this might occur. With any software installation, mismatched dependencies can cause malfunctions. The same holds for PyTorch and your CUDA installation, which must be closely aligned to work properly.

When `torch.cuda.is_available()` returns false, it essentially means your PyTorch installation can't use CUDA. Taking apart what could cause this, I've outlined three primary reasons:

- Incorrect CUDA installation

If CUDA support hasn't been installed properly, or the CUDA version PyTorch was built for doesn't match your setup, `torch.cuda.is_available()` will likely return false.

- Incompatible GPU drivers

Perhaps your GPU driver version isn't compatible with the CUDA version you're using. This could prevent PyTorch from detecting CUDA.

- Hardware limitations

Your machine's hardware may not meet the requirements for running CUDA applications. If your GPU doesn't support CUDA, PyTorch won't be able to use it.

Consider these steps if you’re facing the above issue:

Step One: Verify your CUDA installation using the command

nvcc --version

Step Two: Check your GPU drivers compatibility with your CUDA version. It’s crucial to ensure compatibility lest you encounter trouble during execution. You can verify this by referring to NVIDIA’s official documentation: CUDA Toolkit Release Notes by NVIDIA.

Step Three: Ensure your GPU supports CUDA computation. You might have to upgrade your hardware if it doesn’t meet the minimum requirements set by NVIDIA.

If you've followed all the steps carefully and the problem persists, I suggest uninstalling both PyTorch and CUDA and then reinstalling them. Make sure to install versions of PyTorch and CUDA known to work well together. For example, PyTorch's official page lists PyTorch 1.8.1 builds for CUDA 11.1.

It's interesting to note how intertwined PyTorch and CUDA are. Their collaboration enables efficient computation, but getting there can take some debugging, especially when `torch.cuda.is_available()` frustratingly returns false despite PyTorch being installed with CUDA. By addressing possible issues such as incorrect installation, incompatible drivers, or hardware limitations, you can get past this challenge and make full use of the computational power that PyTorch and CUDA offer.