The `next()` and `iter()` functions behave with PyTorch’s DataLoader just as their namesakes do elsewhere in Python; in fact, they are the same built-ins. This article looks only at how they apply to a DataLoader object.

| Function | Description |
|---|---|
| `iter(DataLoader_object)` | Returns an iterator for the given DataLoader object, allowing you to manually iterate over batches in the dataset. |
| `next(iterator_object)` | Returns the next item from the DataLoader iterator. If the end of the iterator is reached, it raises the `StopIteration` exception. |

First, `iter()` comes into play when we want an iterator from a DataLoader object. We pass the DataLoader as its argument, and the call starts a pass over the batched data from your dataset. The returned iterator remembers its position between calls, so we obtain successive batches until every data point has been visited.

`next()`, on the other hand, lets us control the iteration manually by returning the next element available from the iterator. In other words, each call to `next(iterator)` requests the subsequent batch from our existing iterator. If no items are left, a `StopIteration` exception is raised.

Combining these functions gives a manual but precise way of going over your DataLoader without a loop construct. You iterate over the dataset one batch at a time and decide yourself when to advance to the next batch, which can give you more granular control during processing or model fitting.

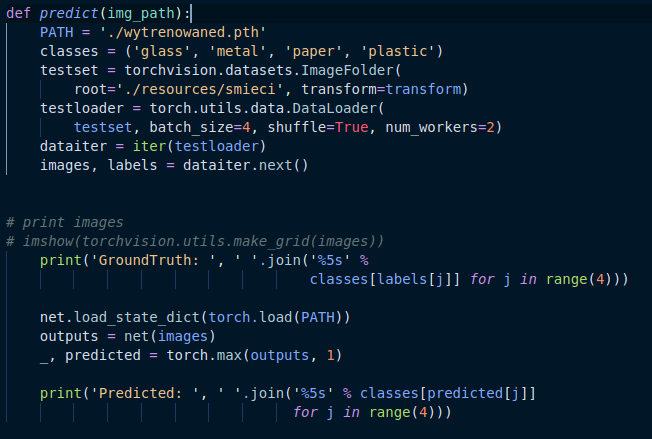

Here’s a small code fragment using a hypothetical DataLoader object:

from torch.utils.data import DataLoader

data_loader = DataLoader(dataset, batch_size=4)

data_iter = iter(data_loader)

images, labels = next(data_iter)

In this example, `iter(data_loader)` creates an iterator, while `next(data_iter)` grabs the first batch of images and labels from the dataset. Getting to grips with these two calls lets you use the full flexibility of PyTorch’s DataLoader class when building customizable, efficient pipelines for deep learning models [PyTorch Official Documentation].

If you have worked with PyTorch’s DataLoader, you have almost certainly come across `next()` and `iter()`. These two functions, simple as they look, sit at the heart of data handling in PyTorch. Understanding how they work and relate to each other in the context of the DataLoader is key to managing datasets in machine learning projects.

The role of PyTorch’s DataLoader cannot be overemphasized. It offers a practical mechanism for loading datasets and feeding them into your model. An appreciation of its purpose and structure isn’t complete without looking at `next()` and `iter()`, the two built-in functions used to step through it.

`iter()`: This built-in asks an iterable (a list, string, and so on) for an iterator. An iterator is an object that yields a sequence of values one at a time and remembers where it is. With PyTorch’s DataLoader, `iter()` is what starts a pass over the dataset’s batches.

dataset = range(10)

iterator = iter(dataset)

`next()`: Working in tandem with `iter()`, `next()` fetches the next item from the iterator it is given (it must be an iterator, not a bare iterable). Called repeatedly on the iterator of a train loader, it steps through the batches until none remain, at which point it raises `StopIteration`; a `for` loop catches that exception to end the loop.

element1 = next(iterator)

Now you can see how the two functions interact. `iter()` sets up the iterator, and `next()` advances it one item at a time (one batch at a time for a DataLoader), feeding your model with data.

What happens when used with Pytorch’s Dataloader? Let’s imagine a scenario:

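from torch.utils.data import DataLoader

# train_dataset is assumed to be defined elsewhere.

train_loader = DataLoader(train_dataset, batch_size=64, shuffle=True)

dataiter = iter(train_loader)

# dataiter.next() is no longer available in recent PyTorch releases; use the built-in next().

images, labels = next(dataiter)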

Above, `train_loader` is a DataLoader over your training dataset that yields shuffled batches of 64 images. Calling `iter()` on it creates an iterator, setting the stage for traversing `train_loader`.

When `next(dataiter)` is called, it pulls a batch of images and their corresponding labels from that iterator. (Older tutorials write `dataiter.next()`; that method no longer exists in recent PyTorch releases.) For any dataset processing need in PyTorch, `iter()` does the setup while `next()` carries the baton, advancing through your dataset one batch at a time until it reaches the finish line.
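To make the scenario above concrete, here is a minimal runnable sketch. The `toy_dataset` built from `TensorDataset` is a hypothetical stand-in for `train_dataset`, and shuffling is turned off so the batches are predictable.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical stand-in for train_dataset: 8 tiny "images" with integer labels.
features = torch.arange(8 * 4, dtype=torch.float32).reshape(8, 4)
labels = torch.arange(8)
toy_dataset = TensorDataset(features, labels)

loader = DataLoader(toy_dataset, batch_size=4, shuffle=False)
batch_iter = iter(loader)

first_x, first_y = next(batch_iter)    # samples 0-3
second_x, second_y = next(batch_iter)  # samples 4-7
print(first_x.shape, first_y.tolist(), second_y.tolist())
```

Each call to `next()` returns a tuple with one tensor per field of the dataset, here a batch of features and the matching batch of labels.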

Certainly, having this knowledge under your belt would undeniably make your experience using Pytorch’s Dataloader a lot more exciting and seamless. You now know just what happens behind the scenes as iter() and next() go about ensuring that nothing stands between your model and the datasets that fuel it.

The `next()` and `iter()` functions play a crucial role in traversing and loading the dataset with PyTorch’s `DataLoader` during model training.

In Python, an iterator is an object that produces a sequence of values one at a time, so you can traverse all of them. The built-in `iter()` function creates an iterator from an iterable, and a DataLoader is such an iterable. Calling it sets things up so that we can go through the loader’s batches one by one.

Example of Using Iter()

Let’s take a look at how we can implement this with a simple example:

from torch.utils.data import DataLoader

# Define a trivial dataset: the integers 0 to 99.

data = list(range(100))

loader = DataLoader(data, batch_size=10)

# Create an iterator object.

iter_obj = iter(loader)

Calling `iter(loader)` produces an object that retrieves batches from `loader` when used with `next()`.

`next()`, in turn, is a standard Python built-in that retrieves the next item from an iterator. In the DataLoader context, it fetches the next batch of data from your loader’s iterator.

Example of Using Next()

Continuing from our previous example:

# Obtain the next batch of data with `next()`.

next_batch = next(iter_obj)

Here, `next(iter_obj)` returns the first batch of 10 integers (since we set the batch size to 10 in the DataLoader). Calling `next()` again gives us the next 10 integers, and so on, until the entire dataset has been traversed. When no batches are left, the iterator raises a `StopIteration` exception, which in most deep learning programs marks the end of an epoch.
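The exhaustion behaviour described above can be sketched as follows. The dataset of 25 integers is an assumption chosen so that the last batch is smaller than the others; the loop is roughly what a `for` loop does behind the scenes.

```python
from torch.utils.data import DataLoader

data = list(range(25))              # 25 items, so the last batch is partial
loader = DataLoader(data, batch_size=10)

it = iter(loader)
batch_sizes = []
while True:
    try:
        batch = next(it)
    except StopIteration:
        break                       # the iterator is exhausted: one pass is done
    batch_sizes.append(len(batch))

print(batch_sizes)                  # [10, 10, 5]
```

Passing `drop_last=True` to the DataLoader would skip the final partial batch instead.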

One advantage of fetching batches through a DataLoader is that loading can run in parallel worker processes when `num_workers` is greater than 0 (the default of 0 loads in the main process). For large datasets or expensive preprocessing, this parallelism can noticeably shorten the time `next()` spends waiting for a batch, which directly affects how fast a neural network can be trained.

| Function | Description |
|---|---|
| `iter()` | Returns an iterator for an iterable (like PyTorch’s DataLoader). |
| `next()` | Returns the next item from an iterator. |

For further exploration, you can consult the official PyTorch documentation, which covers the functionality and options of the DataLoader utility in depth.

Let’s take a closer look at `iter()` and `next()` as used with PyTorch’s DataLoader class. Given the complexity of machine learning architectures, it is understandable that practices like batch processing need additional explanation.

First up, PyTorch developers use the `DataLoader` class extensively. It manages datasets: loading, manipulating, batching, and iterating over data systematically.

So, how does `iter()` work? `iter()` is a Python built-in function that returns an iterator for an iterable object. In our context, the iterable object is the `DataLoader`. And what is an iterable? Any Python object capable of returning its elements one at a time, which allows it to be iterated over in a loop.

In machine learning, we usually feed the model batches of images or samples instead of single data points. That’s where PyTorch’s DataLoader comes in: it provides seamless iteration through batches created from the dataset. When `iter()` is called on a DataLoader instance, it returns a private iterator object (named `_DataLoaderIter` in old releases; recent releases use subclasses of `_BaseDataLoaderIter`, and the exact class is an implementation detail). This object keeps track of the current position and produces a batch each time `next()` is called.
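The difference between the DataLoader (an iterable) and the object returned by `iter()` (an iterator) can be checked with the abstract base classes in `collections.abc`. This is only a sketch; the printed class name is a private detail that may differ between PyTorch versions.

```python
from collections.abc import Iterable, Iterator
from torch.utils.data import DataLoader

loader = DataLoader(list(range(6)), batch_size=2)
it = iter(loader)

loader_is_iterable = isinstance(loader, Iterable)   # has __iter__
loader_is_iterator = isinstance(loader, Iterator)   # lacks __next__
it_is_iterator = isinstance(it, Iterator)           # has both

print(loader_is_iterable, loader_is_iterator, it_is_iterator)
print(type(it).__name__)  # private class name, not part of the public API
```

This is why `next(loader)` fails with a `TypeError` while `next(iter(loader))` works.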

Now for `next()`. `next()` is another Python built-in. It retrieves the next item from an iterator, so every time you call it on a DataLoader iterator, it fetches the next batch of data.

Here’s a quick illustration:

import torch

dataloader = torch.utils.data.DataLoader(dataset)  # dataset is assumed to exist

data_iter = iter(dataloader)  # Get the iterator

images, labels = next(data_iter)  # Fetch the next batch

In this scenario, `images` and `labels` contain one batch of data (sized by the `batch_size` argument, which defaults to 1 when it is omitted as above). After `next()` returns, the iterator has advanced, so another call to `next()` loads the subsequent batch.

You can step through all your batches by calling `next()` repeatedly until a `StopIteration` exception is raised, indicating that no more batches are left in this pass over the DataLoader.

The following table maps the built-in Python functions to their role with PyTorch’s DataLoader:

| Python Function | Role in PyTorch’s DataLoader |
|---|---|
| `iter()` | Returns an iterator over the DataLoader object |
| `next()` | Returns the next batch of data from the DataLoader iterator |

The PyTorch documentation covers this concept in more detail. Understanding how such built-in functions behave with classes like DataLoader supports the efficient data handling that machine learning workflows depend on.

Before diving into the details of `next()` and `iter()` in the context of PyTorch’s DataLoader, it helps to understand what they mean in ordinary Python. `next()` is a built-in Python function that retrieves the next item from an iterator. `iter()`, on the other hand, returns an iterator for an iterable. Together, they let you traverse data structures step by step.

In PyTorch’s DataLoader(), which is a major component of the comprehensive PyTorch library used for machine learning and deep learning, both of these functions take on special roles. PyTorch’s DataLoader() is essentially responsible for feeding in the data batches (in appropriate sizes as per the model demands) during the model training process.

Here’s how `next()` and `iter()` play out:

# Assume 'dataloader' is an instance of DataLoader

data_iterator = iter(dataloader)

data_batch = next(data_iterator)

`iter(dataloader)` creates an object (called `data_iterator` here) that iterates over our dataloader. Each call to `next(data_iterator)` then returns one batch of data. Once all data is exhausted, `next()` raises `StopIteration`; it does not handle the exception for you. A `for` loop over the dataloader catches it automatically, whereas manual `next()` calls need a `try`/`except` or a default value.

Here are some key points to note about how they work with DataLoader:

– They give iterator-style data loading rather than indexing-style: the DataLoader follows Python’s iterator protocol, so batches are requested one after another through these functions.

– Each `next()` call yields a single batch, so batches are produced on demand rather than all at once. This is critical for large datasets, where loading everything simultaneously might not be feasible due to memory limitations. (With worker processes, a few batches are prefetched ahead of time.)

– The same protocol also lets a DataLoader wrap an `IterableDataset`, including streams with no fixed end that keep generating data; `next()` simply returns batches for as long as the stream yields samples.
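The last point, open-ended datasets, can be sketched with `IterableDataset`. `CountingStream` below is a hypothetical dataset invented for this illustration; `itertools.islice` takes only the first two batches so the example terminates.

```python
import itertools
from torch.utils.data import DataLoader, IterableDataset

class CountingStream(IterableDataset):
    """Hypothetical endless dataset that yields 0, 1, 2, ... forever."""

    def __iter__(self):
        return itertools.count()

loader = DataLoader(CountingStream(), batch_size=3)

# Never loop over this loader without a stopping condition: it has no end.
first_two = [batch.tolist() for batch in itertools.islice(loader, 2)]
print(first_two)  # [[0, 1, 2], [3, 4, 5]]
```

Because the stream never raises `StopIteration`, it is up to the caller to decide when to stop requesting batches.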

Acknowledging the intertwined relationship between next() and iter(), especially in the PyTorch’s DataLoader instance is vital for creating robust and efficient Machine Learning models. They lie at the heart of data iteration and batch loading. Aspiring Data Scientists and ML engineers should unravel their underlying mechanics to improve data handling during model training, ultimately leading to more reliable ML deployments.

| Function | Description |
|---|---|
| `next()` | Fetches the next item from an iterator. |
| `iter()` | Returns an iterator for an iterable. |

Reference Link: [PyTorch Documentation](https://pytorch.org/docs/stable/data.html)

Note: The reference is the official PyTorch documentation, which covers DataLoader in detail; `iter()` and `next()` themselves are documented in the Python documentation for built-in functions.

When working with PyTorch, `DataLoader` plays a key role. When `num_workers` is greater than 0, it loads data in parallel using multiple worker processes (not threads), which can significantly speed up loading. Two functions to be aware of when dealing with the dataloader are `next()` and `iter()`. This section offers some insight into them and the benefits of using them.

| Methods | Usage |
|---|---|
| `next()` | Fetches the next item from a DataLoader’s iterator. |
| `iter()` | Returns an iterator over the DataLoader that yields its batches one at a time. |

Let’s delve deeper into these methods:

– `next()`

The `next()` function retrieves the next item in line from an iterator created from the dataloader.

Example:

dataloader = DataLoader(dataset, batch_size=3, shuffle=True)

iterator = iter(dataloader)

data_batch_1 = next(iterator)

If you iterate over your dataloader with a for-loop, you might not find much use for `next()`. However, there are times when manual control over the order of iteration matters: suppose you have separate dataloaders for training and validation sets and you want to interleave their usage.

The benefit? `next()` gives you finer control over iteration through your dataset or loader.
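The interleaving idea mentioned above might look like the following sketch. The two loaders, their contents, and the "validate every second step" schedule are all assumptions made for illustration.

```python
from torch.utils.data import DataLoader

train_loader = DataLoader(list(range(0, 8)), batch_size=2)    # 4 batches
val_loader = DataLoader(list(range(100, 104)), batch_size=2)  # 2 batches

val_iter = iter(val_loader)
schedule = []
for step, train_batch in enumerate(train_loader, start=1):
    schedule.append(("train", train_batch.tolist()))
    if step % 2 == 0:                     # every second training step
        val_batch = next(val_iter, None)  # None once validation is exhausted
        if val_batch is not None:
            schedule.append(("val", val_batch.tolist()))

print([kind for kind, _ in schedule])
```

The `for` loop drives the training loader, while `next()` with a default value pulls validation batches only when they are wanted.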

– `iter()`

Simply put, the `iter()` function returns an iterator over the dataloader object. In essence, it lays the groundwork needed before you can utilize `next()`.

Example:

dataloader = DataLoader(dataset, batch_size=3, shuffle=True)

iterator = iter(dataloader)

# now `iterator` can be used with `next()`

One useful property of `iter()` is that each call returns a fresh, single-pass iterator: it walks over the dataset exactly once and is then exhausted. This suits situations where you want to process your data just once, such as an evaluation pass. To go through the data again (for another epoch), call `iter()` on the dataloader again.
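A short sketch of how iterators relate to passes over the data: the DataLoader itself can be iterated any number of times, while each individual iterator is good for a single pass. The four-element dataset is an assumption for illustration.

```python
from torch.utils.data import DataLoader

loader = DataLoader(list(range(4)), batch_size=2)

first_pass = [b.tolist() for b in iter(loader)]
second_pass = [b.tolist() for b in iter(loader)]  # a new iterator starts over

spent = iter(loader)
list(spent)                      # consume this iterator completely
leftover = next(spent, "empty")  # the same iterator has nothing more to give

print(first_pass, second_pass, leftover)
```

So "process the data once" means using one iterator; a new epoch simply means asking the loader for a new one.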

Both `iter()` and `next()` are essential parts of Python’s iterator protocol, a core part of how Python handles sequences and containers of objects. In the context of PyTorch’s DataLoader, they provide greater control over data iteration, making your data pipelines more flexible.

Understanding how the `next()` and `iter()` functions work with PyTorch’s DataLoader is integral to using them efficiently. An efficient data loading pipeline can significantly increase model training speed, so it pays to know what these functions do.

PyTorch’s DataLoader provides an iterable over a dataset with support for automatic batching and shuffling, among other things (transformations are usually applied by the dataset itself). Iterating over it relies on two key Python functions: `next()` and `iter()`.

`iter()` function:

`iter()`, a built-in Python function, returns an iterator for an iterable object. Calling `iter()` on a dataloader instance creates an iterator object (a dedicated iterator class rather than a Python generator), which lets you go over batches from the dataset one by one.

dataiter = iter(trainloader)

`next()` function:

The `next()` built-in retrieves the next item from the iterator returned by `iter()`. Calling `next()` on an iterator created from a dataloader therefore gives you the next batch of data from your dataset.

images, labels = next(dataiter)

To maximize their efficiency, consider the following points:

Preloading data: You should preload as much data as possible. It helps reduce the I/O or network overhead while fetching data during training. One way to do that is through the num_workers parameter in the DataLoader’s constructor, which specifies the number of sub-processes to use for data loading.

trainloader = torch.utils.data.DataLoader(trainset, batch_size=4, shuffle=True, num_workers=2)

In this example, the DataLoader will spawn 2 additional Python worker processes that will load the data in parallel.

Avoid degrading performance due to random access: When dealing with a disk-based dataset and not doing many transformations, organizing your data in a way that reduces the need for random access will improve performance.

Collate function: A function that decides how the data from the dataset will be agglomerated into a batch. Default collate should be fine for most uses, but sometimes you might have custom needs or optimizations you want to make.

Batch-size tuning: Choose an optimal batch size that balances between memory consumption and computational efficiency.
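As an illustration of the collate-function tip above, here is a minimal sketch of a custom `collate_fn` that pads variable-length sequences. The helper `pad_collate` and the sample data are hypothetical; the default collate function would fail on these samples because their lengths differ.

```python
import torch
from torch.utils.data import DataLoader

def pad_collate(samples):
    """Pad variable-length 1-D tensors with zeros to the longest in the batch."""
    longest = max(len(s) for s in samples)
    padded = torch.zeros(len(samples), longest, dtype=samples[0].dtype)
    for row, s in enumerate(samples):
        padded[row, : len(s)] = s
    return padded

sequences = [torch.tensor([1, 2, 3]), torch.tensor([4]), torch.tensor([5, 6])]
loader = DataLoader(sequences, batch_size=3, collate_fn=pad_collate)

batch = next(iter(loader))
print(batch.tolist())  # [[1, 2, 3], [4, 0, 0], [5, 6, 0]]
```

Whatever `collate_fn` returns is exactly what `next()` hands back, so the batch layout is under your control.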

Overall, understanding how the `next()` and `iter()` functions work in PyTorch gives you greater control when building an efficient data loading pipeline, which can make your training loops run faster. For anyone interested in delving deeper, check PyTorch’s official Data loading and processing tutorial.

Some of the most useful recipes in data science rely on Python’s built-in `iter()` and `next()` functions to iterate over datasets, and they are especially handy with PyTorch’s `DataLoader`. Because batches are produced on demand, these functions make it possible to work through datasets that would otherwise be too large to load into memory all at once.

Let’s delve deeper into how `iter()` and `next()` work within PyTorch’s Dataloader.

The Iterator: iter()

Simply put, an iterator in Python helps us navigate through a collection such as a list or a dictionary. When we call `iter()` on a collection, an iterator object is returned that remembers its state and knows how to get to the next value. In the context of PyTorch’s `DataLoader()`, `iter()` has a similar role.

Consider this vanilla example to illustrate the concept before connecting it with PyTorch:

my_list = ['apple', 'banana', 'cherry']

my_iter = iter(my_list)

After initializing the iterator `my_iter` by calling `iter()` on `my_list`, we already have a way of navigating through `my_list`.

The Navigating Tool: next()

`next()` takes this iterator and returns the next item from the iterator. This is where we move through our dataset. For instance:

print(next(my_iter))  # prints 'apple'

print(next(my_iter))  # prints 'banana'

print(next(my_iter))  # prints 'cherry'

Using `next()` in association with `iter()` is an elegant approach to stepping through collections – this sort of construct is referred to as Python’s iterator protocol.

How `iter()` and `next()` are used inside Pytorch’s DataLoader

PyTorch’s `DataLoader()` takes a dataset and produces an iterable over batches of the dataset. It achieves this using the principles we outlined above: `iter()` and `next()`. Here’s how you’d typically use this during training machine learning models:

data_loader = DataLoader(my_dataset, batch_size=4, shuffle=True)

data_iter = iter(data_loader)

# len(data_loader) is the number of batches, including a final partial one.

for i in range(len(data_loader)):

    batch = next(data_iter)

    # your model training code here

The `iter(data_loader)` statement transformed our `data_loader` object into an iterator. We then called `next(data_iter)` every time we needed a new batch for processing.

Once every batch has been returned, the next call to `next()` raises a `StopIteration` exception, signaling that there are no more elements to retrieve. The DataLoader does not wrap around by itself: to go through the dataset again, for example for the next epoch, call `iter(data_loader)` again to obtain a new iterator (with `shuffle=True`, each new pass draws a new random order).
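A DataLoader iterator stops after one pass, so if you want batches to keep coming, the cycling has to be written explicitly. One possible sketch is a small generator; `endless_batches` is a hypothetical helper, not part of PyTorch.

```python
from torch.utils.data import DataLoader

def endless_batches(loader):
    """Yield batches forever, restarting the loader after each full pass."""
    while True:
        for batch in loader:  # each `for` calls iter(loader) afresh
            yield batch

loader = DataLoader(list(range(4)), batch_size=2)
stream = endless_batches(loader)

seen = [next(stream).tolist() for _ in range(5)]
print(seen)  # [[0, 1], [2, 3], [0, 1], [2, 3], [0, 1]]
```

This pattern is handy for step-based training loops that count iterations rather than epochs.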

Hopefully, the examples above demonstrate the importance of the `iter()` and `next()` functions when working with PyTorch’s `DataLoader`. They let PyTorch handle datasets efficiently even when they are larger than the available memory, by loading only what is needed for each training iteration. Now go ahead and experiment with them to streamline your model training.

So, let’s decode what `next()` and `iter()` actually do with PyTorch’s DataLoader. In Python, an iterator is an object that produces a sequence of values one at a time. This is where the built-in functions `iter()` and `next()` come into play.

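The `iter()` function takes up to two parameters:

– object – the object whose iterator has to be created (list, tuple, etc.); when a sentinel is given, this must instead be a callable taking no arguments

– sentinel (Optional) – when given, `iter()` calls the object repeatedly and stops as soon as the returned value equals the sentinel

It returns an iterator object. In the one-argument form, it calls the `__iter__()` method on the given object (falling back to the sequence protocol if there is none). For example, when using DataLoader in PyTorch: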

loader = torch.utils.data.DataLoader(...)

it = iter(loader)

Here, `iter()` returns an iterator over the data loader, which you can then step through with `next()`.
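The two-argument (sentinel) form of `iter()` is rarely needed with a DataLoader, but a plain-Python sketch shows how it behaves. The in-memory text stream is an assumption for illustration.

```python
import io

stream = io.StringIO("alpha\nbeta\n\ngamma\n")

# Two-argument form: call stream.readline repeatedly until it returns "\n",
# that is, until the first blank line.
lines = list(iter(stream.readline, "\n"))
print(lines)  # ['alpha\n', 'beta\n']
```

Note that the first argument here is a callable (`stream.readline`), not an iterable.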

`next()`, on the other hand, just retrieves the next item from the iterator. It also takes up to two parameters:

– iterator – an iterator object

– default (Optional) – this value is returned if the iterator is exhausted (there is no next item); without it, `StopIteration` is raised

For instance, in the context of PyTorch’s DataLoader, once we have our iterator `it`, we use `next()` to get the next element in the sequence:

data, target = next(it)

What happens here is that `next()` loads the next mini-batch of data and targets by calling the `__next__()` method of the iterator (not of the DataLoader itself).
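The optional `default` argument of `next()` works the same way for any iterator, including one obtained from a DataLoader. A minimal plain-Python sketch:

```python
numbers = iter([1, 2])

a = next(numbers, None)  # 1
b = next(numbers, None)  # 2
c = next(numbers, None)  # None: the iterator is exhausted, no exception raised

print(a, b, c)
```

Choose a default that cannot be mistaken for real data; `None` works well when batches are tensors or tuples.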

To summarize, `iter()` and `next()` are built-in Python functions that return an iterator for an iterable and fetch the next item from an iterator, respectively. Applied to PyTorch’s DataLoader, they help to load and serve data in batches efficiently for training or testing neural networks.

If you want to dive deeper, PyTorch’s official DataLoader documentation is a useful source, and Python’s official documentation on the built-in functions `iter()` and `next()` provides even more insight.

Note that while this discussion focuses on PyTorch’s DataLoader, iterators and the use of `iter()` and `next()` apply broadly across Python programming, making them vital tools in your coding arsenal.