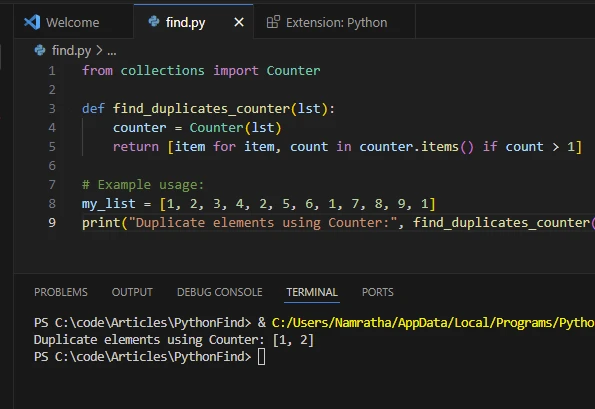

| Task | Action |
|---|---|
| Import PyTorch library | `import torch` |
| Create a Python list | `py_list = [1, 2, 3, 4]` |
| Convert list to tensor | `tensor = torch.Tensor(py_list)` |
| View the tensor | `print(tensor)` |

Now let’s delve into the process of converting a Python list to a PyTorch tensor. PyTorch is a Python library for machine learning and numerical computation. Its central data structure is the tensor, a multi-dimensional array whose elements all share a single data type.

Python lists are convenient, flexible, and easy-to-use data structures that can store and manipulate any type of data. However, for heavy numerical computation, especially on large datasets, built-in types like lists lack the necessary efficiency and speed. That’s where PyTorch tensors come in.

To convert a Python list to a PyTorch tensor, first import the library with the `import torch` statement. Next, create your list: either a flat list of numbers or a nested list of numbers (a list of lists). Strings are not allowed, because tensors hold only numeric values. Then pass the list to the `torch.Tensor` constructor. The resulting tensor holds the same values as the original list (stored as 32-bit floats by default), in a format that supports the vectorized matrix and tensor operations used by machine learning models.

Finally, to inspect what your tensor looks like, pass the tensor variable to the `print()` function.
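Putting the four steps together gives a short runnable script. It also shows a detail worth knowing from PyTorch’s documented behaviour: the `torch.Tensor` constructor always produces the default floating-point type (normally `float32`), whereas the `torch.tensor` factory function infers the type from the data. This is a minimal sketch; `float_tensor` and `int_tensor` are illustrative names.

```python
import torch

# Step 2: a plain Python list of integers
py_list = [1, 2, 3, 4]

# Step 3: torch.Tensor always produces the default float dtype
float_tensor = torch.Tensor(py_list)

# For comparison, torch.tensor infers the dtype (int64 for Python ints)
int_tensor = torch.tensor(py_list)

# Step 4: inspect the results
print(float_tensor)        # tensor([1., 2., 3., 4.])
print(float_tensor.dtype)  # torch.float32
print(int_tensor.dtype)    # torch.int64
```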

This process combines the flexibility and convenience of Python lists with the computational power of PyTorch tensors, which leads to more efficient code, especially in machine learning and data science.

Remember, as a professional coder optimising your work, you should aim for clean, fast, and efficient code. Tools like PyTorch tensors help achieve this when your data starts out in Python lists, and the conversion saves time and computing resources, especially if you’re dealing with large, multi-dimensional lists.

As a professional coder, I am fascinated by the malleable relationship between Python and PyTorch. Python lists are versatile containers for storing and organizing data items, and because they can hold items of any type, they are useful across a wide range of tasks. In machine learning and deep learning, however, we often need more powerful and specialized data structures such as PyTorch tensors.

A tensor generalizes vectors and matrices to any number of dimensions. Tensors are the standard data structure in deep learning and are often preferred because:

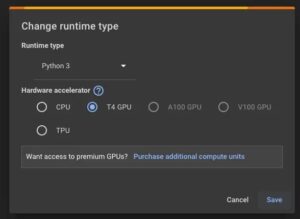

- Tensors can be moved to a GPU to accelerate computation, while Python lists always live in CPU memory

- PyTorch operations on tensors run as vectorized native (C++/CUDA) kernels, so they are much faster than element-by-element Python loops over lists for large-scale mathematical operations

- Tensors support automatic differentiation and gradient computation, which are required when training a neural network model

- Tensors allow for efficient representation of data batches. In machine learning, it is common to process inputs as batches, and the multi-dimensional nature of tensors makes this easy

Converting Python lists into PyTorch tensors can therefore be very beneficial in computationally demanding scenarios.

Let’s say you have the Python list `[1, 2, 3, 4, 5]`. You can convert it to a PyTorch tensor with the `torch.Tensor()` constructor, as in the example below:

```python
import torch

# Python list
p_list = [1, 2, 3, 4, 5]

# Convert it to a PyTorch tensor
tensor = torch.Tensor(p_list)
print(tensor)
```

This example shows that the Python list `p_list` has been transformed into a PyTorch tensor. You can confirm this change with the `type()` function. The official PyTorch documentation describes the many other options for tensor manipulation.
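A quick way to confirm the conversion, as a minimal sketch reusing the names from the example above, is to compare the types before and after; `isinstance()` is shown as the more idiomatic alternative to comparing `type()` results.

```python
import torch

p_list = [1, 2, 3, 4, 5]
tensor = torch.Tensor(p_list)

# type() reports the Python class of each object
print(type(p_list))  # <class 'list'>
print(type(tensor))  # <class 'torch.Tensor'>

# isinstance() is the more idiomatic check in real code
print(isinstance(tensor, torch.Tensor))  # True
```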

When aligning your coding tasks with machine learning or deep learning, understanding conversions between data structures, especially from Python lists to PyTorch tensors, is essential. Mastering these basics builds a clear foundation for proficient model development.

Do remember that while Python’s list type is useful in many situations, other types, and conversions between them, can be far more appropriate for specialized tasks and can streamline your programs considerably.

Let’s look more closely at converting a Python list into a PyTorch tensor. In simple terms, a tensor in PyTorch is a multi-dimensional matrix containing elements of a single data type.

PyTorch provides the `torch.tensor()` function for exactly this purpose. It takes a Python list or a similar sequence as input and returns a new tensor, i.e. a multi-dimensional array, containing those elements.
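To illustrate the “similar sequences” mentioned above, the sketch below (illustrative names, not from the original) passes a tuple and a nested sequence to `torch.tensor`, and uses its optional `dtype` argument to override the inferred type.

```python
import torch

# Tuples are accepted just like lists
from_tuple = torch.tensor((1, 2, 3))

# The dtype argument overrides the type torch.tensor would infer
as_float64 = torch.tensor([1, 2, 3], dtype=torch.float64)

# Nested sequences (here, a list of tuples) become a 2-D tensor
nested = torch.tensor([(1, 2), (3, 4)])

print(from_tuple.dtype, as_float64.dtype, nested.shape)
```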

Here’s a step-by-step breakdown of how you would accomplish the conversion from a Python list:

1. Importing Necessary Libraries:

The first thing to do is import the libraries required for the task. We’ll be needing the `torch` library to create tensors. So, we first import that:

import torch

2. Initialization of a List:

Next, we initialize a Python list. This could be any list; for instance, a list of integers, like:

list1 = [0, 1, 2, 3]

3. Conversion of the List into a PyTorch Tensor:

We can use the `torch.tensor()` function to turn our Python list into a PyTorch tensor:

```python
tensor1 = torch.tensor(list1)
print(tensor1)
```

When you run this code, it converts the list into a tensor and prints out its values.

Now let’s take a look at how this conversion actually works depending on the type of list provided.

1. One-dimensional list

Converting a one-dimensional list into a tensor creates a one-dimensional tensor, similar to a vector.

```python
single_dim_list = [0, 1, 2, 3]
tensor_from_single_dim_list = torch.tensor(single_dim_list)
```

2. Multi-dimensional list

A multi-dimensional list results in a similarly multi-dimensional tensor.

```python
multi_dim_list = [[0, 1], [2, 3]]
tensor_from_multi_dim_list = torch.tensor(multi_dim_list)
print(tensor_from_multi_dim_list)
```

This resultant tensor will have two rows and two columns, directly reflecting the structure of the original input list.
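One way to check the dimensionality described above is to inspect each tensor’s `.ndim` (number of dimensions) and `.shape` (size along each dimension). A minimal sketch, rebuilding both tensors so it runs on its own:

```python
import torch

tensor_from_single_dim_list = torch.tensor([0, 1, 2, 3])
tensor_from_multi_dim_list = torch.tensor([[0, 1], [2, 3]])

# .ndim counts dimensions; .shape gives the size along each one
print(tensor_from_single_dim_list.ndim, tensor_from_single_dim_list.shape)
print(tensor_from_multi_dim_list.ndim, tensor_from_multi_dim_list.shape)
```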

These steps show how a Python list can be converted into a PyTorch tensor using the library’s built-in functions. Whether the resulting tensor is one- or multi-dimensional depends only on how deeply the Python list is nested.

Note: Make sure your Python list contains only numeric values, and that nested lists have equal lengths at every level. Mixed numeric types such as integers and floats are promoted to a common type, but non-numeric values such as strings cause the conversion to fail with an exception.
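Both halves of the note can be seen in a small sketch. Mixed integers and floats are promoted to a floating-point tensor, while a string makes the conversion fail. Because the exact exception class can vary with the input and the PyTorch version, the sketch deliberately catches `TypeError`, `ValueError`, and `RuntimeError`; the `failed` flag is an illustrative name.

```python
import torch

# Mixed ints and floats are promoted to the default float dtype
mixed_numbers = torch.tensor([1, 2.5, 3])
print(mixed_numbers.dtype)  # torch.float32

# A string cannot be stored in a numeric tensor
failed = False
try:
    torch.tensor([1, "two", 3.0])
except (TypeError, ValueError, RuntimeError) as err:
    failed = True
    print("Conversion failed:", err)
```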

To learn more about PyTorch tensors, visit the official PyTorch documentation page [here](https://pytorch.org/docs/stable/tensors.html).

In Python, we typically use lists to store data. However, when processing large amounts of data, PyTorch tensors may work more efficiently because of the way they manage memory. Understanding how Python lists and PyTorch tensors handle data will help you decide which one best suits your needs.

Before we delve into comparing them, let’s see how we convert a Python list to a PyTorch Tensor:

Firstly, we need to import the PyTorch library:

import torch

Then, we can easily create a PyTorch Tensor from a Python list like in the following example:

```python
py_list = [1, 2, 3]
tensor = torch.Tensor(py_list)
```

Comparing Memory Efficiency

When it comes to memory usage, PyTorch tensors are more efficient than Python lists for several reasons:

• Data Homogeneity: Lists in Python are versatile and dynamic: they can hold different types of data and resize dynamically, and each item is a full Python object with its own metadata, referenced by a pointer stored in the list. This flexibility comes at the cost of increased memory usage. In contrast, PyTorch tensors, like NumPy arrays, hold homogeneous data of a single type, stored as raw values without per-item object overhead, which saves memory.

```python
import torch

# A Python list can hold heterogeneous data
list_data = ['Coding', 123, True]

# A PyTorch tensor holds homogeneous data of a single dtype
tensor_data = torch.tensor([1, 2, 3])
```

• Data Contiguity: A Python list stores a contiguous array of pointers, but the element objects themselves are scattered across the heap. A newly created tensor (like a NumPy array) stores the values themselves in one contiguous block of memory, which gives faster, cache-friendly access.

• Optimized for mathematical operations: Data stored in a tensor benefits greatly from vectorization. In practice, mathematical operations on tensors run quickly and efficiently on modern hardware, including Graphics Processing Units (GPUs).
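The contiguity point can be checked directly. This sketch (illustrative names) uses `is_contiguous()` and `element_size()`. It also shows that contiguity describes a tensor’s memory layout rather than being guaranteed: a transposed view of a contiguous tensor is not contiguous until `.contiguous()` copies it into a fresh buffer.

```python
import torch

t = torch.tensor([[1, 2, 3], [4, 5, 6]])
print(t.is_contiguous())  # True: values stored row by row in one buffer
print(t.element_size())   # 8: bytes per int64 element

# A transpose is a view with swapped strides, so it is not contiguous
t_T = t.t()
print(t_T.is_contiguous())  # False

# .contiguous() copies the data into a new contiguous buffer
print(t_T.contiguous().is_contiguous())  # True
```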

However, although PyTorch tensors use memory more efficiently, they are not inherently better; there are trade-offs. As mentioned earlier, Python lists are versatile: they can hold any type of data, be resized dynamically, and contain arbitrary objects as items. Lists also come with many built-in methods for manipulation, offering flexibility and ease of use. These features sometimes make Python lists the better choice over PyTorch tensors.

Remember, the choice between PyTorch tensors and Python lists should depend on your data processing needs: whether you want more versatility or more computational efficiency.

For more information on using PyTorch, you can refer to the official PyTorch documentation.

The performance of operations on Python lists and PyTorch tensors is an essential consideration for tasks that require high computational power. Below I compare the performance of Python lists and PyTorch tensors, explain what PyTorch tensors are and why they are used in place of regular Python lists, and show how to convert between the two.

Performance: Python Lists vs. PyTorch Tensors

Python has been lauded for its simple, clear syntax, but it isn’t known for speed, and its built-in list type performs poorly in heavy numerical computations. PyTorch tensors, on the other hand, are modeled on NumPy arrays and backed by operations implemented in C++ (and CUDA on GPUs), so numerical operations on them typically run much faster than equivalent operations written as Python loops over lists.

What are PyTorch tensors?

A PyTorch tensor is a multi-dimensional matrix containing elements of a single data type; essentially, it is an array. It is closely related to the tensor data structure in TensorFlow and the ndarray in NumPy. A distinguishing feature of PyTorch tensors is GPU-accelerated computing, a capability that puts them well ahead of plain Python lists (see the PyTorch documentation).

Why Use PyTorch Tensors Over Python Lists?

- Speed and Efficiency: The main advantage of PyTorch tensors over Python lists is their speed and efficiency in large-scale computations.

- GPU Support: Python lists have no GPU support, so they cannot use the parallel processing power of a Graphics Processing Unit (GPU) to speed up numerical computations. PyTorch tensors, however, offer integrated GPU support.

- Gradients and Computational Graphs: Deep learning models need gradients at many points in the network. PyTorch computes them with its automatic differentiation engine, autograd: operations on tensors created with `requires_grad=True` are recorded in a computational graph, and calling `.backward()` on the result propagates gradients back through that graph.
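A minimal autograd sketch: starting from a Python list of floats, it records a small computation and lets `.backward()` compute the gradient. The function y = x₁² + x₂² + x₃² is an arbitrary illustrative choice; its gradient is 2·x.

```python
import torch

# Gradients require a floating-point tensor with requires_grad=True
x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)

# Build a small computation: y = sum of x_i squared
y = (x ** 2).sum()

# backward() walks the recorded graph and fills x.grad with dy/dx = 2 * x
y.backward()
print(x.grad)  # tensor([2., 4., 6.])
```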

Converting Between Python Lists and PyTorch Tensors:

To improve the performance of your code, you might decide to convert your Python lists into PyTorch tensors. Here’s an example of how it is done:

```python
import torch

# Python list
list_x = [12, 23, 34, 45, 56, 67, 78, 89]

# Convert to PyTorch tensor
tensor_x = torch.tensor(list_x)
print(tensor_x)
```

In the above example, `torch.tensor()` converts the list to a tensor. From then on, we get the benefits of working with the data as a tensor, such as faster processing and the option to run computations on a GPU.

Similarly, we can convert a PyTorch tensor back to a Python list as follows:

```python
import torch

# PyTorch tensor
tensor_y = torch.tensor([12, 23, 34, 45, 56])

# Convert to Python list
list_y = tensor_y.tolist()
print(list_y)
```
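`tolist()` also preserves nesting, so a round trip reproduces the original structure, and for single-element results `item()` returns a plain Python number. A short sketch with illustrative values:

```python
import torch

nested = [[1, 2], [3, 4]]
t = torch.tensor(nested)

# tolist() rebuilds the same nested structure of Python ints
print(t.tolist() == nested)  # True

# item() extracts the value of a one-element tensor as a Python number
total = t.sum().item()
print(total, type(total))  # 10 <class 'int'>
```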

To recap, using PyTorch tensors instead of Python lists can save both memory and computation time, especially with large datasets or complex mathematical operations. Understanding how to convert Python lists to PyTorch tensors and back is therefore very useful for optimizing the efficiency of your code.

Making the transition from Python lists to PyTorch tensors brings numerous benefits, especially in machine learning, deep learning, and algorithm development. The main advantages are faster computation, more efficient memory use, and suitability for GPU computation.

Faster Computation

PyTorch tensors perform complex mathematical operations faster than Python lists. Tensors are similar to NumPy’s ndarrays, but they can also be placed on a GPU to accelerate computing. Compared with Python lists, they offer many powerful features that make them better suited to numerical computation.

Allow me to illustrate this point using a sample piece of code.

Let’s first consider the case of using standard Python lists:

```python
import time

# Using plain Python
start = time.time()
A, B = range(10000000), range(10000000)
product_list = [(a * b) for a, b in zip(A, B)]
print("Python list operation took: ", time.time() - start, " seconds")
```

Now let’s do the same operation using PyTorch tensors:

```python
import time
import torch

# Using PyTorch tensors
A, B = torch.arange(10000000), torch.arange(10000000)
start = time.time()
product_tensor = A * B
print("PyTorch Tensor operation took: ", time.time() - start, " seconds")
```

In this simple experiment, the PyTorch tensor operation should typically finish much faster than the Python list version, although exact timings depend on your hardware.

Efficient Memory Utilization

PyTorch tensors use memory more economically than Python lists. This is especially helpful when handling the large volumes of data typical of machine learning applications, where effective memory management strongly affects overall performance. For instance, compare adding two Python lists element by element with adding two PyTorch tensors:

```python
import torch

# Adding elements of two lists
list1 = [i for i in range(1000000)]
list2 = [i for i in range(1000000)]
resultant_list = [a + b for a, b in zip(list1, list2)]

# Adding elements of two tensors
tensor1 = torch.arange(1000000)
tensor2 = torch.arange(1000000)
resultant_tensor = tensor1 + tensor2
```

Here, `resultant_list` and `resultant_tensor` hold the same number of elements, but `resultant_tensor` consumes less memory than `resultant_list`.
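The memory claim can be estimated with a rough sketch. The measurement method is our assumption, not the original author’s: the list’s footprint is approximated as `sys.getsizeof` of the list (its pointer array) plus `sys.getsizeof` of every element, which overcounts slightly because CPython caches small integers, and the tensor’s footprint is taken as element size times element count, ignoring the small fixed overhead of the tensor object.

```python
import sys

import torch

n = 1_000_000
list1 = list(range(n))
list2 = list(range(n))
resultant_list = [a + b for a, b in zip(list1, list2)]
resultant_tensor = torch.arange(n) + torch.arange(n)

# List: pointer array plus one int object per element (approximate)
list_bytes = sys.getsizeof(resultant_list) + sum(
    sys.getsizeof(x) for x in resultant_list
)

# Tensor: one buffer of int64 values, 8 bytes each
tensor_bytes = resultant_tensor.element_size() * resultant_tensor.nelement()

print(f"list:   ~{list_bytes / 1e6:.1f} MB")
print(f"tensor: ~{tensor_bytes / 1e6:.1f} MB")
```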

GPU Computation

With PyTorch, large-scale operations become much smoother because tensor operations can run on Graphics Processing Units (GPUs), which execute thousands of threads in parallel. This is where tensors really shine over lists.

Moving your tensors to a GPU is easy in PyTorch:

```python
import torch

tensor = torch.tensor([1, 2, 3])

# Move the tensor to the GPU if one is available
if torch.cuda.is_available():
    tensor = tensor.to('cuda')
```
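A common device-agnostic pattern, shown here as a sketch rather than the only approach, selects the device once and creates tensors directly on it with the `device` argument of `torch.tensor`. It runs unchanged on machines without a GPU; `py_list` and `result` are illustrative names.

```python
import torch

# Pick the GPU when present, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# torch.tensor can place the data on the chosen device at creation time
py_list = [1.0, 2.0, 3.0]
t = torch.tensor(py_list, device=device)

# tolist() copies the values back into plain Python floats,
# wherever the tensor lives
result = (t * 2).tolist()
print(result)  # [2.0, 4.0, 6.0]
```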

The simple act of converting Python lists to PyTorch tensors thus confers these major advantages. As artificial intelligence and high-performance computing advance, moving to PyTorch tensors can bring substantial gains in speed, efficiency, and resource usage.

For more details on PyTorch tensors, feel free to check out the official PyTorch documentation.

Converting Python lists into PyTorch tensors is a crucial step for the operations and computations performed in neural networks. It’s fundamental to get this step right, because all later data processing depends on it. Nevertheless, the conversion can pose challenges, and a few easily overlooked factors give rise to common mistakes.

Ignoring Tensor Format:

PyTorch expects nested lists to have a regular, rectangular shape, but in your eagerness to move forward quickly, it’s easy to overlook this. Let’s take an example:

```python
import torch

# Converting a one-dimensional Python list to a tensor
list_number = [1, 2, 3, 4, 5]
tensor_number = torch.Tensor(list_number)
```

Converting a one-dimensional Python list like this works perfectly fine. With a multi-dimensional Python list, however, every sublist at the same level must have the same length. Users often forget this rule, which leads to conversion errors. For instance:

```python
import torch

# A two-dimensional Python list whose sublists have different lengths
wrong_list = [[1, 2, 3], [4, 5]]
torch_tensor = torch.Tensor(wrong_list)  # This raises an error
```

Therefore, always ensure that your Python list has a consistent shape before converting it to a PyTorch tensor.
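If the rows genuinely differ in length (for example, sequences of different lengths), one common workaround, which the original text does not cover, is to convert each row separately and pad them to a common length with `torch.nn.utils.rnn.pad_sequence`. The padding value of 0 is an assumption for illustration; choose one that cannot be confused with real data.

```python
import torch
from torch.nn.utils.rnn import pad_sequence

wrong_list = [[1, 2, 3], [4, 5]]

# Convert each row to its own 1-D tensor
rows = [torch.tensor(row) for row in wrong_list]

# Pad shorter rows at the end so all rows share one length
padded = pad_sequence(rows, batch_first=True, padding_value=0)
print(padded)
```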

Mishandling Data Types:

Another common mistake is not realizing that PyTorch tensors cannot hold arbitrary mixed types. Unlike Python lists, which can mix data types freely, a tensor stores all of its elements as a single numeric type.

For example:

```python
import torch

mixed_type_list = [1, 2, 'a', 4, 5]
torch_tensor = torch.Tensor(mixed_type_list)  # This results in an error
```

Attempting to convert this list into a tensor results in an error because the string `'a'` cannot be represented as a number. An essential tip is to preprocess and clean your data so that every element is numeric before conversion.

Not Retaining List Order:

Keep in mind, the order in which you define the values in your list matters significantly. This is especially important when working with sequential data like time series or any dataset where the order of observations is important.

```python
import torch

original_list = [1, 2, 3, 4, 5]
reversed_list = original_list[::-1]
original_tensor = torch.Tensor(original_list)
reversed_tensor = torch.Tensor(reversed_list)
```

Even though `original_list` and `reversed_list` contain the same elements, their different order means that `original_tensor` and `reversed_tensor` hold different sequences, which can affect subsequent calculations or model training.
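The order sensitivity can be verified with `torch.equal`, which returns `True` only when two tensors have the same shape and the same elements in the same positions. This sketch rebuilds the tensors with `torch.tensor` so it runs on its own:

```python
import torch

original_list = [1, 2, 3, 4, 5]
reversed_list = original_list[::-1]
original_tensor = torch.tensor(original_list)
reversed_tensor = torch.tensor(reversed_list)

# Same elements, different order: not equal element-wise
print(torch.equal(original_tensor, reversed_tensor))  # False

# Flipping along dimension 0 restores the original order
print(torch.equal(original_tensor.flip(0), reversed_tensor))  # True
```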

By being aware of these pitfalls when moving from Python lists to PyTorch tensors, you can avoid unnecessary problems and keep your data processing workflow and model training running smoothly. To sum up: keep nested lists rectangular, keep element types numeric, and preserve the order of your data, and you’ll avoid the most common conversion mishaps.

Overcoming the challenges of converting a Python list to a PyTorch tensor mostly comes down to understanding how each structure works and using PyTorch’s built-in functions to perform the transformation.

First off, PyTorch is an open-source machine learning library based on the Torch library. It’s commonly used for applications such as deep learning and natural language processing. A tensor in PyTorch is the counterpart of NumPy’s ndarray: an n-dimensional array.

The challenge in this conversion arises because a Python list is a flexible, dynamic array that can hold any type, whereas a PyTorch tensor is more like a statically typed array with additional functionality.

Fortunately, PyTorch has a built-in function to convert a list into a tensor:

```python
import torch

# Here's a simple Python list
python_list = [4, 8, 6, 5, 6, 8]

# Now we'll convert it into a PyTorch tensor
tensor_from_list = torch.tensor(python_list)

# tensor_from_list is now a Tensor object you can use in PyTorch code
print(tensor_from_list)
```

It is essential that all items in your Python list are numeric (integers, floats, or booleans), because tensors enforce a single data type. Mixed numeric values are promoted to a common type, but anything non-numeric is rejected.

If your list contains different kinds of data, such as `[1, "two", 3.0]`, you will encounter errors during the conversion, since PyTorch tensors require consistent numeric data types.

To prevent this, make sure the Python list holds only numeric data before converting it into a PyTorch tensor. If it contains other types, restructure the list so that all elements are numbers. Conversion between integer and floating-point types, on the other hand, is easy: PyTorch tensors provide methods such as `.int()`, `.float()`, and `.to()` (NumPy’s equivalent is `astype`):

```python
import torch

list_of_floats = [1.0, 2.0, 3.0]
tensor_from_floats = torch.tensor(list_of_floats)

# Cast the float tensor to 32-bit integers
integer_tensor = tensor_from_floats.int()
print(integer_tensor)
```
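Note that casting from float to integer truncates toward zero rather than rounding, which can surprise readers who expect rounding. A small sketch with illustrative values, using the general-purpose `.to()` method:

```python
import torch

floats = torch.tensor([1.7, -1.7, 2.0])

# Float-to-integer casts drop the fractional part (truncate toward zero)
truncated = floats.to(torch.int32)

# Round first when nearest-integer behaviour is wanted
rounded = floats.round().to(torch.int32)

# .float() (or .to(torch.float32)) converts in the other direction
ints = torch.tensor([1, 2, 3])
as_floats = ints.float()

print(truncated, rounded, as_floats.dtype)
```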

In brief, converting Python lists to PyTorch tensors can be tricky because lists impose no type restrictions, but the functions packaged in the PyTorch library make the process straightforward. Since a PyTorch tensor resembles a NumPy array, the data must have a consistent type throughout the list before conversion.

In summary, converting Python lists to PyTorch tensors is a fundamental step when you use the PyTorch library for machine learning. It helps you handle and process large datasets effectively, which the complex algorithms involved require.

To convert a Python list into a PyTorch tensor, run the following snippet:

```python
import torch

python_list = [1, 2, 3]
torch_tensor = torch.tensor(python_list)
```

A crucial feature of a Tensor that sets it apart from a regular Python list is how Tensors can easily be utilized on a GPU for computing. This becomes an asset when performing computationally intensive tasks typically prevalent in training deep learning models.

The table below contrasts Python lists with PyTorch tensors:

| Aspect | Python List | PyTorch Tensor |
|---|---|---|
| Data storage | Stored in RAM | Can be stored and processed on a GPU |
| Performance | Lower performance for large data sets | Higher performance due to vectorization and GPU utilization |
| Use case | General purpose | Primarily used for machine learning algorithms |

You can learn more about tensors at the official PyTorch documentation.

The move from Python lists to PyTorch tensors matters in every industry where machine learning and deep learning models are prominent. From healthcare to advertising and e-commerce, essentially any industry dealing with huge datasets can benefit. Boosting computational performance and making your code more efficient is well worth the time invested in learning to convert your Python lists to PyTorch tensors.

If you develop in PyTorch, learn more than just the conversion process: understand the benefits, and master the methods needed to use optimized tools like PyTorch tensors in Python.