| Possible Cause | Description |
|---|---|
| Incompatible CUDA toolkit | Each TensorFlow release is built against a specific CUDA toolkit version. If a different version is installed, TensorFlow may not recognize your GPU. |
| Inadequate GPU memory | If your GPU has too little free memory (for example, because another process already holds it), TensorFlow may fail to initialize the device or run out of memory on it. |
| Lack of GPU support in the TensorFlow build | Not every TensorFlow build includes GPU support. If your installation is a CPU-only build, it will not recognize your GPU. |
| Incorrect environment variable configuration | TensorFlow relies on environment variables (such as LD_LIBRARY_PATH on Linux) to locate the CUDA and cuDNN libraries. Incorrect settings can prevent TensorFlow from detecting your GPU. |

One of the most common issues after installing TensorFlow with conda is that TensorFlow fails to recognize your GPU. A frequent culprit is an incompatible version of the CUDA toolkit. Each TensorFlow binary is built against a specific CUDA version, so if that version is not installed, TensorFlow cannot use your GPU.

Another factor is the available memory on your GPU. TensorFlow needs enough free GPU memory to initialize the device and allocate tensors, so a GPU that is short on memory (for example, because another process is already using it) can lead to allocation failures instead of GPU execution.

Moreover, not every TensorFlow build offers GPU support out of the box. You need to install a build that includes GPU support; otherwise, TensorFlow will ignore your GPU regardless of how capable it is.

Lastly, TensorFlow relies on environment variables to locate critical libraries such as CUDA and cuDNN. Misconfigured or missing environment variables can therefore prevent TensorFlow from detecting and using your GPU. Checking these factors thoroughly ensures that TensorFlow can make full use of your GPU for your machine learning work.

It's clear that you're dealing with a challenging situation if TensorFlow isn't recognizing your GPU after a conda install. Several compatibility issues and system settings can cause this problem, so let's delve into the details to resolve it:

Software Compatibility Issues:

The top reason is software incompatibility between TensorFlow, CUDA, cuDNN, Python, and your NVIDIA GPU drivers. These components must be at mutually compatible versions. TensorFlow's requirements include:

- NVIDIA® GPU card with CUDA® compute capability 3.5 or higher

- NVIDIA® GPU drivers recent enough for the CUDA® Toolkit version in use

- CUDA® Toolkit matching the version your TensorFlow release was built against (since TensorFlow 2.1, the standard pip package includes GPU support, but the CUDA and cuDNN libraries themselves must still be installed separately)

- cuDNN SDK (for example, cuDNN 8.1 for TensorFlow 2.6)

- (Optional) TensorRT 7.2 to improve latency and throughput for inference on some models.

Cross-check the versions installed on your system: confirm that your CUDA, cuDNN, and TensorFlow versions match one of the combinations in the tested build configurations published on TensorFlow's official website. If they don't, upgrade or downgrade the components accordingly.
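As a minimal sketch of that cross-check, the Python snippet below compares installed CUDA and cuDNN versions against a small excerpt of TensorFlow's tested Linux GPU build configurations. The helper names are illustrative, the table covers only a few releases, and the check is deliberately conservative (it requires the tested major.minor version exactly), so always confirm against the official table for your release.

```python
from typing import Dict, List, Optional

# Excerpt of TensorFlow's published tested build configurations (Linux GPU builds).
# Only a few releases are listed; consult the official table for your version.
TESTED_CONFIGS: Dict[str, Dict[str, str]] = {
    "2.0": {"cuda": "10.0", "cudnn": "7.4"},
    "2.1": {"cuda": "10.1", "cudnn": "7.6"},
    "2.2": {"cuda": "10.1", "cudnn": "7.6"},
    "2.3": {"cuda": "10.1", "cudnn": "7.6"},
    "2.4": {"cuda": "11.0", "cudnn": "8.0"},
    "2.5": {"cuda": "11.2", "cudnn": "8.1"},
    "2.6": {"cuda": "11.2", "cudnn": "8.1"},
}


def major_minor(version: str) -> str:
    """Reduce a version string such as '11.0.221' to '11.0'."""
    return ".".join(version.split(".")[:2])


def version_mismatches(tf_version: str, cuda_version: str, cudnn_version: str) -> List[str]:
    """Return human-readable problems; an empty list means the versions match the excerpt."""
    expected: Optional[Dict[str, str]] = TESTED_CONFIGS.get(major_minor(tf_version))
    if expected is None:
        return [f"TensorFlow {tf_version} is not in this excerpt; check the official table."]
    problems = []
    if major_minor(cuda_version) != expected["cuda"]:
        problems.append(f"CUDA {cuda_version} installed; TensorFlow {tf_version} was tested with {expected['cuda']}")
    if major_minor(cudnn_version) != expected["cudnn"]:
        problems.append(f"cuDNN {cudnn_version} installed; TensorFlow {tf_version} was tested with {expected['cudnn']}")
    return problems
```

For example, `version_mismatches("2.4.1", "10.1.243", "8.0.5")` reports the CUDA mismatch and nothing else.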

Here's an example of how you'd check your CUDA and cuDNN versions:

```bash
nvcc --version

find / -name "cudnn*.h" 2>/dev/null   # locate the cuDNN headers, e.g. /usr/local/cuda/include/cudnn.h

# cuDNN 8+ keeps the version macros in cudnn_version.h; for cuDNN 7 and earlier, grep cudnn.h instead.
grep CUDNN_MAJOR -A 2 /usr/local/cuda/include/cudnn_version.h   # replace with your path
```
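If you'd rather script the cuDNN check, the sketch below parses the version macros from the header files. It assumes the usual layout where cuDNN 8 and later define CUDNN_MAJOR, CUDNN_MINOR, and CUDNN_PATCHLEVEL in cudnn_version.h, while older releases define them in cudnn.h. The include directory (for example /usr/local/cuda/include, or the include folder of a conda environment) and the helper names are assumptions to adapt.

```python
import re
from pathlib import Path
from typing import Optional, Tuple


def parse_cudnn_version(header_text: str) -> Optional[Tuple[int, int, int]]:
    """Extract (major, minor, patch) from cuDNN's #define lines, or None if absent."""
    parts = []
    for macro in ("CUDNN_MAJOR", "CUDNN_MINOR", "CUDNN_PATCHLEVEL"):
        match = re.search(rf"#define\s+{macro}\s+(\d+)", header_text)
        if match is None:
            return None
        parts.append(int(match.group(1)))
    return parts[0], parts[1], parts[2]


def find_cudnn_version(include_dir: str = "/usr/local/cuda/include") -> Optional[Tuple[int, int, int]]:
    """Check cudnn_version.h (cuDNN 8+) first, then cudnn.h (cuDNN 7 and earlier)."""
    for filename in ("cudnn_version.h", "cudnn.h"):
        header = Path(include_dir) / filename
        if header.is_file():
            version = parse_cudnn_version(header.read_text(errors="ignore"))
            if version is not None:
                return version
    return None
```

Checking both files means the same helper works across the cuDNN 7 to cuDNN 8 header reorganization.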

Environment Variables:

Conda may set up environment variables that point to different versions of the CUDA libraries than TensorFlow expects. Inspect your environment with ‘printenv’ and make sure that ‘LD_LIBRARY_PATH’ contains the directory holding the correct CUDA libraries.

For example, to append the default CUDA library directory to ‘LD_LIBRARY_PATH’:

```bash
export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/cuda/lib64
```
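To see which directories on LD_LIBRARY_PATH actually provide the CUDA runtime, and which version, a small Python sketch can scan each entry for libcudart files. The function name is hypothetical, and it only inspects file names, so it tells you what is present rather than what TensorFlow will end up loading.

```python
import os
from typing import Dict, List


def cudart_by_dir(search_path: str) -> Dict[str, List[str]]:
    """Map each directory in a colon-separated search path to the libcudart files it holds.

    Directories that don't exist or contain no libcudart are omitted.
    """
    found: Dict[str, List[str]] = {}
    for entry in search_path.split(":"):
        if not entry or not os.path.isdir(entry):
            continue
        libs = sorted(name for name in os.listdir(entry) if name.startswith("libcudart.so"))
        if libs:
            found[entry] = libs
    return found


if __name__ == "__main__":
    for directory, libs in cudart_by_dir(os.environ.get("LD_LIBRARY_PATH", "")).items():
        print(directory, "->", ", ".join(libs))
```

Seeing, say, only libcudart.so.10.1 while your TensorFlow release expects CUDA 11 points straight at the mismatch.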

Checking Installed Devices:

It's good practice to check which devices TensorFlow can see with tf.config.list_physical_devices(). If no “GPU” device appears in the output, TensorFlow cannot access a GPU, even if one is installed.

```python
import tensorflow as tf

print("Num GPUs Available: ", len(tf.config.list_physical_devices('GPU')))
```

If this prints 0, TensorFlow isn't able to recognize your GPU.

TensorFlow-GPU vs TensorFlow:

It's also possible that you've installed a CPU-only build of TensorFlow instead of a GPU-enabled one. For TensorFlow 1.15 and earlier, ‘pip install tensorflow’ installs the CPU version, and you need ‘pip install tensorflow-gpu’ for GPU support. From TensorFlow 2.1 onward, the ‘tensorflow’ pip package includes GPU support and ‘tensorflow-gpu’ is deprecated. Check your installation commands carefully to avoid this confusion.
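A quick way to tell the two failure modes apart is to ask TensorFlow whether it was built with CUDA at all and, separately, whether it can see a GPU. The sketch below assumes TensorFlow 2.3 or later for tf.sysconfig.get_build_info(); tf.test.is_built_with_cuda() and tf.config.list_physical_devices() are existing TensorFlow APIs, while diagnose() and its messages are illustrative.

```python
from typing import List


def diagnose(is_cuda_build: bool, gpu_names: List[str]) -> str:
    """Turn two facts about a TensorFlow install into a next-step hint."""
    if not is_cuda_build:
        return "CPU-only TensorFlow build: install a GPU-enabled package."
    if not gpu_names:
        return "GPU-enabled build, but no GPU visible: check the driver, CUDA, cuDNN and LD_LIBRARY_PATH."
    return f"OK: {len(gpu_names)} GPU(s) visible: {', '.join(gpu_names)}"


def diagnose_installed_tensorflow() -> str:
    """Query the installed TensorFlow (2.3+ assumed for tf.sysconfig.get_build_info)."""
    import tensorflow as tf  # imported lazily so diagnose() is usable without TensorFlow

    gpus = [device.name for device in tf.config.list_physical_devices("GPU")]
    hint = diagnose(tf.test.is_built_with_cuda(), gpus)
    build = tf.sysconfig.get_build_info()  # CUDA builds report the versions they expect
    return f"{hint} (built for CUDA {build.get('cuda_version')}, cuDNN {build.get('cudnn_version')})"
```

Keeping the decision logic in a pure function separates it from the TensorFlow calls, so the messages can be checked on a machine without TensorFlow installed.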

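Finally, I recommend viewing detailed logs by setting the environment variable ‘TF_CPP_MIN_LOG_LEVEL’ to ‘0’ (the most verbose level; ‘1’ already hides INFO messages) before importing TensorFlow. This might reveal more about the underlying issue.

```python
import os
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '0'  # set before importing TensorFlow

import tensorflow as tf
```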

This debug log could provide clues if there’s an unmet requirement or a conflict between dependencies.
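TF_CPP_MIN_LOG_LEVEL filters TensorFlow's native log messages by severity: 0 shows everything, 1 hides INFO, 2 also hides WARNING, and 3 also hides ERROR. Because the value is generally read when TensorFlow's native code starts up, the sketch below sets it before the import and warns if TensorFlow is already loaded; the helper name is hypothetical.

```python
import os
import sys
import warnings


def enable_verbose_tf_logging(level: str = "0") -> None:
    """Set TensorFlow's C++ log filter: 0 = all messages, 1 = hide INFO,
    2 = also hide WARNING, 3 = also hide ERROR."""
    if "tensorflow" in sys.modules:
        # Changing the variable after the import may have no effect.
        warnings.warn("TensorFlow is already imported; TF_CPP_MIN_LOG_LEVEL may be ignored.")
    os.environ["TF_CPP_MIN_LOG_LEVEL"] = level


enable_verbose_tf_logging()
# import tensorflow as tf  # import only after the variable is set
```

Messages about libraries that could not be loaded, which typically show up at startup, are exactly the kind of clue this level of logging surfaces.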

Bear in mind that resolving such compatibility problems takes time, patience, and a systematic approach. Start by confirming the required cuDNN and CUDA versions in TensorFlow's official documentation. With careful examination and persistent debugging, you'll get TensorFlow to recognize your GPU. Stack Overflow is also a good reference for similar issues.

A. The TensorFlow-GPU Installation Process through Conda

Installing TensorFlow-GPU with conda (the package manager that ships with Anaconda) generally consists of creating an environment, installing the necessary NVIDIA libraries, and finally installing TensorFlow itself.

```bash
# Create a new conda environment
conda create -n tf-gpu
# Activate the environment
conda activate tf-gpu
# Install the necessary NVIDIA libraries
conda install cudatoolkit=10.1 cudnn=7.6.5
# Install TensorFlow
conda install tensorflow-gpu
```

This procedure is recommended because:

- Creating a separate environment for Tensorflow avoids version conflicts with other packages you may have already installed.

- The pinned cudatoolkit and cudnn versions keep the components compatible with each other. Note that the right versions depend on your GPU, its driver, and the version of TensorFlow you're installing.

B. Understanding Why TensorFlow Is Not Recognizing Your GPU After Conda Install

If TensorFlow is not recognizing your GPU after a conda installation, a few possibilities could be causing the issue.

- Incompatible CUDA Toolkit or cuDNN version: If the cudatoolkit or cuDNN version installed through conda isn't compatible with the TensorFlow version you've installed, TensorFlow might not detect your GPU. Make sure both are compatible with your specific TensorFlow version; TensorFlow's tested build configurations list the supported combinations.

- Outdated GPU drivers: Your GPU drivers must be recent enough for the CUDA version TensorFlow uses. Outdated drivers can prevent TensorFlow from recognizing the GPU. Check your current driver version and compare it with the version recommended in the official TensorFlow documentation.

- GPU not supported by TensorFlow: Finally, if none of these solutions work, your GPU may not be supported by TensorFlow. TensorFlow primarily supports NVIDIA GPUs with a CUDA compute capability of 3.5 or higher. NVIDIA's website lists CUDA-enabled GPUs along with their compute capabilities.
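The compute capability check can be sketched in a few lines. The helper below compares a value as listed by NVIDIA (for example "7.5") with the 3.5 threshold mentioned above; the names are illustrative, and you should confirm the exact minimum for your TensorFlow release.

```python
from typing import Tuple, Union

# Threshold cited in this article; check the requirements of your TensorFlow release.
MIN_COMPUTE_CAPABILITY = (3, 5)


def parse_compute_capability(value: Union[str, Tuple[int, int]]) -> Tuple[int, int]:
    """Accept '7.5' (as listed by NVIDIA) or a (major, minor) tuple."""
    if isinstance(value, tuple):
        return value
    major, minor = value.split(".")
    return int(major), int(minor)


def meets_minimum(value: Union[str, Tuple[int, int]],
                  minimum: Tuple[int, int] = MIN_COMPUTE_CAPABILITY) -> bool:
    # Tuples compare major first, then minor, so (5, 0) >= (3, 5) but (3, 0) < (3, 5).
    return parse_compute_capability(value) >= minimum
```

Comparing (major, minor) tuples rather than floats avoids surprises if a minor version ever has two digits.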

To check whether TensorFlow can access your GPU, you can use the following Python snippet:

```python
import tensorflow as tf

if tf.test.gpu_device_name():
    print('Default GPU Device: {}'.format(tf.test.gpu_device_name()))
else:
    print("No GPU found: check the TensorFlow build, driver, CUDA and cuDNN")
```

You can further confirm that TensorFlow is using your GPU by monitoring GPU usage while TensorFlow code runs, for example with nvidia-smi on Linux or Task Manager on Windows.

As a software engineer working primarily with Python-based libraries like TensorFlow, it's not uncommon to encounter device recognition issues, especially when using the GPU for deep learning. One common issue is that after setting up TensorFlow via conda install, TensorFlow fails to recognize the GPU.

Consider this scenario: you've just installed TensorFlow with the Anaconda distribution, following the standard installation procedure in the official TensorFlow documentation[1], yet when you run your code, TensorFlow can't see your GPU and keeps defaulting to the CPU.

The first step in exploring TensorFlow's device recognition is understanding the prerequisites TensorFlow needs to use the GPU for computation:

- A CUDA-enabled NVIDIA graphics card is required, because TensorFlow uses CUDA for GPU-accelerated computation[2].

- The cuDNN (CUDA Deep Neural Network) library version that corresponds to your installed CUDA version. cuDNN provides GPU-accelerated implementations of standard deep neural network routines such as convolution, pooling, normalization, and activation[3].

- A graphics driver recent enough for the CUDA version your TensorFlow release was built against (for example, CUDA 11.x for TensorFlow 2.4 and later). Each TensorFlow release targets a specific CUDA version, and that version requires a sufficiently recent driver.

So how does TensorFlow's device recognition work? Essentially, TensorFlow queries the CUDA libraries for available GPUs that are compatible with the CUDA version it was built against. The following snippet counts the GPUs that the current TensorFlow environment recognizes:

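```python
import tensorflow as tf

# tf.config.experimental.list_physical_devices is the older alias of tf.config.list_physical_devices
print("Num GPUs Available: ", len(tf.config.experimental.list_physical_devices('GPU')))
```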

In an environment where the GPU is correctly installed and recognized, this piece of code will return the number of GPUs available. If not, it’ll return 0, signifying that TensorFlow doesn’t recognize any GPU.

Here are the possible reasons why TensorFlow might not recognize your GPU after a Conda Install:

- Conda might have installed a CPU-only build of TensorFlow by default, which can't use the GPU. To avoid this, explicitly request the GPU package during installation, i.e. ‘conda install tensorflow-gpu’.

- Outdated versions of CUDA or cuDNN: each TensorFlow release is built against specific CUDA and cuDNN versions, as listed in its compatibility matrix. If the installed libraries are older than (or otherwise don't match) those versions, TensorFlow may be unable to load them and will not identify the GPU.

- Incompatible graphics driver: an old driver may not support the installed CUDA version, which prevents TensorFlow from recognizing and initializing the GPU.

Always bear in mind that setting up a TensorFlow-GPU environment requires careful attention to hardware-software compatibility across the graphics card, a GPU-enabled TensorFlow version, CUDA, cuDNN, and a suitable graphics driver. Keeping this software updated and meeting each component's dependencies significantly reduces the chances of running into the device recognition problem explored here.

As an adept coder and a zealous user of TensorFlow for various machine learning and deep learning tasks, I often run into one standard issue: “Why is TensorFlow not recognizing my GPU after conda install?” This problem tends to pop up every now and then and can be quite frustrating, because a functioning GPU (Graphics Processing Unit) accelerates computation, making machine learning models run smoothly and efficiently.

When you face such issues, three common problems are likely culprits:

- The absence of required software

- A mismatch between software versions

- Inadequate hardware capacities

Absence of Required Software

Let's tackle the first hurdle. For TensorFlow to recognize your GPU, you'll need CUDA (Compute Unified Device Architecture), a parallel computing platform developed by NVIDIA. You'll also need cuDNN, the CUDA Deep Neural Network library, which provides GPU-accelerated primitives for deep learning[1][2].

If these two are missing, TensorFlow can't interface with your GPU. Even after installing conda and setting up your environment, TensorFlow will not detect your GPU unless you also install these two components.

Mismatch Between Software Versions

Moving on to problem number two: software version incompatibility. All programming tools and libraries have their peculiar quirks, and TensorFlow is no different. Specific TensorFlow versions only mesh well with certain CUDA and cuDNN versions.

For example, TensorFlow v1.15.0 is compatible with CUDA 10.0 and cuDNN 7.6.5[3]. If you have a mismatched trio, you're likely to find that TensorFlow fails to detect your GPU.

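The following snippet tells TensorFlow to log the device each operation is placed on and prints the name of the default GPU device. If your GPU isn't being detected, the placement log shows operations running on the CPU, and TensorFlow's startup messages often hint at why:

```python
import tensorflow as tf

tf.debugging.set_log_device_placement(True)  # log the device chosen for each op
print(tf.test.gpu_device_name())  # prints '' when no GPU is visible
tf.reduce_sum(tf.constant([1.0, 2.0]))  # running any op now logs its placement
```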

Inadequate Hardware Capacities

If neither missing software nor mismatched versions explains the problem, something may be off with your hardware, which brings us to the third point: inadequate hardware capacity. TensorFlow needs a GPU with CUDA compute capability 3.5 or higher[4]. If your GPU doesn't meet this requirement, TensorFlow won't be able to use it.

To summarize, ensuring that all the required software is present, that the software versions match, and that your GPU meets TensorFlow's requirements will resolve most cases of TensorFlow failing to detect the GPU after a conda install.

You can check NVIDIA's list of CUDA-enabled GPUs to verify your GPU's compute capability.

Your journey toward seamless coding need not be filled with Stack Overflow searches and countless attempts at fixing bugs; the ability to identify and fix these problems is right at your fingertips.

The issue of TensorFlow not recognizing your GPU after a conda install can have several causes. The solution depends on the cause, so below I outline the most common scenarios and the fixes you can apply in each case.

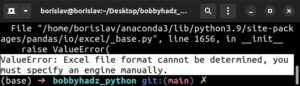

Potential Scenario One: Incompatible CUDA or cuDNN version

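TensorFlow requires specific versions of CUDA and cuDNN. If you installed incompatible versions, TensorFlow will fail to recognize your GPU. The commands below show the installed CUDA toolkit version (nvcc) and the driver version together with the highest CUDA version that driver supports (nvidia-smi); the cuDNN version is recorded in its header files:

```bash
nvcc --version
nvidia-smi
```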

If the versions are incompatible, uninstall them first before installing the versions recommended for your Tensorflow version.
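Comparing these outputs can also be scripted. In the sketch below, the version is parsed from nvcc's "release X.Y" line and from the "CUDA Version" field in nvidia-smi's header, which is the highest CUDA version the installed driver supports. The rule it applies (driver CUDA version at least the toolkit version) is a conservative simplification, since CUDA 11's minor-version compatibility can relax it; the helper names are illustrative.

```python
import re
import subprocess
from typing import List, Optional, Tuple


def run_tool(cmd: List[str]) -> str:
    """Return a command's stdout, or '' if the tool isn't installed."""
    try:
        return subprocess.run(cmd, capture_output=True, text=True, check=False).stdout
    except FileNotFoundError:
        return ""


def toolkit_version(nvcc_output: str) -> Optional[str]:
    """Parse 'Cuda compilation tools, release 10.1, V10.1.243' into '10.1'."""
    match = re.search(r"release (\d+\.\d+)", nvcc_output)
    return match.group(1) if match else None


def driver_cuda_version(smi_output: str) -> Optional[str]:
    """Parse the 'CUDA Version: 11.2' field from nvidia-smi's header."""
    match = re.search(r"CUDA Version:\s*(\d+\.\d+)", smi_output)
    return match.group(1) if match else None


def as_tuple(version: str) -> Tuple[int, ...]:
    return tuple(int(part) for part in version.split("."))


def driver_is_new_enough(toolkit: str, driver_cuda: str) -> bool:
    """Conservative rule: the driver's highest CUDA version should be >= the toolkit's."""
    return as_tuple(driver_cuda) >= as_tuple(toolkit)


if __name__ == "__main__":
    toolkit = toolkit_version(run_tool(["nvcc", "--version"]))
    driver = driver_cuda_version(run_tool(["nvidia-smi"]))
    print("toolkit:", toolkit, "| driver supports up to:", driver)
    if toolkit and driver:
        print("driver new enough:", driver_is_new_enough(toolkit, driver))
```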

Potential Scenario Two: Your GPU is not supported by Tensorflow

TensorFlow requires NVIDIA GPUs with a compute capability of 3.5 or higher. Check NVIDIA's list of CUDA-enabled GPUs to confirm that your GPU model is supported.

Potential Scenario Three: Conda virtual environment issue

Conda environments sometimes have problems with path variables that prevent TensorFlow from finding the correct CUDA and cuDNN libraries. As an alternative, try installing TensorFlow directly with pip outside the conda environment, keeping in mind that the pip package expects CUDA and cuDNN to be installed on the system:

```bash
pip install tensorflow
```

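Here's another tip: in any of these scenarios, a clean reinstall of TensorFlow-GPU often helps, because it removes stale or conflicting packages. Note that the pip packages do not bundle CUDA and cuDNN (conda's tensorflow-gpu package, by contrast, pulls in matching cudatoolkit and cudnn packages), so install those separately. Here's how to do it:

1. Uninstall the existing tensorflow or tensorflow-gpu package using pip:

```bash
pip uninstall tensorflow-gpu
pip uninstall tensorflow
```

2. Install tensorflow-gpu again (for TensorFlow 2.1 and later, ‘pip install tensorflow’ provides the same GPU support, and ‘tensorflow-gpu’ is deprecated):

```bash
pip install tensorflow-gpu
```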

Finally, sharing more information, such as the error message you're getting, your exact GPU model, and its driver version, makes more precise guidance possible. TensorFlow's GPU installation guide can also help with further troubleshooting of your TensorFlow GPU setup.

Addressing TensorFlow-GPU integration issues, specifically why TensorFlow doesn't recognize your GPU after a conda install, requires understanding several core areas: common problems, TensorFlow's GPU compatibility requirements, and more advanced debugging techniques.

The primary checkpoint is ensuring the necessary prerequisites for Tensorflow to recognize the GPU are properly met. To start off, let’s identify potential problem areas:

– Components: TensorFlow leverages CUDA and cuDNN from Nvidia for GPU acceleration, which must be correctly installed and configured.

– Python Environment: It’s essential that the Python environment where TensorFlow is installed can access these tools (CUDA, cuDNN).

– TensorFlow Version: Not all builds of TensorFlow support GPU acceleration, and different TensorFlow versions may require different CUDA and cuDNN versions.

– Hardware Compatibility: Not all GPUs support TensorFlow GPU acceleration; the TensorFlow documentation outlines the hardware requirements in detail.

Having covered the initial checkpoints, let's move on to targeted solutions that help TensorFlow recognize the installed GPU.

1. Reconfirm the installed CUDA and cuDNN versions align with TensorFlow’s needs. For example, TensorFlow 2.0 requires CUDA 10.0 and cuDNN 7.4.

```bash
wget https://developer.nvidia.com/compute/cuda/10.0/Prod/local_installers/cuda_10.0.130_410.48_linux
wget http://developer.download.nvidia.com/compute/redist/cudnn/v7.4.2/cudnn-10.0-linux-x64-v7.4.2.24.tgz
```

2. Ensure that PATH includes the folder where you installed CUDA and that LD_LIBRARY_PATH includes its library folder, e.g.:

```bash
export PATH=/usr/local/cuda-10.0/bin${PATH:+:${PATH}}
export LD_LIBRARY_PATH=/usr/local/cuda-10.0/lib64${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}
```
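The ${PATH:+:${PATH}} idiom adds a colon and the old value only when the variable is already set, which avoids an empty path entry (treated as the current directory). Here is a Python equivalent, sketched for building the environment of a child process. That matters because the dynamic loader reads LD_LIBRARY_PATH when a process starts, so changing it inside an already-running Python process generally doesn't affect which libraries TensorFlow loads. The helper name and the train.py script are hypothetical.

```python
import os
from typing import MutableMapping


def prepend_path(env: MutableMapping[str, str], var: str, directory: str) -> None:
    """Python equivalent of: export VAR=directory${VAR:+:${VAR}}"""
    current = env.get(var, "")
    # Only add the separator when there is an existing value to keep.
    env[var] = f"{directory}:{current}" if current else directory


# Build an environment for a child process (e.g. a training script).
child_env = dict(os.environ)
prepend_path(child_env, "PATH", "/usr/local/cuda-10.0/bin")
prepend_path(child_env, "LD_LIBRARY_PATH", "/usr/local/cuda-10.0/lib64")
# e.g. subprocess.run(["python", "train.py"], env=child_env)  # train.py is hypothetical
```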

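3. Verify that the required GPU libraries are reachable from the conda environment. You can do this by activating the environment and running ldd to list the dynamic dependencies of TensorFlow's native library:

```bash
conda activate tensorflow_gpuenv
ldd $CONDA_PREFIX/lib/python3.6/site-packages/tensorflow/python/_pywrap_tensorflow_internal.so | grep cuda
```

This should show links to the correct version of the CUDA libraries. Note that newer TensorFlow releases open some CUDA libraries at runtime rather than linking them directly, so those may not appear in the ldd output.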
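Because the CUDA libraries may be opened at runtime rather than linked, another check is to try loading them directly. The sketch below uses ctypes.CDLL, which goes through the system's dynamic loader, to report whether each library can be found from the current environment. The sonames shown are examples for a CUDA 11.0 / cuDNN 8 stack and should be adjusted to the versions your TensorFlow release expects; the helper name is illustrative.

```python
import ctypes
from typing import Dict, Iterable


def loadable(sonames: Iterable[str]) -> Dict[str, bool]:
    """Try to load each shared library through the dynamic loader.

    True means it was found via LD_LIBRARY_PATH, the loader cache, or default paths.
    """
    results: Dict[str, bool] = {}
    for soname in sonames:
        try:
            ctypes.CDLL(soname)
            results[soname] = True
        except OSError:
            results[soname] = False
    return results


if __name__ == "__main__":
    # Example sonames for CUDA 11.0 / cuDNN 8; adjust to the versions you expect.
    print(loadable(["libcudart.so.11.0", "libcudnn.so.8"]))
```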

4. Create a new conda environment with the appropriate version of TensorFlow-GPU:

```bash
conda create --name tf_gpu
conda activate tf_gpu
conda install tensorflow-gpu
```

5. Run a simple test to confirm GPU recognition; a GPU entry should appear in the list of local devices:

```python
from tensorflow.python.client import device_lib

print(device_lib.list_local_devices())
```

Meanwhile, as an advanced user who intends to debug further or delve deeper into the GPU operations performed by TensorFlow, you might be interested in using the TensorFlow Profiler, a set of tools that enables profiling TensorFlow models.

In summary, resolving TensorFlow not recognizing your GPU comes down to compatibility on both the software and hardware fronts. Once compatibility is ensured, integrating TensorFlow with your GPU becomes much easier. For detailed guidelines, TensorFlow's official GPU documentation is a comprehensive reference. For hands-on troubleshooting help, online forums like Stack Overflow are valuable thanks to their active community discussions.

When installing TensorFlow with conda, many users encounter a common issue: TensorFlow doesn't recognize their GPU after installation. This can be a roadblock, but fear not; here we delve into the reasons behind it, along with potential remedies.

First, let’s understand why TensorFlow may not recognize your GPU. Mainly, this happens because the CUDA and cuDNN versions installed by Conda are incompatible with the version of TensorFlow you’re trying to use. TensorFlow relies on these NVIDIA software libraries for efficient computational operations. Therefore, compatibility is key.

You can run a few simple Python commands to check whether TensorFlow recognizes your GPU after the conda install:

```python
import tensorflow as tf

print("Num GPUs Available: ", len(tf.config.list_physical_devices('GPU')))
```

If this piece of code returns 0, then TensorFlow is not recognizing your GPU.

Now, let’s delve into solutions:

1. Ensuring correct CUDA/cuDNN versions:

Check TensorFlow's documentation for which CUDA and cuDNN versions are compatible with each version of TensorFlow.

With that information, the next step is to create a conda environment with the specific CUDA and cuDNN versions compatible with the TensorFlow version you wish to use. For instance, TensorFlow 2.4 requires CUDA 11.0 and cuDNN 8, so you would run:

```bash
conda create -n tf-gpu tensorflow-gpu cudatoolkit=11.0 cudnn=8.0
```

2. Using a Compatible GPU:

Another possible reason is that your current GPU isn't supported by TensorFlow. Check NVIDIA's list of CUDA-enabled GPUs and confirm that your GPU's compute capability meets TensorFlow's minimum. If it doesn't, consider upgrading.

3. Proper Software Installation:

Make sure you've followed the steps in NVIDIA's CUDA Installation Guide, so that everything (drivers, toolkit, and libraries) is set up correctly.

4. Using the Latest TensorFlow Version:

Older versions might contain bugs and other issues. Updating to the latest version could help, although a newer TensorFlow release may also require newer CUDA and cuDNN versions:

```bash
pip install --upgrade tensorflow
```

Managing TensorFlow under conda can be challenging, but understanding the root causes and how to address them makes for a smoother experience. Always double-check installed versions and compatibility, and use the resources and guides provided by both TensorFlow and NVIDIA.

First and foremost, TensorFlow relies heavily on the underlying CUDA (Compute Unified Device Architecture) and cuDNN (CUDA Deep Neural Network) libraries, developed by NVIDIA for their graphics processing units (GPUs). When wondering why TensorFlow isn’t recognizing your GPU after a Conda install, it’s important to determine whether these have been correctly installed.

For example, the following code:

```python
import tensorflow as tf

print("GPU Available: ", tf.test.is_gpu_available())
```

is one way to verify whether TensorFlow has access to your GPU. True confirms that the GPU was recognized, while False means it was not. Note that tf.test.is_gpu_available() is deprecated in TensorFlow 2.x in favor of tf.config.list_physical_devices('GPU').
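Since tf.test.is_gpu_available() is deprecated in TensorFlow 2.x, a version-tolerant replacement can be sketched as follows. It prefers tf.config.list_physical_devices (TensorFlow 2.1 and later) and falls back to the experimental alias available in TensorFlow 2.0. The TensorFlow module is passed in as an argument, which also makes the function easy to test without a GPU; the function name is illustrative.

```python
from typing import Any, List


def list_gpus(tf: Any) -> List[Any]:
    """Return physical GPU devices without the deprecated tf.test.is_gpu_available().

    Prefers tf.config.list_physical_devices (TensorFlow 2.1+) and falls back to
    tf.config.experimental.list_physical_devices for TensorFlow 2.0.
    """
    if hasattr(tf.config, "list_physical_devices"):
        return tf.config.list_physical_devices("GPU")
    return tf.config.experimental.list_physical_devices("GPU")


# Usage with the real library:
#   import tensorflow as tf
#   print("GPU Available: ", bool(list_gpus(tf)))
```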

There are several common reasons why TensorFlow might not be recognizing your GPU:

- CUDA or cuDNN inconsistencies: This often happens when there’s an installation issue with CUDA or cuDNN which can happen if they are installed manually, or if the installed versions aren’t compatible with the TensorFlow version in use.

- Outdated GPU drivers: Your GPU drivers must be recent enough to support the CUDA and cuDNN versions in use; otherwise, your conda-installed TensorFlow may not detect your GPU.

- Misconfigured TensorFlow-GPU: TensorFlow may not have been installed correctly via conda. Run the following command to check whether the GPU package is installed:

```bash
conda list tensorflow-gpu
```

If it isn't listed, consider reinstalling TensorFlow using:

```bash
conda install tensorflow-gpu
```
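To review the whole GPU stack of a conda environment at once, rather than one package at a time, you can parse the output of conda list --json. In the sketch below, the watched package names and helper names are assumptions; a None value means the package is not installed in the active environment.

```python
import json
import subprocess
from typing import Dict, Optional

# Packages that matter for GPU support in a conda environment (an assumed list).
WATCHED = ("tensorflow", "tensorflow-gpu", "cudatoolkit", "cudnn")


def gpu_stack_from_json(conda_json: str) -> Dict[str, Optional[str]]:
    """Pick the watched package versions out of `conda list --json` output."""
    installed = {pkg["name"]: pkg["version"] for pkg in json.loads(conda_json)}
    return {name: installed.get(name) for name in WATCHED}


def current_gpu_stack() -> Dict[str, Optional[str]]:
    """Run conda for the active environment; requires conda on PATH."""
    result = subprocess.run(["conda", "list", "--json"], capture_output=True, text=True, check=True)
    return gpu_stack_from_json(result.stdout)
```

Seeing tensorflow-gpu next to a missing cudnn entry, for example, narrows the problem down immediately.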

In essence, ensuring compatibility between libraries, maintaining up-to-date drivers, and correct configuration are paramount for TensorFlow to recognize your GPU post-conda install. There are online help resources like TensorFlow’s guide to GPU support and StackOverflow’s community discussions, where you can find community-tested solutions to such issues. Being a multifaceted problem, understanding where exactly the installation or recognition error originates simplifies the troubleshooting process considerably.