RuntimeError: cuDNN error: CUDNN_STATUS_NOT_INITIALIZED Using PyTorch

“When working with PyTorch, the RuntimeError: cuDNN error: CUDNN_STATUS_NOT_INITIALIZED can typically be resolved by ensuring that your CUDA toolkit and cuDNN versions are compatible. Updating or reinstalling them often clears this common PyTorch issue.”

Let’s begin with a brief synopsis of the RuntimeError: cuDNN error: CUDNN_STATUS_NOT_INITIALIZED encountered in PyTorch. PyTorch raises this error when you try to use the GPU for a deep learning task but cuDNN (the CUDA Deep Neural Network library) cannot be initialized properly.

Let’s break down this error message into understandable parts:

– RuntimeError: Python’s built-in exception for errors detected during execution that do not fall into a more specific category. PyTorch raises it for most failures reported by its C++ and CUDA backends.

– cuDNN error: This indicates that the issue lies with NVIDIA’s cuDNN, the GPU-accelerated library of primitives for deep neural networks.

– CUDNN_STATUS_NOT_INITIALIZED: This cuDNN status code means that cuDNN failed to initialize. Usually this is due to mismatched versions of the CUDA toolkit and cuDNN, or to too little free GPU memory.

Now, let’s summarize the common causes and solutions in a table:

| Error | Cause | Solution |
| --- | --- | --- |
| CuDNN error: CUDNN_STATUS_NOT_INITIALIZED | Incompatible CUDA Toolkit and CuDNN versions | Check and ensure compatibility between the NVIDIA driver, CUDA Toolkit, and CuDNN. Update or install them if necessary. |
| CuDNN error: CUDNN_STATUS_NOT_INITIALIZED | GPU memory exhaustion | Reduce the model size or batch size, or use lighter models, to lower GPU memory usage. Use tools such as nvidia-smi to monitor GPU utilization. |

This table shows two typical reasons for encountering “CuDNN error: CUDNN_STATUS_NOT_INITIALIZED” when using PyTorch. The leading cause is usually a version incompatibility between the installed NVIDIA driver, the CUDA Toolkit, and CuDNN. Making sure these components are compatible with each other rules out this cause.

Furthermore, another potential trigger for this bug is overutilization of GPU memory. Granted, deep learning models are heavily demanding on computational power and memory capacity. Thus, a large model or a high batch size might deplete available GPU memory, subsequently causing the initialization failure of CuDNN. Accordingly, you can tackle this issue by optimizing model size, adjusting the batch size, using less memory-consuming models, or simply switching to more powerful hardware if possible. Monitoring utilities such as `nvidia-smi` will aid greatly in determining GPU usage and managing resource allocation effectively.
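The batch-size adjustment described above can be automated. The following is a minimal sketch, not a PyTorch API: `find_workable_batch_size`, `is_gpu_memory_error`, and `fake_step` are hypothetical names, and treating a cuDNN initialization failure as a memory symptom is an assumption that only holds when the installation itself is sound.

```python
from typing import Callable


def is_gpu_memory_error(exc: RuntimeError) -> bool:
    """Heuristic (assumption): these messages may indicate too little GPU memory."""
    message = str(exc)
    return ("out of memory" in message
            or "CUDNN_STATUS_NOT_INITIALIZED" in message)


def find_workable_batch_size(run_step: Callable[[int], None],
                             start: int, minimum: int = 1) -> int:
    """Halve the batch size until run_step(batch_size) stops raising."""
    batch_size = start
    while True:
        try:
            run_step(batch_size)
            return batch_size
        except RuntimeError as exc:
            # Re-raise unrelated errors, or give up once the minimum has failed.
            if not is_gpu_memory_error(exc) or batch_size <= minimum:
                raise
            batch_size = max(minimum, batch_size // 2)


# Hypothetical stand-in for a real training step:
# it pretends that the GPU can only fit 16 samples at a time.
def fake_step(batch_size: int) -> None:
    if batch_size > 16:
        raise RuntimeError("cuDNN error: CUDNN_STATUS_NOT_INITIALIZED")


print(find_workable_batch_size(fake_step, start=128))
```

In a real training script, `run_step` would build one batch of the given size and run a forward and backward pass. It is usually worth calling `torch.cuda.empty_cache()` between attempts.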

Finally, software bugs can also be the culprit, so keeping PyTorch and its associated libraries up to date is a prudent way to avoid this and similar errors.

The `RuntimeError: CuDNN error: CUDNN_STATUS_NOT_INITIALIZED` typically pops up when the CUDA Deep Neural Network library (abbreviated to CuDNN) isn’t properly initialized in PyTorch. This runtime error is specific to NVIDIA graphics processing units (GPUs) and signals an underlying problem with the GPU, the CuDNN library, or the communication between PyTorch and these components.

Cause of the Error

A number of reasons can be responsible for this error:

- Incompatibility Issues: Sometimes, the CUDA toolkit version might not be compatible with the installed CuDNN library or your PyTorch version. These compatibility issues often lead to initialization errors.

- Improper Installation: The error can result from an improper or incomplete installation of the CUDA toolkit or CuDNN library.

- Excessive GPU Memory Usage: If your model or data size exceeds the available GPU memory, you may encounter the cuDNN initialization error.

Ways to Fix the Error

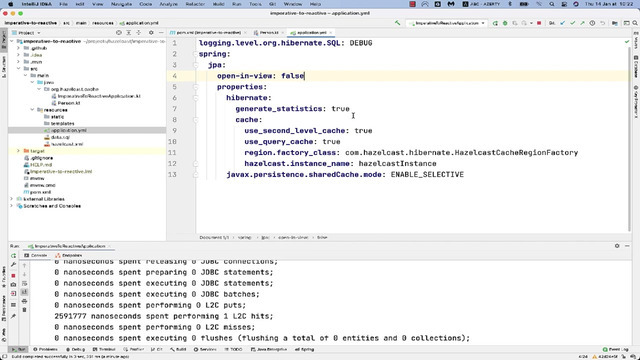

To fix this error, first verify that your CuDNN version is compatible with your CUDA toolkit and PyTorch versions. Here’s a table showing the compatibility of different CUDA toolkit versions with various releases of the cuDNN library.

| CUDA Toolkit Version | Compatible cuDNN Versions |
| --- | --- |
| 9.0 | 7.x |
| 10.0 | 7.x |
| 10.1 – 10.2 | 7.x, 8.x |
| 11.0+ | 8.x |
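For scripted checks, the table can be encoded as a small lookup. This sketch only mirrors the rows above; the function name `compatible_cudnn_majors` is hypothetical, and NVIDIA's own support matrix remains the authoritative source, especially for releases newer than the ones listed here.

```python
from typing import List


def compatible_cudnn_majors(cuda_version: str) -> List[int]:
    """Return the cuDNN major versions listed for a CUDA toolkit version."""
    parts = cuda_version.split(".")
    major = int(parts[0])
    minor = int(parts[1]) if len(parts) > 1 else 0
    version = (major, minor)

    if version in ((9, 0), (10, 0)):
        return [7]
    if version in ((10, 1), (10, 2)):
        return [7, 8]
    if version >= (11, 0):
        return [8]
    # Versions that the table does not cover
    return []


for cuda in ("9.0", "10.2", "11.8"):
    print(cuda, "->", compatible_cudnn_majors(cuda))
```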

You can check your current versions using the Python code below:

```python
import torch

print("PyTorch Version:", torch.__version__)
print("CUDA Version:", torch.version.cuda)
print("CuDNN Version:", torch.backends.cudnn.version())
```

If any discrepancies are found, rectify by installing compatible versions.
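When asking for help, it is convenient to gather the same information in one place. The sketch below is one way to do that; `collect_versions` is a hypothetical helper, and it degrades gracefully when PyTorch or a GPU is missing so that it can run on any machine.

```python
import platform


def collect_versions() -> dict:
    """Gather the versions that matter for CUDA and cuDNN compatibility."""
    report = {"python": platform.python_version(), "torch_installed": False}
    try:
        import torch
    except ImportError:
        return report

    report.update({
        "torch_installed": True,
        "torch": torch.__version__,
        "cuda_build": torch.version.cuda,          # None for CPU-only builds
        "cuda_available": torch.cuda.is_available(),
        "cudnn_available": torch.backends.cudnn.is_available(),
    })
    try:
        report["cudnn_version"] = torch.backends.cudnn.version()
    except RuntimeError as exc:
        # PyTorch raises here if the loaded cuDNN does not match its build.
        report["cudnn_version"] = f"error: {exc}"
    if report["cuda_available"]:
        report["gpu_name"] = torch.cuda.get_device_name(0)
    return report


for key, value in collect_versions().items():
    print(f"{key}: {value}")
```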

Secondly, verify that both the CUDA toolkit and the CuDNN library are installed completely and correctly. Following the official NVIDIA installation documentation will ensure a correct installation.

Lastly, monitor GPU usage to ensure your model and dataset do not exceed available memory. You can use the nvidia-smi command in the terminal to check real-time GPU usage. Adjusting your batch size or even reducing your model complexity could be potential solutions for memory overflow.
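If you prefer to check memory from a script, nvidia-smi can emit machine-readable output through its `--query-gpu` and `--format` options. The sketch below parses that output. The helper names and the sample text are made up for illustration, and the parser assumes that every field is a plain number.

```python
import shutil
import subprocess
from typing import List, Tuple

QUERY = ["nvidia-smi", "--query-gpu=memory.used,memory.total",
         "--format=csv,noheader,nounits"]


def parse_memory_csv(text: str) -> List[Tuple[int, int]]:
    """Parse 'used, total' lines (in MiB) into a list of integer pairs."""
    rows = []
    for line in text.strip().splitlines():
        used, total = (int(field.strip()) for field in line.split(","))
        rows.append((used, total))
    return rows


def gpu_memory_usage() -> List[Tuple[int, int]]:
    """Query nvidia-smi if it is installed; return an empty list otherwise."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_memory_csv(result.stdout)


# Hypothetical sample output for a machine with two GPUs
sample = "10240, 11264\n512, 11264\n"
for index, (used, total) in enumerate(parse_memory_csv(sample)):
    print(f"GPU {index}: {used}/{total} MiB ({100 * used / total:.0f}% used)")
```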

If this error persists despite taking the aforementioned steps, consider seeking help in PyTorch forums. They contain a wealth of knowledge contributed by the community. This includes many threads discussing similar errors, potentially offering more tailored solutions based on individual project details.


Let’s delve into the heart of PyTorch, a popular deep learning open-source library developed by Facebook’s artificial-intelligence research group. We’ll look at its functionality, especially around convolutional networks, and tackle an error that frequently plagues users: the RuntimeError: CuDNN error: CUDNN_STATUS_NOT_INITIALIZED.

Like a well-oiled machine, PyTorch offers tools for everything from manipulating tensors to building deep learning models with automatic differentiation using CUDA. But the ride isn’t always smooth—sometimes errors rear their heads. The CUDNN error specifically can seem daunting but is often straightforward to handle once you understand why it occurs.

Let’s set our scenario by addressing a common function in PyTorch: training a model on GPU.

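```python
import torch

# MyModel, dataloader and criterion are assumed to be defined elsewhere.
device = torch.device("cuda")

# Training the model
model = MyModel()
model.to(device)
# Example optimizer; it must be created from the model's parameters
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

epochs = 5
for epoch in range(epochs):
    for images, labels in dataloader:
        # Move each batch to the same device as the model
        images = images.to(device)
        labels = labels.to(device)

        optimizer.zero_grad()
        outputs = model(images)  # convolutional models first call cuDNN here
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()
```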

Here we are just moving our input data and model to the CUDA device before starting the train loop.

That’s where the fun begins, and also where we might hit a roadblock in the form of the RuntimeError: cuDNN error: CUDNN_STATUS_NOT_INITIALIZED.

This error primarily occurs when the CUDA, PyTorch, and cuDNN versions do not match, or when these components are not properly installed. cuDNN is a GPU-accelerated library for deep neural networks. It provides highly optimized routines for the primitives that are fundamental to neural networks, such as convolution, pooling, normalization, and activation functions.

Firstly, to resolve this issue, make sure your system’s CUDA version is compatible with your PyTorch version. Each PyTorch release supports specific CUDA versions, which are listed in the installation instructions on the official PyTorch website.

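You also need to verify that cuDNN is installed and recognized correctly. On Linux, you can use the following commands:

```bash
nvcc --version
cat /usr/include/cudnn.h | grep CUDNN_MAJOR -A 2
```

The nvcc --version command reports the version of the CUDA compiler, while the second command prints the cuDNN version macros. From cuDNN 8 onward these macros live in cudnn_version.h in the same directory, so check that file if cudnn.h returns nothing. Note that PyTorch binaries installed with pip or conda ship their own CUDA runtime and cuDNN, so the system-wide versions shown here may differ from the ones PyTorch actually loads.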
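The header check can also be done from Python, which avoids guessing which file holds the macros. This is a sketch under assumptions: the candidate paths are common install locations rather than an exhaustive list, and `parse_cudnn_version` and `find_installed_cudnn` are hypothetical helper names.

```python
import re
from pathlib import Path
from typing import Optional, Tuple

# Assumed common locations; adjust them for your installation.
CANDIDATE_HEADERS = [
    "/usr/include/cudnn_version.h",
    "/usr/local/cuda/include/cudnn_version.h",
    "/usr/include/cudnn.h",
    "/usr/local/cuda/include/cudnn.h",
]


def parse_cudnn_version(header_text: str) -> Optional[Tuple[int, ...]]:
    """Extract (major, minor, patch) from the text of a cuDNN header."""
    values = []
    for name in ("CUDNN_MAJOR", "CUDNN_MINOR", "CUDNN_PATCHLEVEL"):
        match = re.search(rf"^\s*#define\s+{name}\s+(\d+)",
                          header_text, re.MULTILINE)
        if match is None:
            return None
        values.append(int(match.group(1)))
    return tuple(values)


def find_installed_cudnn() -> Optional[Tuple[int, ...]]:
    """Return the first version found in the candidate headers, if any."""
    for candidate in CANDIDATE_HEADERS:
        path = Path(candidate)
        if path.is_file():
            version = parse_cudnn_version(path.read_text(errors="ignore"))
            if version is not None:
                return version
    return None


print("System cuDNN:", find_installed_cudnn())
```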

Next, you should ensure that both your graphics drivers and the CUDA toolkit are up-to-date. Updating them usually goes a long way in fixing the problem.

Another possible reason is that PyTorch is attempting to use more memory than is available. In PyTorch, we can inspect how much CUDA memory is currently allocated and cached:

```python
import torch

print(torch.cuda.memory_summary(device=None, abbreviated=False))
```

If tensors are still held in memory, free them before restarting your training process: delete the Python references to them and then call torch.cuda.empty_cache().
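A minimal sketch of that cleanup step follows. `release_cached_gpu_memory` is a hypothetical helper name. Note that `torch.cuda.empty_cache()` only returns cached blocks that no live tensor still uses, so references to large tensors and models have to be dropped first.

```python
import gc


def release_cached_gpu_memory() -> bool:
    """Collect garbage, then ask PyTorch to release its unused cached GPU memory.

    Returns True if the CUDA cache was cleared, False if PyTorch or CUDA
    is not available on this machine.
    """
    gc.collect()  # drop unreachable Python objects that still hold tensors
    try:
        import torch
    except ImportError:
        return False
    if not torch.cuda.is_available():
        return False
    torch.cuda.empty_cache()
    return True


# Typical use after a failed run (model and optimizer are your own objects):
#   del model, optimizer
#   release_cached_gpu_memory()
print("Cache cleared:", release_cached_gpu_memory())
```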

Ultimately, by taking the steps outlined above, we can address and rectify the CuDNN error: CUDNN_STATUS_NOT_INITIALIZED in PyTorch. Although PyTorch provides us with a comprehensive tool for working extensively with Deep Learning algorithms, like any technology, it’s not without its quirks. Thankfully, faults like these often boil down to compatibility issues, causing minimal disruption once addressed.

As technology keeps evolving, staying up to date proves key in the coding world. It keeps operations free from hiccups like initialization errors, and keeping your software versions in line gives you a smoother coding experience with everything PyTorch has to offer.

cuDNN (the CUDA Deep Neural Network library) is a GPU-accelerated library for deep neural networks. In PyTorch, you might encounter the error message `RuntimeError: cuDNN error: CUDNN_STATUS_NOT_INITIALIZED`. This error typically happens when your code tries to use cuDNN functionality before the library has been correctly initialized.

To start with, here is a quick introduction to CuDNN. CUDA itself is a parallel computing platform developed by NVIDIA that allows developers to use NVIDIA GPUs for general purpose processing (GPGPU). On top of CUDA, NVIDIA built CuDNN, a GPU-accelerated library specifically designed for deep neural networks. CuDNN provides highly optimized implementations of primitive functions, such as convolution and normalization, that are frequently used in deep learning.

Roughly speaking, whenever you run a cuDNN-backed operation, such as a convolution or a recurrent layer, on a tensor that lives on an NVIDIA GPU, PyTorch calls CuDNN behind the scenes. If CuDNN has not been properly initialized, PyTorch throws this exception when it asks CuDNN to perform the computation.

There are several possible reasons why this `CUDNN_STATUS_NOT_INITIALIZED` error can occur:

– Out-of-date or mismatched versions of CUDA and CuDNN: Ensure that the installed versions of CUDA and CuDNN are compatible with each other and with your version of PyTorch. Check your CUDA version with `nvcc --version`, and check your CuDNN version by looking at the version macros in the header file in your CuDNN path, typically `/usr/local/cuda/include/cudnn.h`.

– GPU memory issues: The problem could be that your GPU does not have enough memory available. This can happen if there are other processes running on the same GPU that are using up GPU memory.

– Incorrect environment variables: The CUDA toolkit expects certain environment variables to be set, such as `CUDA_HOME`. If these are not correctly set, it can cause initialisation problems.

You can solve these issues by taking the following steps:

– Verify your CUDA and CuDNN installations: You should reinstall or update CUDA and/or CuDNN if their versions are not compatible with your PyTorch version.

– Free up GPU memory: Stop other running processes that are consuming GPU memory or switch to a GPU with more memory.

– Set the correct environment variables: For example, you can add `export CUDA_HOME=/usr/local/cuda` to your shell startup file on Linux, or `export CUDA_HOME=/usr/local/Cellar/cuda` on macOS (assuming typical installation paths).

Open a new shell after changing environment variables, and reboot your machine after reinstalling drivers, so that the changes take effect. Once the underlying issues are resolved, that nefarious `CUDNN_STATUS_NOT_INITIALIZED` error should be banished from your PyTorch runtime.
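To confirm that the variables are actually visible to the Python process, a small check such as the one below can help. It is a sketch under assumptions: `check_cuda_environment` is a hypothetical helper, and inspecting `LD_LIBRARY_PATH` alongside `CUDA_HOME` is a choice made here, relevant on Linux only.

```python
import os
from typing import List, Mapping


def check_cuda_environment(env: Mapping[str, str] = os.environ) -> List[str]:
    """Return a list of human-readable problems found in the environment."""
    problems = []

    cuda_home = env.get("CUDA_HOME")
    if not cuda_home:
        problems.append("CUDA_HOME is not set")
    elif not os.path.isdir(cuda_home):
        problems.append(f"CUDA_HOME points to a missing directory: {cuda_home}")

    # Every entry on the library search path should exist on disk.
    for directory in env.get("LD_LIBRARY_PATH", "").split(os.pathsep):
        if directory and not os.path.isdir(directory):
            problems.append(f"LD_LIBRARY_PATH entry does not exist: {directory}")

    return problems


for problem in check_cuda_environment():
    print("warning:", problem)
```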

Please refer to the official CUDA Toolkit documentation, in particular the Getting Started Guides for Linux, Windows, and Mac, for more detailed instructions on installation, configuration, and verification.

This RuntimeError occurs in PyTorch when the cuDNN library can’t be initialized properly. The status CUDNN_STATUS_NOT_INITIALIZED means that something went wrong while initializing the CUDA Deep Neural Network (cuDNN) library, which PyTorch uses for acceleration on NVIDIA GPUs.

Errors like these are common, especially when you’re dealing with hardware-specific software like cuDNN. These problems sometimes occur due to incorrect setup or hardware resource related issues. Here are a few insights and troubleshooting steps that might help:

– Check the Compatibility

Make sure your system has compatible versions of CUDA, cuDNN, and PyTorch as incompatible versions often cause such problems. Each version of PyTorch corresponds with a particular CUDA and cuDNN version. Cross verifying and ensuring compatibility can resolve this issue on many occasions.

– Installation Issues

There might be installation errors. Be certain that the cuDNN library is installed properly and that the dynamic loader and the compiler can find it:

```bash
export LD_LIBRARY_PATH=/path/to/cudnn/lib:$LD_LIBRARY_PATH
export CPATH=/path/to/cudnn/include:$CPATH
export LIBRARY_PATH=/path/to/cudnn/lib:$LIBRARY_PATH
```

Replace “/path/to/cudnn” with the actual path to your cuDNN libraries.

– GPU Compatibility Issues

Ensure that not only are all components compatible with each other, but also with your GPU. Sometimes using an old GPU might limit you from using later versions of the CUDA toolkit.

– Multiple GPUs Issue

If you’re using multiple GPUs, try using just one. Using multiple GPUs and running out of memory could cause initialization errors with cuDNN.

– Synchronization issue with multithreading

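PyTorch uses multiple threads, and initializing the GPU from several threads or worker processes at once may occasionally prevent cuDNN from initializing properly. This is reportedly seen mostly in Jupyter notebooks, where cells may execute in a non-linear order. Restarting the kernel and running the cells in order is a quick way to rule this out.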
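For the multi-GPU case in the list above, one low-risk experiment is to expose a single GPU to the process through the `CUDA_VISIBLE_DEVICES` environment variable. The sketch below assumes that the variable is set before CUDA is initialized, which is simplest to guarantee by setting it before importing torch. `pin_to_single_gpu` is a hypothetical helper name.

```python
import os
import sys
from typing import MutableMapping


def pin_to_single_gpu(index: int = 0,
                      env: MutableMapping[str, str] = os.environ) -> bool:
    """Expose only one GPU to CUDA.

    Returns False if torch has already been imported, in which case the
    setting may come too late to take effect in this process.
    """
    env["CUDA_VISIBLE_DEVICES"] = str(index)
    return "torch" not in sys.modules


# Demonstrated on a plain dict so that the real environment stays untouched.
demo_env = {}
pin_to_single_gpu(0, demo_env)
print(demo_env)
```

In a training script, call `pin_to_single_gpu(0)` at the very top, before `import torch`.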

The key to solving initialization issues lies in careful examination. For a Python programmer working with PyTorch, understanding how cuDNN works is an advantage. PyTorch creates its cuDNN handle lazily, the first time an operation needs it, and the handle allocates GPU resources of its own. That is why the error usually surfaces at the first convolution or recurrent layer call rather than at import time, and why too little free GPU memory at that moment can trigger it.

For a deeper look at what cuDNN is and how to initialize it properly, refer to NVIDIA’s official cuDNN user guide.

For future reference, keep handy the Python Like You Mean It guide on PyTorch usage, which provides practical detail about handling PyTorch functionalities. It’s extremely beneficial not just for solving initialization issues but also for other PyTorch-related queries.

Also, don’t forget the cuDNN boards on the official NVIDIA Developer Forum. They are full of smart people who have probably encountered your problem before.

One common error encountered while using PyTorch, especially with GPU acceleration, is `RuntimeError: cuDNN error: CUDNN_STATUS_NOT_INITIALIZED`. This error generally indicates an initialization issue with NVIDIA’s cuDNN library, which is used for high-performance GPU-accelerated execution of neural networks.

To tackle this, let’s dive into some potential causes and their solutions:

Solution 1: Check your CUDA and cuDNN versions

In many cases, an incompatible or outdated version of CUDA or cuDNN might result in this kind of error. It’s always recommended to cross-verify your CUDA and cuDNN versions compatibility with your PyTorch version. The official PyTorch website provides all these specific details. Here’s a way you can check the version on Linux:

```bash
nvcc --version
cat /usr/local/cuda/include/cudnn.h | grep CUDNN_MAJOR -A 2
```

Solution 2: Reinstall CUDA and cuDNN

If the versions are confirmed to be compatible yet the error persists, a reinstallation of both may be required. There might have been a problematic installation previously that’s causing this persisting problem. Before reinstalling make sure to completely uninstall the current installations first.

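Solution 3: Sync device runtime

CUDA operations run asynchronously, so an error reported at cuDNN initialization may actually stem from earlier GPU work that failed on the device. Calling ‘torch.cuda.synchronize()’ before initializing your model waits for all pending GPU work and makes such an earlier failure surface where it occurred. It will not repair a broken installation, but it helps locate the real cause.

```python
import torch

torch.cuda.synchronize()  # wait for pending GPU work; earlier errors surface here
model = Model().cuda()    # Model is assumed to be your own nn.Module subclass
```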

Solution 4: Environment variables

A final troubleshooting step is to check the environment variables that influence GPU initialization. CUDA_VISIBLE_DEVICES controls which GPUs a process can see, and CUDA_LAUNCH_BLOCKING makes kernel launches synchronous so that errors are reported at the call that caused them. Set them before CUDA is initialized, ideally before importing torch:

```python
import os

os.environ['CUDA_LAUNCH_BLOCKING'] = '1'
```

By employing these methods, you’ll have taken proactive steps towards resolving the common cudnn error of “cudnn_status_not_initialized” when using PyTorch. However, it is always recommended to consult the latest PyTorch documentation or community forums for new updates involving these types of issues.

PyTorch interacts with CUDA and cuDNN to enable training predictive models on GPUs, which speeds up computation significantly compared to CPU-based training. However, one common problem encountered while using PyTorch with CUDA is RuntimeError: cuDNN error: CUDNN_STATUS_NOT_INITIALIZED. Let’s take a deep dive into what goes on behind the scenes in such scenarios.

CUDA Interaction with PyTorch

CUDA is a parallel computing platform and API model that allows software developers to use Nvidia hardware for general purpose computing. PyTorch utilizes this feature by providing the torch.cuda API. This API consists of several important components:

- torch.cuda.device: Context manager that changes the selected device.

- torch.cuda.memory_allocated: Returns the current GPU memory usage by tensors in bytes.

- torch.cuda.max_memory_allocated: Returns the maximum GPU memory usage by tensors in bytes.

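You can create a tensor on the GPU instead of the CPU by passing the `device='cuda'` argument, or by calling `.cuda()` after creation.

```python
import torch

# Create the tensor directly on the GPU
tensor_gpu = torch.tensor([1.0, 2.0], device='cuda')

# Or create it on the CPU and copy it to the GPU afterwards
tensor_cpu = torch.tensor([1.0, 2.0])
tensor_gpu = tensor_cpu.cuda()
```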

PyTorch’s interaction with CuDNN

cuDNN (the CUDA Deep Neural Network library), provided by NVIDIA, is a GPU-accelerated library for deep neural networks. It speeds up the computations of neural network layers such as convolutions and pooling, and PyTorch uses it for efficient performance.

RuntimeError: cuDNN error: CUDNN_STATUS_NOT_INITIALIZED

This error occurs when cuDNN cannot be initialized on the GPU. Before PyTorch runs its first cuDNN-backed operation, it creates a cuDNN handle. According to NVIDIA’s documentation, CUDNN_STATUS_NOT_INITIALIZED is usually returned when that handle creation (cudnnCreate()) fails, typically because of an error in the underlying CUDA runtime or in the hardware setup. PyTorch then reports the status as a RuntimeError.
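A quick way to tell whether cuDNN itself is the failing component is to run one tiny convolution with cuDNN enabled and, if that fails, once more with cuDNN disabled through the `torch.backends.cudnn.flags` context manager. The sketch below does this. `cudnn_smoke_test` and its return labels are hypothetical, and the test only covers the convolution path.

```python
def cudnn_smoke_test() -> str:
    """Run a tiny convolution on the GPU, with and without cuDNN."""
    try:
        import torch
    except ImportError:
        return "torch-not-installed"
    if not torch.cuda.is_available():
        return "cuda-unavailable"

    def run_tiny_conv() -> None:
        conv = torch.nn.Conv2d(3, 8, kernel_size=3).cuda()
        conv(torch.randn(1, 3, 16, 16, device="cuda"))
        torch.cuda.synchronize()  # make asynchronous errors surface here

    try:
        run_tiny_conv()
        return "cudnn-ok"
    except RuntimeError as exc:
        print(f"Convolution with cuDNN failed: {exc}")

    try:
        # Fall back to PyTorch's native CUDA kernels for this block only.
        with torch.backends.cudnn.flags(enabled=False):
            run_tiny_conv()
        return "works-without-cudnn"
    except RuntimeError as exc:
        print(f"Convolution without cuDNN failed too: {exc}")
        return "cuda-broken"


print(cudnn_smoke_test())
```

A result of `works-without-cudnn` points at the cuDNN installation or at memory pressure during handle creation. A result of `cuda-broken` suggests a driver or CUDA problem instead.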

This error might be related to:

- Interference from other GPU processes

- The GPU has run out of memory

- Presence of old or incompatible CUDA versions

Note: Always ensure that the version of CUDA toolkit and cuDNN installed match the version PyTorch was compiled with.

Solving the CUDNN_STATUS_NOT_INITIALIZED issue usually involves a few steps:

- Restarting the Machine: This kills all running processes, including stale ones that still hold GPU memory, and resets the GPU state.

- Upgrading the Software: Make sure you have mutually compatible, up-to-date versions of CUDA, cuDNN, and PyTorch. NVIDIA explains how to upgrade your CUDA toolkit properly in the NVIDIA CUDA Installation Guide.

- Checking Memory Usage: Unwanted processes could be eating up GPU memory. Check GPU memory usage regularly and kill unnecessary processes.
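To see which processes are holding GPU memory, nvidia-smi offers the `--query-compute-apps` option. The sketch below parses its CSV output. The helper names and the sample text are illustrative assumptions, and memory values that nvidia-smi cannot report are stored as None.

```python
import shutil
import subprocess
from typing import List, NamedTuple, Optional

QUERY = ["nvidia-smi", "--query-compute-apps=pid,used_memory,process_name",
         "--format=csv,noheader,nounits"]


class GpuProcess(NamedTuple):
    pid: int
    used_mib: Optional[int]
    name: str


def parse_compute_apps(text: str) -> List[GpuProcess]:
    """Parse 'pid, used_memory, process_name' lines, largest consumer first."""
    processes = []
    for line in text.strip().splitlines():
        pid, used, name = (field.strip() for field in line.split(",", 2))
        used_mib = int(used) if used.isdigit() else None
        processes.append(GpuProcess(int(pid), used_mib, name))
    return sorted(processes, key=lambda p: p.used_mib or 0, reverse=True)


def gpu_processes() -> List[GpuProcess]:
    """Query nvidia-smi if it is installed; return an empty list otherwise."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_compute_apps(result.stdout)


# Hypothetical sample output
sample = "1234, 2048, python\n5678, 9000, /usr/bin/python3\n"
for process in parse_compute_apps(sample):
    print(process)
```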

If the errors persist after these steps, they may be due to problems with GPU drivers, the CUDA installation, or hardware issues. You should reach out to your hardware manufacturer or seek help at the official PyTorch forums in such cases.

Let’s dig deeper into this topic and look at the connection between PyTorch, GPUs, CUDA, and cuDNN, and at how it can lead to the RuntimeError: cuDNN error: CUDNN_STATUS_NOT_INITIALIZED when using PyTorch.

PyTorch is a Python-based library that provides two high-level features:

- Tensor computing (like NumPy) with strong GPU acceleration

- Deep neural networks built on a tape-based autograd system

PyTorch is closely linked to the Graphics Processing Unit (GPU), which accelerates computation. If you want PyTorch to tap into the full computational power of your NVIDIA GPU, you need CUDA.

CUDA, an acronym for Compute Unified Device Architecture, is NVIDIA’s parallel computing architecture for its GPUs. Any library built on top of CUDA can leverage this powerful technology. However, trouble arises when PyTorch hits a runtime error in this GPU stack, such as:

RuntimeError: CUDNN error: CUDNN_STATUS_NOT_INITIALIZED

Now you may wonder, why is there such a high dependence on CUDA? The reason is simple – running machine learning models on a CPU is sluggish compared to a GPU. CUDA helps PyTorch by streamlining ML computations on the GPU smoothly and swiftly. But CUDA isn’t the only one contributing to this; we have another player in this ecosystem, cuDNN.

cuDNN stands for CUDA Deep Neural Network library. It performs primitive functions to train deep neural nets. PyTorch depends heavily on many CUDA libraries, especially cuDNN, an optimized library to perform GPU accelerated tasks. In short, cuDNN fast-tracks training DNNs on GPU by taking advantage of CUDA’s capabilities.

However, if there’s a version mismatch between CUDA, cuDNN, and PyTorch, or if these aren’t installed correctly, errors such as `RuntimeError: CUDNN error: CUDNN_STATUS_NOT_INITIALIZED` follow. This error often emerges when cuDNN cannot be initialized on the GPU, perhaps due to an incorrect installation or an unsuccessful build.

To solve this issue, ensure that the CUDA, cuDNN, and PyTorch versions match and that each component is installed correctly.

Additionally, consider setting

```python
torch.backends.cudnn.benchmark = True
```

before training your model. This lets cuDNN benchmark the available algorithms and pick the fastest one for your hardware and input sizes. It is a performance setting and does not by itself fix an initialization failure.

Remember, ML frameworks like PyTorch rely on CUDA and cuDNN (libraries by NVIDIA) to optimize performance and to make sure that computation-intensive jobs are offloaded to the GPU.

For detailed instructions on this process, refer to the official PyTorch and NVIDIA cuDNN documentation.

When dealing with the RuntimeError: cuDNN error: CUDNN_STATUS_NOT_INITIALIZED while using PyTorch, it’s crucial to understand the underlying causes.

The source of this error can be incompatible GPU drivers and CUDA libraries on a system with NVIDIA hardware. Essentially, any discrepancy between what your GPU and driver support and the versions of CUDA and cuDNN you have installed can cause this error.

Primarily, let’s examine potential solutions:

– Upgrade or downgrade your GPU driver version. For example, if your NVIDIA graphics driver isn’t compatible with the currently installed version of the CUDA toolkit, cuDNN library, or PyTorch framework, updating or rolling back the driver could resolve the issue.

– If the software discrepancies remain unresolved, consider changing your PyTorch installation. You may want to use a version of PyTorch that comes pre-packaged with matching versions of CUDA and cuDNN for your specific hardware. Try running

```bash
conda install pytorch torchvision torchaudio cudatoolkit=10.2 -c pytorch
```

with a suitable version in place of 10.2 to ensure compatibility.

– A faulty GPU could also trigger this error. Check your GPU’s health by monitoring system-level metrics with tools such as nvidia-smi, and replace the card if necessary.
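When comparing installations, it can help to know which CUDA version a given PyTorch wheel was built for without importing it. Wheels installed with pip often carry a local version tag such as `+cu118`. The sketch below decodes that tag. `cuda_from_torch_version` is a hypothetical helper, the digit-splitting rule is an assumption about the naming scheme, and builds without such a tag (for example conda builds) return None, in which case `torch.version.cuda` is the value to trust.

```python
import re
from typing import Optional


def cuda_from_torch_version(version: str) -> Optional[str]:
    """Decode a '+cuXYZ' suffix into a CUDA version string.

    Assumes that the last digit is the minor version and the rest is the
    major version, e.g. 'cu118' means CUDA 11.8.
    """
    match = re.search(r"\+cu(\d{2,3})$", version)
    if match is None:
        return None
    digits = match.group(1)
    return f"{digits[:-1]}.{digits[-1]}"


for tag in ("2.1.0+cu118", "1.12.1+cu102", "2.1.0+cpu", "2.1.0"):
    print(tag, "->", cuda_from_torch_version(tag))
```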

Furthermore, a well-configured runtime environment plays a significant role in efficient machine learning work, especially with libraries like PyTorch. To prevent this RuntimeError, always ensure that your hardware and software versions are compatible.

Moreover, to avoid such initialization errors in PyTorch, a regular system audit should become habitual—keep your system updated and regularly check forums like Pytorch Discussion for compatibility issues and updates.

The sections above highlight the intricate relationship between hardware and software versions that smooth ML work depends on, particularly for GPU operations. Understanding the source of errors such as RuntimeError: cuDNN error: CUDNN_STATUS_NOT_INITIALIZED helps you streamline your runtime environment, which means smoother processes and less downtime for time-sensitive AI projects.

Always refer to detailed guides, articles, and community discussions when navigating unknowns or troubleshooting errors. This article on the RuntimeError: cuDNN error can serve as a reference point for fellow coders who run into similar pitfalls.