Actually, I should clarify – I spent last Tuesday night staring at a terminal window, waiting for a traceback that never came. My AI agent—a supposedly “smart” customer support bot running on GPT-4o—had just hallucinated a refund policy that doesn’t exist, promised a customer $500 in credit, and then politely closed the ticket.

The Python logs? Clean.

The HTTP status codes? 200 OK across the board.

The standard observability tools? Useless.

But when an LLM fails, it doesn’t crash. It drifts. It confidently walks off a cliff while smiling.

After that $500 incident (which I caught before the API call actually fired, thank god), I stopped trying to debug agents like they were standard Python scripts. I realized I needed a “Flight Recorder.” A way to reconstruct the cognitive stack trace of the model, not just the execution stack of the runtime.

The “Why” is Missing

Standard logging tells you what happened. “Function call_refund_tool executed.” Great. But why did the agent think that was the right move?

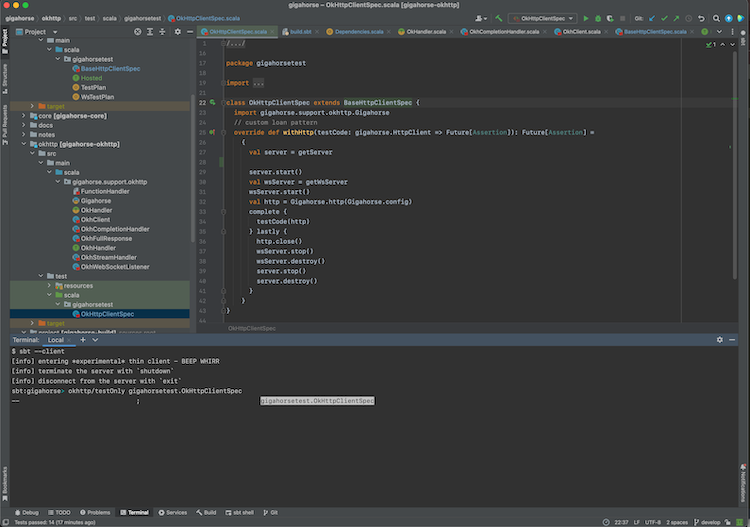

I built a lightweight system to capture this. No heavy SaaS integrations, just a local SQLite file. Why SQLite? Because I can query it with SQL, it’s fast, and I don’t need an internet connection to debug my local dev environment.

Building the Flight Recorder

The goal is to log the “Cognitive Frame” of every step. A standard stack trace is a snapshot of memory. A cognitive stack trace is a snapshot of context.

import sqlite3

import json

from datetime import datetime

from typing import Any, Dict, Optional

class FlightRecorder:

def __init__(self, db_path: str = "agent_trace.db"):

self.conn = sqlite3.connect(db_path)

self.cursor = self.conn.cursor()

self._init_db()

def _init_db(self):

# We're logging the 'thought process', not just the error

self.cursor.execute("""

CREATE TABLE IF NOT EXISTS trace_log (

id INTEGER PRIMARY KEY AUTOINCREMENT,

run_id TEXT,

step_number INTEGER,

timestamp DATETIME,

intent TEXT, -- What the agent WANTED to do

tool_call TEXT, -- The actual function called

tool_output TEXT, -- What the world sent back

policy_verdict TEXT, -- Did it pass guardrails?

violation_reason TEXT, -- If it failed, why?

full_context JSON -- The expensive part: full prompt state

)

""")

self.conn.commit()

def log_step(self,

run_id: str,

step: int,

intent: str,

tool: str,

output: Any,

verdict: str = "PASS",

violation: Optional[str] = None):

self.cursor.execute("""

INSERT INTO trace_log

(run_id, step_number, timestamp, intent, tool_call, tool_output, policy_verdict, violation_reason)

VALUES (?, ?, ?, ?, ?, ?, ?, ?)

""", (

run_id,

step,

datetime.now(),

intent,

tool,

json.dumps(output),

verdict,

violation

))

self.conn.commit()

def query_crash(self, run_id: str):

# This is the "Stack Trace" view

print(f"--- INVESTIGATION FOR RUN {run_id} ---")

rows = self.cursor.execute(

"SELECT step_number, intent, policy_verdict, violation_reason FROM trace_log WHERE run_id = ? ORDER BY step_number",

(run_id,)

).fetchall()

for row in rows:

step, intent, verdict, reason = row

status = "🔴" if verdict == "FAIL" else "🟢"

print(f"{step}. {status} [{verdict}] Intent: {intent}")

if reason:

print(f" ERROR: {reason}")The “Aha” Moment

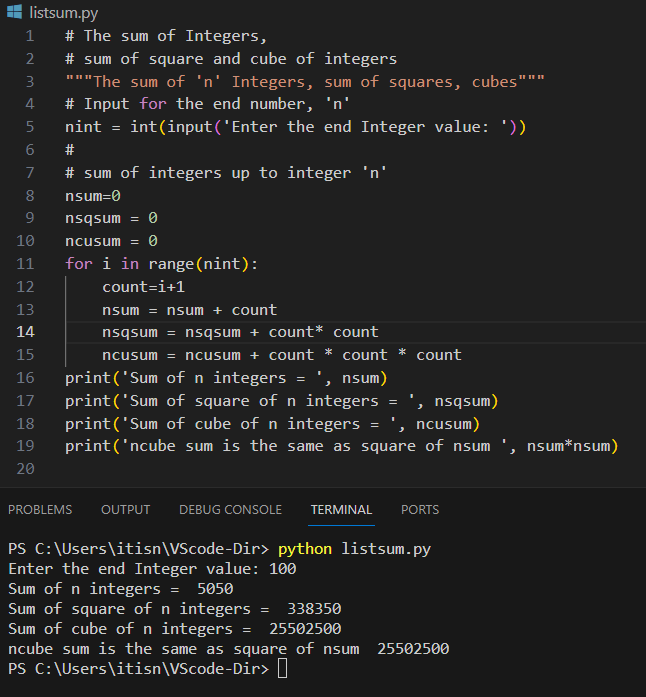

The very next day, I had a regression. The agent started failing to look up order history. Instead, I just ran a SQL query:

SELECT intent, tool_call, tool_output

FROM trace_log

WHERE policy_verdict = 'FAIL'

ORDER BY timestamp DESC LIMIT 5;The result was immediate. The intent column showed: “User wants order status. Searching for order #12345.”

But the tool_call column showed: search_orders(query="12345").

The tool_output was: [] (Empty list).

That is the stack trace. Not a line number error, but a logic gap between “Expectation” (fuzzy search exists) and “Reality” (exact match only).

The Storage Trade-off (A Real-World Gotcha)

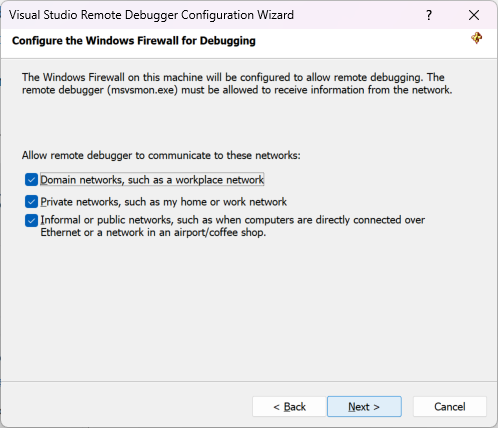

Do not log the full context window by default.

I also added a simple retention policy—a cron job that runs every Sunday at 3 AM to vacuum the DB and delete rows older than 7 days.

Why This Matters Now

Governance isn’t just a corporate buzzword; it’s about knowing why your code did something. If your agent violates a policy—like suggesting a competitor’s product or being rude—you need to point to the exact logic step where the guardrail failed.

Without a flight recorder, you’re just guessing. You’re tweaking the prompt, re-running, and hoping. That’s not engineering; that’s superstition.

So, stop relying on stdout. Build a table. Log the intent. Query the crash. It’s the only way to stay sane.