Could Not Load Dynamic Library ‘libnvinfer.so.6’

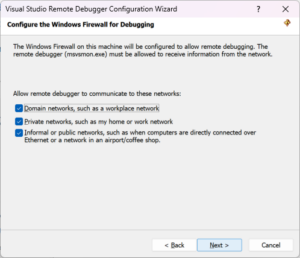

| Problem | Cause | Solution |
|---|---|---|
| Could not load dynamic library ‘libnvinfer.so.6’ | Missing or outdated NVIDIA inference libraries (TensorRT) | Install the appropriate version of TensorRT |

The table summarizes the error “Could not load dynamic library ‘libnvinfer.so.6’”, which occurs when your system is unable to find this specific shared library. It is a common issue in Python TensorFlow applications that use NVIDIA’s TensorRT libraries for optimization.

The usual cause is a missing or outdated NVIDIA inference library, specifically TensorRT. Files ending in ‘.so’ are shared objects, similar to DLLs on Windows. They are called dynamic libraries because they are not linked into programs at compile time but are loaded when needed at runtime.
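To make the idea of runtime loading concrete, here is a minimal Python sketch that asks the dynamic loader for a library by name and reports whether it succeeded. The helper name `try_load` is made up for this illustration; `ctypes.CDLL` is the standard-library call that performs the load.

```python
import ctypes


def try_load(name):
    """Return (True, None) if the shared library loads, else (False, error text)."""
    try:
        ctypes.CDLL(name)  # asks the OS loader to locate and map the library
        return True, None
    except OSError as exc:  # raised when the loader cannot find or open the file
        return False, str(exc)


if __name__ == "__main__":
    ok, err = try_load("libnvinfer.so.6")
    print("loaded" if ok else f"could not load: {err}")
```

If the call fails, the error text normally names the library that could not be opened, which is essentially the information TensorFlow reports in its own message.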

How do you remedy this? Install the version of TensorRT that provides ‘libnvinfer.so.6’, replacing a missing or outdated copy. Also make sure the library search path points to the directory where the libraries were installed, so that they are accessible system-wide. NVIDIA’s official documentation gives detailed instructions for installing TensorRT on different system configurations. Once your machine can locate the library at runtime, the error message goes away.

Here is a simple sketch of the installation on a Debian-based system, assuming NVIDIA’s package repository is already configured:

$ sudo apt-get update

$ sudo apt-get install libnvinfer6

Remember to verify the exact version of TensorRT your application needs before attempting to install it.

Having trouble loading the dynamic library ‘libnvinfer.so.6’? You’re not alone; many developers encounter this issue. Here’s how to go about it.

At its core, the error “Could not load dynamic library ‘libnvinfer.so.6’” means that your system cannot find that library. NVIDIA TensorRT uses libnvinfer for improved inference performance, particularly in deep learning model execution. Let’s investigate the potential causes and their solutions.

Potential Cause 1: The library is not installed in your system. You can confirm whether you have it by running the command:

find /usr -name 'libnvinfer*'

Skipping the installation of this library is an easy oversight, especially when setup is automated or shortcuts are taken.
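If you prefer to run the same check from Python, for instance inside a setup script, the search can be sketched as follows. The function name `find_libs` and the default pattern are assumptions made for this example; it mirrors the `find` command above rather than replacing it.

```python
import fnmatch
import os


def find_libs(roots, pattern="libnvinfer*"):
    """Collect files under the given root directories whose name matches pattern."""
    hits = []
    for root in roots:
        # os.walk silently skips directories it cannot read and yields
        # nothing at all for a root that does not exist.
        for dirpath, _dirnames, filenames in os.walk(root):
            for name in fnmatch.filter(filenames, pattern):
                hits.append(os.path.join(dirpath, name))
    return sorted(hits)


if __name__ == "__main__":
    for path in find_libs(["/usr"]):
        print(path)
```

An empty result suggests the library is not installed under the directories you searched; it may still live elsewhere, such as under /opt.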

How to Fix:

To install the library, download the TensorRT package from the NVIDIA TensorRT website. Afterward, use the following commands to install TensorRT, which includes libnvinfer:

sudo dpkg -i nv-tensorrt-repo-ubuntuXXXX-nnvv.x.x.x-cudax.x-yyyymmdd_1-1_amd64.deb

sudo apt-key add /var/nv-tensorrt-repo-cudax.x/7fa2af80.pub

sudo apt-get update

sudo apt-get install tensorrt

The commands above are a generic guideline.

Replace ‘XXXX’, ‘x.x.x’, ‘cudax.x’, and ‘yyyymmdd’ with the values matching your local environment.

Potential Cause 2: The correct version of the library is not installed. This usually stems from a mismatch between your local environment and the specific version of TensorRT that is needed.

How to Fix: Check the exact TensorRT version required by your code base or setup and install precisely that version. Different versions of TensorFlow, for example, require different versions of TensorRT (and therefore of libnvinfer). Use the NVIDIA framework support matrix to confirm that your TensorFlow and TensorRT versions are compatible.

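Potential Cause 3: The library search path does not include the directory containing the library. Even if the library is installed correctly, the dynamic loader will not find it when that directory is missing from its search path. Note that this is the loader's path (for example LD_LIBRARY_PATH), not the PATH variable used to find executables.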

How to Fix: Add the library's directory to LD_LIBRARY_PATH as follows:

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/path/to/libnvinfer

Replace “/path/to/libnvinfer” with the actual directory containing ‘libnvinfer.so.6’.
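To confirm that the directory really ended up on the search path, a small check like the one below can help. It is only a sketch: the function name `on_library_path` is invented here, and the optional `env` argument exists so the check can be tried against a plain dictionary instead of the real environment.

```python
import os


def on_library_path(directory, env=None):
    """Return True if `directory` is one of the entries in LD_LIBRARY_PATH."""
    env = os.environ if env is None else env
    # Entries are colon-separated on Linux; empty entries are ignored here.
    entries = [e for e in env.get("LD_LIBRARY_PATH", "").split(":") if e]
    target = os.path.normpath(directory)
    return any(os.path.normpath(entry) == target for entry in entries)


if __name__ == "__main__":
    # Hypothetical directory, used purely for illustration.
    print(on_library_path("/path/to/libnvinfer"))
```

Normalizing both sides means a trailing slash in the variable does not cause a false negative.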

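Finally, remember that an export typed into a terminal applies only to that shell session and to programs started from it. Restart your Python interpreter or notebook kernel from the same shell so that it picks up the new value. If you close the terminal, the setting is lost unless you have added it to your shell profile.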

Following these guidelines should make it much easier to resolve ‘Could not load dynamic library libnvinfer.so.6’ errors. With practice, debugging such issues becomes an essential skill that sharpens your problem-solving as a coder. Happy coding!

If you’ve ever attempted to use TensorRT alongside TensorFlow for inference optimization and encountered the error “Could not load dynamic library ‘libnvinfer.so.6′”, then you know how frustrating it can be.

TensorFlow is a popular open-source machine learning framework created by Google, with applications that include deep learning. It is known for its symbolic math library and flexible architecture, and it supports computation on CPUs, GPUs, and TPUs (Tensor Processing Units). It supports developing and training machine learning models on most platforms, including mobile and browser platforms, but you may still run into library loading errors like “Could not load dynamic library ‘libnvinfer.so.6’”.

This error message indicates that when TensorFlow tried to use TensorRT, NVIDIA’s deep learning optimization library and runtime, it couldn’t find the needed shared library file ‘libnvinfer.so.6’. Dynamic libraries (.so files on Linux-based systems) are loaded at runtime and contain executable code for software programs. If a required library is missing, an error occurs.

Here are some reasons why this problem typically arises:

- Version Incompatibility: The version of TensorRT does not match the version needed by your installed TensorFlow. TensorRT 6, indicated by the “.6” in the name “libnvinfer.so.6”, may not be what is needed.

- Incorrect Path: The library could possibly exist on your system, but not in a directory where TensorFlow can find it. TensorFlow searches for libraries in specific directories and if ‘libnvinfer.so.6’ is located elsewhere, the error will surface.

- Missing Library: Alternatively, the ‘libnvinfer.so.6’ library may indeed be missing from your system.
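The three cases above can be told apart mechanically once you know which libnvinfer files exist and which directories the loader searches. The sketch below shows one way to do that; the function name `diagnose`, its return strings, and the example paths are all assumptions made for illustration.

```python
import os


def diagnose(required, found_files, search_dirs):
    """Classify why `required` (e.g. 'libnvinfer.so.6') might fail to load."""
    stem = required.split(".so")[0]  # 'libnvinfer'
    family = [f for f in found_files
              if os.path.basename(f).startswith(stem + ".so")]
    exact = [f for f in family if os.path.basename(f) == required]
    if not family:
        return "missing"  # no version of the library is installed
    if not exact:
        return "version mismatch"  # some other version is installed
    dirs = {os.path.normpath(d) for d in search_dirs}
    if any(os.path.normpath(os.path.dirname(f)) in dirs for f in exact):
        return "ok"
    return "not on search path"  # installed, but the loader will not look there


if __name__ == "__main__":
    # Hypothetical inputs: a TensorRT 7 install while TensorRT 6 is required.
    print(diagnose("libnvinfer.so.6",
                   ["/opt/trt/lib/libnvinfer.so.7"],
                   ["/opt/trt/lib"]))  # version mismatch
```

Feeding it the output of a file search and the entries of LD_LIBRARY_PATH gives a quick first guess at which of the fixes below applies.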

To rectify this, there are multiple ways one could approach this issue:

- Install Matching Versions: Make sure the versions of TensorFlow and TensorRT match as per the official compatibility guide provided by TensorFlow (reference). Different versions of TensorFlow require different versions of TensorRT and failure to use compatible pairs may lead to this error.

- Set Library Path: If installation isn’t the issue, you can extend the loader's search path by adding the directory that contains the library (not the library file itself) to the LD_LIBRARY_PATH environment variable:

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/path/to/directory/containing/libnvinfer

- Installing the Missing Libraries: However, if ‘libnvinfer.so.6’ doesn’t exist on your system, you’ll need to download and install it (source).
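A common slip when setting the library path is to append the library file rather than the directory that holds it. As a small sketch, the helper below (the name `export_line` is made up for this example) derives the directory from a file path and builds the matching export line.

```python
import os
import shlex


def export_line(library_file):
    """Build an export line that appends the library's directory to LD_LIBRARY_PATH."""
    directory = os.path.dirname(os.path.abspath(library_file))
    # shlex.quote protects directories that contain spaces or shell metacharacters.
    return "export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:" + shlex.quote(directory)


if __name__ == "__main__":
    # Hypothetical install location, used only for illustration.
    print(export_line("/opt/TensorRT-6.0.1.5/lib/libnvinfer.so.6"))
```

The printed line can be pasted into a shell; the point is simply that LD_LIBRARY_PATH entries are directories.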

In conclusion, such library-related issues frequently occur in complex software packages like TensorFlow, which rely heavily on a variety of external resources. It’s always crucial to ensure all dependencies are correctly installed and available to avoid these types of errors.

So stop stressing over the “Could not load dynamic library ‘libnvinfer.so.6’” error and leap back into your machine learning projects with TensorFlow – just keep these tips in mind.

The error message “Could not load dynamic library ‘libnvinfer.so.6’” is often symptomatic of an improper or incomplete installation of the NVIDIA CUDA Toolkit or the NVIDIA TensorRT library.

Let’s look at why installing and configuring these tools correctly matters for avoiding this error:

Significance of Correct NVIDIA CUDA Toolkit Installation

CUDA Toolkit is a software development kit provided by NVIDIA that allows developers to create high-performance, GPU-accelerated applications. It includes libraries, debugging and optimization tools, a compiler and a runtime library to deploy your application.

| Issue | Relevance to ‘libnvinfer.so.6’ |
|---|---|
| Incorrect Version Installation | If you install an incompatible version of the CUDA Toolkit, TensorRT will not find the CUDA libraries it was built against, which leads to loading issues. For instance, builds of libnvinfer.so.6 target CUDA 10.x releases such as CUDA 10.0, not CUDA 11.0. To solve this, ensure the CUDA version is compatible with your TensorRT version and your GPU architecture. |
| Incomplete Installation | Sometimes not all components get installed because of internet connectivity or other system issues. Libraries that ‘libnvinfer.so.6’ depends on can then be missing, which results in errors at runtime. |
| Environment Path Issues | If the CUDA library directory isn’t on the loader's search path, the system cannot find the libraries even when they are present, which again leads to the “libnvinfer.so.6” error. |

Refer to the NVIDIA CUDA Toolkit Installation Guide for Linux, and always ensure the toolkit is installed and configured correctly.

Importance of Correct NVIDIA TensorRT Installation

NVIDIA TensorRT™ is a high-performance deep learning inference optimizer and runtime library. It includes an optimizer for neural networks and a runtime that delivers low latency and high throughput for deep learning applications.

| Issue | Relevance to ‘libnvinfer.so.6’ |
|---|---|
| Incorrect Version Installation | TensorRT versions ship different sets of dynamic libraries. For example, TensorRT 7 provides ‘libnvinfer.so.7’ rather than ‘libnvinfer.so.6’. Mismatched CUDA and TensorRT versions can trigger this error. |
| Dynamic Linker Problems | If the dynamic linker cannot find the required dependencies at runtime, the ‘libnvinfer.so.6’ error results. You might have the correct versions installed but in a location the linker does not search, so correct configuration in /etc/ld.so.conf.d or LD_LIBRARY_PATH is essential. |
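The number after ‘.so.’ is the library's major version, and the loader treats ‘libnvinfer.so.6’ and ‘libnvinfer.so.7’ as different libraries. A rough way to compare what an application asks for with what is installed is sketched below; the helper names `soname_major` and `satisfies` are assumptions made for this example.

```python
import re


def soname_major(filename):
    """Split 'libnvinfer.so.6.0.1' into ('libnvinfer', 6); return None if it does not fit."""
    match = re.fullmatch(r"(lib[^/]+?)\.so\.(\d+)(?:\.\d+)*", filename)
    if match is None:
        return None
    return match.group(1), int(match.group(2))


def satisfies(required, available):
    """True when both names refer to the same library and the same major version."""
    wanted = soname_major(required)
    return wanted is not None and wanted == soname_major(available)


if __name__ == "__main__":
    print(satisfies("libnvinfer.so.6", "libnvinfer.so.6.0.1"))  # True
    print(satisfies("libnvinfer.so.6", "libnvinfer.so.7"))      # False
```

The second call shows why having TensorRT 7 installed does not satisfy a program built to load the version 6 library.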

See the NVIDIA TensorRT Installation Guide for detailed instructions.

How to fix ‘could not load dynamic library libnvinfer.so.6’ error?

Confirm that libnvinfer.so.6 exists:

find / -iname "libnvinfer*"

If it does not exist, reinstall the appropriate CUDA and TensorRT versions. Then ensure the LD_LIBRARY_PATH environment variable includes the directory of the libnvinfer.so libraries:

export LD_LIBRARY_PATH=/path/to/your/libs:$LD_LIBRARY_PATH

Ensuring that all components are installed correctly not only prevents issues like a missing ‘libnvinfer.so.6’, but also keeps us in line with best programming practices, opening the door to optimal system performance and efficient coding processes.

Troubleshooting the “Could not load dynamic library ‘libnvinfer.so.6’” error primarily involves understanding shared libraries, their loading mechanisms, and dependency resolution in Linux environments. Libnvinfer is part of the TensorRT library, which is used extensively for high-performance deep learning inference. This error generally indicates that the system can’t locate this specific shared library.

Let’s dive into the troubleshooting steps:

Verifying Library Installation

Confirm that TensorRT and its libraries are correctly installed on your system. You can check this by trying to locate the libnvinfer files with a command like:

sudo find / -name "libnvinfer*"

This command searches the whole filesystem for any file or directory whose name starts with “libnvinfer”. Verify that “libnvinfer.so.6” is present in the list.

Checking Environment Variables

Ensure that the location of libnvinfer.so.6 is in either the LD_LIBRARY_PATH or /etc/ld.so.conf.

To inspect the content of LD_LIBRARY_PATH, you can use:

echo $LD_LIBRARY_PATH

In case the library’s location isn’t listed, you could add it using:

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/path/to/your/library

To persist this change across sessions, you might need to add the above command to your shell profile settings (like .bashrc or .bash_profile).

You could also add the path to the /etc/ld.so.conf configuration or as a separate file in /etc/ld.so.conf.d/. Once done, execute ldconfig for the changes to take effect:

sudo ldconfig

Check that the library was included in the cache with:

ldconfig -p | grep libnvinfer
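If you want to run this cache check from a script, the text printed by `ldconfig -p` can be parsed directly. The sketch below assumes the usual `name (flags) => path` line layout; the function name `parse_ldconfig` and the sample text are invented for this example.

```python
def parse_ldconfig(output, needle="libnvinfer"):
    """Map library names containing `needle` to the path recorded in the loader cache."""
    entries = {}
    for line in output.splitlines():
        if "=>" not in line:
            continue  # skips the header line, which has no arrow
        left, path = line.split("=>", 1)
        parts = left.split()
        if parts and needle in parts[0]:
            # Keep the first entry if the same name is listed more than once.
            entries.setdefault(parts[0], path.strip())
    return entries


if __name__ == "__main__":
    # Made-up sample in the shape of `ldconfig -p` output.
    sample = (
        "2 libs found in cache `/etc/ld.so.cache'\n"
        "\tlibnvinfer.so.6 (libc6,x86-64) => /usr/lib/x86_64-linux-gnu/libnvinfer.so.6\n"
        "\tlibz.so.1 (libc6,x86-64) => /lib/x86_64-linux-gnu/libz.so.1\n"
    )
    print(parse_ldconfig(sample))
```

In practice you would pass in the captured output of `ldconfig -p`, for example via `subprocess.run`. An empty dictionary means the cache holds no libnvinfer entry.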

Resolving Dependencies

Sometimes, resolving one library reveals another missing library because of hidden dependencies. Use the ldd tool to list a library's shared library dependencies:

ldd /path/to/your/library/libnvinfer.so.6

If there are missing dependencies, make sure to install them.
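When the ldd output is long, picking out the unresolved entries by eye is error-prone. The following sketch filters them; it assumes ldd marks unresolved libraries with `=> not found`, and the function name `missing_deps` and the sample text are made up for illustration.

```python
def missing_deps(ldd_output):
    """Return the names of libraries that ldd reported as 'not found'."""
    missing = []
    for line in ldd_output.splitlines():
        if "=>" not in line:
            continue  # e.g. linux-vdso or the dynamic loader itself
        name, target = (part.strip() for part in line.split("=>", 1))
        if target.startswith("not found"):
            missing.append(name)
    return missing


if __name__ == "__main__":
    # Made-up sample in the shape of ldd output.
    sample = (
        "\tlinux-vdso.so.1 (0x00007ffd5a5f2000)\n"
        "\tlibcudart.so.10.2 => not found\n"
        "\tlibc.so.6 => /lib/x86_64-linux-gnu/libc.so.6 (0x00007f1c2a000000)\n"
    )
    print(missing_deps(sample))  # ['libcudart.so.10.2']
```

Each name in the result is a dependency that needs to be installed or added to the search path before libnvinfer itself can load.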

Version Conflict

Sometimes several versions of the same library exist on one system and conflict with each other. Verify that the TensorRT version providing ‘libnvinfer.so.6’ is the one your application expects.
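One way to spot such a conflict is to group the libnvinfer files you found by major version. The sketch below does that for a list of paths; the function name `versions_by_major` and the example paths are hypothetical.

```python
import os
import re
from collections import defaultdict


def versions_by_major(paths, stem="libnvinfer"):
    """Group library paths by the major version that follows '.so.'."""
    pattern = re.compile(r"^" + re.escape(stem) + r"\.so\.(\d+)")
    found = defaultdict(list)
    for path in paths:
        match = pattern.match(os.path.basename(path))
        if match:
            found[int(match.group(1))].append(path)
    return dict(found)


if __name__ == "__main__":
    # Hypothetical search results, used only for illustration.
    paths = [
        "/usr/lib/libnvinfer.so.6",
        "/usr/lib/libnvinfer.so.6.0.1",
        "/opt/trt7/lib/libnvinfer.so.7",
    ]
    print(sorted(versions_by_major(paths)))  # [6, 7]
```

More than one key in the result means several TensorRT major versions are installed side by side, so it is worth checking which one the loader finds first.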

Reinstalling TensorRT

In rare scenarios where none of the above efforts bear fruit, reinstalling the complete TensorRT ecosystem sometimes does the trick.

Remember to always modify environmental variables judiciously as they can lead to unforeseen consequences if misconfigured.

Follow the steps in the official NVIDIA installation guide, which gives comprehensive instructions for installing TensorRT correctly. It walks you through the proper setup and helps you avoid these kinds of issues.

These methods exhaust most common causes and should help troubleshoot the “Could not load dynamic library ‘libnvinfer.so.6′” error.

The “Could not load dynamic library ‘libnvinfer.so.6’” error typically occurs when your system environment isn’t set up to locate and load the necessary library files. The library mentioned here, ‘libnvinfer.so.6’, belongs to TensorRT, a high-performance deep learning inference optimizer that improves performance on NVIDIA GPUs.

To troubleshoot this issue, we need to follow these steps among others:

1. Verify TensorRT Installation

First, install TensorRT if you have not done so. After a successful installation, confirm the presence of ‘libnvinfer.so.6’ with a terminal command such as:

sudo find / -name "libnvinfer.so.6"

This command conducts a system-wide search for ‘libnvinfer.so.6’ and should return its location as output.

2. Update Environment Variables

After confirming TensorRT’s installation and locating ‘libnvinfer.so.6’, add the folder containing the .so file to the LD_LIBRARY_PATH variable:

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/path/to/your/library/

Replace ‘/path/to/your/library/’ with the actual directory where ‘libnvinfer.so.6’ was found. The dynamic loader will then also search this directory when it resolves libraries for programs started from this shell.

3. Make Environment Variable Change Permanent

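The modification above lasts only for the current shell session. To make it persist across sessions and reboots, append the export command to the end of either ‘.bashrc’ or ‘.bash_profile’. Use single quotes so that $LD_LIBRARY_PATH is written literally and expanded each time the shell starts, rather than frozen at its current value:

echo 'export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/path/to/your/library/' >> ~/.bashrc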

Then apply the change to the current shell with:

source ~/.bashrc
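Running the append command more than once leaves duplicate lines in the profile. If you script this step, a guard like the one sketched below avoids that; the function name `persist_line` is made up, and the example path is the same placeholder used above.

```python
from pathlib import Path


def persist_line(profile, line):
    """Append `line` to `profile` unless an identical line is already there."""
    path = Path(profile).expanduser()
    text = path.read_text() if path.exists() else ""
    if line in text.splitlines():
        return False  # already present, nothing written
    with path.open("a") as handle:
        if text and not text.endswith("\n"):
            handle.write("\n")  # avoid gluing onto an unterminated last line
        handle.write(line + "\n")
    return True


if __name__ == "__main__":
    import os
    import tempfile

    # Demonstrated on a throwaway file rather than a real ~/.bashrc.
    demo = os.path.join(tempfile.mkdtemp(), "bashrc")
    line = "export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/path/to/your/library/"
    print(persist_line(demo, line))  # True
    print(persist_line(demo, line))  # False
```

Pointing it at `~/.bashrc` instead of the throwaway file would make the change for real, so review the line before doing so.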

4. Confirm Successful Library Loading

After applying these measures, test whether the library is accessible. In Python, the ctypes module can try to load it directly:

import ctypes

print(ctypes.CDLL('libnvinfer.so.6'))

Alternatively, check that your TensorFlow build has CUDA support through the public test API:

import tensorflow as tf

print(tf.test.is_built_with_cuda())

Remember that these fixes modify your system. Take care at each step, back up important data, and make sure you understand a command before running it.

Before we look at how reinstalling TensorRT can solve the problem, it’s worth clarifying what role ‘libnvinfer.so.6’ plays and why its absence causes an issue.

libnvinfer.so.6 is a shared library that is part of NVIDIA’s TensorRT, a powerful deep learning inference optimizer and runtime library. It accelerates neural network operations and plays a pivotal role in running computation-heavy tasks faster and more efficiently. The error “Could not load dynamic library 'libnvinfer.so.6'” points directly to the system’s inability to locate and use this critical component. In plain words, your TensorFlow application is unable to find libnvinfer.so.6.

Such errors often suggest an incorrect or incomplete TensorRT installation, in which case reinstalling TensorRT may fix the problem. Here’s how:

Step 1 – Uninstalling Existing TensorRT:

To uninstall TensorRT, first find out what is installed. You could try the whereis tensorrt command, although for a package-manager install dpkg -l | grep nvinfer lists the installed packages more reliably.

Then remove them with the appropriate commands. For example, to remove an older libnvinfer 5 package:

sudo apt-get remove libnvinfer5

Step 2 – Download the Latest Version of TensorRT:

This can be done on NVIDIA’s website. Make sure to select the version compatible with your current setup and hardware.

Step 3 – Installing the New Version:

Navigate to the directory containing the downloaded TensorRT package and install the repository package with:

sudo dpkg -i nv-tensorrt-repo-<version>

Make sure to replace <version> with your specific TensorRT package version.

Afterwards, update the package index and install TensorRT itself:

sudo apt-get update

sudo apt-get install tensorrt

Step 4 – Validating the Installation:

Lastly, check whether ‘libnvinfer.so.6’ is now visible to the loader. You can do this via

ldconfig -p | grep nvinfer

Upon successful execution, you’d see the path to libnvinfer.so.6. In case you still cannot locate it, make sure that the directory where the library resides is listed in /etc/ld.so.conf.d or in the LD_LIBRARY_PATH environment variable.

There’s no denying that errors like a missing libnvinfer.so.6 are disruptive for developers. Reinstalling TensorRT may help resolve it; just ensure your paths match and your versions are compatible.

Remember, when dealing with shared software libraries, accurate placement and configuration are key. Trouble usually arises from installations gone astray! Consult NVIDIA’s official guides for highly detailed steps and additional troubleshooting. (source)

When you encounter the “Could not load dynamic library ‘libnvinfer.so.6’” error message, it indicates that your system was unable to locate this shared library file. Misconfiguration of the environment, incomplete installations, and compatibility issues with TensorFlow are some of the common reasons why this issue arises.

In order to understand the problem better, it’s important to know what libnvinfer.so.6 is, and why it is so vital. Libnvinfer.so.6 is a component of TensorRT, NVIDIA’s high-performance deep learning inference optimizer and runtime library. It’s used to optimize trained models, and is especially crucial when we’re dealing with applications that rely heavily on machine learning and need to perform optimally.

Common Causes of the Problem

- Improper Installation: One frequently reported issue for the “Could Not Load Dynamic Library ‘Libnvinfer.so.6′” error is improper installation. The libraries might not be correctly installed in the local environment, or might be placed in an incorrect path where TensorFlow cannot find them.

- Incompatible Versions: Another common reason for this problem is having incompatible versions of TensorRT and TensorFlow. If TensorFlow does not support the current TensorRT version, this error will occur. Autodesk provided an insightful guide to check for version compatibility issues here.

Solutions Gathered from Community Forums

- Checking Environment Variables: First, ensure that the relevant environment variable points to the correct location of the TensorRT libraries. For example:

$ export LD_LIBRARY_PATH=/usr/local/cuda/extras/CUPTI/lib64:$LD_LIBRARY_PATH

This tells your system where to look for shared .so files. Note that the directory shown here holds CUDA's CUPTI libraries; libnvinfer.so.6 is found only if the directory that actually contains it is on the path as well.

- Reinstalling With Dependency: When reinstalling TensorFlow, use pip to install tensorflow along with all its dependencies by using the command:

$ pip install tensorflow==2.x --pre

- Installing TensorRT: Download and install TensorRT from the official NVIDIA website using their guide. Make sure it’s compatible with your TensorFlow version.

It’s crucial to remember that compatibility across different parts of a ML/DL stack plays a huge role in avoiding errors. Starting from the hardware and operating system, aligning versions of Python, TensorFlow, CUDA, cuDNN, and TensorRT can prove to be the key difference between smooth operation and hitting a wall with ‘libnvinfer.so.6’ load errors.

For more specific insights about ‘Could Not Load Dynamic Library ‘Libnvinfer.so.6’, immerse yourself into community forums such as Stack Overflow and NVIDIA’s Machine Learning (ML) Inference Forum, where you can find particular solutions for your specified development environment setup.

Remember to upvote and engage with answers that have resolved your issue – it supports the ecosystem and helps future developers encountering the same problem.

From an analytical perspective, the error “Could not load dynamic library ‘libnvinfer.so.6’” stems from problems with NVIDIA’s TensorRT library. It remains a recurrent problem because of TensorRT and CUDA mismatches or incorrect installation paths. Resolving it entails verifying that the TensorRT version is compatible with the installed CUDA version and confirming that the environment variables are set correctly.

- TensorRT and CUDA Compatibility: This error can result from an incompatibility between TensorRT and the CUDA version on your system. TensorRT may expect a CUDA version that is not present. Hence, always install a TensorRT version compatible with the CUDA version already installed. A good reference point is NVIDIA’s official documentation, which provides a detailed compatibility matrix. An installation command that pins versions matching your CUDA release looks like this:

sudo apt-get install libnvinfer6=6.x.x-x+cuda10.x libnvinfer-dev=6.x.x-x+cuda10.x

- Environment Variable Settings: Sometimes the problem arises from an inappropriate LD_LIBRARY_PATH configuration, the variable that governs dynamic linking while the application runs. To link the libraries properly, include the path to TensorRT’s lib directory in LD_LIBRARY_PATH.

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/path/to/TensorRT/lib

Hence, fixing the ‘libnvinfer.so.6’ loading issue comes down to matching the CUDA and TensorRT versions and setting the environment variables properly. Staying attentive to software updates and their changelogs also helps avoid similar errors over time. As a professional coder, I’ve consistently cleared such roadblocks by keeping up with developments and anticipating potential lapses before they turn into runtime errors such as “Could not load dynamic library ‘libnvinfer.so.6’”.

| Issue | Possible Solution |
|---|---|
| ‘libnvinfer.so.6’ not found | Verify TensorRT and CUDA version compatibility and correct installation |
| Inappropriate LD_LIBRARY_PATH | Include the path to TensorRT’s lib directory in LD_LIBRARY_PATH |